Welcome to Recap #2 of our Foundation Model Operations (FMOps) series. In our first, introductory Token, we asked a few questions:

Is running foundation models (FMs) in production really that different from, and more difficult than, running regular models?

Is there an FMOps stack, as there (almost) is for MLOps?

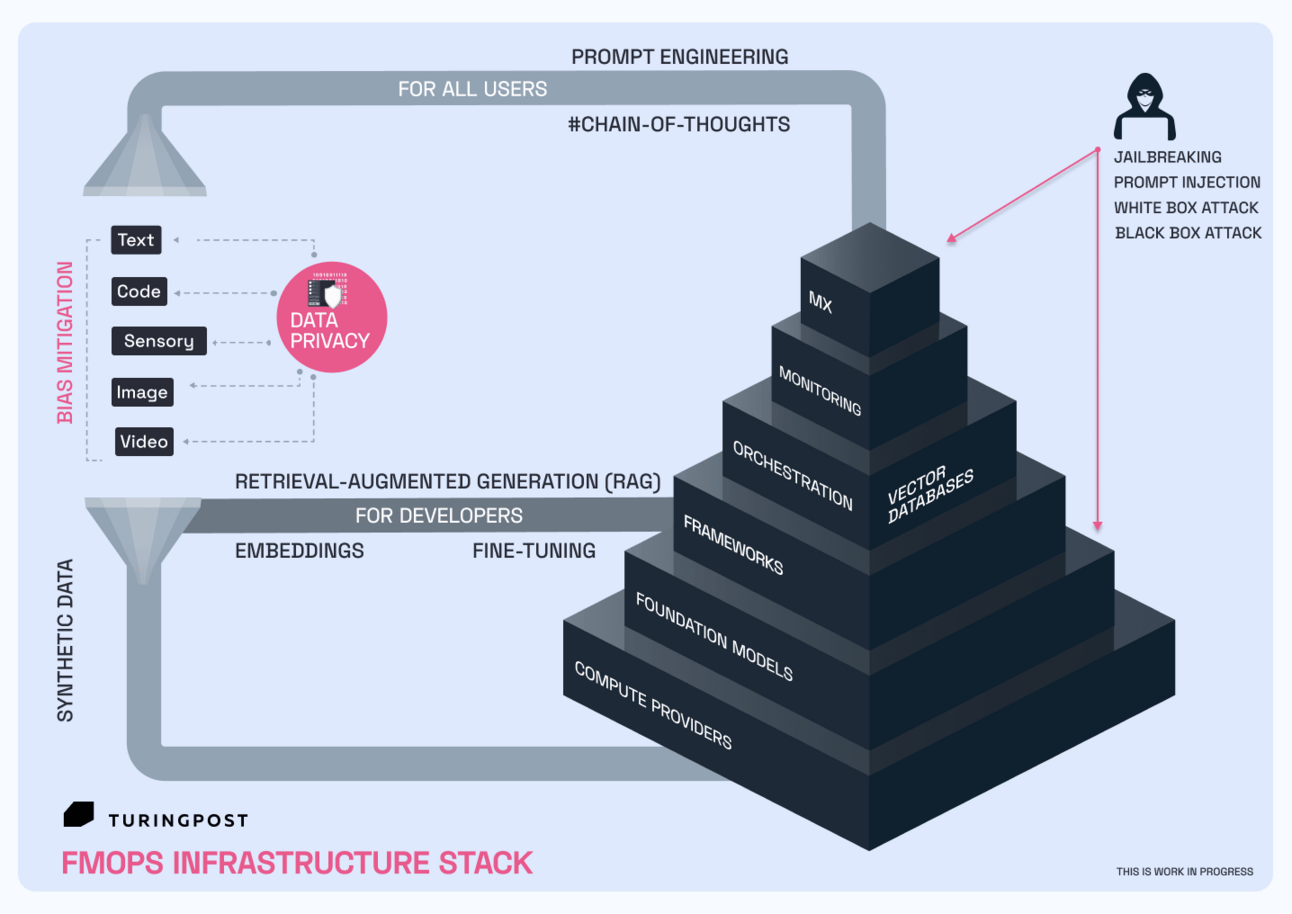

What should the FMOps Infrastructure Stack look like to make productizing FMs easier?

No fully mature FMOps stack comparable to the MLOps landscape exists yet. Of course, there is also an element of hype surrounding any buzzword in the rapidly evolving AI space. But we believe the differences between FMOps and MLOps are real. The core principles of MLOps (bringing ML to production reliably) still apply, but FMOps/LLMOps require adaptation and a few additions to the process.

While MLOps prioritizes the training-deployment cycle (data preparation, model training, evaluation, deployment, and continuous monitoring), FMOps emphasizes adapting and fine-tuning large pre-trained models. It includes selecting the right FM, prompt engineering, data preparation for fine-tuning, task-specific evaluation, and continuous monitoring of output quality.

While MLOps focuses on the structured data used to train and serve the ML model, FMOps involves the unstructured data (text, images, etc.) on which FMs have been pre-trained. Fine-tuning data needs to be carefully curated for the downstream task.

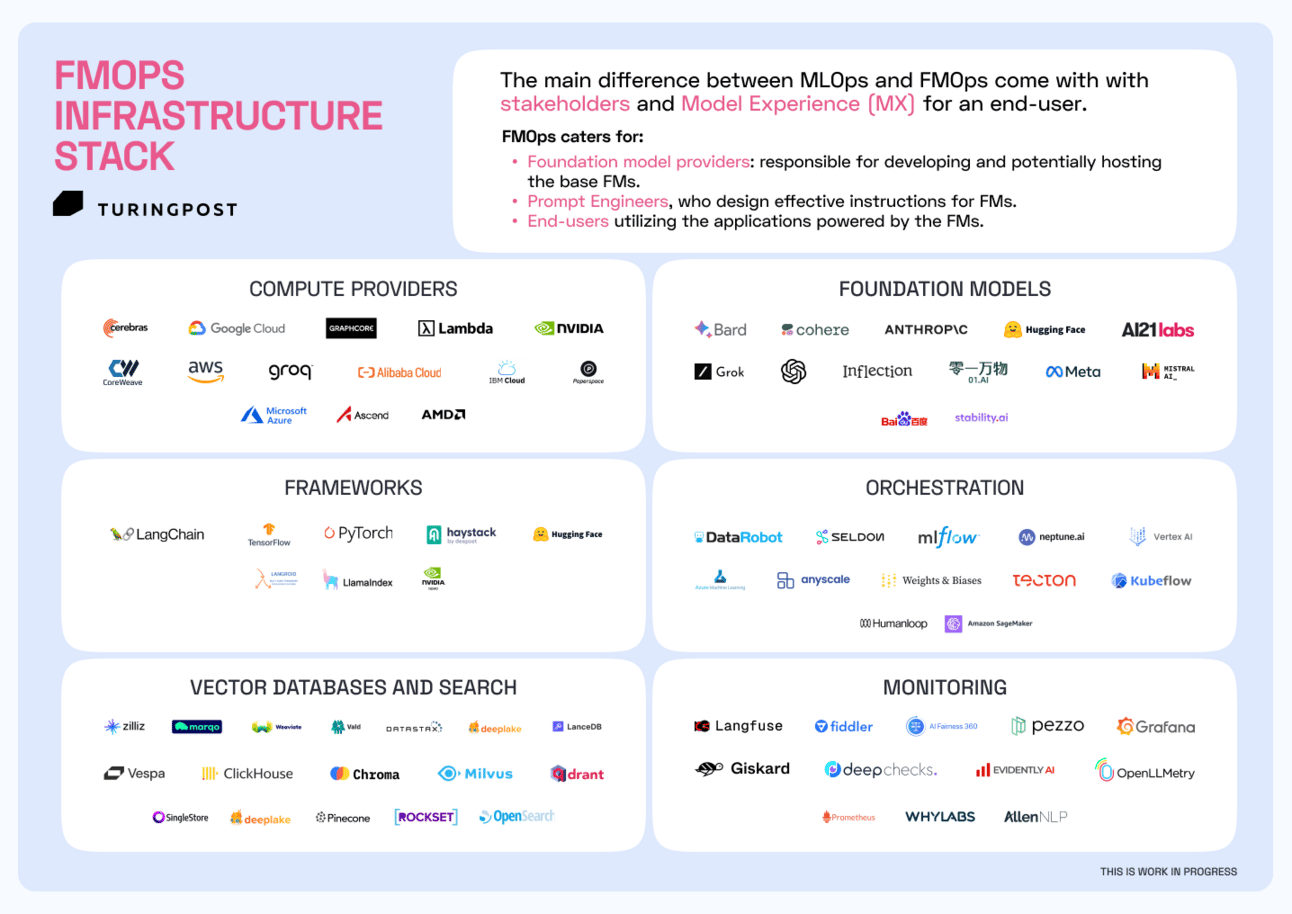

But the main differences lie in the stakeholders and in the Model Experience (MX) offered to end-users.

MLOps is operated by data scientists, ML engineers, and DevOps teams.

FMOps also involves:

FM Providers: Responsible for developing and potentially hosting the base FMs.

Prompt Engineers: Experts in designing effective instructions for FMs.

Consumers: End-users utilizing the applications powered by the FMs.

MLOps does not cater to end-users directly and does not require a model experience in that sense. In contrast, FMOps provides a comprehensive Model Experience (MX), where anyone, regardless of their development experience or computer knowledge, can interact with a model and tailor it to their specific needs using prompts. This is precisely why ChatGPT soared in popularity.

In Recap #1, you will find an invaluable collection of articles on the basics of foundation models and how to choose the right one for your project, including techniques for model adaptation and how to think about model alignment (including hallucinations and RLHF).

Recap #2 provides a visualization of the FMOps Infrastructure Stack along with our best explanatory Tokens about it, including a list of open-source tools, libraries, and companies. This is a work in progress: let us know which companies should be on this infographic.


Token 1.18: How to Monitor LLMs?

Ensuring Your LLMs Deliver Real Value

www.turingpost.com/p/monitoring
