Generative models have a rich history dating back to the 1980s and have become increasingly popular due to their prowess in unsupervised learning. This category includes a wide array of models, such as Generative Adversarial Networks (GANs), Energy-Based Models, and Variational Autoencoders (VAEs). In this Token, we want to concentrate on Transformer and Diffusion-based models, which have emerged as frontrunners in the generative AI space.

It’s time to get more technically specific. We will:

explore the origin of the Transformer architecture;

discuss Decoder-Only, Encoder-Only, and Hybrids;

touch on the concepts of tokenization and parametrization;

explore diffusion-based models and understand how they work;

give an overview of Stable Diffusion, Imagen, DALL-E 2 and 3, and Midjourney.

Transformer-Based Models

Basics

At a basic level, the Transformer is a specific type of neural network. We encourage you to read The History of LLMs, where we trace the story of neural networks from the very beginning to Statistical Language Models (SLMs), and then to the introduction of Neural Probabilistic Language Models (NLMs), which signified a paradigm shift in natural language processing (NLP). Rather than treating words as isolated symbols, as SLMs do, neural approaches encode them as vectors in a shared space, which lets the model capture the relationships between them.

This brings us to our first key concept in understanding Transformers: word embeddings. They are dense vector representations of words that capture semantic and syntactic relationships, enabling machines to understand and reason about language in NLP tasks.

Word embeddings are produced by specific algorithms or models, with word2vec being one of the most prominent examples of its time. Embeddings vary in dimensionality: higher-dimensional embeddings can capture richer, more nuanced relationships between words, at the cost of more memory and computation.
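To make "relationships between words" concrete, here is a minimal sketch that compares embeddings with cosine similarity, a common way to measure how close two word vectors are. The three 4-dimensional vectors are made up for illustration (real word2vec vectors are learned from a corpus and typically have hundreds of dimensions); only the relative similarities matter.

```python
import math

# Hypothetical toy embeddings; real ones are learned, not hand-written.
embeddings = {
    "king":  [0.80, 0.65, 0.10, 0.05],
    "queen": [0.78, 0.70, 0.12, 0.90],
    "apple": [0.05, 0.10, 0.95, 0.20],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

for a, b in [("king", "queen"), ("king", "apple")]:
    print(a, b, round(cosine_similarity(embeddings[a], embeddings[b]), 3))
```

With these toy values, "king" ends up closer to "queen" than to "apple", which is the kind of structure a trained embedding model learns from data.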

How do Transformers work?

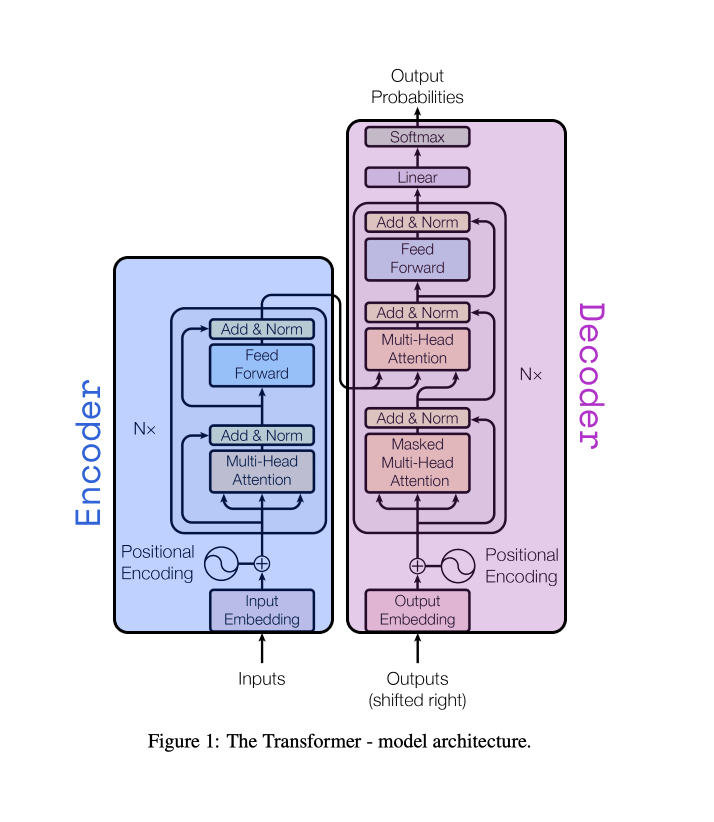

The encoder-decoder framework serves as the backbone of the Transformer architecture.

Source: original paper

According to the Deep Learning book, this framework was proposed independently by two research teams in 2014: Cho et al. called it an "encoder-decoder," while Sutskever et al. named it the "sequence-to-sequence" architecture. It was originally built on recurrent neural networks (RNNs) to map a variable-length input sequence to a variable-length output sequence, with machine translation as the motivating task.

Encoder-decoder architecture. Source: DL book

Let’s review the roles of the encoder and decoder and how they work.

Encoder

In a transformer model, the encoder takes a sequence of text and converts it into numerical representations known as feature vectors or feature tensors. Each vector or tensor corresponds to a specific word or token in the original text. The encoder operates in a bi-directional manner, leveraging a self-attention mechanism.

What does bi-directional mean here? It means the encoder considers words from both directions – those that come before and those that come after a given word – when creating its numerical representation.

And what about self-attention? It allows each feature vector to include context from the other words in the sequence. For each token, the self-attention mechanism compares that token's Query vector with the Key vectors of all tokens to compute attention weights, then uses these weights to take a weighted sum of the Value vectors. In simpler terms, each feature vector isn't just about one word; it also encapsulates information from the words around it.
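This computation is the scaled dot-product attention of the original Transformer paper: weights = softmax(QKᵀ / √d_k), output = weights · V. Below is a minimal single-head sketch in plain Python. The token vectors `X` and the projection matrices `W_q`, `W_k`, `W_v` are small made-up numbers standing in for learned parameters; a real encoder uses many heads, much larger dimensions, and a tensor library.

```python
import math

def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over token vectors X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    # Score every query against every key (no mask: the encoder sees both directions).
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in K] for q_row in Q]
    weights = [softmax(row) for row in scores]   # each row sums to 1
    return matmul(weights, V), weights           # weighted sum of Value vectors

# Three tokens with 2-dimensional embeddings (hypothetical values).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[0.5, 0.0], [0.0, 2.0]]
output, weights = self_attention(X, W_q, W_k, W_v)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token draws on every token in the sequence, including tokens that come after it.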

Decoder

Following the encoder's lead, the decoder takes these feature vectors as input and generates the output sequence in natural language (in translation, the sentence in the target language). Unlike the encoder, which works bi-directionally, the decoder is uni-directional: it produces the sequence one token at a time, either from left to right (the usual choice) or from right to left, considering only the words in that direction as it goes along.

This uni-directionality is enforced by masked self-attention: for any given word, the decoder incorporates context from only one direction, that is, from the words that precede it (or, in right-to-left decoding, the words that follow it), while the remaining positions are masked out.
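For left-to-right decoding, masking is commonly implemented by setting the attention scores of future positions to negative infinity before the softmax, so their weights become exactly zero. A minimal sketch, using a made-up 3×3 score matrix in place of real Query·Key scores:

```python
import math

def causal_softmax(scores):
    """Row-wise softmax where position i may only attend to positions j <= i."""
    weights = []
    for i, row in enumerate(scores):
        # Mask out future positions (j > i) with -inf before normalizing.
        masked = [s if j <= i else -math.inf for j, s in enumerate(row)]
        m = max(masked)
        exps = [math.exp(s - m) for s in masked]   # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

scores = [[0.2, 1.5, -0.3],
          [0.9, 0.1, 2.0],
          [0.4, 0.4, 0.4]]
for row in causal_softmax(scores):
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, the second to the first two tokens, and only the last token sees the whole sequence.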

Additionally, the decoder is auto-regressive, which means it factors in the portions of the sequence it has already decoded when processing subsequent words. In other words, each step in the decoding process not only relies on the feature vectors from the encoder but also on the words that the decoder has already generated.
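The auto-regressive loop can be sketched as follows. `next_token_scores` and `BIGRAM_SCORES` are hypothetical stand-ins for a trained decoder: a hard-coded table that only looks at the last token, whereas a real decoder attends to the encoder output and to the whole prefix. The point is the loop structure, where each output is fed back in as input for the next step.

```python
# Hypothetical next-token scores keyed by the previous token.
BIGRAM_SCORES = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "sat": {"</s>": 1.0},
}

def next_token_scores(prefix):
    """Stand-in for a decoder: score candidate next tokens given the tokens so far."""
    return BIGRAM_SCORES.get(prefix[-1], {"</s>": 1.0})

def greedy_decode(max_len=10):
    tokens = ["<s>"]
    for _ in range(max_len):
        scores = next_token_scores(tokens)     # condition on everything generated so far
        best = max(scores, key=scores.get)     # greedy: pick the highest-scoring token
        tokens.append(best)                    # feed the choice back in
        if best == "</s>":
            break
    return tokens

print(greedy_decode())
```

Greedy selection is only one strategy; sampling or beam search can replace the `max` call without changing the auto-regressive structure.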

Illustration of how the encoder and decoder work together. Blue rectangles represent the encoder, green rectangles the decoder, and red rectangles the output of the model. Source: Hackmd

Decoder-Only, Encoder-Only, and Hybrids

While the original 2017 transformer architecture featured both encoder and decoder units, subsequent developments have demonstrated that these components can operate independently. Furthermore, each component – whether it's the encoder or the decoder – specializes in certain types of tasks.

Source: Ahead of AI newsletter

Encoder-only models, like BERT and RoBERTa, are derived from the encoder module of the original transformer architecture. They process and understand input text, producing continuous representations, or embeddings. These embeddings are then used for various predictive modeling tasks, such as classification. All three families map an input sequence to some output, but only encoder-only models avoid autoregressive decoding altogether; instead, they focus on understanding the input and producing task-specific outputs.
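A common way to turn an encoder's per-token embeddings into a prediction is to pool them into one vector and put a small learned classifier on top. A minimal sketch, assuming mean pooling and a logistic-regression head; the encoder output, `head_weights`, and `head_bias` are hypothetical numbers (BERT-style models often use the vector of a special [CLS] token instead of the mean).

```python
import math

def mean_pool(token_vectors):
    """Average the per-token vectors into one sentence vector."""
    n = len(token_vectors)
    return [sum(col) / n for col in zip(*token_vectors)]

def classify(token_vectors, weights, bias):
    """Logistic-regression head on the pooled vector: returns P(positive class)."""
    pooled = mean_pool(token_vectors)
    logit = sum(w * x for w, x in zip(weights, pooled)) + bias
    return 1.0 / (1.0 + math.exp(-logit))

# Hypothetical encoder output for a 3-token input (3-dimensional embeddings).
encoded = [[0.2, 1.0, -0.5], [0.4, 0.8, 0.1], [0.0, 1.2, -0.2]]
head_weights, head_bias = [1.5, 2.0, -1.0], -1.0
p = classify(encoded, head_weights, head_bias)
print(f"P(positive) = {p:.3f}")
```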

Decoder-only models, like the GPT series, are primarily used for generating text. They generate outputs one token at a time, using previously generated tokens as context, a process known as autoregressive decoding. Large decoder-only models exhibit emergent abilities: they can perform tasks like summarization, translation, and question answering without task-specific training. These capabilities arise from next-word prediction pretraining, and the models can adapt to new tasks without parameter updates through in-context learning, that is, by reading instructions or examples placed in the prompt.
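In-context learning amounts to putting worked examples into the prompt and letting the model continue the pattern; the model's parameters stay untouched. A minimal sketch of assembling such a few-shot prompt. The task, the examples, and the "Input:/Output:" layout are arbitrary choices for illustration, and the resulting string would be passed to whichever decoder-only model you use.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Format demonstrations plus a new query; the model is expected to
    continue the text after the final 'Output:'."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```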

Encoder-decoder hybrids blend the strengths of both architectures. Models like BART and T5 exemplify this, often introducing new pretraining techniques and objectives. These hybrids are well suited to tasks like translation and summarization, which require learning complex mappings between input and output sequences.

Other essential concepts for understanding foundation models

Tokenization: Imagine you have a jigsaw puzzle of a text. Tokenization is like breaking that text into individual puzzle pieces so a machine can understand and process each piece. In the context of language models, tokenization is the act of splitting a text into smaller units (tokens), often words or subwords, which the model can then process.
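A minimal sketch of subword tokenization: greedy longest-match against a fixed vocabulary, loosely in the spirit of WordPiece. The tiny `VOCAB` set is hypothetical; real tokenizers (BPE, WordPiece, SentencePiece) learn vocabularies of tens of thousands of pieces from data and differ in details such as continuation markers and byte-level fallbacks.

```python
# Hypothetical vocabulary; real ones are learned from a corpus.
VOCAB = {"un", "believ", "able", "token", "ization", "s", "the"}

def tokenize_word(word, vocab=VOCAB):
    """Split one word into the longest vocabulary pieces, left to right."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:          # no piece matches: fall back to an unknown token
            return ["<unk>"]
        pieces.append(word[start:end])
        start = end
    return pieces

def tokenize(text):
    return [piece for word in text.lower().split() for piece in tokenize_word(word)]

print(tokenize("Unbelievable tokenization"))
```

Here "Unbelievable tokenization" becomes five pieces (`un`, `believ`, `able`, `token`, `ization`), each of which the model then maps to an embedding.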

Parametrization: Think of a foundation model as a sophisticated robot. Just like a robot has adjustable settings and parts that help it perform tasks, a foundation model has parameters (often millions or billions of them) that are adjusted during training so that they come to encode patterns in the data. When we say "parametrization," we're referring to these parameters and how they are set so the model can make accurate predictions or generate relevant content.
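"Adjusting parameters during training" usually means gradient descent: each parameter is nudged in the direction that reduces a loss. A minimal sketch with a two-parameter model y = w·x + b fit to made-up data generated from y = 2x + 1. A foundation model follows the same principle with billions of parameters, a far more complex loss, and more sophisticated optimizers.

```python
# Made-up training data that follows y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]

w, b = 0.0, 0.0          # the model's two parameters, initialized arbitrarily
learning_rate = 0.02

for step in range(2000):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
    # Move each parameter a small step against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w = {w:.3f}, b = {b:.3f}")
```

After training, the parameters have recovered the pattern in the data (w close to 2, b close to 1).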

In essence, tokenization helps convert text into a format the model can understand, and parametrization is about adjusting the model's internal settings for optimal performance.

Or as Yann LeCun explained recently: "It makes absolutely no sense to write:

'PaLM 2 is trained on about 340 billion parameters. By comparison, GPT-4 is rumored to be trained on a massive dataset of 1.8 trillion parameters.'

It would make more sense to write:

'PaLM 2 possesses about 340 billion parameters and is trained on a dataset of 2 billion tokens (or words). By comparison, GPT-4 is rumored to possess a massive 1.8 trillion parameters trained on untold trillions of tokens.'

Parameters are coefficients inside the model that are adjusted by the training procedure. The dataset is what you train the model on. Language models are trained with tokens that are subword units (e.g. prefix, root, suffix)."
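To see that parameters are a property of the model rather than of the dataset, here is a back-of-the-envelope count for a single Transformer layer. It assumes the original encoder-layer design: four d×d attention projections with biases, a two-layer feed-forward block with biases, and two layer norms. The dimensions (d_model = 512, d_ff = 2048) are the base configuration of the 2017 paper; embeddings and the output layer are ignored.

```python
def transformer_layer_params(d_model, d_ff):
    """Parameters in one encoder layer (weights plus biases)."""
    attention = 4 * (d_model * d_model + d_model)        # Q, K, V and output projections
    feed_forward = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    layer_norms = 2 * (2 * d_model)                      # scale and shift, twice
    return attention + feed_forward + layer_norms

per_layer = transformer_layer_params(d_model=512, d_ff=2048)
print(f"{per_layer:,} parameters per layer")
print(f"{6 * per_layer:,} parameters in a 6-layer encoder stack")
# The training-set size in tokens appears nowhere in this calculation.
```

Training on more tokens changes the values of these parameters, not how many there are.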

Parameters are what fine-tuning adjusts; prompt engineering, by contrast, steers a model's behavior without changing its parameters. We will talk about both in detail in later Tokens.

That wraps up transformer-based generative models. Next stop →

Diffusion-Based Models

Diffusion-based models have emerged as another key player, with applications in image tasks such as text-to-image generation and inpainting, video tasks such as synthesis and retargeting, and audio tasks such as speech and music synthesis.

Cutting-edge tech firms such as Nvidia, Google, Adobe, and OpenAI have thrust diffusion models into the spotlight. Models like DALL-E 2 and DALL-E 3, Stable Diffusion, and Midjourney changed the way we think about image creation.

Basics

In 2015, researchers from Stanford University and UC Berkeley published the paper ‘Deep Unsupervised Learning using Nonequilibrium Thermodynamics’, introducing diffusion models (the concept originates in non-equilibrium statistical physics). The main idea behind these models is to corrupt training data by progressively adding noise until nothing but noise remains, and then learn how to reverse this corruption to generate new samples.

Here is what the process looks like:

Forward Diffusion. The process begins with a sample from the real data distribution (for example, an image) and adds a small amount of Gaussian noise to it at each of many steps. This noising schedule is fixed rather than learned, and after enough steps the sample is indistinguishable from pure Gaussian noise, a simple distribution that is easy to sample from.
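In the widely used DDPM formulation (Ho et al., 2020), which refines the 2015 setup, the noisy sample at step t can be drawn directly from the clean sample x_0 as x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, with ε ~ N(0, 1) and ᾱ_t the running product of (1 − β_s). The sketch below applies this to a single scalar "pixel" with a linear β schedule; the endpoints (1e-4 to 0.02 over 1,000 steps) follow the DDPM paper's defaults but should be read as one common choice, not the only one.

```python
import math
import random

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]  # linear noise schedule

# alpha_bar[t] = product of (1 - beta_s) for s <= t: how much signal survives.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)

def noisy_sample(x0, t, rng=random):
    """Draw x_t ~ q(x_t | x_0) in one shot instead of t separate noising steps."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * eps

rng = random.Random(0)
x0 = 0.8  # a single clean "pixel" value
for t in [0, 250, 500, 999]:
    # step, remaining signal coefficient, noisy value
    print(t, round(math.sqrt(alpha_bar[t]), 4), round(noisy_sample(x0, t, rng), 3))
```

The signal coefficient shrinks from almost 1 at the first step to nearly 0 at the last, so the final sample is essentially pure noise.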

Training the Model. A neural network learns to undo a single noising step: given a noisy sample and the step number, it predicts the noise that was added (or, equivalently, a cleaner version of the sample). Training minimizes a loss that measures how far this prediction is from the noise actually added. This is closely related to score-based modeling, which estimates the score function, that is, the gradient of the log-density of the data distribution with respect to the data itself.
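The DDPM-style training step can be sketched as: pick a random step t, noise a clean sample, ask the network to predict the noise, and score the prediction with squared error. `predict_noise` below is a hypothetical placeholder for the neural network (a real model takes a whole image plus t and has millions of parameters); the point is the shape of the loss, not the model. The noise schedule repeats the linear DDPM default as an assumption.

```python
import math
import random

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bar, running = [], 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)

def predict_noise(x_t, t):
    """Hypothetical placeholder for the learned denoiser eps_theta(x_t, t)."""
    return 0.0  # an untrained "network" that always guesses zero noise

def diffusion_loss(batch, rng):
    """Simplified DDPM objective: mean of (eps - eps_theta(x_t, t))^2."""
    total = 0.0
    for x0 in batch:
        t = rng.randrange(T)                      # random timestep per example
        eps = rng.gauss(0.0, 1.0)                 # the noise we add...
        x_t = math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * eps
        total += (eps - predict_noise(x_t, t)) ** 2   # ...is the regression target
    return total / len(batch)

rng = random.Random(42)
print(diffusion_loss([0.8, -0.3, 0.5, 0.1], rng))
```

Because each example gets its own random t, a whole batch can be trained in parallel without running the noising chain step by step. Training a real network drives this loss below the roughly 1.0 that the zero-guessing placeholder achieves.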

Reverse Diffusion. Once the model has been trained, it can generate new data samples by running the process in reverse: it starts from pure Gaussian noise and repeatedly applies the learned denoising step, gradually turning the noise into a sample that resembles the training data. This is the crux of the model's generative power, enabling it to create new samples that are remarkably similar to the original data.
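To show the reverse process end to end without training a network, the sketch below uses a toy case where the ideal denoiser is known exactly: 1-D data drawn from a Gaussian N(μ, s²), with μ = 3 and s = 0.5 chosen arbitrarily. For such data the best possible noise prediction has a closed form, so `ideal_noise_prediction` stands in for the learned network. Sampling follows the DDPM update with noise scale σ_t² = β_t (one of the two choices in the DDPM paper), starting from pure noise and denoising step by step.

```python
import math
import random

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alphas = [1.0 - b for b in betas]
alpha_bar, running = [], 1.0
for a in alphas:
    running *= a
    alpha_bar.append(running)

MU, S = 3.0, 0.5  # toy data distribution N(MU, S^2); arbitrary choices

def ideal_noise_prediction(x_t, t):
    """Exact E[eps | x_t] for Gaussian data; stands in for a trained network."""
    var_t = alpha_bar[t] * S**2 + 1.0 - alpha_bar[t]
    return math.sqrt(1.0 - alpha_bar[t]) * (x_t - math.sqrt(alpha_bar[t]) * MU) / var_t

def sample(n, rng):
    """DDPM ancestral sampling: start from pure noise, denoise for T steps."""
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]           # x_T ~ N(0, 1)
    for t in reversed(range(T)):
        coef = betas[t] / math.sqrt(1.0 - alpha_bar[t])
        for i, x in enumerate(xs):
            mean = (x - coef * ideal_noise_prediction(x, t)) / math.sqrt(alphas[t])
            noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0   # no noise on the last step
            xs[i] = mean + math.sqrt(betas[t]) * noise
    return xs

samples = sample(500, random.Random(0))
mean = sum(samples) / len(samples)
std = math.sqrt(sum((x - mean) ** 2 for x in samples) / len(samples))
print(f"sample mean {mean:.2f}, sample std {std:.2f}")  # should land near 3 and 0.5
```

Samples that started as standard normal noise end up distributed like the toy data. With real images, the only change in principle is that a trained network replaces the closed-form predictor.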

The outcome? A model adept at generating images from random noise, optionally guided by a conditioning signal such as a text embedding. Advantages of diffusion models include stable training without an adversarial setup, scalability, and parallelizable training.

Stable Diffusion

Source: Original paper

The problem was that, operating directly in pixel space, diffusion models demanded a lot of GPU time, which made both optimization (training) and inference very costly. In December 2021, Runway ML, in collaboration with LMU Munich, published the paper ‘High-Resolution Image Synthesis with Latent Diffusion Models’. It builds on the original 2015 idea of diffusion models but runs the diffusion process in a latent space. An autoencoder compresses images into a lower-dimensional latent space while preserving perceptually important detail. This lets Latent Diffusion Models (LDMs) combine computational efficiency with close attention to visual detail. The approach has not only broadened the range of applications, from image inpainting to super-resolution, but also set new performance benchmarks, all while reducing computational overhead.
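A quick back-of-the-envelope comparison shows why moving to latent space saves compute. The numbers assume a 512×512 RGB image and an autoencoder that downsamples by a factor of 8 into 4 latent channels, the configuration commonly used by Stable Diffusion v1; other LDM variants use different factors. Compute does not shrink exactly in proportion to the number of values, but the reduction is large.

```python
def num_values(height, width, channels):
    return height * width * channels

pixels = num_values(512, 512, 3)              # RGB image in pixel space
downsample, latent_channels = 8, 4            # assumed autoencoder configuration
latents = num_values(512 // downsample, 512 // downsample, latent_channels)

print(f"pixel space:  {pixels:,} values")
print(f"latent space: {latents:,} values")
print(f"reduction:    {pixels / latents:.0f}x fewer values for the denoiser to process")
```

The diffusion model runs all of its denoising steps on the small latent; the autoencoder's decoder maps the result back to pixels only once at the end.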

Stable Diffusion is based on the Latent Diffusion Model, scaled up with a compute donation from Stability AI and training data from non-profit organizations.

Imagen

Source: Original blog post

Imagen by Google Research raises the bar in text-to-image synthesis by combining large pre-trained text encoders with diffusion models. In particular, human evaluators preferred a T5-XXL text encoder over CLIP on both image-text alignment and image fidelity.

Imagen uses conditional diffusion models that transform Gaussian noise into samples from the learned data distribution. These models use classifier-free guidance to condition effectively on the text.
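Classifier-free guidance combines two noise predictions from the same network, one conditioned on the text and one unconditional (text dropped), and extrapolates away from the unconditional one: ε̃ = ε_uncond + w · (ε_cond − ε_uncond). With w = 1 this is just the conditional prediction; larger w pushes samples to follow the prompt more closely, typically at some cost in diversity. A minimal sketch on plain lists; the two predictions and the guidance weight are made-up values standing in for network outputs.

```python
def classifier_free_guidance(eps_uncond, eps_cond, w):
    """Guided noise estimate: eps_uncond + w * (eps_cond - eps_uncond)."""
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]

# Hypothetical per-pixel noise predictions for a tiny 3-value "image".
eps_uncond = [0.10, -0.20, 0.05]
eps_cond = [0.30, -0.10, 0.00]
print(classifier_free_guidance(eps_uncond, eps_cond, w=1.0))  # same as eps_cond (up to float rounding)
print(classifier_free_guidance(eps_uncond, eps_cond, w=5.0))  # text direction amplified
```

The guided estimate then replaces the plain noise prediction at every denoising step.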

DALL-E 2

Source: original paper

DALL-E 2 by OpenAI adopts a two-tiered architecture with a CLIP encoder and diffusion decoder.

Prior model: This generates a CLIP image embedding given a text prompt. Two types of priors are explored: autoregressive and diffusion. The prior condenses the text into a semantic vector representation.

Decoder model: This is a conditional diffusion model that takes the CLIP image embedding from the prior and generates the final image. The decoder converts the semantic vector into a detailed image.

This two-tier architecture decomposes the text-to-image problem into a semantic conditioning stage and a detailed image synthesis stage for better control, efficiency, and capabilities. This allows for the nuanced alteration of images – tweaking non-essential details while preserving core semantics and style.
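One way the DALL-E 2 paper illustrates this semantic control is by interpolating between the CLIP image embeddings of two images and decoding the intermediate embeddings, using spherical interpolation (slerp), which moves along the arc between two vectors rather than the straight line between them. A minimal sketch of slerp on plain lists; the 3-dimensional vectors are hypothetical stand-ins for CLIP embeddings, which have hundreds of dimensions, and the decoding step is not shown.

```python
import math

def slerp(u, v, t):
    """Spherical linear interpolation between vectors u and v, t in [0, 1]."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    theta = math.acos(max(-1.0, min(1.0, cos_theta)))  # clamp for rounding errors
    if theta < 1e-6:                                    # nearly parallel: fall back to lerp
        return [(1 - t) * a + t * b for a, b in zip(u, v)]
    w_u = math.sin((1 - t) * theta) / math.sin(theta)
    w_v = math.sin(t * theta) / math.sin(theta)
    return [w_u * a + w_v * b for a, b in zip(u, v)]

emb_a = [1.0, 0.0, 0.0]   # hypothetical embedding of image A
emb_b = [0.0, 1.0, 0.0]   # hypothetical embedding of image B
for t in [0.0, 0.5, 1.0]:
    print(t, [round(x, 3) for x in slerp(emb_a, emb_b, t)])
```

For unit-length embeddings, every intermediate point stays on the unit sphere, so each one still looks like a plausible embedding to the decoder.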

DALL-E 3 is OpenAI's most recent model. The key difference is that DALL-E 3 is trained on higher-quality, synthetically generated image captions, which improves its ability to follow prompts. Overall image quality has also improved significantly.

Midjourney

Midjourney by David Holz's AI research lab is a bit mysterious. Very little has been published about its inner workings, but it appears to be pretrained on billions of images and inspired by models like CLIP. The quality suggests advanced techniques and large-scale infrastructure, with some estimates of over 10,000 servers. Midjourney's main interface is simple text commands, but it intelligently parses prompts to create beautiful, intricate images.

Additional info: TheSequence produced an insightful series on text-to-image synthesis models, which extensively covers diffusion models.

Conclusion

In this Token, we discussed Transformer- and diffusion-based architectures. Transformers, with their encoder-decoder framework, have revolutionized NLP by capturing intricate word relationships through embeddings and self-attention. Diffusion models, on the other hand, have redefined image synthesis by learning to reverse a process that progressively adds noise. As AI becomes more deeply integrated into various technological domains, understanding foundation models is crucial for anyone keen on staying ahead in the AI race. We briefly mentioned fine-tuning and prompt engineering, but we haven't yet touched on large vs. small FMs, open- vs. closed-source FMs, or the classification of FM modalities. All this (and much more!) will be covered in the next Tokens. Stay tuned!


Thank you for reading! Please feel free to share this with your friends and colleagues. In the next couple of weeks, we are announcing our referral program 🤍