Introduction

In this series, we've traced the development of Large Language Models (LLMs) from the 1930s to the 2020s and delved into ChatGPT's secrets. Today, we want to share a bonus episode in which we explore three leading open-source LLMs and their fine-tuning results, using the average score from the 🤗 Open LLM leaderboard to guide us.

🏆 Top-3

As of July 2023, the top-performing LLMs based on the average score from the four key benchmarks are:

Llama 2-70B

LLaMA-65B and LLaMA-30B

Falcon-40B

⚖️ Evaluation

The 🤗 Open LLM leaderboard provides a comprehensive assessment of various LLMs based on four key benchmarks furnished by the EleutherAI Language Model Evaluation Harness:

AI2 Reasoning Challenge (ARC) (25-shot) evaluates models' reasoning skills through grade-school science questions.

HellaSwag (10-shot) tests commonsense inference, posing a challenge for state-of-the-art models despite being relatively easy for humans.

MMLU (5-shot) measures multitask accuracy across 57 tasks, including elementary mathematics, US history, computer science, law, and more.

TruthfulQA (0-shot) examines models' tendency to reproduce online falsehoods.
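To make the ranking criterion concrete, here is a minimal sketch of how an average over the four benchmarks can be computed. It assumes a plain unweighted mean; the function name `leaderboard_average` and the scores are hypothetical and are not real leaderboard results.

```python
from statistics import mean

# Benchmarks and their few-shot settings, as listed above.
BENCHMARK_SHOTS = {"ARC": 25, "HellaSwag": 10, "MMLU": 5, "TruthfulQA": 0}


def leaderboard_average(scores):
    """Unweighted mean over the four benchmarks; all four must be present."""
    missing = set(BENCHMARK_SHOTS) - set(scores)
    if missing:
        raise ValueError(f"missing benchmark scores: {sorted(missing)}")
    return mean(scores[name] for name in BENCHMARK_SHOTS)


# Hypothetical scores in percent, for illustration only.
example_scores = {"ARC": 60.0, "HellaSwag": 80.0, "MMLU": 50.0, "TruthfulQA": 40.0}
average = leaderboard_average(example_scores)
print(f"average = {average:.1f}")
```

Because every benchmark carries the same weight, a model that is weak on one of them can still rank highly if it is strong on the other three.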

Let’s go deeper into each model!

LLaMA

In February 2023, Meta AI introduced the LLaMA (Large Language Model Meta AI) series of models, but access was restricted to a select group of researchers from academia, government, civil society, and industry laboratories. Others could apply for access through a request form, which sparked concerns about the limited availability of the model.

Yann LeCun, the chief AI scientist at Meta AI, explained why the model is not so easily available. He referred to the past criticism of Meta AI when it made an LLM available to everyone (Galactica), leading to fears of misuse.

The limited commercial usage of the LLaMA model was another concern, as was the not-quite-open nature of this “open-source” model.

But barely a week after its release, something unexpected happened: the model was leaked on 4chan, spreading like wildfire with thousands of downloads. This leak produced a plethora of new models built on LLaMA as their foundation.

The original LLaMA release came in four sizes: 7B, 13B, 30B, and 65B, and demonstrated outstanding performance on various benchmarks. LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, Chinchilla-70B and PaLM-540B.

Image Credit: original LLaMA paper

What’s interesting is that the LLaMA creators aimed to make research on foundational models accessible to more people in the research community, but soon after the leak researchers all over the world discovered that the smallest model with 7B parameters required almost 30 GB of GPU memory to run.

Image Source: Reddit comment

Despite initial challenges, researchers managed to optimize the model and reduce the required computing power, leading to the development of multiple new projects that utilized LLaMA as a basis for advanced LLM agents.

🌳 LLaMA heritage

The release of LLaMA sparked an unprecedented wave of innovation in the open-source LLM community. The projects leveraged LLaMA's capabilities to create a diverse range of models, each pushing the boundaries of what was possible in the field:

Stanford University unveiled Alpaca, an instruction-following model based on the LLaMA 7B model. (The public demo is now disabled until further notice.)

A collaboration among researchers from UC Berkeley, CMU, Stanford, and UC San Diego resulted in the open-sourcing of Vicuna, a fine-tuned version of LLaMA that reaches more than 90% of ChatGPT's quality in an evaluation that used GPT-4 as the judge.

The Berkeley Artificial Intelligence Research (BAIR) lab introduced Koala, a version of LLaMA that underwent fine-tuning using internet dialogues.

Nebuly contributed to the open-source landscape with ChatLlama, a framework that enables the creation of conversational assistants using custom data.

FreedomGPT, an open-source conversational agent, draws its foundation from Alpaca, which in turn is based on LLaMA.

UC Berkeley's Colossal-AI project released ColossalChat, a ChatGPT-like model featuring a comprehensive RLHF (Reinforcement Learning from Human Feedback) pipeline built on top of LLaMA.

A multitude of researchers have extended LLaMA models through instruction tuning or continual pre-training. Instruction tuning, in particular, has emerged as a prominent approach for developing customized or specialized models, owing to its relatively low computational costs. Now, LLaMA lies at the foundation of many exceptional models taking the top places in the ranking of open-source models.

Falcon-40B (Known worldwide, based in UAE)

The Falcon family is composed of two base models: Falcon-40B and its little brother Falcon-7B. On its release, the 40B parameter model topped the charts of the 🤗 Open LLM leaderboard, while the 7B model is one of the best in its weight class.

Image Credit: Technology Innovation Institute company card on Hugging Face

Falcon 40B, developed by the Technology Innovation Institute (TII), proudly holds the distinction of being the first home-grown model from the UAE and the Middle East. This LLM serves as a foundational milestone, boasting an impressive parameter count of 40 billion. Notably, it is the first “truly open” model with capabilities rivaling many current closed-source models.

Image Credit: Original RefinedWeb paper

Its training was carried out using one trillion tokens derived from the RefinedWeb dataset. Just FYI, the public extract of RefinedWeb takes about 500GB of storage to download, and 2.8TB once unpacked.

To construct the RefinedWeb dataset, public web crawls were utilized to gather pre-training data. Careful filtering was employed, excluding machine-generated text and adult content, resulting in a dataset of nearly five trillion tokens. To broaden the capabilities of Falcon, curated sources such as research papers and social media conversations were incorporated into the dataset.

The process of construction of the RefinedWeb dataset. Image Credit: Original RefinedWeb paper

Another interesting feature of the Falcon models is their use of multi-query attention. This trick doesn’t significantly influence pre-training, but it greatly improves the scalability of inference, reducing memory costs and enabling novel optimizations such as statefulness.
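The idea behind multi-query attention can be sketched in a few lines of NumPy. This is an illustrative toy, not Falcon's actual implementation: the function name, the shapes, and the omission of the output projection are all assumptions made for brevity. The key point is that every query head shares one key head and one value head, so the cached keys and values are `n_heads` times smaller than in standard multi-head attention.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_query_attention(x, w_q, w_k, w_v, n_heads):
    """Causal multi-query attention for a single sequence.

    x:   (seq, d_model)
    w_q: (d_model, n_heads * d_head), one query projection per head
    w_k: (d_model, d_head), a single key head shared by all query heads
    w_v: (d_model, d_head), a single value head shared by all query heads
    """
    seq = x.shape[0]
    d_head = w_k.shape[1]
    q = (x @ w_q).reshape(seq, n_heads, d_head).transpose(1, 0, 2)  # (heads, seq, d_head)
    k = x @ w_k  # (seq, d_head): this is all that needs caching for keys
    v = x @ w_v  # (seq, d_head): and for values
    scores = q @ k.T / np.sqrt(d_head)  # (heads, seq, seq)
    # Mask out future positions so each token attends only to itself and the past.
    future = np.triu(np.ones((seq, seq), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    out = softmax(scores, axis=-1) @ v  # (heads, seq, d_head)
    return out.transpose(1, 0, 2).reshape(seq, n_heads * d_head), (k, v)


rng = np.random.default_rng(0)
seq, d_model, n_heads, d_head = 5, 32, 4, 8
x = rng.normal(size=(seq, d_model))
w_q = rng.normal(size=(d_model, n_heads * d_head))
w_k = rng.normal(size=(d_model, d_head))
w_v = rng.normal(size=(d_model, d_head))
out, (k_cache, v_cache) = multi_query_attention(x, w_q, w_k, w_v, n_heads)
print(out.shape, k_cache.shape, v_cache.shape)
```

With standard multi-head attention the key and value caches would each hold `n_heads * d_head` values per token; here they hold only `d_head`, which is where the inference-time memory saving comes from.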

The training process for Falcon 40B spanned two months, making use of 384 GPUs on AWS. While these numbers sound huge, that is only 75 percent of GPT-3’s training compute, 40 percent of Chinchilla’s, and 80 percent of PaLM-62B’s. Falcon-7B, for its part, needs only ~15GB of GPU memory, making inference and fine-tuning accessible even on consumer hardware.
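A rough back-of-the-envelope check helps put such memory figures in context. The sketch below estimates the memory taken by the weights alone, assuming nominal parameter counts (7e9 and 40e9) and common numeric precisions. Activations, the attention cache, and framework overhead come on top, so real requirements are somewhat higher. The helper name `weight_memory_gb` is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(n_params, dtype):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter counts; the exact counts differ slightly.
for name, n_params in [("7B", 7e9), ("40B", 40e9)]:
    for dtype in BYTES_PER_PARAM:
        print(f"{name} {dtype}: {weight_memory_gb(n_params, dtype):.0f} GB")
```

Under these assumptions a 7B model needs about 14 GB in 16-bit precision, in line with the ~15GB figure above, and about 28 GB in 32-bit precision, which is consistent with the "almost 30 GB" early LLaMA-7B users reported.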

📝 Fine-tuned Falcon-40B for instructions

Falcon-40B-Instruct, a remarkable causal decoder-only model with 40B parameters, has also been developed by TII to excel in processing generic instructions within a chat-based format. The name of this model is derived from its base counterpart, Falcon-40B, which underwent fine-tuning on a blend of Baize data to ensure its suitability for instruction-based interactions.

Baize, named after a mythical creature from Chinese folklore, is an open-source chat model trained using Low-Rank Adaptation of Large Language Models (LoRA), which we discuss below. To train Baize, 100k dialogues were generated by allowing ChatGPT to engage in conversations with itself. Additionally, Alpaca data was incorporated to enhance Baize's performance.

Falcon-40B-Instruct stands out as a formidable alternative to ChatGPT, and it secured the top position on the 🤗 Open LLM leaderboard on its release. You should also be aware of its limitations: primarily trained on English data, Falcon-40B-Instruct may not exhibit optimal performance when applied to other languages. Furthermore, due to its training on extensive web corpora, the model may inadvertently perpetuate stereotypes and biases commonly encountered online.

🔓 LoRA: The Game-Changer in Large Language Model Optimization

The emergence of open-source LLMs like LLaMA has sparked a frenzy of interest and activity because it proved that size isn't everything when it comes to achieving state-of-the-art performance.

Traditional LLMs, the behemoth ones, were notorious for their exorbitant costs to train, fine-tune, and operate. For example, GPT-3 boasts 175B parameters! Deploying independent instances of fine-tuned models with such a large parameter count would be prohibitively expensive. However, researchers have developed techniques like Low-Rank Adaptation of Large Language Models (LoRA) to address this challenge.

LoRA dramatically slashes the number of trainable parameters for downstream tasks. Compared to fine-tuning GPT-3 175B with Adam, LoRA can reduce the number of trainable parameters by a jaw-dropping 10,000 times and trim the GPU memory requirement by 3 times. LoRA doesn't just cut costs: it achieves comparable, if not superior, model quality when applied to renowned models like RoBERTa, DeBERTa, GPT-2, and even GPT-3.

Image Credit: Original LoRA paper

Researchers have released a package that simplifies the integration of LoRA with PyTorch models, including implementations and model checkpoints for models such as RoBERTa, DeBERTa, and GPT-2.

LoRA made a positive impact on the broader adoption and accessibility of sophisticated language models in various applications and domains.
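To see where the parameter savings come from, here is a minimal NumPy sketch of the core LoRA idea: the pretrained weight matrix stays frozen, and only a low-rank update made of two small matrices is trained. This is not the API of the released package. The class name `LoRALinear` and the layer size, rank, and scaling values are illustrative assumptions.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, w, r, alpha, rng):
        d_out, d_in = w.shape
        self.w = w                                       # frozen pretrained weight
        self.a = rng.normal(scale=0.01, size=(r, d_in))  # trainable, small random init
        self.b = np.zeros((d_out, r))                    # trainable, zero init
        self.scale = alpha / r

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.w.T + self.scale * (x @ self.a.T) @ self.b.T

    def trainable_params(self):
        return self.a.size + self.b.size

    def merged_weight(self):
        # After training, the update can be folded back into W,
        # so inference costs the same as with the original layer.
        return self.w + self.scale * self.b @ self.a


rng = np.random.default_rng(0)
d = 1024  # illustrative layer width
layer = LoRALinear(rng.normal(size=(d, d)), r=8, alpha=16, rng=rng)
x = rng.normal(size=(2, d))
full_params = d * d
print(layer.trainable_params(), full_params, full_params // layer.trainable_params())
```

Because B starts at zero, the adapted layer initially behaves exactly like the frozen one, and training only has to learn the low-rank correction. The reduction factor grows with the layer width, which is why the savings are so large for models the size of GPT-3.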

Llama 2

The LLaMA model released in February 2023 produced a new wave of great models and was a remarkable milestone in the history of open-source LLMs. Thank you, 4chan user!

However, the landscape of LLMs experienced a seismic shift again when Meta AI unleashed Llama 2. It’s a collection of pre-trained (Llama 2) and fine-tuned (Llama 2-Chat) LLMs, varying in scale from 7 billion to 70 billion parameters. And it finally had a commercial license!

The impact of this release was nothing short of monumental, as Llama 2 soared to the top spot on the 🤗 Open LLM leaderboard, surpassing the previously dominant LLaMA, Falcon, and other LLMs and chatbots. Moreover, the human evaluations for helpfulness and safety suggest that Llama 2 models could be viable alternatives to closed-source models.

Researchers crafted the new Llama 2 family using a pre-training approach similar to that of the initial LLaMA, but with several modifications. Notably, they implemented more robust data cleaning, updated the data mixes, trained on 40% more total tokens, doubled the context length, and utilized grouped-query attention (GQA) to enhance inference scalability for their larger models.

A comparison of the attributes of the new Llama 2 models with the Llama 1 models. Image Credit: Meta AI
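The benefit of grouped-query attention for inference can be estimated with simple arithmetic on the key-value cache. The sketch below assumes a configuration matching the published Llama 2-70B setup (80 layers, 64 query heads, 8 key-value heads, head dimension 128, 4,096-token context) and 16-bit cache values. Treat these numbers as assumptions for illustration, and the helper `kv_cache_bytes` as hypothetical.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # The leading factor of 2 accounts for storing both keys and values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed configuration, see the lead-in above.
n_layers, n_query_heads, head_dim, seq_len = 80, 64, 128, 4096

variants = {
    "multi-head (64 KV heads)": n_query_heads,
    "grouped-query (8 KV heads)": 8,
    "multi-query (1 KV head)": 1,
}
for name, n_kv_heads in variants.items():
    gib = kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len) / 2**30
    print(f"{name}: {gib:.2f} GiB per sequence")
```

Under these assumptions, sharing each key-value head among eight query heads cuts the per-sequence cache from 10 GiB to 1.25 GiB, leaving room for longer contexts or larger batches on the same hardware.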

With a training corpus of 2 trillion tokens, the pre-training data was thoughtfully sourced from publicly available sources. This fresh mix of data laid the foundation for the improved capabilities of Llama 2.

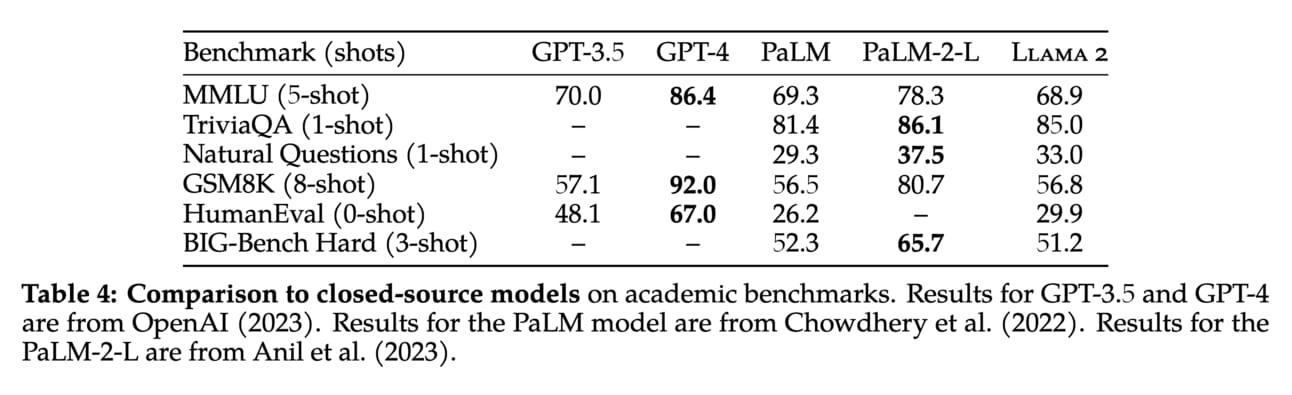

In addition to open-source models, researchers also compared Llama 2-70B results to closed-source models. It is close to GPT-3.5 on MMLU and GSM8K, but there is a significant gap on coding benchmarks. Llama 2-70B results are on par with or better than PaLM (540B) on almost all benchmarks. There is still a large gap in performance between Llama 2-70B and both GPT-4 and PaLM-2-L.

A comparison of Llama 2-70B with closed-source models on academic benchmarks. Image Credit: Meta AI

Although the Llama 2 family is very new, many remarkable fine-tuned versions of it have already taken top places on the 🤗 Open LLM leaderboard.

🧳 The Journey of Llama 2-Chat: Advancements in Dialogue Models

Llama 2-Chat, an impressive fine-tuned version of Llama 2, has been specifically designed to excel in dialogue use cases.

Image Credit: Meta AI

To optimize the model, the researchers initially used Supervised Fine-Tuning (SFT) with publicly available instruction tuning data from the original LLaMA paper. Later on, they collected several thousand examples of high-quality SFT data. They found that focusing on a smaller yet superior set of examples from Meta AI's annotation efforts resulted in remarkable improvements. Surprisingly, only tens of thousands of SFT annotations were enough to achieve high-quality results, leading them to stop annotating SFT after reaching a total of 27,540 annotations.

An intriguing discovery emerged during this process: outputs sampled from the resulting SFT model often rivaled those written by human annotators. This finding prompted the researchers to reprioritize and put more effort into preference-based annotation for RLHF.

In their pursuit of reward modeling, the researchers collected human preference data. Annotators were asked to provide prompts and then choose between two sampled model responses, focusing mainly on criteria like helpfulness and safety.

Image Credit: Meta AI

The researchers compared their data against various open-source preference datasets, including Anthropic Helpful and Harmless, OpenAI Summarize, OpenAI WebGPT, StackExchange, Stanford Human Preferences, and Synthetic GPT-J. Their large dataset comprised over 1 million binary comparisons, featuring more conversation turns and longer prompts compared to existing open-source datasets, particularly in domains like summarization and online forums.
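Binary comparisons like these are commonly turned into a training signal for a reward model through a pairwise ranking loss: the model is penalized whenever it scores the rejected response close to or above the chosen one. The sketch below shows one common formulation in NumPy. The function name, the optional `margin` argument, and the reward values are assumptions for illustration, not the researchers' exact setup.

```python
import numpy as np


def pairwise_ranking_loss(reward_chosen, reward_rejected, margin=0.0):
    """Mean of -log(sigmoid(r_chosen - r_rejected - margin)) over comparison pairs."""
    diff = (
        np.asarray(reward_chosen, dtype=float)
        - np.asarray(reward_rejected, dtype=float)
        - margin
    )
    # -log(sigmoid(d)) equals log(1 + exp(-d)); logaddexp keeps this numerically stable.
    return float(np.mean(np.logaddexp(0.0, -diff)))


# Hypothetical reward-model scores for three (chosen, rejected) response pairs.
chosen = [1.2, 0.3, 2.0]
rejected = [0.1, 0.5, -1.0]
print(f"loss = {pairwise_ranking_loss(chosen, rejected):.4f}")
```

The loss approaches zero when the chosen response is scored far above the rejected one, and grows roughly linearly when the ordering is reversed, which pushes the reward model toward agreeing with the annotators.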

🧬 FreeWilly2: Fueled by Llama 2 and Synthetic Data

Another intriguing model that emerged from fine-tuning Llama 2 is FreeWilly2, which proudly claims the top spot on the 🤗 Open LLM leaderboard. Developed collaboratively by Stability AI and their CarperAI lab, FreeWilly2 harnesses the power of the Llama 2 70B foundation model to achieve remarkable performance that compares favorably with GPT-3.5 on certain tasks.

FreeWilly2 is released to promote open research under a non-commercial license. The training process of these models drew inspiration from Microsoft's pioneering methodology outlined in their paper "Orca: Progressive Learning from Complex Explanation Traces of GPT-4." While the data generation process shares similarities, there are differences in data sources.

Stability AI's variant of the dataset, comprising 600,000 data points (approximately 10% of the original Orca paper's dataset size), involved prompting language models with high-quality instructions from datasets created by Enrico Shippole.

Training on one-tenth of the sample size of the original Orca paper significantly reduced the cost and carbon footprint of training the model. Even so, the resulting FreeWilly models demonstrated exceptional performance across various benchmarks, validating the efficacy of synthetically generated datasets.

Conclusion

The journey through the development of open-source LLMs like LLaMA, Falcon, and Llama 2 has showcased AI's remarkable abilities in natural language processing. They have democratized access, offering impressive performance and innovations that continue to shape AI. The open-source LLM community promises even more breakthroughs ahead.

The exploration of today's top open LLMs raises a crucial question: how can we use them effectively? The upcoming edition will provide valuable tips and tricks through discussions with experts. Learn how to leverage existing models, fine-tune and deploy them efficiently, and avoid common mistakes and obstacles. Even beginners will find essential advice to get started right.

To be continued…

Be sure to subscribe and share this historical series with everyone who could benefit from it.