
Research that you need to know about

Research is the litmus test for what’s really happening in AI. Today, we’re continuing our list of the most important and revealing studies from the first half of 2025. These are standout works that reflect the true landscape of AI – what the field has gone through, what it has explored, and the trends that are now shaping its future.

Here’s our second must-read list of 8 research papers from January to July 2025. (Also, check out the first part here)


Inference-Time Scaling for Complex Tasks: Where We Stand and What Lies Ahead

Conducted by researchers at Microsoft Research, this is a comprehensive empirical study on enhancing reasoning in LLMs through inference-time scaling – one of the main trends of 2025. The idea is to boost reasoning by using more compute during inference: longer chains of thought, multiple attempts, and feedback loops. The study finds a nuanced picture: while inference-time scaling helps, gains vary across domains and taper off with difficulty. More tokens don’t always mean better accuracy, and cost predictability remains a challenge. But perhaps the most important insight is this: post-training strategies – like feedback and answer selection – show real promise, often rivaling model retraining. The new wave in AI says: focus on improving inference-time performance rather than scaling model size →read the paper
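To make "multiple attempts" plus "answer selection" concrete, here is a minimal sketch of one common selection rule, majority voting over several sampled answers. It is an illustration only, not the procedure from the paper: `sample_and_select`, `majority_vote`, and the canned `fake_model` are hypothetical names, and the fake model stands in for a real LLM call.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(answers)
    # max() returns the first maximal key, and Counter keeps insertion order.
    return max(counts, key=counts.get)


def sample_and_select(generate: Callable[[str], str], prompt: str, n: int) -> str:
    """Spend extra inference compute: sample n answers, then pick one by vote."""
    answers = [generate(prompt) for _ in range(n)]
    return majority_vote(answers)


# Hypothetical stand-in for a stochastic model: it replays canned answers.
_canned = cycle(["42", "41", "42", "43", "42"])


def fake_model(prompt: str) -> str:
    return next(_canned)


result = sample_and_select(fake_model, "What is 6 * 7?", n=5)
print(result)
```

Raising `n` buys more attempts at a linear cost in tokens, which is exactly the trade-off the study measures: the vote helps only while the correct answer is the one the model produces most often.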

Continuous Thought Machines

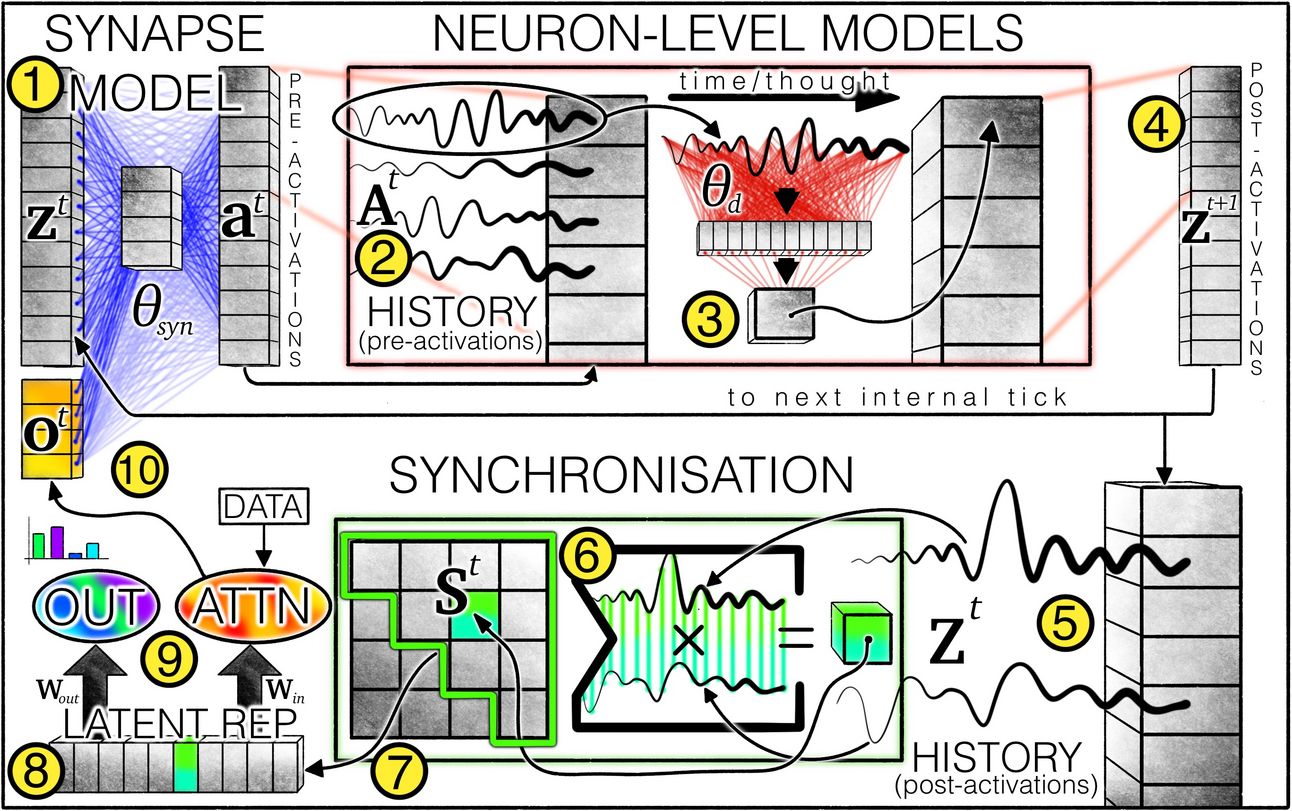

This development by Sakana AI raises the question: what if time is the missing piece in AI? The Continuous Thought Machine (CTM) is a new model where neurons look back, remember, and sync up. Timing becomes information, and patterns emerge from rhythms, not from layers. CTMs solve mazes step by step, tracing the path much as a person would. One of the best papers of 2025 so far →read the paper

CTM architecture. Image credit: CTM Report


Scalable Chain of Thoughts via Elastic Reasoning

Controllable reasoning and cost-efficiency are very important in the age of inference-time scaling strategies and reasoning models. This paper by Salesforce AI Research introduces a framework that enables large reasoning models to perform reliably under strict inference-time token, latency, or compute constraints. It divides reasoning into two stages – thinking and solution – to better handle inference constraints. Overall, the Elastic Reasoning strategy allows models to match or exceed the performance of top-tier methods while using 30–40% fewer tokens →read the paper

Image Credit: Scalable Chain of Thoughts via Elastic Reasoning
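The two-stage split described above can be sketched as a decoding loop with separate budgets for thinking and for the solution. This is a rough illustration under stated assumptions, not the paper's implementation: the `</think>` and `<eos>` markers, the `next_token` callback, and `toy_model` are all hypothetical, and forcing the end-of-thinking marker when the first budget runs out is our assumption about how such a cap might be enforced.

```python
from typing import Callable, List

END_THINK = "</think>"  # hypothetical end-of-thinking marker
EOS = "<eos>"           # hypothetical end-of-sequence marker


def budgeted_generate(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    think_budget: int,
    solution_budget: int,
) -> List[str]:
    """Generate with separate token caps for the thinking and solution stages."""
    tokens = list(prompt)

    # Stage 1: think until the model closes its reasoning or the budget runs out.
    for _ in range(think_budget):
        tok = next_token(tokens)
        tokens.append(tok)
        if tok == END_THINK:
            break
    else:
        # Budget exhausted (or zero): cut the reasoning short and force the switch.
        tokens.append(END_THINK)

    # Stage 2: the solution always gets its own reserved budget.
    for _ in range(solution_budget):
        tok = next_token(tokens)
        if tok == EOS:
            break
        tokens.append(tok)

    return tokens[len(prompt):]


def toy_model(tokens: List[str]) -> str:
    """Hypothetical model that would think forever, then answers '42'."""
    if END_THINK not in tokens:
        return "hmm"
    return EOS if tokens[-1] == "42" else "42"


output = budgeted_generate(toy_model, ["Q"], think_budget=3, solution_budget=5)
print(output)  # ['hmm', 'hmm', 'hmm', '</think>', '42']
```

Reserving the solution budget up front means a model that overthinks still has room to answer, which is the kind of reliability under hard limits that the summary points to.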

Parallel Scaling Law for Language Models

This paper by Qwen researchers introduces ParScale, a new scaling paradigm that improves performance by parallelizing computation rather than increasing model size or token count. Notice the pattern? More and more research highlights that scaling model size isn’t the only – or even the best – path to better performance. ParScale, in particular, applies multiple learnable transformations to the input in parallel and then aggregates the outputs, enabling efficient scaling during both training and inference →read the paper

Image Credit: Parallel Scaling Law for Language Models
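As a toy illustration of the "transform in parallel, then aggregate" idea described above, the sketch below runs one shared model on several transformed copies of the same input and blends the outputs with softmax weights. Everything here is an assumption made for illustration: the additive `stream_offsets` stand in for whatever learnable transformations the paper uses, `agg_logits` for its learned aggregation, and `toy_model` for a real network.

```python
import math
from typing import Callable, List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def parallel_forward(
    model: Callable[[Vector], Vector],
    x: Vector,
    stream_offsets: List[Vector],  # one (would-be learnable) transform per stream
    agg_logits: List[float],       # (would-be learnable) aggregation scores
) -> Vector:
    """Run the same model on P transformed inputs and mix the P outputs."""
    outputs = [
        model([xi + oi for xi, oi in zip(x, offset)])
        for offset in stream_offsets
    ]
    weights = softmax(agg_logits)
    dim = len(outputs[0])
    return [sum(w * out[d] for w, out in zip(weights, outputs)) for d in range(dim)]


def toy_model(v: Vector) -> Vector:
    """Hypothetical shared model: returns the sum and the max of its input."""
    return [sum(v), max(v)]


mixed = parallel_forward(
    toy_model,
    x=[1.0, 2.0],
    stream_offsets=[[0.0, 0.0], [1.0, 1.0]],
    agg_logits=[0.0, 0.0],
)
print(mixed)
```

The model's parameters are shared across streams, so adding streams grows compute rather than model size. In a real system the streams would run as one batched forward pass instead of a Python loop.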

Soft Thinking: Unlocking the Reasoning Potential of LLMs in Continuous Concept Space

This paper is a collaborative effort by researchers from the University of California (Santa Barbara, Santa Cruz, Los Angeles), Purdue University, LMSYS Org, and Microsoft. The paper proposes a training-free soft token generation approach in continuous space – moving beyond the standard discrete language tokens – to emulate abstract reasoning and boost both accuracy and efficiency in LLMs. By preserving full probability distributions at each step, Soft Thinking enables richer semantic representation and parallel exploration of reasoning paths. This shift beyond language-bound reasoning makes LLMs significantly more flexible →read the paper
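One way to picture "preserving the full probability distribution" is to feed the model a probability-weighted blend of token embeddings instead of the embedding of a single sampled token. The sketch below contrasts the two. It is a simplified illustration under our own assumptions, not the paper's code: `soft_token`, `hard_token`, and the three-word vocabulary are hypothetical.

```python
from typing import List

Vector = List[float]


def hard_token(probs: List[float], embeddings: List[Vector]) -> Vector:
    """Standard decoding: commit to the single most likely token's embedding."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return list(embeddings[best])


def soft_token(probs: List[float], embeddings: List[Vector]) -> Vector:
    """Soft alternative: a probability-weighted mixture of all token embeddings."""
    dim = len(embeddings[0])
    return [sum(p * emb[d] for p, emb in zip(probs, embeddings)) for d in range(dim)]


# Hypothetical three-token vocabulary with 2-dimensional embeddings.
embeddings = [
    [1.0, 0.0],  # "add"
    [0.0, 1.0],  # "multiply"
    [1.0, 1.0],  # "divide"
]
probs = [0.5, 0.5, 0.0]  # the model is split between "add" and "multiply"

hard = hard_token(probs, embeddings)
soft = soft_token(probs, embeddings)
print(hard, soft)
```

The hard choice discards the "multiply" option entirely, while the soft vector keeps both candidates in play for the next step. That is the sense in which several reasoning paths can be explored at once.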

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

This paper by Apple underlines an important breakdown – a model’s coping mechanism when pushed past its fundamental capacity. It shows that the model’s “CPU” overloads on complex, multi-step tasks, and its reaction is to abort the task mid-thought. This is something we should definitely overcome in the near future. A great reminder that we should be making reasoning models not merely smarter but more stable and reliable under cognitive load →read the paper

How much do language models memorize?

This paper by FAIR, Google DeepMind, Cornell University, and NVIDIA shows how a model’s storage capacity is saturated by large training sets. It separates unintended memorization (data-specific) from generalization (true pattern learning), and finds that models memorize data until their capacity fills, then stop memorizing and compress aggressively by generalizing. The authors also develop accurate scaling laws for membership inference risks. As we all need a better understanding of what models truly "remember", this fresh paper is really worth reading →read the paper

Build the web for agents, not agents for the web

A title that reads like a slogan for the new age of the web… This paper by McGill University and Mila–Quebec AI Institute proposes a redesign of web interfaces to better support agent navigation, focusing on safety, standardization, and agent-native affordances. In our recent interview with Linda Tong, CEO of Webflow, we also discussed that agents are now the main visitors to our websites and that we’re at the moment when we need to rebuild infrastructure for them. Agentic Web Interfaces (AWIs), proposed in the paper, are one of the options →read the paper