We took a little time off and, instead of the news, recorded a special history episode of 3 WOW and 1 Promise for you. It’s well worth watching →

Research that you need to know

The pace of AI in 2025 is wild – breakthroughs land faster than most of us can process. That’s exactly why it’s worth stepping back to track what’s actually shifting the field. Half a year in, we’ve already seen papers that redefine core capabilities, open new frontiers, or quietly reshape the stack.

Here’s Part 1 of our curated list: 8 research works from early 2025 that shaped how AI is built, optimized, and understood. If you’re working on anything real, they’re worth your time. Part 2 follows in two weeks.


Towards System 2 Reasoning in LLMs: Learning How to Think With Meta Chain-of-Thought (Meta-CoT)

This paper introduces Meta-CoT, a new framework for reasoning in LLMs that reflects on the reasoning process itself. Inspired by System 2 thinking, it supports iterative thinking and checking for complex problems.

Authored by researchers from SynthLabs.ai, Stanford, and UC Berkeley, it frames reasoning as a search problem over Markov Decision Processes (MDPs) and trains models using process reward models and meta-RL. Results show that reinforcement-trained models outperform instruction-tuned ones, echoing broader 2025 research trends.

Overall, this study highlights what we’ve all been talking about since the rapid rise of Reasoning Models in 2025: slower, verified reasoning and the growing role of RL →read the paper
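To make the "reasoning as search" framing concrete, here is a minimal toy sketch. It is not the paper's method: the number puzzle, the two actions, and the `toy_prm_distance` scorer are all assumptions made up for illustration. A state is the chain of intermediate values so far, an action appends one step, and a stand-in "process reward model" decides which partial chain to expand next.

```python
import heapq
from typing import Callable, List, Optional, Sequence, Tuple

Chain = Tuple[int, ...]  # the reasoning steps taken so far (MDP state)


def toy_prm_distance(chain: Chain, target: int) -> int:
    """Hypothetical stand-in for a process reward model.

    Lower is better: it simply measures how far the latest step is
    from the target. A real PRM would be a learned scorer.
    """
    return abs(target - chain[-1])


def best_first_search(
    start: int,
    target: int,
    actions: Sequence[Callable[[int], int]],
    max_expansions: int = 1000,
) -> Optional[List[int]]:
    """Expand the most promising partial chain first, guided by the toy PRM."""
    frontier = [(toy_prm_distance((start,), target), (start,))]
    seen = {start}
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, chain = heapq.heappop(frontier)
        if chain[-1] == target:
            return list(chain)
        for act in actions:
            nxt = act(chain[-1])
            if nxt in seen:
                continue  # skip values we have already reached
            seen.add(nxt)
            new_chain = chain + (nxt,)
            heapq.heappush(frontier, (toy_prm_distance(new_chain, target), new_chain))
    return None


# Toy problem: reach 14 from 1 using "+3" and "*2" steps.
ACTIONS = (lambda v: v + 3, lambda v: v * 2)
solution = best_first_search(start=1, target=14, actions=ACTIONS)
```

The search backs out of a tempting but wrong branch and returns to an earlier one, which is the iterative think-and-check behavior the paper is after, just at toy scale.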

New Methods for Boosting Reasoning in Small and Large Models from Microsoft Research

This blog emphasizes one of the most critical frontiers in AI today: making language models, both large and small, truly capable of rigorous, human-like reasoning. It summarizes important breakthroughs, such as:

rStar-Math: Brings deep reasoning capabilities to small models (1.5B–7B parameters) using:

Monte Carlo Tree Search (MCTS),

Process-level supervision via preference modeling,

Iterative self-improvement cycles.

Logic-RL framework: Rewards the model only if both the reasoning process and the final answer are correct.

LIPS: Blends symbolic reasoning with LLM capabilities (neural reasoning) for inequality proofs.

Chain-of-Reasoning (CoR): Unifies reasoning across natural language, code, and symbolic math, dynamically blending all three aspects for cross-domain generalization.

This blog and the studies it covers are worth exploring to get a picture of how model reasoning is evolving →read the paper
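The all-or-nothing reward described for Logic-RL can be sketched in a few lines. This is a simplified illustration, not the framework's actual reward: the `<think>`/`<answer>` tag layout and the exact-match answer check are assumptions, and the "process" check here is only a format check standing in for real verification of the reasoning.

```python
import re

# Assumed response layout: reasoning in <think>, final answer in <answer>.
_RESPONSE = re.compile(r"<think>(.+?)</think>\s*<answer>(.+?)</answer>", re.DOTALL)


def gated_reward(response: str, gold_answer: str) -> float:
    """Return 1.0 only if the process check AND the answer check both pass."""
    match = _RESPONSE.fullmatch(response.strip())
    if match is None:
        return 0.0  # malformed output: no reward at all
    reasoning = match.group(1).strip()
    answer = match.group(2).strip()
    process_ok = len(reasoning) > 0            # stand-in process check
    answer_ok = answer == gold_answer.strip()  # exact-match answer check
    return 1.0 if (process_ok and answer_ok) else 0.0
```

For example, `gated_reward("<think>2 + 2 = 4</think><answer>4</answer>", "4")` pays out, while the same answer without a reasoning block, or correct-looking reasoning with a wrong answer, earns nothing.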


SFT Memorizes, RL Generalizes: A Comparative Study of Foundation Model Post-training

This paper stands out as one of the clearest and most comprehensive empirical studies of how post-training methods affect generalization in both textual and visual reasoning tasks for foundation models. Researchers from HKU, UC Berkeley, Google DeepMind, NYU, and the University of Alberta compared Supervised Fine-Tuning (SFT) and RL, showing that RL generalizes better out of distribution and also improves visual recognition capabilities, while SFT primarily memorizes the training data. Once again, we see evidence that shifting toward RL is essential for robust general-purpose models that adapt better to new tasks →read the paper

The GAN is dead; long live the GAN! A Modern GAN Baseline

Loved this paper! Researchers from Brown University and Cornell University challenge the idea that GANs (Generative Adversarial Networks) are difficult to train by proposing a new baseline, R3GAN. They derive a regularized relativistic GAN loss that leads to stability and convergence, removing the need for heuristics and allowing the use of modern architectures →read the paper
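For intuition, here is a rough sketch of the relativistic part of such a loss: instead of scoring real and fake samples separately, the discriminator is trained on the difference between paired real and fake logits. The function names are illustrative, and the gradient-penalty regularizers that make up the "regularized" part are deliberately left out because they need an autodiff framework. Treat this as a simplified illustration, not the paper's implementation.

```python
import math
from typing import Sequence, Tuple


def softplus(t: float) -> float:
    """Numerically stable log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def relativistic_losses(
    real_logits: Sequence[float], fake_logits: Sequence[float]
) -> Tuple[float, float]:
    """Return (discriminator_loss, generator_loss) over paired samples.

    The discriminator wants real logits above their paired fake logits;
    the generator wants the opposite. Regularization terms are omitted.
    """
    pairs = list(zip(real_logits, fake_logits))
    d_loss = sum(softplus(fake - real) for real, fake in pairs) / len(pairs)
    g_loss = sum(softplus(real - fake) for real, fake in pairs) / len(pairs)
    return d_loss, g_loss
```

When the discriminator cannot tell the two apart (equal logits), both losses sit at log 2; as real logits pull ahead of fake ones, the discriminator loss falls and the generator loss rises.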

Transformers without Normalization

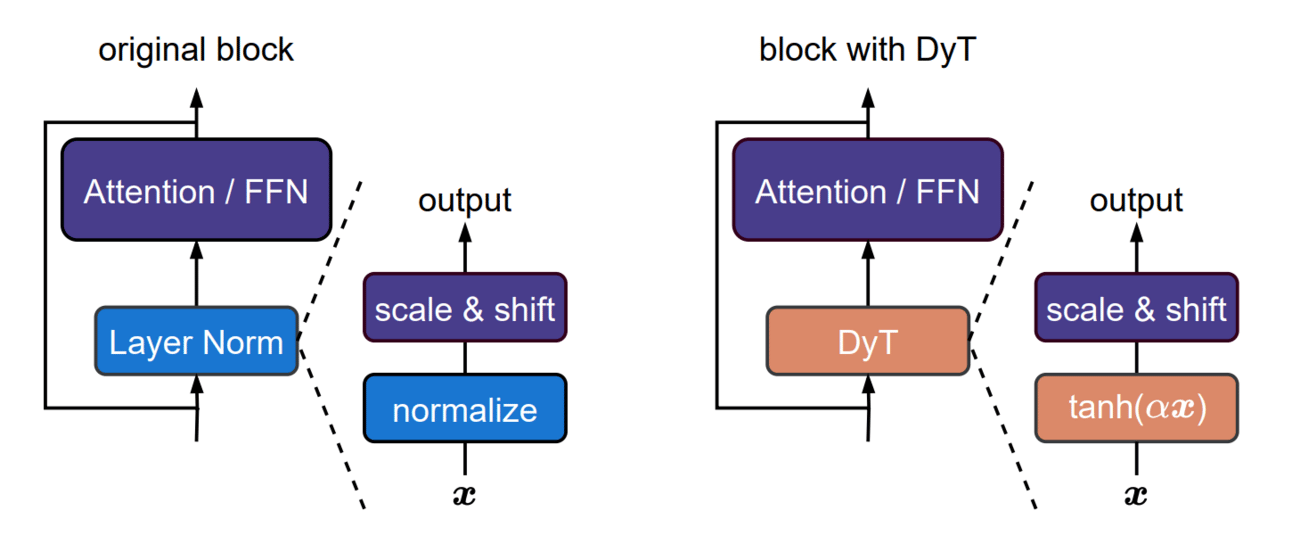

In 2025, even Transformers are changing: this paper by FAIR, NYU, MIT, and Princeton University claims that normalization isn’t essential for training stable and powerful Transformers. The authors propose Dynamic Tanh (DyT) – a super simple and efficient element-wise function that mimics how normalization works.

DyT works just as well as normalization layers (or even better)

It doesn't need to compute activation statistics (no mean or variance)

Requires less tuning

Works for images, language, supervised learning, and even self-supervised learning →read the paper

Image Credit: Transformers without Normalization original paper
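As a rough illustration of how small DyT is, the sketch below applies γ · tanh(α · x) + β to a single token vector in plain Python, with a scalar α and per-channel γ and β. The class name, the list-based representation, and the 0.5 starting value for α are assumptions for this sketch; in a real model these would be learnable tensors inside a deep learning framework.

```python
import math
from typing import List


class DyT:
    """Element-wise Dynamic Tanh: gamma * tanh(alpha * x) + beta."""

    def __init__(self, dim: int, alpha_init: float = 0.5) -> None:
        self.alpha = alpha_init   # single scalar shared by all channels
        self.gamma = [1.0] * dim  # per-channel scale
        self.beta = [0.0] * dim   # per-channel shift

    def __call__(self, x: List[float]) -> List[float]:
        # No mean or variance is computed: each value is squashed on its own.
        return [
            g * math.tanh(self.alpha * v) + b
            for v, g, b in zip(x, self.gamma, self.beta)
        ]


layer = DyT(dim=4)
out = layer([-100.0, -1.0, 0.0, 100.0])
```

Extreme activations are squashed toward ±1 while values near zero pass through almost linearly, which is the normalization-like behavior the paper points to.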

Inside-Out: Hidden Factual Knowledge in LLMs

Do LLMs "know" more facts deep inside their systems than they actually "say" when answering questions? Researchers from Technion and Google Research propose a definition of what this "knowing" means and then put it to the test. They found that LLMs indeed often know more than they reveal (up to 40%), but getting them to "say" it can be surprisingly hard →read the paper

Check out what Norbert Wiener thought about the word “think”.

Insights into DeepSeek-V3: Scaling Challenges and Reflections on Hardware for AI Architectures

DeepSeek models are at the center of attention this year. That’s why their papers are a must-read to stay ahead of what’s happening in AI. This research, in particular, explores how hardware–model co-design helps scale massive LLMs efficiently, tackling core bottlenecks in memory, compute efficiency, and network bandwidth.

What does it unpack?

Multi-head Latent Attention (MLA) for memory savings,

Mixture of Experts (MoE) for balancing computation and communication,

FP8 mixed-precision training,

a novel Multi-Plane Network Topology to reduce infrastructure overhead →read the paper
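To see why caching a compressed latent saves memory, here is a back-of-the-envelope sketch comparing per-token KV cache size for grouped-query attention against a latent-style cache. The formulas are the standard cache-size arithmetic; the layer counts and dimensions in the example are assumed values loosely modelled on publicly released model configs, not figures from this article.

```python
def gqa_cache_bytes_per_token(
    num_layers: int, num_kv_heads: int, head_dim: int, bytes_per_value: int = 2
) -> int:
    """Keys and values are both cached for every KV head in every layer."""
    return 2 * num_kv_heads * head_dim * bytes_per_value * num_layers


def latent_cache_bytes_per_token(
    num_layers: int, latent_dim: int, rope_dim: int, bytes_per_value: int = 2
) -> int:
    """Only one compressed latent plus a small positional part is cached per layer."""
    return (latent_dim + rope_dim) * bytes_per_value * num_layers


# Assumed, illustrative configurations (16-bit values, so 2 bytes each).
gqa_bytes = gqa_cache_bytes_per_token(num_layers=80, num_kv_heads=8, head_dim=128)
latent_bytes = latent_cache_bytes_per_token(num_layers=61, latent_dim=512, rope_dim=64)

print(f"GQA-style cache:    {gqa_bytes / 1000:.1f} KB per token")
print(f"Latent-style cache: {latent_bytes / 1000:.1f} KB per token")
```

Under these assumptions the latent-style cache is several times smaller per token, and the saving multiplies across long contexts and large batches.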

On the Trustworthiness of Generative Foundation Models – Guideline, Assessment, and Perspective

Trustworthiness is a topic that never dies. This paper establishes the first dynamic benchmarking platform that assesses generative AI models across diverse modalities (LLMs, vision-language, text-to-image) and dimensions like truthfulness, safety, fairness, and robustness, moving beyond static evaluation approaches. By aligning technical design with global regulatory trends and ethical standards, this work bridges policy and practice →read the paper
