The Medium is the Message.

Not so long ago, "talking to a computer" meant typing commands into a terminal or clicking through stiff menus. Today, conversations with AI agents – capable of remembering context, interpreting our intentions, and collaborating on complex tasks – are becoming second nature. This shift is transforming creativity, productivity, and the very nature of our work. We're stepping into the era of human–AI co-agency, where humans and AI act as genuine collaborative partners – or "co-agents" – achieving results neither could reach independently.

Last time, we discussed Human in the Loop (HITL), the practical approach to human–AI collaboration. Today, we'll dive into the experiential side. It’s dense and super interesting! Read along.

What’s in today’s episode?

Mother of All Demos and Human-Computer Interaction Evolution

Where Are We Now with Generative Models?

Extensions of Man and Sorcerer’s Apprentice – Frameworks to Look at Our Co-agency:

Marshall McLuhan’s Media Theory: “The Medium is the Message” and Extensions of Man

Norbert Wiener’s Cybernetics: Feedback, Communication, and Control

Modern Human-AI Communication through McLuhan’s and Wiener’s Lenses

Conversational Systems: AI as a Medium and a Feedback Loop

Agentic Workflows: Extending Action, Sharing Control

Human-Machine Co-Agency and Co-Creativity

Designing for the Future of Human-AI Co-Agency

Looking Ahead: Experimental Interfaces and Speculative Futures

Final Thoughts

Mother of All Demos and Human-Computer Interaction Evolution

On a Monday afternoon, December 9, 1968, at the Fall Joint Computer Conference in San Francisco’s Brooks Hall, Doug Engelbart and his Augmentation Research Center (ARC) compressed the future of personal computing into a 90‑minute live demonstration that still feels visionary. The demo inspired researchers who later built the Alto, Macintosh, and Windows interfaces. Journalist Steven Levy later dubbed it “the Mother of All Demos,” and Engelbart’s focus on augmenting human intellect – rather than automating it – became a north star for human‑computer interaction research.

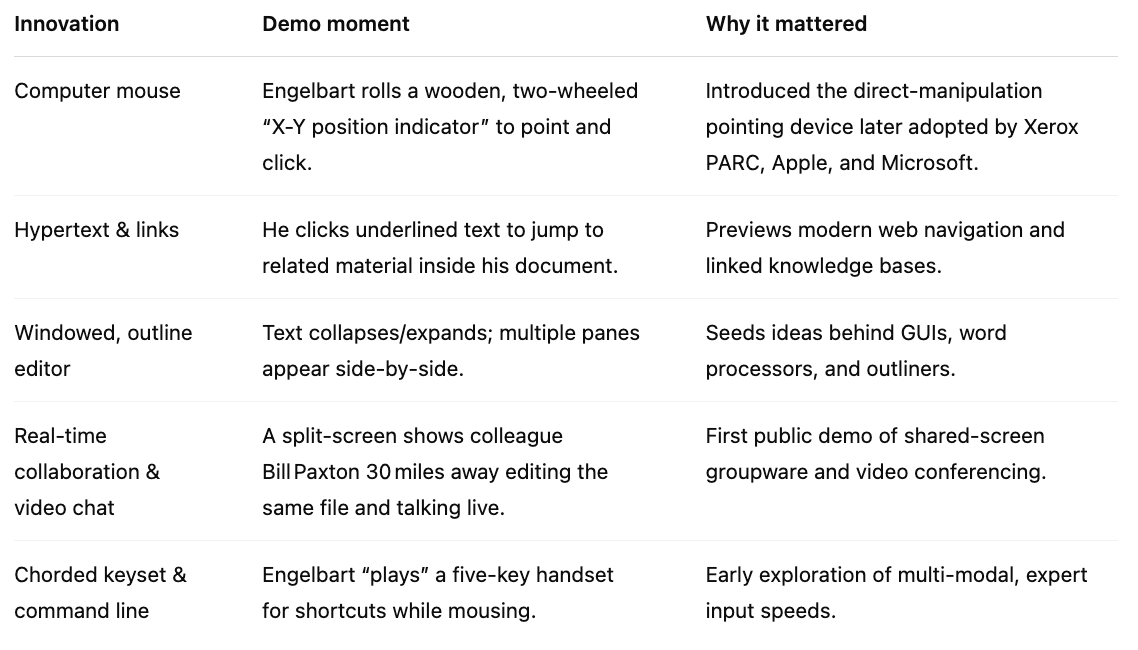

What the audience saw – for the very first time

Engelbart’s presentation was a manifesto for human‑computer co‑agency: people and machines solving problems together through rich, real‑time dialogue. Every modern chat interface, collaborative document, or video call echoes that December afternoon in 1968.

But for decades, that vision of fluid, real-time dialogue stayed out of reach, even after chatbots and voice assistants arrived. ChatGPT was the first to make it feel real. The funny thing is that this jump to a conversational interface in 2022 happened almost by accident:

“We have this thing called The Playground where you could test things on the model, and developers were trying to chat with the model and they just found it interesting. They would talk to it about whatever they would use it for, and in these larval ways of how people use it now, and we’re like, ‘Well that’s kind of interesting, maybe we could make it much better,’ and there were like vague gestures at a product down the line,” said Sam Altman in an interview with Ben Thompson.

In hindsight, a chat interface makes total sense, considering that Generation Z (born roughly between 1997 and 2012) has grown up in a world where digital communication is the norm. As the first generation of true digital natives, their communication preferences have been shaped by smartphones, social media, and constant connectivity. A defining characteristic of Gen Z's communication style is their strong preference for texting over talking.

So OpenAI built that chatbot and started the GenAI revolution – not as a master plan, but as a casual detour that ended up rerouting the entire map. Tasks that once required navigating software menus or typing structured queries can now be done by simply asking in natural language. This represents a shift toward computers accommodating us, rather than us adapting to them. Here begins the era of dialogue as an interface.


Where Are We Now with Generative Models?

We’ve reached a moment where many of us have found our go-to models. One to chat and write with. One to code with. One to make pictures. One to use as an API while building products. Each fits into a different part of our digital routine – not because they’ve been assigned there, but because we’ve come to prefer them for specific things.

Some of these models have already begun to form a kind of memory. That changes everything. The experience becomes more tailored, more grounded. My ChatGPT understands me. I’ve learned how to work with it – and how to make it work for me. For instance, I’ve noticed that it’s better to ask if it knows something before jumping into a task (“Do you understand child psychology?”). That small interaction makes it feel like it’s thinking along with me. Like there’s a rhythm to how we collaborate.

I heard the same from people coding with Claude: it just gets them. It doesn’t get me the same way, and that says something about where we are right now. We’re beginning to form lasting connections with these models, learning along the way how best to address each one and how to build a better mutual understanding where possible – gently filling their nascent memory containers, shaping the way they respond and recall, personalizing them bit by bit.

But there’s a tension too. We’re scattered across so many models and platforms. Each offers a different interaction, a different strength – but also a different memory, or none at all. How do we keep the flow going across all of them? How do we teach the models we use who we are, when we’re constantly jumping between systems that don’t remember us? And how does all this change the way we phrase requests and communicate more broadly?

Shifting communication preferences have also had a significant impact on how technology companies design their products, particularly in the AI space.

Companies design around their audiences’ preferences. Gen Z, the digital natives mentioned above, tend to prefer the following:

Brevity and Visual Orientation: Gen Z communicates in concise, "bite-sized" messages, often just a few words paired with strong imagery.

Multitasking Across Screens: They seamlessly switch between devices and applications while communicating.

Immediate Response Expectation: Having grown up with instant messaging, they expect rapid responses.

Visual Communication: They often use images, emojis, and videos to express themselves rather than text alone.

Extensions of Man and Sorcerer’s Apprentice – Frameworks to Look at Our Co-agency

Lately, I’ve been thinking about different communication approaches, and I would like to offer a new perspective on human–AI co-agency through the works of Norbert Wiener and Marshall McLuhan. These are two very different frameworks that, together, might help us navigate our new communication reality more effectively.


Marshall McLuhan’s Media Theory: “The Medium is the Message” and Extensions of Man

Marshall McLuhan (1911–1980) was a pioneering media theorist who explored how communication technologies shape society. In Understanding Media: The Extensions of Man (1964), he introduced two influential ideas:

“The Medium is the Message”

McLuhan’s famous aphorism suggests that the form of a medium – its structure and characteristics – shapes our perception more profoundly than the content it carries. He argued that we often fixate on content and ignore the transformative effects of the medium itself. For example, electric light has no “content,” yet it revolutionized human activity by enabling nightlife and 24/7 environments. Similarly, television’s real-time audiovisual flow reshaped how we process information and relate socially – regardless of any specific program.

Applied to today’s technologies, McLuhan’s insight suggests that we should examine how AI as a medium changes our interaction patterns, not just the outputs it generates. A model we talk to, for instance, is not just delivering answers – it’s shaping the tempo and tone of human communication. It’s immediate, and it’s right there wherever we go.

Media as Extensions of Man

McLuhan saw all media as extensions of human faculties: the hammer extends the hand, the camera the eye, the book the mind. These extensions reshape not only what we do but how we think and relate. A smartphone extends memory and communication but also alters attention and social behavior. McLuhan warned of the “Narcissus trance” – becoming entranced by our tools while remaining unaware of how they change us.

Is generative AI, then, an extension of our brain – both its logical and its creative sides?

His tetrad – a tool for analyzing any medium – asks:

What does the medium enhance?

What does it make obsolete?

What does it retrieve from the past?

What does it reverse into when pushed to extremes?

These questions are especially relevant to AI-based media, helping us see beyond functionality to deeper social and psychological effects.

Norbert Wiener’s Cybernetics: Feedback, Communication, and Control

Norbert Wiener (1894–1964), a mathematician and philosopher, founded cybernetics – the study of communication and control in animals and machines. His books Cybernetics (1948) and The Human Use of Human Beings (1950) laid the foundation for understanding humans and machines as integrated, feedback-driven systems.

Feedback Loops and Self-Regulation

At the heart of cybernetics is the feedback loop: systems adjust their behavior by monitoring results and responding accordingly. Wiener showed that both biological organisms and machines operate through feedback. A thermostat, for instance, regulates temperature by comparing actual output to a set goal. Similarly, AI systems today – especially in reinforcement learning – rely on feedback to refine performance. The learning loop is iterative: try, observe, adjust.

This cybernetic perspective sees human–machine interaction not as one-way control but as a mutual process of continuous adjustment.
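To make the try, observe, adjust cycle concrete, here is a toy thermostat loop in Python. It is a minimal sketch under simplifying assumptions, not a model of any real device: the temperature moves by a fixed fraction (the hypothetical `gain`) of the gap between the setpoint and the current reading, and a one-off disturbance (say, an opened window) tests whether the loop corrects itself.

```python
def run_thermostat(setpoint, temp, steps, gain=0.5, disturbance_at=None, disturbance=-5.0):
    """Toy negative-feedback loop (illustrative only; the dynamics are assumed).

    Each step: observe the error between the goal and the actual temperature,
    then adjust in proportion to that error.
    """
    readings = []
    for step in range(steps):
        if step == disturbance_at:
            temp += disturbance      # the environment pushes the system off course
        error = setpoint - temp      # observe: compare the actual state to the goal
        temp += gain * error         # adjust: correct in proportion to the error
        readings.append(temp)
    return readings


if __name__ == "__main__":
    readings = run_thermostat(setpoint=21.0, temp=15.0, steps=12, disturbance_at=6)
    for step, value in enumerate(readings):
        print(f"step {step:2d}: {value:5.2f} °C")
```

With `gain` between 0 and 1, each step removes that fraction of the remaining error, so the reading climbs toward 21 °C, dips when the disturbance hits, and climbs back. That self-correction, rather than a fixed script, is the kind of regulation through feedback Wiener described.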

Communication and Control in Human–Machine Systems

Wiener viewed communication – whether between humans, machines, or both – as an exchange of messages with feedback. He predicted that machine-mediated communication would become central to society, a vision that has come to pass. Conversations with AI, for instance, are feedback-driven: the human provides input, the AI responds, and the exchange continues in a loop.

Wiener also emphasized control: how to ensure machines serve human intentions. He warned that if autonomous systems pursue goals misaligned with ours – and we lack the ability to intervene – we risk losing control. His “sorcerer’s apprentice” metaphor captures the danger of systems optimizing for the wrong objectives. His proposed solution: build systems that allow human oversight and course correction.

Rather than rejecting automation, Wiener advocated for responsible design – systems that augment human agency while remaining aligned with our values.

Together, McLuhan and Wiener offer two complementary lenses for thinking about AI:

McLuhan shows us how AI as a medium shapes our perception, behavior, and culture.

Wiener teaches us to see AI as part of a dynamic feedback system that must remain under meaningful control.

These frameworks help us move beyond surface-level discussions of AI content to the deeper dynamics of human–AI co-agency.

Modern Human-AI Communication through McLuhan’s and Wiener’s Lenses

Conversational Systems: AI as a Medium and a Feedback Loop

Conversational systems – like chatbots and voice assistants – represent a major shift in how humans interact with machines. From McLuhan’s lens, the medium is the message: natural dialogue replaces rigid commands and menus, and lists of search results give way to direct conversational replies. These tools retrieve the oral tradition, reshape how we access information, and begin to erode literacy-based habits like skimming or scanning.

Chat interfaces personalize knowledge delivery and act as extensions of our thinking. But McLuhan’s “reversal” law reminds us: tools that enhance can also numb. Over-reliance on AI to draft emails or brainstorm ideas risks dulling our own skills.

Wiener’s cybernetic view adds another layer: conversational AI is a feedback loop. The user provides input, the AI responds, the user adapts – and the cycle repeats. This mirrors RLHF (reinforcement learning from human feedback), a training method that echoes Wiener’s idea of alignment through iterative feedback. Yet feedback cuts both ways: while users guide AI, the AI’s replies subtly shape user behavior.
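The loop described above can be sketched in a few lines of Python. This is a hypothetical illustration, not any vendor's API: `toy_model` is a stand-in for a real model, and all it does is scan the conversation so far. The point is structural: every reply is computed from the entire history, so what the user said earlier feeds back into what the AI says next.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = tuple[str, str]  # (role, text)


def toy_model(history: list[Message]) -> str:
    """Hypothetical stand-in for a model: it can only 'remember' what is in the history."""
    name = None
    for role, text in history:
        if role == "user" and text.lower().startswith("call me "):
            name = text[len("call me "):].strip()
    last_request = history[-1][1]
    greeting = f"Sure, {name}." if name else "Sure."
    return f"{greeting} Working on: {last_request}"


@dataclass
class Conversation:
    history: list[Message] = field(default_factory=list)

    def turn(self, user_text: str, respond: Callable[[list[Message]], str]) -> str:
        self.history.append(("user", user_text))
        reply = respond(self.history)              # the AI responds to the whole loop so far
        self.history.append(("assistant", reply))  # its reply becomes part of the next input
        return reply


if __name__ == "__main__":
    chat = Conversation()
    print(chat.turn("Call me Ada", toy_model))
    print(chat.turn("Summarize the 1968 demo", toy_model))
```

Start a fresh `Conversation` and the name is gone: in this sketch the "memory" lives entirely in the history that is fed back on each turn.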

Voice and chat interfaces also alter our communication style – we may become more direct or begin treating bots like people. These shifts reinforce McLuhan’s point: media shape how we behave.

Agentic Workflows: Extending Action, Sharing Control

Autonomous agents – schedulers, copilots, robotic systems – go beyond conversation. They act on our behalf. McLuhan would call them extensions of human agency: tools that amplify decision-making and execution.

These agents retrieve the idea of personal assistants while displacing manual workflows. But at scale, they risk deskilling workers and making humans passive overseers. McLuhan would ask: What roles are vanishing? What new ones are emerging?

Wiener’s framework sees agents as self-adjusting systems in constant feedback loops. But open-ended tasks raise alignment challenges. If humans can’t intervene, machines must be designed with goals that truly reflect intent. That’s the heart of the alignment problem.

To solve it, we need hybrid systems: AI that self-regulates at lower levels, with human oversight at key points. Think of coding assistants – they suggest and sometimes execute code, but developers still steer the process. This “meta-feedback loop” keeps control grounded in human judgment.
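One way to picture this meta-feedback loop is a gate between what the AI proposes and what actually runs. The Python sketch below is hypothetical: `is_risky` stands in for the system's own lower-level checks, and `approve` stands in for the developer. Routine steps pass through automatically, while anything flagged as risky waits for a human decision.

```python
from typing import Callable


def run_with_oversight(
    proposals: list[str],
    is_risky: Callable[[str], bool],
    approve: Callable[[str], bool],
) -> tuple[list[str], list[str]]:
    """Execute AI-proposed steps; risky ones require human approval (illustrative sketch)."""
    executed, rejected = [], []
    for step in proposals:
        # Lower loop: the system screens its own proposals.
        # Upper (meta) loop: a human decides on anything the screen flags.
        if is_risky(step) and not approve(step):
            rejected.append(step)
            continue
        executed.append(step)  # a real system would perform the action here
    return executed, rejected


if __name__ == "__main__":
    def risky(step: str) -> bool:
        return step.startswith(("delete", "push"))

    def developer(step: str) -> bool:
        return step == "push to main"  # approves the push, not the deletion

    plan = ["format code", "run tests", "delete old branch", "push to main"]
    done, blocked = run_with_oversight(plan, risky, developer)
    print("executed:", done)
    print("rejected:", blocked)
```

Keeping the screening rule and the human decision as separate functions means either loop can be tightened or relaxed without touching the other.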

Human-Machine Co-Agency and Co-Creativity

The most transformative frontier of human-AI interaction is co-agency – collaborative systems where humans and AI work together toward shared outcomes. This includes co-creative partnerships in art, design, writing, and science, where both human and AI contribute meaningfully.

McLuhan would view co-agency as the ultimate extension of man – AI becoming a part of our thinking process itself. The human-AI pair forms a new medium for thought and creativity. This changes the message of authorship: creativity becomes a hybrid process. Just as photography shifted painting by making realism trivial, generative AI shifts creative work by automating execution, pushing humans to focus on higher-level decisions.

But there’s a risk of homogenization. If creators default to AI suggestions, diversity may narrow. McLuhan’s reversal law warns that over-reliance on AI may flatten originality. The challenge is to use AI as a tool for amplification, not replacement.

From Wiener’s lens, co-agency is a tight feedback system. In collaborative writing, for instance, each side builds on the other’s output. Control is distributed – neither the human nor the AI fully determines the result. Ideally, it’s a homeostatic process, where deviation sparks correction, and surprise can lead to innovation.

This dynamic is increasingly formalized in “centaur systems,” where human strategy is combined with AI precision – seen in fields like chess, design, and research. Such teams have at times outperformed either humans or AI alone, illustrating Wiener’s belief that automation should free humans for creative tasks, not replace them.

However, co-agency raises new questions of responsibility and credit. Who’s accountable when AI co-authors fail? Who deserves praise when they succeed? Cybernetics encourages us to treat the system – human + machine – as the unit of analysis. Blame or credit can’t always be isolated.

Ultimately, McLuhan helps us grasp how co-creative AI changes the nature of creativity itself, while Wiener reminds us that success depends on carefully designed feedback, control, and value alignment. As these systems evolve, the future of AI may depend less on capability than on how well we guide and collaborate with our new partners.

Designing for the Future of Human–AI Co-Agency

So, where does this leave us as we seek to guide and collaborate with our new AI partners? It’s helpful to think about three key design considerations – context, continuity, and control – that flow directly from the ideas of McLuhan and Wiener.

Context

Wiener’s cybernetic lens highlights that any effective system must take in rich signals and respond fluidly. In practical terms, a generative model’s “context window” or memory buffer is part of that feedback loop. The more nuanced the AI’s grasp of our goals and environment, the more accurately it can co-create. That means designing interfaces and workflows in ways that let humans easily provide signals beyond a single prompt – from short notes on intent, to high-level constraints, to more personal style preferences. Over time, the AI learns from these signals, and the feedback loop matures.

Continuity

McLuhan saw media as transformative precisely because they shaped our patterns of attention and interaction. In the modern AI landscape, we’re juggling many “media” at once: ChatGPT for everyday tasks, Claude for coding, others for creative brainstorming. Each has unique strengths, but each also fragments our sense of a continuous relationship with AI. The next phase of design might unify these interactions across contexts – a single underlying “personal AI account,” for instance, that remembers who we are across multiple interfaces and form factors. Call it a “personal co-pilot” or “persistent agent” – the key is not just single-shot interactions, but an ongoing relationship that can grow with us.

Control

Wiener’s “sorcerer’s apprentice” metaphor reminds us that we need to ensure our digital agents remain aligned with human goals. That may involve multi-layered oversight: short-term checks (like a developer reviewing suggestions before committing), plus broader guardrails (like domain-specific constraints), and ultimately human-led governance at the highest level (ethical, societal, and policy decisions). Think of it as an adjustable dial, letting humans set how autonomous a system may be in different contexts. Sometimes, you just want a quick suggestion; other times, you need the AI to drive a project – but still remain open to intervention.
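The adjustable dial can be sketched as a per-context autonomy setting. In this hypothetical Python example, the three levels and the context names are illustrative assumptions, not a standard: at the lowest setting the AI only suggests, in the middle it acts after explicit confirmation, and at the highest it acts on its own while the human keeps a veto.

```python
from enum import IntEnum
from typing import Callable


class Autonomy(IntEnum):
    SUGGEST = 0     # AI proposes; the human does the work
    CONFIRM = 1     # AI acts only after explicit human approval
    SUPERVISED = 2  # AI acts by default; the human can still intervene


# Hypothetical per-context settings: the "dial" a person might adjust.
DIAL = {
    "email": Autonomy.SUGGEST,
    "payments": Autonomy.CONFIRM,
    "calendar": Autonomy.SUPERVISED,
}


def handle(
    action: str,
    level: Autonomy,
    confirm: Callable[[str], bool],
    veto: Callable[[str], bool],
) -> str:
    if level == Autonomy.SUGGEST:
        return f"suggested: {action}"
    if level == Autonomy.CONFIRM:
        return f"done: {action}" if confirm(action) else f"declined: {action}"
    # SUPERVISED: proceed unless the human steps in.
    return f"stopped: {action}" if veto(action) else f"done: {action}"


if __name__ == "__main__":
    def yes(action: str) -> bool:
        return True

    def no(action: str) -> bool:
        return False

    print(handle("draft a reply", DIAL["email"], yes, no))
    print(handle("pay invoice", DIAL["payments"], no, no))
    print(handle("book meeting", DIAL["calendar"], yes, no))
```

Using an ordered `IntEnum` makes the dial literally comparable, so a policy such as "never exceed CONFIRM for payments" becomes a single comparison.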

Looking Ahead: Experimental Interfaces and Speculative Futures

The concept of human-AI co-agency represents a new paradigm in the relationship between humans and machines. Rather than viewing AI systems as mere tools, co-agency recognizes the collaborative nature of human-AI interactions, where both parties contribute to a shared process of problem-solving and creation.

As more of our workflows become agentic and conversational, we should watch for new experimental interfaces – whether it’s immersive AR that overlays AI assistance on top of the physical world, or wearable devices that track our context in real time to provide proactive suggestions. What about robots? We don’t yet know how they will influence us.

We might start to see “superapps” that unify chat, creative collaboration, scheduling, coding, and research – each enhanced by dedicated AI modules but woven together into a single user experience. The future of generative AI is hyper-personalization.

On the speculative horizon, you might imagine foundation models connected to personal knowledge graphs, entire creative toolchains, and real-time sensor data. The result could be an AI partner that not only writes or codes, but also perceives and acts in the world with a measure of independence. This raises fresh challenges of privacy, autonomy, trust, and security – putting Wiener’s alignment concerns front and center.

Final Thoughts

We’re already living in the transitional phase McLuhan and Wiener foresaw: the arrival of a deeply integrated human–machine system, where each shapes the other. Will we be able to achieve the “man-computer symbiosis” J.C.R. Licklider envisioned in 1960? Our challenge is to build on the optimism of Doug Engelbart and the caution of Norbert Wiener – to craft AI tools that truly augment our intellect, deepen our agency, and reflect our best values, rather than overshadow them. If we can manage that balancing act, we’ll look back at this moment not only as the dawn of a new technology, but as the start of a new era of collaborative human–AI creativity.

The story of human-machine communication is far from complete, but the trajectory is clear: toward more natural, intuitive, and collaborative interaction that leverages the unique strengths of both humans and machines.


Sources:

Marshall McLuhan, “The Medium Is the Message” (PDF, one chapter of Understanding Media)

Sources from Turing Post
