🎙️Spencer Huang: NVIDIA’s Big Plan for Physical AI

Simulation, World Models, and the 3 Computers. NVIDIA’s Robotics stack well explained

When robots move into the real world, speed and safety come from simulation!

In his first sit-down interview, Spencer Huang – NVIDIA’s product lead for robotics software, and yes, Jensen Huang’s 35-year-old son – talks about his role at NVIDIA, a flat organization where “you have access to everything.” We discuss how open source shapes NVIDIA’s robotics ecosystem, how robots learn physics through simulation, and why neural simulators and world models may evolve alongside conventional physics. I also ask him what’s harder: working on robotics or...

Watch to learn a lot about robotics, NVIDIA, and its big plans ahead. It was a real pleasure chatting with Spencer.

Subscribe to our YouTube channel, or listen to the interview on Spotify / Apple

We discuss:

NVIDIA’s big picture

The “three computers” of robotics – training, simulation, deployment

Isaac Lab, Arena, and the path to policy evaluation at scale

Physics engines, interop, and why OpenUSD can unify fragmented toolchains

Neural simulators vs conventional simulators – a data flywheel, not a rivalry

Safety as an architecture problem – graceful failure and functional safety

Synthetic data for manipulation – soft bodies, contact forces, distributional realism

Why the biggest bottleneck is robotics data, and how open source helps reach a baseline

NVIDIA’s “Mission is Boss” culture – cross-pollinating research into robotics

And of course – books

This is a ground-level look at how robots learn to handle the messy world – and why simulation needs both fidelity and diversity to produce robust skills. It’s also a good look into NVIDIA’s mindset.

This transcript was edited by GPT-5. As always, it’s better to watch the full video. ⬇️

Ksenia Se:

Hi Spencer, and thank you so much for joining me for the interview.

Spencer Huang:

Thank you. Very excited.

Ksenia:

Through your eyes, I want to see the big picture of what NVIDIA is working on. My first question is – NVIDIA is putting robotics, simulation, and Omniverse at the center of the industrial AI push, with Omniverse and OVX powering digital-twin AI factories, and Isaac Lab training robots in simulation. How do you see this ecosystem evolving, and what is the role of simulation in it?

Spencer:

Maybe we’ll take a step back for a minute so I can give you that big picture, at least from the robotics and physical AI side. I’m sure you’re familiar with the three-computer system.

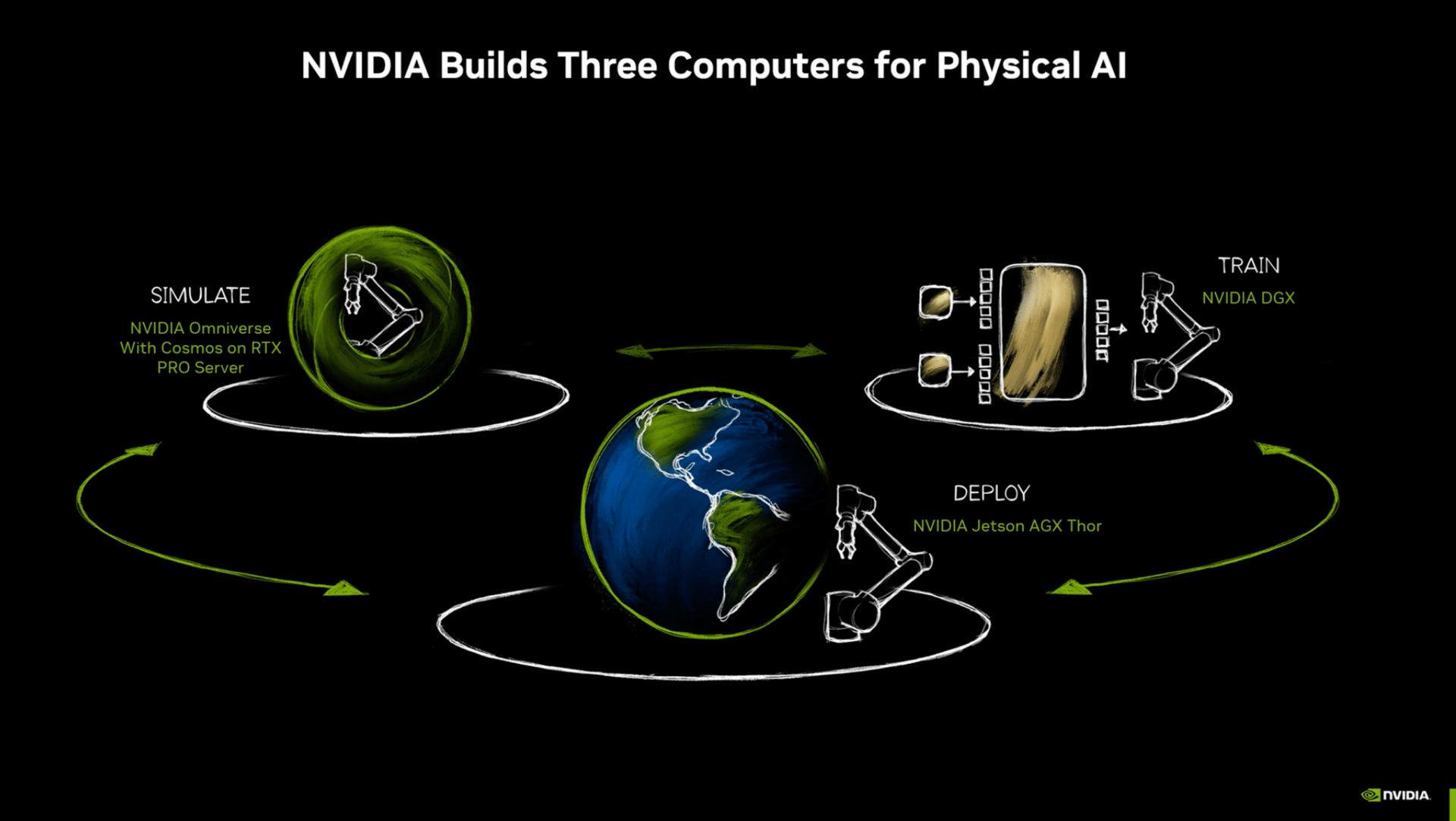

Image Credit: NVIDIA

For robotics, it’s extremely important that we leverage all three, because only through all three can we make real progress – especially as we’re trying to bring robotics to do valuable tasks for reindustrialization and reshoring back in the States.

So the first computer, DGX, is what allows you to train a robot brain. It’s where you train perception models and control policies – all the AI agents you might need when deploying robots. And we have to remember, robots aren’t just about skills. There’s an entire stack around them – perception, safety, navigation.

The second is OVX, the Omniverse computer for simulation. We need a way not only to develop and train robots, but to test them inside simulated environments before deploying them in the real world. That’s important for safety, of course, but also for scale. Humans can only learn so fast – one lifetime at a time – but we can’t wait twenty years for robots to learn. So simulation lets us speed that up. We can run things faster than real time.

And once you’ve trained those skills, you want to put them into the same stack that will run in the real world and test that stack in simulation. That part doesn’t have to be faster than real time – you just want it to be high fidelity, as close to reality as possible. So you can be confident that when you deploy, it will behave the way you expect.

And the last computer is the one that actually goes into the real world – the Jetson or IGX platform that runs the robot itself. That’s the compute inside the physical robot, the one that runs the brain, the perception stack, and all the subsystems. As we move toward functional safety, like in autonomous vehicles, we’ll also need graceful failure – a way for systems to stay safe even if one component fails.

A car spends all of its time trying not to hit the world, whereas a robot spends all of its time trying to hit the world just right. Once robots are helping us cook, clean, or care for people, that has to be safe. We need simulation not only for data generation, training, and testing, but also as that last gate of validation prior to deployment.
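Editor’s sketch: the idea of graceful failure can be illustrated with a small supervisor that sits between a policy and the actuators. This is only a minimal sketch under stated assumptions, not NVIDIA’s safety architecture: `SafetySupervisor`, `num_joints`, and `max_abs_command` are hypothetical names, and the "safe" action is assumed to be a zero-velocity stop.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class SafetySupervisor:
    """Wraps a policy and substitutes a safe stop when the policy misbehaves."""

    policy: Callable[[Sequence[float]], List[float]]
    num_joints: int
    max_abs_command: float = 1.0  # assumed per-joint limit, arbitrary units
    faults: int = 0               # how many times the fallback was used

    def _safe_stop(self) -> List[float]:
        # Assumption: commanding zero velocity is the safe state for this robot.
        return [0.0] * self.num_joints

    def _is_valid(self, command: Sequence[float]) -> bool:
        if len(command) != self.num_joints:
            return False
        return all(
            math.isfinite(c) and abs(c) <= self.max_abs_command for c in command
        )

    def act(self, observation: Sequence[float]) -> List[float]:
        try:
            command = self.policy(observation)
        except Exception:
            # The policy crashed: degrade to a stop instead of propagating the failure.
            self.faults += 1
            return self._safe_stop()
        if not self._is_valid(command):
            # The policy returned NaN, out-of-range, or wrongly sized output.
            self.faults += 1
            return self._safe_stop()
        return list(command)
```

The point of the design is that one failing component (here, the learned policy) cannot push an unchecked command to the hardware; the rest of the stack keeps running and the fault is counted for later inspection.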

Ksenia:

What you describe sounds very early-stage. When do you see this all coming together?

Spencer:

Some of it’s early, but a lot is moving faster than people realize. We’ve had the luxury of building on what the autonomous vehicle teams have done for the last several years – collecting and validating data, generating synthetic data, and understanding how to measure its quality. Those lessons transfer directly into robotics.

But cars only needed visual realism. Robots need physical realism. If I have a vase full of flowers, my perception stack can identify it. But once I want to touch it, the simulation has to know its weight, its material, its friction – all those physical properties cars never needed.

Now we’re building those properties in, because manipulation requires it. And it’s not just about being exact like the real world – it’s about capturing the distribution of the real world. Maybe the vase is half full of water, maybe it’s a third full. Reinforcement learning depends on that variability.

That’s what we’re doing with Isaac Lab – using reinforcement learning and domain randomization to teach robots through trial and error across many simulated worlds. A robot might learn to walk on wood, carpet, ice, sand, snow – all randomized. So when it meets snow in the real world for the first time, it just becomes universe N + 1.

That’s what robustness means. That’s the challenge we’re solving now – how to train robots that can do useful, dexterous tasks, but are also resilient, not brittle.
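Editor’s sketch: domain randomization, as described above, amounts to sampling a fresh set of world parameters for every simulated environment. The snippet below is a minimal, simulator-free illustration and does not use the Isaac Lab API; the parameter names and sampling ranges are assumptions chosen only to mirror the vase and terrain examples.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WorldParams:
    ground_friction: float  # friction coefficient of the floor
    vase_mass_kg: float     # mass of the empty vase
    water_fill: float       # fraction of the vase filled with water, 0..1


# Assumed sampling ranges, for illustration only.
FRICTION_RANGE = (0.05, 1.0)   # roughly ice-like to carpet-like
VASE_MASS_RANGE = (0.3, 1.5)
FILL_RANGE = (0.0, 1.0)


def sample_world(rng: random.Random) -> WorldParams:
    """Draw one randomized world; each training episode would get its own."""
    return WorldParams(
        ground_friction=rng.uniform(*FRICTION_RANGE),
        vase_mass_kg=rng.uniform(*VASE_MASS_RANGE),
        water_fill=rng.uniform(*FILL_RANGE),
    )


def sample_worlds(num_worlds: int, seed: int = 0) -> List[WorldParams]:
    """Draw a reproducible batch of randomized worlds."""
    rng = random.Random(seed)
    return [sample_world(rng) for _ in range(num_worlds)]


worlds = sample_worlds(1000)
print(worlds[0])
```

A policy trained across all of these worlds never sees a single "true" vase or floor, which is what lets an unseen real-world condition look like just one more sample from the same distribution.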

Ksenia:

During the keynote, Jensen Huang said that robots understand physics. Do you agree with that?

Spencer:

I do. So, in reinforcement learning, just as I was talking about before, when we randomize across domains and characteristics, one of those characteristics is physics.

You’re standing on carpet right now, right? There’s a good amount of friction – you’re not going to slip unless you’re wearing really fancy shoes. But on ice, there’s almost no friction. So if I randomize, inside the world where these robots are learning, the friction of the ground, they start learning how to adapt across those different environments. Slippery, non-slippery – it all breaks down to basic physics. And that’s exactly what we’re randomizing.

So yes, robots do understand physics in that sense.
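Editor’s sketch: to see why randomizing friction matters, consider the textbook case of a body sliding to a stop under Coulomb friction, where the stopping distance is v² / (2·μ·g). The friction coefficients below are assumed, illustrative values, not measurements.

```python
G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance(speed_m_s: float, friction_coefficient: float) -> float:
    """Distance a sliding body travels before stopping: v^2 / (2 * mu * g)."""
    if friction_coefficient <= 0:
        raise ValueError("friction coefficient must be positive")
    return speed_m_s ** 2 / (2 * friction_coefficient * G)


# Assumed, illustrative coefficients for three surfaces.
surfaces = {"carpet": 0.8, "wood": 0.4, "ice": 0.05}

for name, mu in surfaces.items():
    distance = stopping_distance(1.5, mu)
    print(f"{name}: stops after {distance:.2f} m")
```

The same initial speed leads to very different outcomes depending on the surface, so a controller that only ever trained on one coefficient has no reason to behave sensibly on another.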

Now, what Jensen was really alluding to is when we move into manipulation – when we want robots that are fully autonomous and generalizable. Robots that can go into any environment and perform a variety of tasks. They don’t just understand the physics of an object – they understand the consequences of that physics.

If I throw an apple, it drops. If I push something off the edge of a table, it falls. Those are things a robot has to learn through its own training.

When I gave the example of walking, imagine now we have to do that for every task. Each task requires its own understanding of physics.

When humans learn, especially babies, they’re constantly experimenting with physics. They drop things, they throw things, they notice what’s heavy and what’s light. They develop intuition about gravity, force, and motion. Robots have to go through the same process – only through experience and feedback. It’s a semantic understanding, too. For humans, that’s intuitive. For machines, it’s much harder.

Ksenia:

I’m surprised about that – because physics still feels like the missing piece. World models are just starting to emerge, and there’s still so much research happening. If you say robots understand physics, what’s the bottleneck now?

Spencer:

You brought up a great term – world model.

When I talk about reinforcement learning, we’re usually thinking of a conventional simulator – a physically grounded one, like Isaac Sim. On the other hand, you have neural simulators, which are world models. That’s where a lot of research is heading right now.

A world model has this incredible advantage: it can very quickly generate diverse environments. But it’s still not physically perfect.

For example, if I try to simulate a soft object – a cloth or a water balloon – accurately modeling soft-body dynamics is still extremely hard in a traditional simulator. So instead, you might use a world model to approximate that.

The challenge is that these neural simulators don’t yet have enough grounded physical data. They can hallucinate. Their physics isn’t always consistent. So right now, they work for some things, but not for others.

The interesting question is: how do we get conventional simulators and world models to work together, as part of a data flywheel?

You can capture tons of data from real robots and use that to train world models. You can also use Isaac Sim or Omniverse to generate more data – contact data, physics data, visual and non-visual data – and feed that into those neural simulators.

Eventually, these world models shouldn’t just output images or scenes – they should output actions, contact forces, the kind of signals you get from a physics engine. Then they can complement each other.

World models solve a big pain point: roboticists are not digital artists. They don’t want to spend days hand-modeling every object or scene. We’re limited by what 3D assets we have. So a lot of our research teams are working on neural reconstruction – scanning real objects, bringing them into simulation, and using AI agents to add physical properties so they can be manipulated or articulated.

On the other side, world models – like what we’ve seen in recent diffusion-based work from DeepMind or others – can take a single RGB frame and, conditioned on text or action, generate a consistent 3D world that evolves over time.

That’s what we mean by a neural simulator. It’s unlocking a new way to do data generation, augmentation, or policy evaluation. And maybe eventually we’ll even be able to do effective post-training inside those neural worlds.

But they have to mature side by side. Traditional simulators and neural simulators are going to grow up like siblings – each learning from the other.

Ksenia:

You explained that very clearly. But your background is different – how did you get into robotics and simulation? And what does your role actually involve today?

Spencer:

Sure. So my current role – I lead NVIDIA’s robotics software products. That includes everything under the Isaac family, GR00T for robot orchestration, OSMO for workflow scheduling and scaling workloads across heterogeneous compute, and now a lot of collaboration with the Cosmos team as we start exploring neural simulators.

My background is pretty nontraditional. I’m kind of a case study for how much you can learn outside of school. I grew up in a very technical family, and one of my biggest interests early on was distributed computing – building servers, networks, that kind of stuff. I always loved software development, and I was always around robotics teams, so it was never totally foreign.

In my twenties, I wanted to try something different – see what I could do on my own (Editor’s note: Spencer ran a bar in Taipei, and at some point he was also a wedding photographer in Japan). But after a while, I realized I was spending more time coding than bartending, so it felt like the right time to come back to tech. I started diving deeper into cloud systems and cloud distribution, especially for robotic simulation, which sits right at the intersection of everything I enjoy. It wasn’t a huge leap if you knew me, but if you just scroll through my LinkedIn, it probably reads a little weird – that’s fair.

Ksenia:

Yeah, everyone checks your LinkedIn. How hands-on are you now, in terms of coding?

Spencer:

Not as much as the rest of the team. Most of my job is making sure we’re building robotic platforms that don’t require a PhD to use. And, unfortunately, I don’t have a PhD – the math is well beyond me at this point.

Ksenia:

Do you feel the lack of it?

Spencer:

Not really. A PhD is mostly about implementation – designing algorithms, optimizing learning policies – and that’s a bit different from what I do. As a product lead, my job is to make sure we’re building the right platforms for developers.

The challenges we handle are less about the algorithms themselves and more about the developer experience and scaling. I can walk you through how we structure our roadmap, actually – it’s built around four main priorities:

First is data acquisition and generation – how we capture reality and use it to generate more data.

Second is the simulation platform and robot learning frameworks – how we take that data and represent it in ways that allow us to train policies effectively.

Third is the model spectrum. Intelligence isn’t binary; it’s a continuum. If you imagine end-to-end autonomy on one side and traditional robotics on the other, there’s a lot of space in between. That’s where hybrid models live – combining MPCs with specialized policies or mixture-of-experts systems. Jensen talked about this at the keynote, and it applies equally well to robotics.

And finally, we have deployment and optimization – how all of this gets wrapped up, optimized, and brought onto hardware.

Those four pillars are what my product teams focus on. We work closely with research, but most of my team doesn’t have PhDs – and that’s totally fine. We don’t need to solve the equations ourselves; we just need to understand what problems the researchers are solving and build the tools that help them do it better.

Ksenia:

Right. And it also feels like education just can’t keep up anymore – if you’re passionate enough, you just learn as you go.

Spencer:

Exactly. The advent of AI has made that so much easier. I use Perplexity constantly.

Ksenia:

To learn?

Spencer:

We use Perplexity and a bunch of other agents for all kinds of things. Perplexity is great for breaking down technical problems. It’s honestly one of the best ways to learn, especially when you’re starting out.

The thing with robotics is that everything is research until it isn’t. A VLA was research until suddenly it wasn’t. Cosmos was research until suddenly it wasn’t. Things come out of left field all the time – even if you’re paying attention. There’s just too much happening at once.

So you need tools that help you quickly digest: What’s the high-level value here? How deep do I actually need to understand this? Because at some point, you do need to understand things at the fundamental level – especially to connect them to other technologies.

That’s a big part of our job: connecting dots. How do I take what the Cosmos team is building, and align it with our product roadmap, and then connect that to what researchers are doing? It’s all about seeing the research early and figuring out where those pieces fit together.

Ksenia:

That’s what I was thinking about – robotics development feels very fragmented. Is NVIDIA moving toward something like a DGX for robotics – a repeatable stack for perception, planning, deployment? What will actually help standardize robotics development?

Spencer:

We’ve identified a few things – I’d say three big observations.

First, you’re right: the robotics ecosystem is fragmented. Everyone has their own toolchain. And it’s not because they want to reinvent the wheel – most people don’t want to build the tools they’re building. They just have to, because there isn’t anything out there that does exactly what they need.

Take locomotion, for example. One team might be training a whole-body controller; another might just be working on grasping or manipulation. They could all be using MuJoCo, Isaac Gym, or Isaac Lab, but each still ends up building their own solvers – for contact, soft-body dynamics, tactile sensing, whatever their domain needs.

So our first big focus is on a physics interoperability layer. If teams could move between toolchains without rewriting half their setup, they’d save months of work. That’s why we’re collaborating with Google DeepMind and Disney Research on Project Newton – to create a unifying physics interface that connects these systems together.

Second, there’s data representation. Everyone uses different formats. Just like Omniverse aims to unify 3D formats through OpenUSD, we’re working closely with the robotics community to create a robotics-specific USD extension. That means you could import URDFs or MJCFs into USD and have them work seamlessly across simulators.

So instead of maintaining separate marketplaces or pipelines for MuJoCo and Isaac, you’d have a single interoperable layer. Robots, environments, and objects could all be defined once – with physical and articulation properties intact – and used anywhere.
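Editor’s sketch: the "define once, use anywhere" idea can be illustrated by parsing a robot description into a simulator-neutral structure. The snippet reads a tiny hand-written URDF with Python’s standard library; `RobotDescription` and `Joint` are hypothetical stand-ins for a shared representation and are not the OpenUSD robotics schema or any NVIDIA API.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Dict, List, Optional

# A minimal hand-written URDF used only for this example.
URDF_TEXT = """
<robot name="two_link_arm">
  <link name="base"><inertial><mass value="2.0"/></inertial></link>
  <link name="forearm"><inertial><mass value="0.5"/></inertial></link>
  <joint name="elbow" type="revolute">
    <parent link="base"/>
    <child link="forearm"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1"/>
  </joint>
</robot>
"""


@dataclass
class Joint:
    name: str
    kind: str
    parent: str
    child: str
    lower: Optional[float]  # None when the URDF gives no <limit>
    upper: Optional[float]


@dataclass
class RobotDescription:
    name: str
    link_masses: Dict[str, float]
    joints: List[Joint]


def parse_urdf(text: str) -> RobotDescription:
    """Extract links, masses, and joints into a simulator-neutral structure."""
    root = ET.fromstring(text.strip())
    link_masses: Dict[str, float] = {}
    for link in root.findall("link"):
        mass = link.find("inertial/mass")
        link_masses[link.get("name")] = (
            float(mass.get("value")) if mass is not None else 0.0
        )
    joints: List[Joint] = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        joints.append(
            Joint(
                name=joint.get("name"),
                kind=joint.get("type"),
                parent=joint.find("parent").get("link"),
                child=joint.find("child").get("link"),
                lower=float(limit.get("lower", "0")) if limit is not None else None,
                upper=float(limit.get("upper", "0")) if limit is not None else None,
            )
        )
    return RobotDescription(root.get("name"), link_masses, joints)


robot = parse_urdf(URDF_TEXT)
print(robot.name, robot.link_masses, robot.joints[0])
```

Once mass and articulation data live in one neutral structure, exporting to each simulator becomes a thin adapter rather than a separate asset pipeline.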

Third, we need flexible simulators, not monolithic ones. That’s been a big part of our work on Isaac Lab over the past year – making sure the framework isn’t hard-tied to any single physics engine. That’s also where Newton comes in: it provides a unified API so different solvers can plug in and work together.
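Editor’s sketch: a framework that is not tied to one physics engine typically codes against an interface and lets solvers plug in behind it. The example below shows that pattern with two toy integrators for a falling point mass. The `Solver` protocol and both classes are hypothetical and are not Newton’s or Isaac Lab’s actual API.

```python
from dataclasses import dataclass
from typing import Protocol

GRAVITY = -9.81  # m/s^2, acting on the height axis


@dataclass
class State:
    height: float
    velocity: float


class Solver(Protocol):
    """Anything with a matching step() can be plugged into simulate()."""

    def step(self, state: State, dt: float) -> State: ...


class ExplicitEuler:
    def step(self, state: State, dt: float) -> State:
        # Position is advanced with the velocity from the start of the step.
        return State(
            height=state.height + state.velocity * dt,
            velocity=state.velocity + GRAVITY * dt,
        )


class SemiImplicitEuler:
    def step(self, state: State, dt: float) -> State:
        # Velocity is updated first, then used to advance the position.
        velocity = state.velocity + GRAVITY * dt
        return State(height=state.height + velocity * dt, velocity=velocity)


def simulate(solver: Solver, state: State, dt: float, steps: int) -> State:
    """The simulation loop is written once and never names a concrete solver."""
    for _ in range(steps):
        state = solver.step(state, dt)
    return state


start = State(height=10.0, velocity=0.0)
for solver in (ExplicitEuler(), SemiImplicitEuler()):
    end = simulate(solver, start, dt=0.01, steps=100)
    print(type(solver).__name__, end)
```

Swapping the solver changes the numerical result slightly but requires no change to the loop, which is the property a shared physics interface is meant to provide at much larger scale.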

Because it’s all built on Warp – NVIDIA’s Python-based GPU kernel framework – developers have the freedom to choose the fidelity they need. If you want high-end RTX rendering for photorealism, you have it. If you need lightweight rendering for massive-scale training throughput, you can do that too.

We’re also introducing Isaac Lab Arena – a policy evaluation framework built on top of Isaac Lab. It’s not fully integrated, because we want to keep Isaac Lab lightweight for training, but Arena gives developers a unified interface to benchmark and evaluate policies without constantly rebuilding scaffolding.

And that’s the pattern you’ll keep hearing from us: nobody wants to rebuild scaffolding. They just want to build robots.
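Editor’s sketch: the "scaffolding" for policy evaluation is mostly a loop that runs a policy over many seeded episodes and reports a metric. The toy harness below uses a one-dimensional reaching task to show the shape of such a loop. It is not the Isaac Lab Arena API, and `run_episode`, `evaluate`, and both policies are hypothetical.

```python
import random
from typing import Callable

# A policy maps (position, goal) to a displacement for the next step.
Policy = Callable[[float, float], float]


def run_episode(policy: Policy, rng: random.Random,
                max_steps: int = 50, tolerance: float = 0.05) -> bool:
    """Toy 1-D reaching task: succeed if the position gets close to the goal."""
    position = 0.0
    goal = rng.uniform(-1.0, 1.0)
    for _ in range(max_steps):
        position += policy(position, goal)
        if abs(goal - position) <= tolerance:
            return True
    return False


def evaluate(policy: Policy, episodes: int = 200, seed: int = 0) -> float:
    """Return the success rate over a fixed, seeded set of episodes."""
    rng = random.Random(seed)
    successes = sum(run_episode(policy, rng) for _ in range(episodes))
    return successes / episodes


def proportional_policy(position: float, goal: float) -> float:
    return 0.5 * (goal - position)  # move halfway toward the goal each step


def idle_policy(position: float, goal: float) -> float:
    return 0.0  # never moves


for name, policy in [("proportional", proportional_policy), ("idle", idle_policy)]:
    print(f"{name}: success rate {evaluate(policy):.2f}")
```

Because the seed fixes the sequence of goals, every policy is scored on the same episodes, which is what makes the resulting numbers comparable as a benchmark.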

Ksenia:

But doesn’t that create a sort of closed, walled garden?

Spencer:

No – the opposite. We open-source everything.

The goal is that all of this – Isaac Lab, Isaac Lab Arena, Newton, Warp – is open source from the start. Newton is already part of the Linux Foundation; it’s not even NVIDIA-owned. PhysX is open source too.

And that’s important because you’re right – you can’t unify an ecosystem by closing it off. If everyone has different toolchains, you need open “highways” between them, not gates.

So our approach is to create interoperability layers and compatibility standards, and to open them up so the community can contribute. We can’t solve every robotics problem, but we can solve the parts where NVIDIA adds unique value – and make the rest open so others can build with us. That’s our strategy.

Ksenia:

Thank you. Yeah, open source is hugely important. I saw you this morning surrounded by people – and of course, lots of questions about who your father is. So my last question: what’s harder – solving these robotics problems, or being Jensen’s son?

Spencer:

That’s a good question. I think solving robotics is definitely harder – because I’ve had 35 years to adapt to being a son.

Ksenia:

Fair enough. Then tell me – what else are you excited about, maybe apart from robotics?

Spencer:

You know, one of the things I’ve always been most excited about is just fundamental technologies.

At NVIDIA, one of the greatest advantages is how flat the organization is. The benefit – and the downside – of a flat structure is that you have access to a lot of information. When I first joined, it was like drinking from a firehose. At first it was amazing for robotics, and then I realized I could go explore all sorts of other technologies.

Pretty soon I was averaging three or four hours of sleep, just staying up late reading internal docs, watching demos, trying to learn everything. But the upside is, when you work in robotics, there’s so much happening elsewhere at NVIDIA that can feed directly into your work.

We have a saying: Mission is Boss. It means that because we’re structured horizontally, your org chart doesn’t really matter. If there’s a mission that needs solving, you can pull in anyone from any part of the company to do it.

For example, say we want to find better ways to extract action data from videos in the wild. There’s so much knowledge buried in YouTube tutorials – cooking videos, DIY projects, you name it – but today robots can’t learn from that, because there’s no way to extract and retarget that motion data into robot policies.

To solve that, you might need people from gaming – who’ve been working on AI body-motion generation for characters. Or folks doing segmentation and video understanding. Or teams that take 2D footage and reproject it into 3D.

Those aren’t robotics projects – but if you connect them, they suddenly become robotics-relevant. That’s the fun part for me – seeing what amazing things other teams are doing, and finding ways to bring them into our mission. That’s how NVIDIA moves so fast.

Ksenia:

I call that cross-pollination. I was reading Elon Musk’s biography recently, and he does something similar – connecting deep knowledge from different fields to create new things.

Spencer:

Yeah, he’s really good at that. We’ve kind of institutionalized it here – it’s an organizational mindset. It takes a while to get used to, because it’s easier to stay narrowly focused. You feel like you’re making more progress when your world is small.

But at the state of the art, that narrow progress can actually blind you to what’s happening next to you. For example, if the Omniverse team wasn’t paying attention to the Cosmos team, we’d lose opportunities to merge simulation and neural modeling in new ways. Because we do watch each other’s work, we find complementary overlaps and come up with solutions that wouldn’t exist if we stayed in silos.

That’s something we really value – keeping those walls low so new ideas can travel fast.

Ksenia:

What’s the thing that concerns you most about helping build this new world?

Spencer:

One of my biggest concerns isn’t really fear – it’s more of a reality check.

Large language models had the luxury of centuries of recorded human data. That’s why they can learn from all cultures, all languages, all history. Robotics doesn’t have that. The biggest bottleneck for us is data.

We don’t have hundreds of years of recorded motor actions or physical interactions. And if the ecosystem doesn’t come together to build shared datasets, we’ll all be stuck.

Jensen talked about the three scaling laws during the keynote – they apply just as much to robotics. In the LLM world, we hit scale through pre-training. In robotics, we’re still at the stage where our pre-training datasets are too small to create a strong baseline.

Right now, everyone’s building specialists – robots that can do one task really well. That’s fine, because it generates new data. But the goal is to reach a baseline generalist – a robot that can do many tasks adequately, even if it’s not great at any single one. That’s like ChatGPT 1.0: a great foundation. From there, you can start creating generalist-specialists – systems that are both broad and deeply capable.

But to get there, we have to stop hoarding data. No single company, university, or country can digitize the entire world fast enough. We need to collaborate, share, and build toward that common baseline.

Because the robots that reach that baseline will be useful – but not transformative. They’ll be able to take out the trash, not solve new kinds of problems. The real value comes when they can augment humans – work alongside us, create new kinds of jobs, new industries, even new economies.

So I’m excited for that future – but my concern is how quickly we can get there if everyone stays siloed, thinking, “this is my data.”

Ksenia:

My last question is always about books. What book has shaped you the most?

Spencer:

Oh boy. There are two that really stuck with me.

The first is Isaac Asimov’s Foundation series. It’s one of the most important works in science fiction, but it’s also a study of psychology and the human condition – what happens when people lose a shared purpose and start to fracture.

The second is Harvest of Stars by Poul Anderson. It’s an older book – kind of dry at first, but worth it if you stick with it. There’s a moment when a crew approaches the disk of a black hole and starts receiving strange signals – what looks like noise. They eventually realize it might be a form of communication.

The lesson for me was: what you dismiss as noise might actually be something meaningful. You just need to learn how to listen – and how to tell the difference. That’s probably one of the biggest lessons I’ve ever taken from a book.

Ksenia:

That’s super interesting. Thank you so much.

Spencer:

Thank you! Awesome questions.
