AI models have shifted from thinking quickly (giving fast answers) to thinking more carefully by breaking problems into smaller steps. This o1-like thinking, built on the Chain-of-Thought (CoT) method, allows large reasoning models such as OpenAI’s o1 and o3 and DeepSeek-R1 to backtrack, retry, and refine their reasoning, which makes them even better at solving tricky problems. We discussed the key aspects and advantages of scaling test-time compute in one of our previous episodes. However, there is a big issue: this kind of reasoning generates a lot of text (tokens), which takes up memory, slows things down, and makes processing more expensive. This is especially noticeable with Transformers. Every generated token adds its keys and values to the model’s KV cache, so the longer the output, the more memory the model needs and the more computation each new token costs. As large reasoning models become more prevalent, we must find ways to mitigate their weaknesses while fully exploring their potential for improvement.
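To put rough numbers on the memory problem, here is a minimal sketch of KV-cache arithmetic for standard multi-head attention. The helper name `kv_cache_bytes` and the model configuration (32 layers, 32 KV heads, head dimension 128, 2-byte fp16/bf16 values) are hypothetical and chosen only for illustration. They do not describe any specific model mentioned above.

```python
def kv_cache_bytes(num_tokens: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens.

    The leading factor 2 accounts for storing one key vector and one value
    vector per head, per layer, per token (standard multi-head attention).
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


# Hypothetical configuration, chosen only for illustration (fp16/bf16 values).
CONFIG = {"num_layers": 32, "num_kv_heads": 32, "head_dim": 128, "bytes_per_value": 2}

for tokens in (1_000, 10_000, 100_000):
    gib = kv_cache_bytes(tokens, **CONFIG) / 2**30
    print(f"{tokens:>7,} tokens -> {gib:6.2f} GiB of KV cache")
```

With these assumed numbers every token costs 0.5 MiB of cache, so a single 10,000-token reasoning trace already needs about 4.9 GiB, and the cost grows linearly with the length of the output.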

Today we will focus on the problem of increased memory use and the longer processing times it causes. If we can address memory inefficiency, models can become more balanced and efficient while keeping their high accuracy. Two notable approaches have already been proposed to reduce memory usage in reasoning models: 1) LightThinker, which teaches models to summarize their own “thoughts” and to solve tasks based on these short, meaningful summaries; and 2) Multi-Head Latent Attention (MLA), a DeepSeek solution that compresses attention keys and values into a compact latent vector to shrink the KV cache. MLA was introduced with DeepSeek-V2 and later used in DeepSeek-V3 and DeepSeek-R1.
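To make the MLA idea more concrete, here is a minimal pure-Python sketch of its core trick, low-rank joint compression of keys and values. Each token’s hidden state is projected down to a small latent vector, only that latent is cached, and per-head keys and values are rebuilt from it by up-projection when attention needs them. All dimensions and names (`W_down`, `W_up_k`, `W_up_v`, `cache_token`) are hypothetical, and the weights are random rather than trained. The sketch also omits parts of DeepSeek’s actual design, such as the separately cached decoupled RoPE key and the folding of the up-projections into other weight matrices.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    """Random Gaussian weights standing in for trained projections."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


# Toy dimensions (hypothetical, for illustration only).
D_MODEL = 64   # hidden size of the model
N_HEADS = 4    # number of attention heads
HEAD_DIM = 16  # dimension of each head
D_LATENT = 8   # size of the cached KV latent, far below 2 * N_HEADS * HEAD_DIM

rng = random.Random(0)
W_down = random_matrix(D_LATENT, D_MODEL, rng)             # hidden state -> latent
W_up_k = random_matrix(N_HEADS * HEAD_DIM, D_LATENT, rng)  # latent -> keys of all heads
W_up_v = random_matrix(N_HEADS * HEAD_DIM, D_LATENT, rng)  # latent -> values of all heads

latent_cache = []  # one small latent vector per token instead of full keys and values


def cache_token(hidden):
    """Compress a token's hidden state and store only the latent vector."""
    latent_cache.append(matvec(W_down, hidden))


def keys_and_values():
    """Rebuild full per-head keys and values from the cached latents."""
    keys = [matvec(W_up_k, c) for c in latent_cache]
    values = [matvec(W_up_v, c) for c in latent_cache]
    return keys, values


for _ in range(10):  # pretend we processed 10 tokens
    cache_token([rng.gauss(0.0, 1.0) for _ in range(D_MODEL)])

keys, values = keys_and_values()
mha_floats = len(latent_cache) * 2 * N_HEADS * HEAD_DIM  # what standard MHA would cache
mla_floats = sum(len(c) for c in latent_cache)           # what this sketch caches
print(f"standard MHA cache: {mha_floats} floats, latent cache: {mla_floats} floats")
```

In this toy setup the latent cache holds 80 floats versus 1,280 for full keys and values, a 16x reduction. The trade-off is the extra up-projection work whenever keys and values are needed for attention.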

We invite you to dive into these concepts with us and consider the potential benefits of combining them.

In today’s episode, we will cover:

What is LightThinker?

The idea behind LightThinker

How does LightThinker work?

Actual performance of LightThinker

Why is LightThinker a better approach?

Not without limitations

What is Multi-Head Latent Attention (MLA)?

Why is MLA needed?

How does MLA work?

What are the key benefits of MLA?

Not without limitations

MLA results

What if we blend LightThinker and MLA concepts?

Conclusion

Sources and further reading: explore the references used to write this article and dive deeper with all the links provided in this section

What is LightThinker?

The idea behind LightThinker

As we mentioned, we need optimization methods that make high-quality reasoning models faster and more efficient without incurring high memory costs.

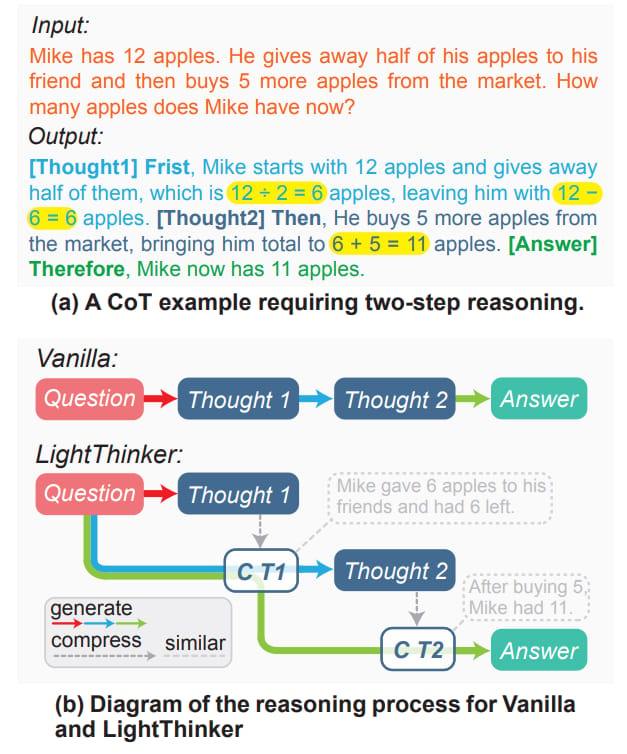

One of these methods is LightThinker developed by Zhejiang University - Ant Group Joint Laboratory of Knowledge Graph. LightThinker doesn’t just cut out words or memory manually, it teaches the model to "summarize" its own “thoughts” while solving problems. Think of it like how people jot down key points instead of writing every detail. Let’s look at how it works in detail.

Image Credit: The original LightThinker paper
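The payoff of this kind of compression can be illustrated with a toy token count. The sketch below assumes, purely for illustration, that reasoning is split into discrete thoughts and that each finished thought is replaced in the context by a fixed number of summary tokens. The function name `simulate`, the thought lengths, and `summary_size=8` are all hypothetical. LightThinker itself learns to produce its compressed representations during training, so this sketch only tracks how many tokens remain in the attention context, not how the summaries are built.

```python
def simulate(thought_lengths, summary_size=None):
    """Return (final_context, peak_context) token counts for a reasoning trace.

    summary_size=None models vanilla chain-of-thought: every token stays in context.
    Otherwise each finished thought is replaced by `summary_size` tokens, a
    hypothetical stand-in for LightThinker-style compressed "thoughts".
    """
    context = 0  # tokens currently kept in the attention context (and KV cache)
    peak = 0     # largest context size reached at any point
    for length in thought_lengths:
        if summary_size is None:
            context += length
            peak = max(peak, context)
        else:
            # While a thought and its summary are generated, both are in context.
            peak = max(peak, context + length + summary_size)
            # Afterwards the full thought is dropped and only its summary remains.
            context += summary_size
    return context, peak


thoughts = [120, 80, 150, 60, 200]  # hypothetical token counts of five reasoning steps
print("vanilla CoT:", simulate(thoughts))
print("compressed :", simulate(thoughts, summary_size=8))
```

With these made-up numbers, vanilla reasoning ends with 610 tokens in context, while the compressed version keeps only 40 and never exceeds 240 at any step.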

How does LightThinker work?

