- Turing Post

AI 101: Inside Robotics

Building the body of AI: How Physical AI is trained and powered – from Figure 03, Neo, and Unitree robots to NVIDIA’s freshest updates

This moment has finally come – we’re dedicating an entire article to robotics, the embodied entities of physical AI that exist in the real world. They move AI from digital space into physical environments, where machines can perceive, move, and act in real time. This means one key thing: robots now interact with the world on an entirely new level. And the big players – NVIDIA, Tesla, and even OpenAI (whose Sora 2 app is a big step forward) – are racing to master physical AI.

We’ve avoided this topic for a while because the technology stack behind robotics mostly includes vision-language-action (VLA) models, computer vision, world models, and mechanical systems – areas we’ve covered in other articles. Plus, robotics often overlaps with our Agentic AI series. Still, we can’t ignore that robotics is becoming its own field – not just about agentic behavior, vision, or physics, but everything combined. All these puzzle pieces come together to shape robots into what may soon become our everyday companions. How long will it take before living alongside robots feels normal?

We don’t know yet – but recent progress is clearly bringing that future closer. From Figure 03 and 1X’s Neo to Unitree’s quadrupeds and NVIDIA’s latest innovations, robotics is advancing faster than ever. In this article, we’ll explore the key technologies driving this shift, from the essential concepts behind modern robotic systems to illustrative technical details. It’s a must-read for everyone!

In today’s episode, we will cover:

Basic Terms to Become Fluent with Robotics

Robot Types

Four Main Pillars of Robotics

How Robots Learn Today

Reinforcement Learning in Robotics

Behavioral Cloning

Your potential home companions: Figure 03 and 1X’s NEO

Unitree Robots

NVIDIA Freshest Updates: Powering Robotics

Conclusion

Sources and further reading

Basic Terms to Become Fluent with Robotics

Robot Types

Terms are the base of every domain. If you’ve never dived deep into robotics before, this section may help you understand the subject. Today we can identify several main types of robots:

Humanoid robots – designed to look and move like humans (Figure 03, Tesla Optimus, Agility Digit, Boston Dynamics Atlas, 1X Neo)

Image Credit: Atlas, Boston Dynamics

Quadruped robots – four-legged robots inspired by animals, often dogs (Boston Dynamics Spot, Unitree Go2, ANYmal)

Manipulator robots, or robotic arms – used in factories and on manufacturing lines (where they are called industrial robots), in hobby projects, and in labs. Soon they may be our surgeons as well.

Image Credit: Hugging Face LeRobot GitHub

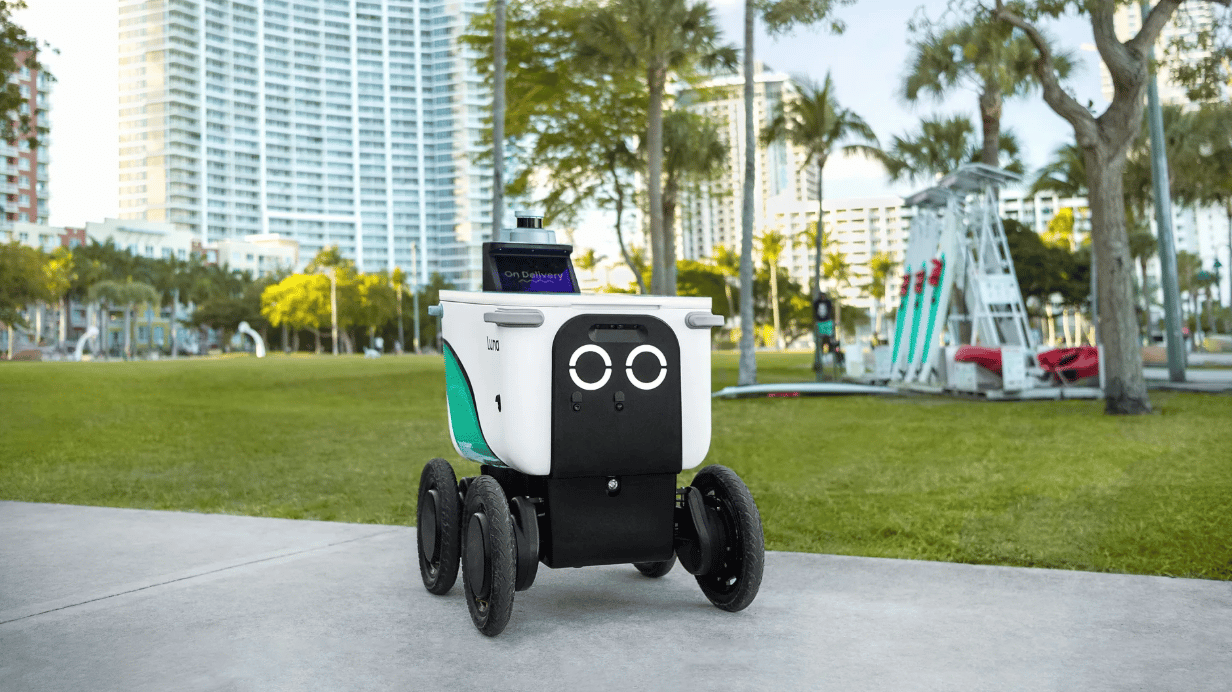

Autonomous vehicles, like self-driving cars (Waymo, Tesla Autopilot), drones, or delivery robots (Serve Robotics, for example).

Image Credit: Serve Robotics

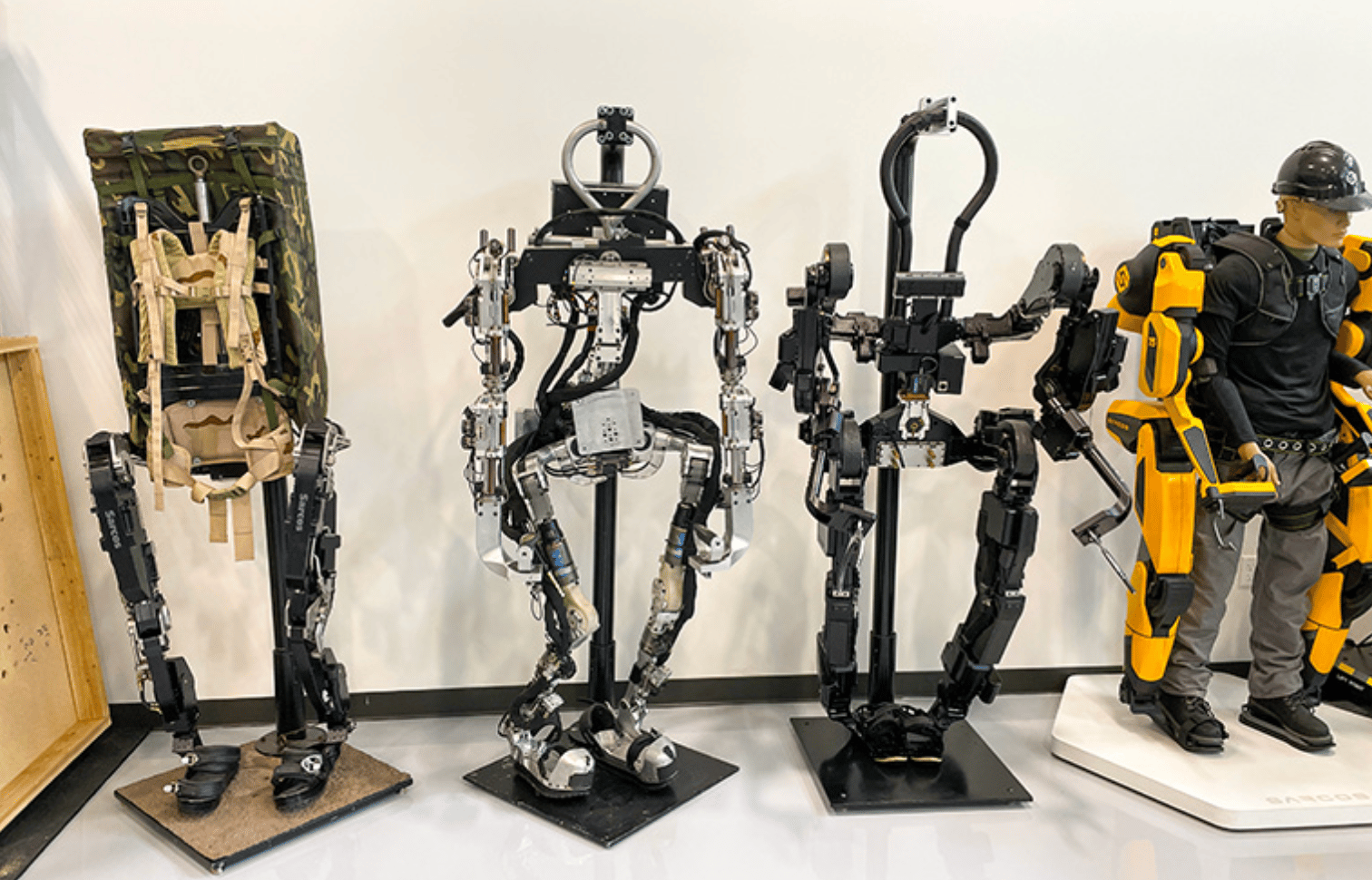

Exoskeletons – wearable robotic systems that assist or enhance human movement (Sarcos Guardian XO, Ekso Bionics).

Image Credit: The Robot Report

Machine learning (ML) and AI today enrich these machines with new perception, understanding, and action capabilities. Whether a robot is a humanoid, a drone, or a manipulator, it needs four main pillars to function meaningfully.

Four Main Pillars of Robotics

Perception

Perception helps the robot see, hear, and sense its environment. Its main components include:

Computer vision: Cameras + neural networks detecting objects, people, and scenes.

Depth sensing: LiDAR (Light Detection and Ranging, a sensor that measures distance by firing laser pulses and timing how long they take to reflect back, producing a 3D point cloud), radar, or stereo vision to create 3D maps.

Touch and force sensing: Tactile sensors or joint torque sensors that estimate contact and grip strength.

Audio processing: Microphones with speech recognition models and sound localization.

AI models here help interpret the robot’s “senses.” They convert raw sensor data into a symbolic or numerical understanding of the world and handle object recognition, segmentation, motion detection, and similar tasks.
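To make the LiDAR idea concrete, here is a minimal sketch of the time-of-flight calculation described above: the pulse travels to the object and back, so the distance is half the round-trip path. The function names, the beam angles, and the sample readings are our own illustrative assumptions, not any specific sensor's API.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second (vacuum value)

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target: the pulse goes there and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def to_point(distance_m: float, azimuth_rad: float, elevation_rad: float):
    """Turn one range reading plus its beam angles into an (x, y, z) point."""
    horizontal = distance_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        distance_m * math.sin(elevation_rad),
    )

# Hypothetical readings: (round-trip time in seconds, azimuth, elevation)
readings = [(66.7e-9, 0.0, 0.0), (33.4e-9, math.pi / 2, 0.0)]

# A point cloud is simply the collection of all such points from one sweep.
point_cloud = [to_point(tof_distance_m(t), az, el) for t, az, el in readings]
```

A real sensor produces hundreds of thousands of such points per second, and it is this cloud that perception models then segment and classify.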

Localization and mapping (SLAM)

SLAM (Simultaneous Localization and Mapping) does two things at once:

Determines the robot’s position within a map (localization).

Builds or updates a map of surroundings in real time (mapping).

It uses algorithms such as Extended Kalman Filters, Graph-SLAM, or particle filters to align sensor readings over time and minimize positional drift.
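As a rough illustration of the filtering idea, below is a one-dimensional linear Kalman filter that fuses odometry with noisy position measurements. It covers only the localization half of SLAM, and it is much simpler than the Extended Kalman Filter, which handles nonlinear motion and sensor models by linearizing them. All names and numbers are assumptions made for the sketch.

```python
def kalman_step(x, p, u, z, q, r):
    """One predict/update cycle for a scalar position estimate.

    x, p: previous position estimate and its variance
    u:    displacement reported by odometry since the last step
    z:    noisy position measurement (e.g. from a range sensor)
    q, r: motion-noise and measurement-noise variances
    """
    # Predict: apply the motion, and grow the uncertainty (this is the drift).
    x_pred = x + u
    p_pred = p + q
    # Update: blend prediction and measurement, weighted by their uncertainty.
    gain = p_pred / (p_pred + r)
    x_new = x_pred + gain * (z - x_pred)
    p_new = (1.0 - gain) * p_pred
    return x_new, p_new

# Hypothetical run: the robot is commanded to move 1 m per step.
x, p = 0.0, 1.0
odometry = [1.0, 1.0, 1.0]
measurements = [1.1, 1.9, 3.05]
for u, z in zip(odometry, measurements):
    x, p = kalman_step(x, p, u, z, q=0.1, r=0.5)
```

After three steps the estimate sits close to 3 m and the variance has shrunk well below its starting value, which is the "minimize positional drift" behavior in miniature.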

Motion

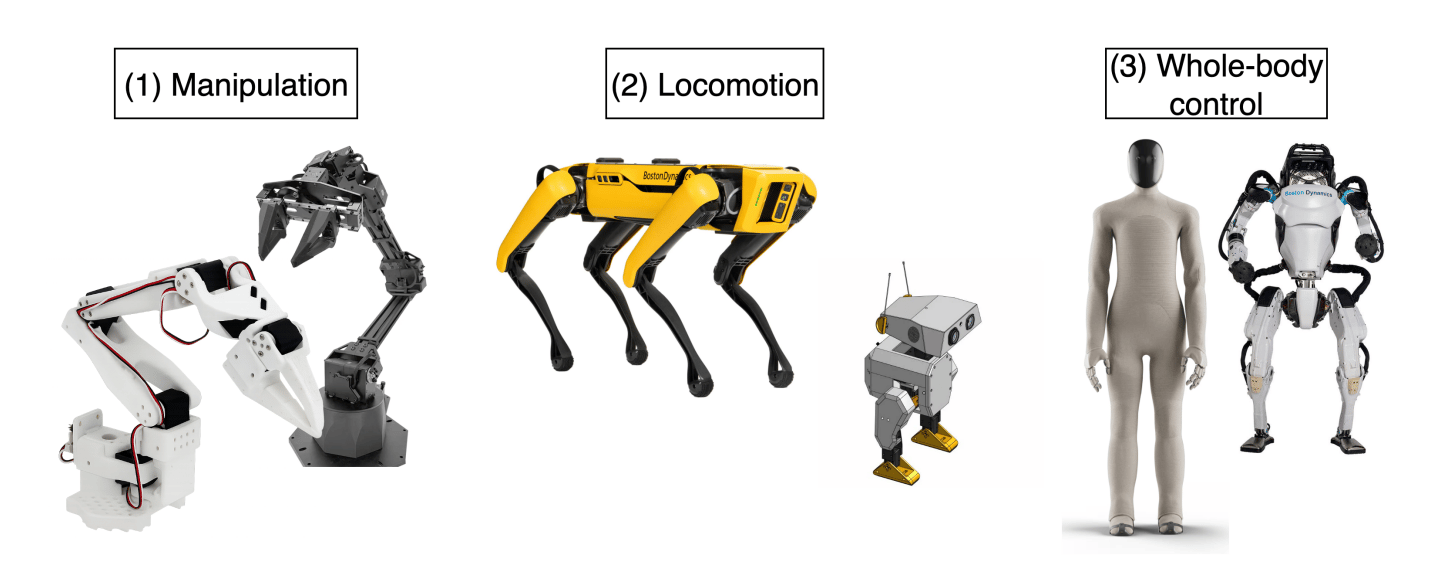

This covers how a robot acts physically, including locomotion, manipulation and whole-body control.

Image Credit: Robot Learning: A Tutorial

Locomotion means how a robot moves through its environment. It combines mechanics (hardware) and AI-based control (software).

The mechanical part provides the movement system – wheels, legs, tracks, wings, etc. Traditional motion relied on fixed programming with predictable, repetitive paths.

AI-powered locomotion adds adaptivity and autonomy. The AI part plans and adjusts movement: balancing, reacting to unexpected obstacles, adapting to terrain (we discuss this in our upcoming interview with Spencer Huang from NVIDIA, stay tuned), learning optimal movement patterns through RL, coordinating complex motions like walking or climbing stairs, and planning paths in real time.

Manipulation is about how a robot interacts with and moves objects. AI systems here provide visual and tactile feedback for adaptive grasping, enable language-guided manipulation (like “pick up the right cup”), and predict object dynamics (for example, how items move or fall when pushed).

Mobile manipulation combines both and covers more complex cases, such as a robot moving through a room and picking up objects.

Whole-body control relates to the movement of all parts and joints of a robot.

In general, there are two broad types of methods for generating robot motion:

Explicit (dynamics-based) models: These rely on mathematical equations to describe exactly how a robot’s parts move and interact with the environment. They come from physics, including things like forces, torques, and rigid-body dynamics.

Implicit (learning-based) models: They learn patterns directly from data by observing how robots move and respond in different situations.

Many modern robotic systems combine both ideas.
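A toy contrast between the two approaches, using a single pendulum-like joint: the explicit model computes the required torque from a physics equation, while the "implicit" model recovers the same relationship purely from logged samples. This is a deliberately simplified sketch. A real learning-based system would use a neural network rather than a two-parameter least-squares fit, and the samples here are generated from the explicit model as a stand-in for recorded robot data.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def explicit_torque(theta, theta_ddot, mass=1.0, length=0.5):
    """Dynamics-based model of a point-mass pendulum joint:
    tau = m * l^2 * theta_ddot + m * g * l * sin(theta)."""
    return mass * length**2 * theta_ddot + mass * G * length * math.sin(theta)

def fit_implicit(samples):
    """Data-driven model: fit tau ~ a * theta_ddot + b * sin(theta) by least
    squares (2x2 normal equations), without being told mass or length."""
    s11 = sum(dd * dd for _, dd, _ in samples)
    s12 = sum(dd * math.sin(th) for th, dd, _ in samples)
    s22 = sum(math.sin(th) ** 2 for th, _, _ in samples)
    b1 = sum(dd * tau for _, dd, tau in samples)
    b2 = sum(math.sin(th) * tau for th, _, tau in samples)
    det = s11 * s22 - s12 * s12
    a = (b1 * s22 - s12 * b2) / det
    b = (s11 * b2 - s12 * b1) / det
    return a, b

# Stand-in for logged data: (angle, angular acceleration, measured torque).
states = [(0.1, 1.0), (0.5, -2.0), (1.0, 0.5), (1.3, 3.0)]
samples = [(th, dd, explicit_torque(th, dd)) for th, dd in states]

a, b = fit_implicit(samples)  # learned coefficients, from data alone
```

The fitted coefficients match the physical quantities m·l² and m·g·l, which hints at why hybrid systems work well: physics supplies the structure, and data fills in what is hard to model by hand.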

Planning and decision-making

This is the robot’s “thinking system.” Depending on what the robot does, different AI models (RL-based policies, world models, transformers, neuro-symbolic planners) can be used to choose actions, search for and plan paths, and react to new information. These models use search algorithms, learn from feedback, and combine symbolic reasoning with neural decision policies to plan and execute high-level tasks while balancing goals, safety, and ethical constraints.
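For a feel of the search side of planning, here is a minimal breadth-first search that finds a shortest path on a small occupancy grid. It is an illustrative stand-in for the classical search component only. Production planners typically use A* or sampling-based methods in continuous space, and the grid, start, and goal below are made up.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid (0 = free, 1 = obstacle).
    Returns the shortest path as a list of (row, col) cells, or None."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable

# Hypothetical map: a wall with a single gap in the middle row.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = plan_path(grid, start=(0, 0), goal=(2, 0))
```

The planner routes through the gap at `(1, 2)`. In a full system, a learned policy or a world model would sit above a planner like this, deciding which goal to pursue and when to replan.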

Optional but common add-ons may include:

Communication / Language understanding if the robot responds to human commands.

Learning and adaptation to improve over time.

Energy management for long operation.

One of the main shifts today is happening in robot learning. Hugging Face recently published “Robot Learning: A Tutorial,” which shows how robot learning has moved from traditional dynamics-based models – mathematical equations describing how forces and motions behave – to ML methods that have started to transform how robots plan and act. Let’s take a closer look at the general trends in robot learning.

How Robots Learn Today
