Froth on the Daydream (FOD) – our weekly summary of over 150 AI newsletters. We connect the dots and cut through the froth, bringing you a comprehensive picture of the ever-evolving AI landscape. Stay tuned for clarity amidst the surrealism and experimentation.

The most interesting AI technology news this week comes from Meta, which has taken one step closer to realizing Yann LeCun’s vision of human-like AI. Other major events in AI revolve around regulation (the EU AI Act), chips (AMD and Intel planning to challenge Nvidia's dominance), and money (a McKinsey report), as if we are caught in an endless casino. Meanwhile, the old internet is experiencing a serious meltdown as the dice continue to roll.

Froth on the daydream, casino --ar 16:9 --v 5.1 by Midjourney

Remember, some of the articles might be behind a paywall. If you are a paid subscriber, let us know, and we will send you a PDF.

Human-like Meta

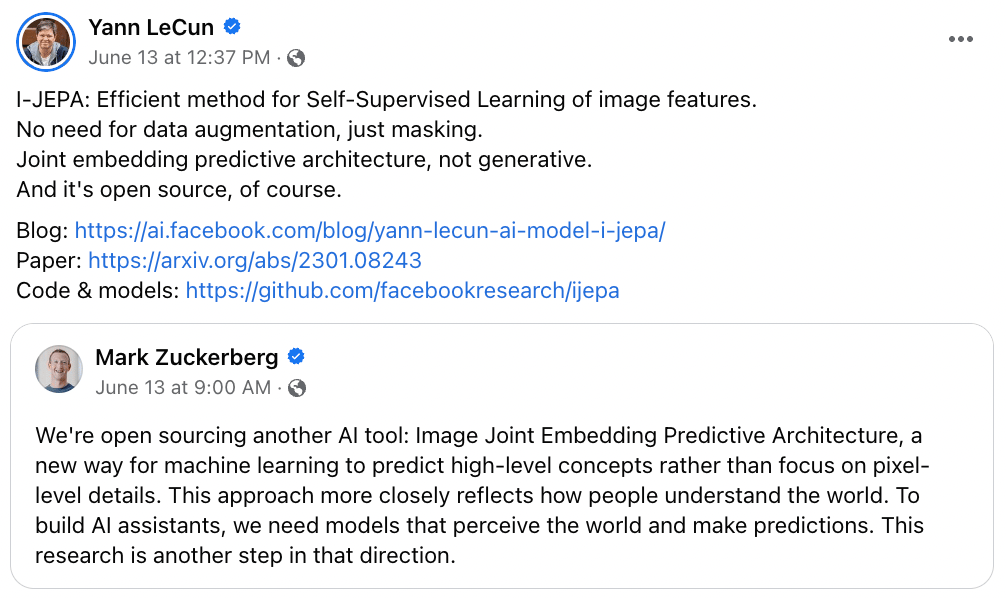

For a while now, Meta's Chief AI Scientist and overall AI guru, Yann LeCun, has been envisioning a different AI architecture that uses self-supervised learning (SSL) and can learn and reason more like animals and humans. This week, Meta introduced the first model based on that vision: the Image Joint Embedding Predictive Architecture (I-JEPA). I-JEPA is a step toward an alternative to the generative approach behind today's transformer-based models, although I-JEPA itself still uses Vision Transformer encoders. As a reminder, the whole generative AI boom became possible because of the paper "Attention Is All You Need," which introduced the Transformer, a network architecture based solely on attention mechanisms.

I-JEPA operates on abstract representations of images rather than individual pixels. Inspired by the way humans learn about the world through passive observation, it predicts missing information at the level of high-level concepts and semantic features rather than pixel-level details: from a single context block of an image, it predicts the representations of several target blocks in the same image. I-JEPA is designed specifically for computer vision tasks and aims to build an internal model of the world, using background knowledge to fill in what is missing. The goal is to capture common-sense understanding and reasoning, enabling a more human-like understanding of images.
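To make "predicting in representation space" more concrete, here is a minimal sketch of a JEPA-style objective. It is an illustration under simplifying assumptions, not Meta's implementation: the real I-JEPA uses Vision Transformer encoders and a transformer predictor, while here tiny linear maps (the hypothetical `context_enc`, `target_enc`, and `predictor`) stand in for them, and the context is pooled into a single vector. The point it shows is that the loss compares predicted and actual *embeddings* of hidden patches, never their pixels, and that the target encoder follows a moving average of the context encoder (the momentum value below is an assumption).

```python
import random

# Hypothetical, simplified JEPA-style objective. Linear maps stand in for the
# Vision Transformer encoders and predictor used by the real I-JEPA.

def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def mean(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def squared_error(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def one_hot(i, n):
    return [1.0 if j == i else 0.0 for j in range(n)]

def jepa_loss(patches, context_idx, target_idx, context_enc, target_enc, predictor):
    """Predict the embeddings of hidden target patches from the visible context.

    The error is measured in representation space, so no pixels are reconstructed.
    """
    n = len(patches)
    # Encode only the visible context patches and pool them into one summary vector.
    context = mean([matvec(context_enc, patches[i]) for i in context_idx])
    loss = 0.0
    for i in target_idx:
        # The target encoder embeds the hidden patch; that embedding is the target.
        target = matvec(target_enc, patches[i])
        # The predictor sees the context summary plus the target's position.
        prediction = matvec(predictor, context + one_hot(i, n))
        loss += squared_error(prediction, target)
    return loss / len(target_idx)

def ema_update(target_w, online_w, momentum=0.996):
    """Move target-encoder weights toward the context encoder (exponential moving average)."""
    return [[momentum * t + (1 - momentum) * o for t, o in zip(t_row, o_row)]
            for t_row, o_row in zip(target_w, online_w)]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

if __name__ == "__main__":
    rng = random.Random(0)
    num_patches, patch_dim, embed_dim = 9, 12, 4  # a toy 3x3 grid of 12-value patches
    patches = [[rng.random() for _ in range(patch_dim)] for _ in range(num_patches)]
    context_enc = random_matrix(embed_dim, patch_dim, rng)
    target_enc = [row[:] for row in context_enc]  # starts as a copy of the context encoder
    predictor = random_matrix(embed_dim, embed_dim + num_patches, rng)
    loss = jepa_loss(patches, [0, 1, 3, 4], [5, 7, 8], context_enc, target_enc, predictor)
    print(f"latent prediction loss: {loss:.4f}")
    # After a gradient step on context_enc and predictor (omitted here),
    # the target encoder is updated by averaging rather than by gradients.
    target_enc = ema_update(target_enc, context_enc)
```

Replacing the embedding targets with the raw pixel values of `patches[i]` would turn this into a pixel-reconstruction objective, which is the kind of pixel-level prediction I-JEPA is designed to avoid.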

Is this the way to AGI? Mark Zuckerberg, at least, thinks it is the way to AI assistants.

Despite the dominance of generative AI, SSL and I-JEPA present promising alternatives that could shape future AI models.

I-JEPA:

Code & models: https://github.com/facebookresearch/ijepa

Transformers:

Transformers are not going anywhere anytime soon, though. Recently, a group of researchers put together a Comprehensive Survey on Applications of Transformers for Deep Learning Tasks.

Ahead of AI (Sebastian Raschka) explains the differences between encoder-style and decoder-style large language models (LLMs) and their respective purposes. It also notes the popularity of decoder-only models like GPT for text generation, while encoder-only models remain useful for training predictive models based on text embeddings.
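One core architectural difference between the two styles is the attention mask. The sketch below is a simplification (the models also differ in training objectives, such as masked-token versus next-token prediction): an encoder-style model lets every token attend to every other token, while a decoder-style model lets each token attend only to itself and earlier tokens. The attention scores in the example are made up for illustration.

```python
import math

def causal_mask(n):
    """Decoder-style (GPT-like): token i may attend only to tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """Encoder-style (BERT-like): every token may attend to every token."""
    return [[1] * n for _ in range(n)]

def masked_softmax(scores, mask):
    """Turn one row of raw attention scores into weights, zeroing masked positions."""
    allowed = [s for s, m in zip(scores, mask) if m]
    top = max(allowed)  # subtract the max for numerical stability
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    scores = [0.5, 2.0, 1.0]  # made-up scores of token 0 against tokens 0, 1, 2
    # Decoder-style: the first token cannot look ahead, so all weight stays on itself.
    print(masked_softmax(scores, causal_mask(3)[0]))  # [1.0, 0.0, 0.0]
    # Encoder-style: the same token spreads attention over the whole sequence.
    print(masked_softmax(scores, bidirectional_mask(3)[0]))
```

Because a decoder never sees future tokens, it can be trained to predict the next token and then generate text one token at a time; an encoder's bidirectional view suits producing embeddings of a whole text instead.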

Generative models based on transformers can produce hallucinations, or confabulations, which is a significant issue in fields like finance and healthcare. Ongoing research aims to reduce confabulation, but the problem stems from the autoregressive design of language models. In a piece that is timely given Meta’s I-JEPA, The Algorithmic Bridge discusses the problem of hallucination (or confabulation), arguing that eliminating it would require a major redesign.
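A toy illustration of why confabulation is tied to autoregressive generation: the model emits one token at a time, each chosen by probability given what came before, and nothing in the loop checks the result against facts. The "model" here is a hypothetical bigram table with made-up probabilities (real LLMs condition on the whole prefix), decoded greedily.

```python
# Hypothetical toy "language model": next-token probabilities conditioned only on
# the previous token. The numbers are invented to show a fluent but wrong output.
NEXT_TOKEN_PROBS = {
    "<s>": {"The": 1.0},
    "The": {"capital": 1.0},
    "capital": {"of": 1.0},
    "of": {"Australia": 1.0},
    "Australia": {"is": 1.0},
    "is": {"Sydney": 0.6, "Canberra": 0.4},
    "Sydney": {"</s>": 1.0},
    "Canberra": {"</s>": 1.0},
}

def generate(probs, max_tokens=10):
    """Greedy autoregressive decoding: append the most probable next token each step."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        candidates = probs[tokens[-1]]
        next_token = max(candidates, key=candidates.get)
        if next_token == "</s>":
            break
        # The choice is final: later tokens are conditioned on it, right or wrong.
        tokens.append(next_token)
    return " ".join(tokens[1:])

if __name__ == "__main__":
    print(generate(NEXT_TOKEN_PROBS))  # The capital of Australia is Sydney
```

The output is grammatical and confident, yet false, because the only criterion at each step is likelihood under the model, not truth.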

Last week, Meta also introduced Voicebox, a new text-to-speech generative AI model. It can create voice samples from scratch, modify existing samples, and perform these tasks in six different languages. According to Meta, Voicebox is up to 20 times faster than prior models while performing significantly better. Unlike I-JEPA, Voicebox was not open-sourced: Meta considers the potential for misuse too high. The FBI, btw, hasn’t raised concerns about voice scams yet but has warned about a rise in sextortion schemes involving AI-generated fake explicit content.

Reddit meltdown

I have always perceived Reddit as a community of disgruntled individuals who are deeply passionate about the content they share. That's precisely why Reddit has remained popular over the years: no amount of marketing or algorithmic tinkering could change the fact that Reddit is governed by numerous well-defined communities. However, Reddit's recent decision to charge for access to its API has caused a significant uproar, with around 8,400 forums going dark in protest. This raises important questions about who owns Reddit's content and about the role of its moderators. After all, the content on Reddit is not created by the company itself but by enthusiastic individuals who have significantly enhanced Reddit’s user experience.

As Stratechery aptly puts it, "Reddit is miffed that Google and OpenAI are taking its data, but Huffman and his team didn't create that data: Reddit's users did, under the watchful eyes of Reddit's unpaid mod workforce. In other words, my strong suspicion is that what underlies everything happening this week is widespread discontent and frustration that everything that was supposed to be special about the web, particularly the part where it gives everyone a voice, has turned out to be nothing more than material to be fought over by millionaires and billionaires."

Some even speculate that OpenAI, which wields significant influence over Reddit, may be behind these changes to gain control over training data for AI models. While this theory is unproven, it sheds light on the complex dynamics and power struggles among data, AI, and content platforms. All the data that was on Reddit has already been ingested and digested by the AI giants, but new data will become the real gold in the coming months. The battle for valuable human-generated content, which is highly beneficial for AI models, has only just begun.

Moreover, the repercussions of the subreddit shutdowns extend beyond Reddit itself. With many subreddits going dark, Google searches that include "reddit" often lead to inaccessible content. This unexpected turn of events may benefit OpenAI's partner Microsoft, whose Bing search engine competes with Google.

Despite the backlash, Reddit CEO Steve Huffman has maintained an obstinate stance, dismissing the protests as led by a minority of moderators without wide support. He even compared the moderators to "landed gentry" while still planning for Reddit's IPO. ~8,400 communities wish him good luck.

Overall, monitoring the Reddit meltdown is crucial as it highlights the urgent concerns regarding data control, monetization, and the role of AI in our digital landscape. It also exposes the power dynamics between content platforms, AI companies, and developers.

Not enough chips

The GPU shortage has pushed chipmakers to move faster and to invest billions in several countries.

Though Nvidia is the undisputed leader, AMD and Intel are putting a lot of effort into catching up. Last week, AMD revealed a new AI chip called the MI300X, designed for LLMs and other advanced AI models. It has up to 192GB of memory, allowing it to accommodate even bigger AI models than Nvidia's most powerful chip, which supports 120GB. However, the release of AMD's chip is scheduled for the first half of 2024, giving Nvidia an 18-month lead in the market. AMD's chip focuses on AI inferencing rather than the more demanding task of training. After the announcement, AWS told Reuters it is considering using the new AMD chips.
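Why does 192GB versus 120GB matter? To run a model, its weights have to sit in accelerator memory. The back-of-the-envelope sketch below assumes decimal gigabytes, 2 bytes per parameter (FP16) by default, and counts weights only, ignoring activations, the KV cache, and runtime overhead, so real limits are lower. The 70B-parameter model is a hypothetical example.

```python
# Rough sketch: how many parameters fit in a given amount of accelerator memory,
# counting model weights only (no activations, KV cache, or runtime overhead).

def max_params_billions(memory_gb, bytes_per_param=2):
    """Largest model (in billions of parameters) whose weights fit in memory_gb.

    With 1 GB = 1e9 bytes, GB divided by bytes per parameter gives billions of parameters.
    """
    return memory_gb / bytes_per_param

def weights_gb(params_billions, bytes_per_param=2):
    """Memory (GB) needed just to store a model's weights."""
    return params_billions * bytes_per_param

if __name__ == "__main__":
    for name, memory in [("AMD MI300X", 192), ("Nvidia chip cited above", 120)]:
        fp16 = max_params_billions(memory)                     # 2 bytes per parameter
        int8 = max_params_billions(memory, bytes_per_param=1)  # 1 byte per parameter
        print(f"{name}: ~{fp16:.0f}B params at FP16, ~{int8:.0f}B at INT8")
    # A hypothetical 70B-parameter model at FP16 needs 140 GB for its weights alone.
    print(weights_gb(70), "GB")
```

Under these assumptions, such a 70B model fits on a single 192GB chip but would have to be split across two 120GB chips, which adds communication cost.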

When announcing the new chip, AMD also revealed a partnership with Hugging Face, aimed at delivering high-performance transformer models on AMD CPUs and GPUs to compete with Nvidia. Before that, AMD had reportedly collaborated with Microsoft on AI-specific chips. Build networks and conquer!

Intel, meanwhile, is investing heavily in new factories. It plans to invest up to $4.6 billion in a new semiconductor assembly and test facility in Poland, as part of a larger initiative to build chip capacity in Europe. Additionally, Intel is set to spend $25 billion on a new factory in Israel.

While chipmakers try to keep up with growing demand, Nat Friedman, former CEO of GitHub, and investor Daniel Gross have created the Andromeda Cluster, an AI cloud service that offers computing power to startups facing GPU shortages. The service relies on 2,512 Nvidia H100 GPUs.

OpenAI delivers

No matter what, OpenAI keeps shipping updates to the community. Yesterday, it announced more steerable versions of gpt-4 and gpt-3.5-turbo, function calling in the API, a longer-context version of gpt-3.5-turbo, and lower prices, including for gpt-3.5-turbo.

Function calling is an especially interesting development. It works like a bridge between GPT models and external tools or APIs: developers describe their functions to the model, and the model can reply with a structured JSON object containing the arguments needed to call one of them (the API does not execute the function itself; the developer's code does). Information and instructions can then flow between the language model and the specialized functionality of external systems. That update makes GPT way more powerful.
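Here is a minimal sketch of that round trip, assuming the request and response shapes OpenAI documented for the `functions` parameter of the Chat Completions API at launch (newer API versions use `tools` instead). `get_current_weather` is a hypothetical stub, and the model's reply is mocked, so no API key or network call is involved.

```python
import json

def get_current_weather(location, unit="celsius"):
    """Hypothetical local function; a real one would call a weather service."""
    return {"location": location, "temperature": 22, "unit": unit}

# Function description as a JSON Schema, in the format the `functions` parameter expects.
FUNCTIONS = [{
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City, e.g. Boston, MA"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}]

def build_request(user_message):
    """Request body for a Chat Completions call (actually sending it needs an API key)."""
    return {
        "model": "gpt-3.5-turbo-0613",
        "messages": [{"role": "user", "content": user_message}],
        "functions": FUNCTIONS,
        "function_call": "auto",  # let the model decide whether to call a function
    }

def handle_message(message, registry):
    """If the model requested a function call, run it and build the follow-up message."""
    call = message.get("function_call")
    if call is None:
        return None  # a plain text answer, nothing to execute
    # `arguments` is a JSON string written by the model, so parse (and ideally validate) it.
    args = json.loads(call["arguments"])
    result = registry[call["name"]](**args)
    # The result goes back as a "function" message so the model can phrase the final answer.
    return {"role": "function", "name": call["name"], "content": json.dumps(result)}

if __name__ == "__main__":
    # A reply shaped like the API's, mocked instead of a live call.
    mocked = {"role": "assistant", "content": None,
              "function_call": {"name": "get_current_weather",
                                "arguments": '{"location": "Boston, MA"}'}}
    print(handle_message(mocked, {"get_current_weather": get_current_weather}))
```

Keeping an explicit `registry` of allowed functions means the model can only trigger code the developer has deliberately exposed.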

Money

Meanwhile, Microsoft, OpenAI's partner, revealed that its next-generation AI business is expected to be the company's fastest-growing segment. Microsoft's CFO announced that it could generate more than $10 billion in annual revenue from AI usage on its Azure cloud and OpenAI's models, the same amount Microsoft invested in OpenAI this year.

Money is also on McKinsey's mind, of course. According to its report, generative AI could add up to $4.4 trillion per year to the global economy. McKinsey also claims that these changes "will challenge the attainment of multiyear degree credentials," implying that knowledge workers may be the most likely to face career setbacks.

A different opinion comes from Larry Fink, CEO of BlackRock, the world's largest asset manager. He thinks that "the collapse of productivity has been a central issue in the global economy. AI has the huge potential to increase productivity and transform margins across sectors. It may be the technology that can bring down inflation." The Tokyo government seems to align with that vision, stating that ChatGPT could save them at least 10 minutes a day. In Japan, every second counts.

EU AI Act

Cool research and educational materials

Orca is a 13-billion-parameter model developed by Microsoft researchers that learns to imitate the step-by-step reasoning of larger models such as GPT-4, using their explanation traces as training data with minimal human intervention. The findings highlight the potential of learning from step-by-step explanations to advance AI systems and natural language processing.

Fascinating research from Google, Cornell University, and UC Berkeley introduces a new algorithm for estimating the dense, long-range motion of every pixel in a video: Tracking Everything Everywhere All at Once.

Researchers from Hugging Face, OpenAI, Cohere, AI2, and a few universities joined forces to write "Evaluating the Social Impact of Generative AI Systems in Systems and Society."

Interviews with people who you are not sure you can trust

Microsoft’s CEO Satya Nadella: https://www.wired.com/story/microsofts-satya-nadella-is-betting-everything-on-ai

Palantir CTO Shyam Sankar and Head of Global Commercial Ted Mabrey: https://stratechery.com/2023/an-interview-with-palantir-cto-shyam-sankar-and-head-of-global-commercial-ted-mabrey

Thank you for reading, please feel free to share with your friends and colleagues. Every referral will eventually lead to some great gifts 🤍