As we all know, it is difficult to make predictions, especially about the future. Still, it’s one of the most popular games at the end of the year. I like a quote from Antoine de Saint-Exupéry, who once said, ‘As for the future, your task is not to foresee it, but to enable it.’

So, I believe by choosing the right predictions, we enable the future the way we’d like it to be. Last week, Hugging Face’s CEO Clement Delangue started with his predictions:

As if echoing the first prediction, rumors surfaced about Stability AI’s financial problems and a potential sale. Additionally, companies like Character AI, with overblown valuations exceeding $1 billion and unsustainable business models, are becoming acquisition targets.

BUT, what we really want to do in today’s newsletter is to encourage YOU to send us your predictions. The most compelling ones, along with the names of their authors and companies, will be featured next Monday alongside our insights on what the AI industry is poised to enable in 2024.

→ REPLY TO THIS EMAIL WITH YOUR PREDICTIONS ←

News from The Usual Suspects ©

Hugging Face (again)

Last week, Hugging Face organized its first AI media briefing for a few media outlets, where, in a Q&A session, we could ask Clement Delangue, Margaret Mitchell, and Irene Solaiman any questions.

About time series, Clement Delangue said: “Recently, a generative model for time series, Time GPT, has been launched, and we're witnessing a growing number of startups exploring this area. What's particularly exciting is the potential to integrate time series with other modalities. We've become much better at it, so perhaps we can combine time series with text data, which could lead to groundbreaking developments. For instance, consider fraud detection, a critical area for many businesses, including financial companies like Stripe. If we can blend predictive time series data with textual information, such as messages scammers write when attempting fraud, we might create a system that surpasses the previous generation by tenfold. I believe the financial sector will be greatly impacted by this.”

Another point concerned the AI industry being at a crucial juncture where financial, environmental, and technological sustainability are becoming increasingly interlinked. Companies and stakeholders in the AI field are therefore urged to take more holistic and sustainable approaches to AI development and deployment, balancing the pursuit of profit with long-term viability and responsibility. One of the challenges is the sustainability of cloud-based AI models: the economic viability of maintaining and scaling them is under scrutiny, especially given their heavy reliance on cloud infrastructure, which can be both costly and resource-intensive.

The term "cloud money laundering" metaphorically describes the situation where AI investments are substantial but the returns are not always clear or immediate.

Additional read: The study "Power Hungry Processing: Watts Driving the Cost of AI Deployment?" shows multi-purpose ML models have higher energy and carbon costs than task-specific ones, urging careful utility evaluation due to environmental impact. For example, an image generation model like stable-diffusion-xl-base-1.0 generates a staggering 1,594 grams of CO2 for 1,000 inferences. This amount is roughly equivalent to the carbon emissions from driving an average gasoline-powered passenger vehicle for 4.1 miles.
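To make those numbers easier to picture, here is a minimal back-of-the-envelope sketch in Python. It uses only the two figures quoted above (1,594 grams of CO2 per 1,000 inferences and the 4.1-mile equivalence); the function names and the one-million-images-per-day volume are hypothetical and purely illustrative, not from the study.

```python
# Figures quoted above from the "Power Hungry Processing" study summary.
CO2_GRAMS_PER_1000_INFERENCES = 1594.0
EQUIVALENT_MILES = 4.1


def grams_per_inference(total_grams: float, inferences: int = 1000) -> float:
    """Average CO2 (grams) attributed to a single inference."""
    return total_grams / inferences


def implied_grams_per_mile(total_grams: float, miles: float) -> float:
    """CO2 per mile implied by the car comparison in the text."""
    return total_grams / miles


per_image = grams_per_inference(CO2_GRAMS_PER_1000_INFERENCES)
per_mile = implied_grams_per_mile(CO2_GRAMS_PER_1000_INFERENCES, EQUIVALENT_MILES)

# Hypothetical volume, chosen only for illustration (not from the study).
HYPOTHETICAL_IMAGES_PER_DAY = 1_000_000
daily_kg = per_image * HYPOTHETICAL_IMAGES_PER_DAY / 1000

print(f"{per_image:.3f} g CO2 per generated image")
print(f"{per_mile:.0f} g CO2 per mile implied by the comparison")
print(f"{daily_kg:.0f} kg CO2 per day at the hypothetical volume")
```

So a single generated image works out to about 1.6 grams of CO2, and the comparison implies a car emitting a little under 400 grams per mile. The per-image figure looks small until it is multiplied by real-world usage.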

OpenAI, their all-male Board, and other news

Sam Altman is back, and the new board 'is working very hard on the extremely important task of building out a board of diverse perspectives.' You might notice, even if you are not very observant, that there are zero (0) women on the board. It made me curious to see who is on, let's say, Anthropic's board. Well, no one. However, they have a long-term benefit trust (LTBT) that will choose board members over time. Currently, the trust has 5 members: four men and one woman. All of them are tightly connected with the Effective Altruism (EA) movement. So, if we speak about AI risks and safety, shouldn't we REALLY consider diversity? And not only in gender, but also a plurality of views? Some checks and balances? Agh.

I loved the directness of Margaret Mitchell, Chief Ethics Scientist at HF, when she answered my questions about EA: “One of the fundamental issues I have with EA is that it seems to incentivize sociopathic behavior where looking your neighbor in the eye does not stimulate empathy or does not stimulate the sense of being with your fellow person. That creates this psychological sense where no one really matters that much to you. That treating people like that then makes them feel more negative, which then has a further domino effect of them treating other people more negatively.”

OpenAI's $51 million purchase of AI chips from Rain, a company Sam Altman personally invested in, blurs professional and personal interests. The deal highlights investment in AI hardware amid global GPU shortages, and it may face delays from a US security review.

OpenAI delays its custom GPT store launch to early 2024 amid internal issues, including CEO Sam Altman's brief ouster. The store, for user-created and monetized GPTs, is being refined based on feedback.

Turing Post is a reader-supported publication. To receive new posts, have access to the archive, and support our work, become a paid subscriber →

Amazon re:Invent

At the AWS re:Invent conference, Amazon Web Services (AWS) made a series of significant announcements in Generative AI (Gen AI) to challenge rivals like Microsoft Azure and Google Cloud. Key highlights include the expansion of Large Language Model (LLM) choices through its Bedrock service, the introduction of the Amazon Q assistant, and enhanced multi-modal and text generation capabilities with models like Titan Text Lite, Titan Text Express, and Titan Image Generator. AWS is also focusing on integrating various databases and simplifying retrieval-augmented generation (RAG) for ease of use with proprietary data. These moves signal AWS's aggressive push to lead in the Gen AI space, offering diverse AI model support and advanced tools for enterprise customers. TheSequence thinks AWS’ GenAI strategy starts to look a lot like Microsoft’s.

Meta is 10

Meta's AI lab marks its 10th anniversary with innovative projects like Ego-Exo4D, a new suite of AI language translation models named "Seamless", and Audiobox, aiming to enhance AI in augmented reality and audio generation. Chief scientist Yann LeCun reflects on AI's mainstream emergence and Meta's role amidst controversies and its focus on generative AI. The lab's shift to generative AI research and open-source advocacy, despite industry debates, aligns with Meta's significant investment in the metaverse. Amid challenges of public perception and internal dynamics, Meta's AI initiatives underscore its commitment to advancing AI technology, while grappling with safety, content moderation, and ethical concerns.

Twitter Library

Other news, categorized for your convenience

But it seems that OpenAI fixed it. That’s my recent attempt:

Advancements in Large Language Models (LLMs):

Perplexity AI (PPLX-7B and PPLX-70B Models): Internet knowledge access in LLMs for up-to-date responses →read more

ExploreLLM: Enhanced user experience in complex tasks through structured interactions →read more

TaskWeaver by Microsoft: Autonomous agents using LLMs for complex data analytics →read more

Starling-7B: Open LLM focusing on helpfulness and safety →read more

ChatGPT’s One-year Anniversary Review: Progress and state of open-source LLMs →read more

Innovative Model Architectures and Efficiency:

Mamba Sequence Model Architecture: Efficient handling of long sequences in diverse modalities →read more

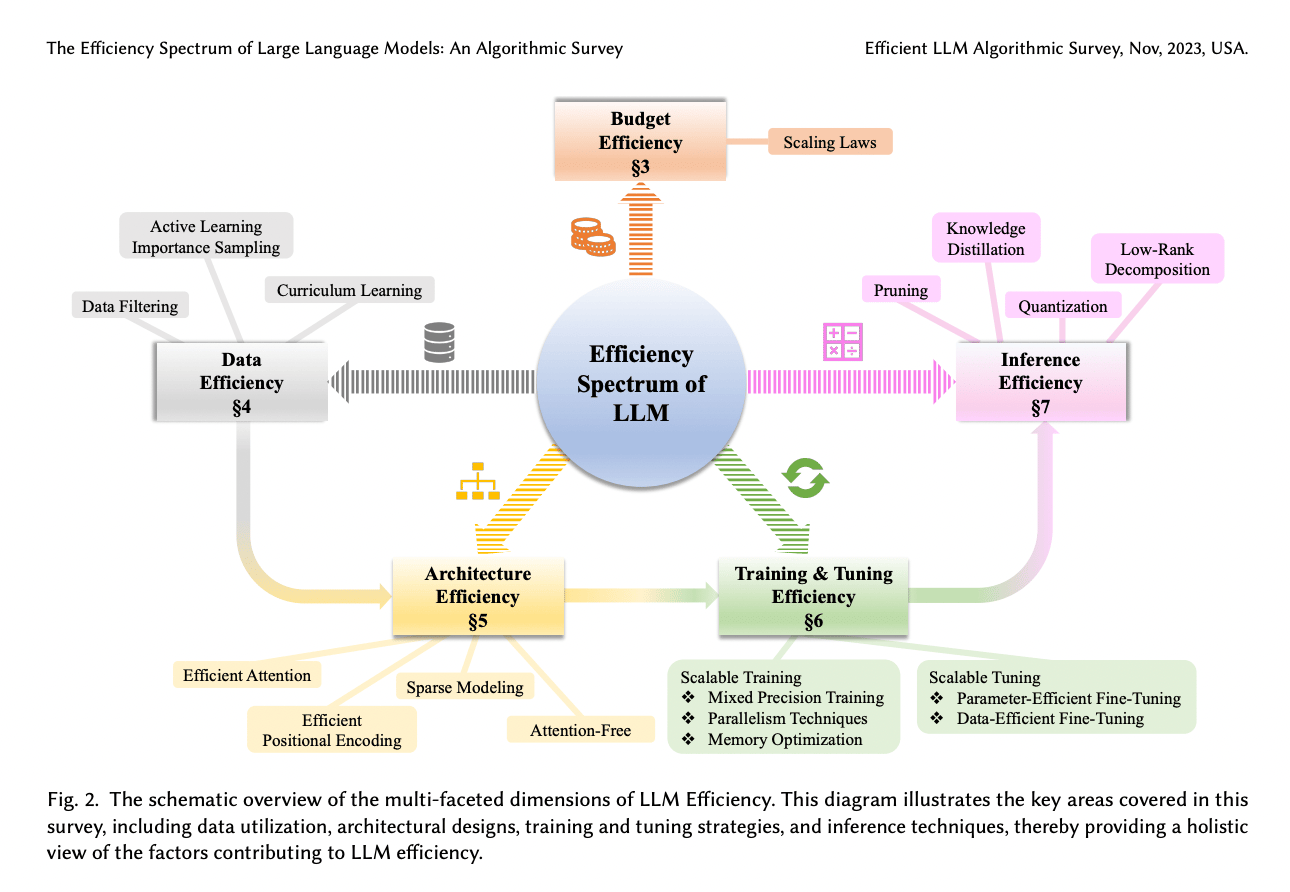

LLM Efficiency Advancements Review: Comprehensive review of techniques improving LLM efficiency →read more

Diffusion State Space Model (DIFFUSSM): High-resolution image generation with FLOP-efficient architecture →read more

Multimodal and Specialized AI Systems:

MMMU Benchmark: Evaluation tool for multimodal models in multi-discipline tasks →read more

UniIR: Universal multimodal information retriever for diverse retrieval tasks →read more

GNoME for Inorganic Materials Discovery: Data-driven discovery of 2.2 million new crystal structures, including 380,000 stable materials that could power future technologies →read more

AI in Language Translation and Image Generation:

Seamless AI Language Translation Models: Preserving expressive elements in speech for real-time translation →read more

Adversarial Diffusion Distillation (ADD): Single-step, real-time image synthesis with foundation models →read more

We are reading/watching/listening to:

The Inside Story of Microsoft’s Partnership with OpenAI by The New Yorker

My techno-optimism by Vitalik Buterin

A Conversation with Andrew Ng by Exponential View (read or watch)

Crucible moments with Jensen Huang from Nvidia by Sequoia

Thank you for reading! Please feel free to share with your friends and colleagues. In the next couple of weeks, we are announcing our referral program 🤍

Another week with fascinating innovations! We call this overview “Froth on the Daydream” - or simply, FOD. It’s a reference to the surrealistic and experimental novel by Boris Vian – after all, AI is experimental and feels quite surrealistic, and a lot of writing on this topic is just a froth on the daydream.
