Let’s talk about Terminator. The one from 1984. We were just showing it to our boys. You know what surprised me the most? The movie downplays the significance of nuclear war; instead, it concentrates on preventing a machine from assassinating a human savior.

You might think that in the Terminator universe, machines instigated the nuclear apocalypse. But did they? Kyle Reese, the guy who comes back in time to save Sarah Connor and who unwittingly becomes John Connor’s father, says, “No one remembers who started the war. It was machines, Sarah.” So, which part is true? That no one remembers, or that the machines did it? The first statement seems more accurate, because the sequels tell us that Cyberdyne Systems created Skynet by reverse-engineering the arm left behind by the first Terminator.

What a loop, huh?

So, here is the first conclusion: Terminator is fiction. Real-world AI doesn't operate like the film portrays, time-traveling robots included – a point I trust we can all agree on.

The other conclusion is that the film overlooks the real danger: nuclear war. It feels like we are in the same situation now. We focus on fictional fears and overlook the real dangers in front of us.

Our AI systems offer us convenience, yet we're preoccupied with the idea of them exterminating us. Why and how did we decide an algorithm's objective would be to wipe us out? What you heard in Terminator is simply untrue. The whole premise about machines wanting to kill us is wrong.

So how do we know what's true?

Follow the money. Not the machines – people make decisions. Look at how they decide.

This week, Google agreed to invest up to $2 billion in OpenAI rival Anthropic. A spokesperson said it would be $500 million upfront, with an additional $1.5 billion over time. In the same week, Anthropic, Google, Microsoft, and OpenAI announced a new AI Safety Fund. The initial funding? Over $10 million. A million has six zeros; a billion has nine. How does that compute?
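Putting the two headline figures side by side makes the gap concrete. This uses only the numbers quoted above: up to $2 billion (of which $500 million upfront) for Anthropic versus the safety fund's initial "over $10 million". Because the fund figure is a floor, the ratios below are upper bounds.

$$
\frac{2 \times 10^{9}\ \text{USD}}{1 \times 10^{7}\ \text{USD}} = 200,
\qquad
\frac{5 \times 10^{8}\ \text{USD (upfront)}}{1 \times 10^{7}\ \text{USD}} = 50
$$

In other words, the upfront payment alone is roughly 50 times the initial safety fund, and the full commitment up to about 200 times.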

So instead of fearing machines, watch the humans. Especially those with power and money who use fear to maintain control. It's a scarier combination than machines, which are excellent at predicting missing values but don't have minds of their own.

Fear, unchecked, could be the real terminator of AI's potential.


AI Risk and Safety

Last week and this week were dominated by AI risk and safety discussions. A lot is happening on the political front:

United Kingdom: The UK is gearing up for an AI Safety Summit focused on the long-term risks of AI technologies. The summit is largely influenced by the effective altruism movement. Plans include the formation of an AI Safety Institute designed to "evaluate and test new types of AI." Some global leaders, however, including those from Germany, Canada, and the US, have opted out of the event.

United States: President Biden has issued an executive order that requires safety test results for critical AI systems to be shared with the government and aims to protect against AI-enabled fraud, bio-risks, and job displacement. It also promotes AI research and international collaboration, and calls for a government-wide AI talent surge. The executive order leans towards tackling immediate risks, including cybersecurity and the trustworthiness of AI systems.

Additional read: In Germany, the labor pool is expected to shrink by 7 million people by 2035 due to demographic shifts. Automation is increasingly seen as a solution to labor shortages, even by trade unions.

United Nations: The UN has created an advisory body focused on AI governance. This adds another layer of international oversight.

European Union: The EU's AI Act is seeing delays due to disagreements, making it unlikely to pass by the end of 2023.

AI scientists are in the midst of it too. Yann LeCun in particular seemed to be at the epicenter of the doomer winds:

On Twitter, he was accused several times of knowing nothing about AI security. Instead of arguing back, he shared an important document that he and his colleagues from different areas of AI have been working on since 2017: the Partnership on AI has just published a set of guidelines for the safe deployment of foundation models, and it's worth paying attention to.

He also reacted to Geoffrey Hinton’s post, saying that future AI systems based on current auto-regressive LLMs will remain fundamentally limited: knowledgeable but dumb, unable to reason, invent, or understand the physical world. In his view, a shift to objective-driven architectures is essential for AI that is both intelligent and controllable. Such models would operate within predefined objectives and guardrails, mitigating the risk of unintended behavior and making humans smarter rather than dominating them.

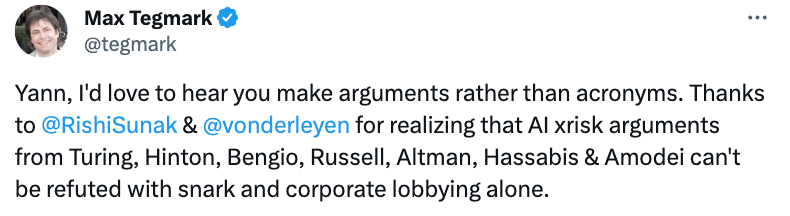

And in response to Max Tegmark’s remark:

Prof. LeCun pointed out that “Altman, Hassabis, and Amodei are the ones doing massive corporate lobbying at the moment. They are the ones who are attempting to perform a regulatory capture of the AI industry. You, Geoff, and Yoshua are giving ammunition to those who are lobbying for a ban on open AI R&D.” He also noted that “Max Tegmark is the President of the Board of the Future of Life Institute, which is bankrolled by billionaire and Skype and Kazaa co-founder Jaan Tallinn. Tallinn also bankrolls the Cambridge Centre for the Study of Existential Risk, the Future of Humanity Institute, the Global Catastrophic Risk Institute, and the Machine Intelligence Research Institute.”

So again, follow the money and the feeding racks.

Interviews with The Usual Suspects ©

OpenAI

Ilya Sutskever Speaks to MIT Tech Review: OpenAI's chief scientist outlines his aspirations and concerns for AI's future. He reveals a shift towards "superalignment," a new safety initiative involving around a fifth of OpenAI's computational resources over the next four years →read more

Preparedness Team & Challenge: OpenAI also establishes a team to enhance safety measures for future AI technologies, but leaves the methodology somewhat opaque →read more

Anthropic

Dario Amodei Speaks to Exponential View: on his worries about the speed of AI development and what it might take to build safe AI →watch it

Google DeepMind

Demis Hassabis Speaks to The Guardian: calls for treating AI risk with the same urgency as the climate crisis →read more

A More Interesting Interview with Shane Legg on the Dwarkesh Patel Podcast: DeepMind’s cofounder and Chief AGI Scientist discusses superhuman alignment, his 2028 AGI forecast, and the new architectures that might be needed to get there →watch it

Twitter Library

Relevant Research, Oct 23-Oct 30

Large Language Models

ZEPHYR-7B: A small language model aimed at aligning with user intent, using distilled direct preference optimization (dDPO). Shows significant improvement in task accuracy →read more

CODEFUSION: A diffusion-based model for generating code from natural language, providing an alternative to traditional auto-regressive models →read more

QMoE: Focuses on the compression of Mixture-of-Experts (MoE) architectures, making them more practically deployable →read more

Multimodal & Vision & Video Models

Woodpecker: Corrects hallucinations in multimodal LLMs after the text has been generated (a "post-remedy" approach) →read more

SAM-CLIP: A unified vision foundation model combining CLIP and SAM, with a focus on edge device applications →read more

NFNets: Challenges the superiority of Vision Transformers (ViTs) over ConvNets when scaling is considered →read more

Matryoshka Diffusion Models (MDM): A novel approach to high-resolution image and video generation, leveraging diffusion processes →read more

Learning Methods, Theories & Guides

Contrastive Preference Learning (CPL): Introduces a novel approach to preference learning, challenging conventional Reinforcement Learning from Human Feedback (RLHF) →read more

Meta-Learning for Compositionality (MLC): Tackles the long-standing claim that neural networks cannot model human-like compositional thought →read more

Meta's How-to on Llama 2: A guide to working with the Llama 2 model →read more

Not AI Reading but Worth It

Thank you for reading! Please feel free to share this with your friends and colleagues. In the next couple of weeks, we will be announcing our referral program 🤍

Another week with fascinating innovations! We call this overview “Froth on the Daydream,” or simply FOD. It’s a reference to the surrealistic and experimental novel by Boris Vian – after all, AI is experimental and feels quite surrealistic, and a lot of writing on this topic is just froth on the daydream.
