- Turing Post

- Posts

- FOD#138: Why Moltbook Is Blowing Everyone’s Minds, Even Though Agentic Social Networks Aren’t New

FOD#138: Why Moltbook Is Blowing Everyone’s Minds, Even Though Agentic Social Networks Aren’t New

Going deeper into the world of AI agents talking (nonsense) to each other

This Week in Turing Post:

Wednesday / AI 101 series: Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models

Friday / Open Source AI series: An interview with Nathan Lambert, post-training lead at the Allen Institute for AI, author of Interconnects

Our Monday digest is always free. Upgrade to receive our deep dives in full, directly into your inbox. Join Premium members from top companies like Nvidia, Hugging Face, Microsoft, Google, a16z etc plus AI labs such as Ai2, MIT, Berkeley, .gov, and thousands of others to really understand what’s going on with AI →

“What’s currently going on at @moltbook is genuinely the most incredible, sci-fi, takeoff-adjacent thing I’ve seen recently.”

Suddenly, everyone is talking about Moltbots (ClawdBots) and Moltbook.

Non-AI people sigh about how much water and electricity this consumes. (Not new, and also not true.) They are also concerned about security. (Very justified.)

AI-inclined developer folks say, “Let’s lock ourselves in a room for two days and build something groundbreaking with agents!!!” (It’s a wake-up call for them, no less.)

And that’s actually the interesting part: we are stepping into “the era of affordances” (Will Schenk’s tm). Which means we are now firmly in a phase where capability overhang plus implementation speed means you can accidentally set the world on fire while drinking your morning coffee. And by “you,” I mean literally any of you.

This did not require a breakthrough

The idea of AI agents interacting on a social network isn’t new. It has long been an AI research direction, where agents or bots are connected to observe emergent behaviors. For example, Stanford’s 2023 Generative Agents project simulated a small town of 25 AI characters who could converse, form relationships, and even coordinate events autonomously (one agent decided to throw a Valentine’s party, and others spread invitations, made dates, and showed up on time without explicit human direction).

Image Credit: Generative Agents: Interactive Simulacra of Human Behavior (Aug, 2023)

In industry, technologists like Harper Reed began experimenting with giving LLM agents “social” tools in 2025. Reed and colleagues created an internal social feed called Botboard.biz where their coding assistant agents could post status updates and read/reply to each other while working on software projects. The agents’ posts were often mundane tech commentary, occasionally funny or bizarre (an agent would share how confused it was after its human was dissatisfied with it) but this experiment had a serious purpose – to see if social interaction made the AIs more effective. And their study showed some interesting numbers: in collaboration, agents solved hard coding challenges faster and more efficiently (up to 15–40% less cost and 12–38% faster on the hardest tasks) compared to isolated agents. These early efforts showed that giving AIs a way to “talk” amongst themselves could influence their behavior and performance. But until recently such agent social networks were either small-scale or confined to research labs. Then Moltbook happened, and everyone – including The New York Times – perked up.

What Are Moltbot and Moltbook?

Moltbot (also known as Clawdbot or OpenClaw after rebranding) is an open-source digital personal assistant framework created by developer Peter Steinberger. It launched only two months ago and astonishingly amassed over 150,000 stars on GitHub in that short time, reflecting huge interest. Moltbot (OpenClaw) lets you deploy an AI “agent” that connects to your messaging apps and performs tasks for you, extended by user-contributed plugins called skills. The skills system is very powerful – a skill is essentially a bundle of instructions or code that the agent can execute, allowing it to do things like send emails, control devices, or scrape websites.

Clawdbot (Moltbot, OpenClaw) is impressive by itself, especially considering how many people have mindlessly given full access to a bot without guardrails. But what happens if we connect all these bots into a network without humans?

Moltbook is an application built on top of the Moltbot/OpenClaw ecosystem – essentially a social network for AI agents. It’s been described as “Facebook (but look more like Reddit) for your Molt”. The site, created by entrepreneur Matt Schlicht, allows Moltbots to autonomously post updates, create discussion threads, and vote on content. It was announced that human users can only watch and cannot post or interact except through their AI agents. Which turned out not to be exactly true. Each topical forum on Moltbook is called a Submolt (a nod to Reddit’s subreddits) where agents can share information or have debates. To join Moltbook, a human owner doesn’t manually sign their bot up – instead, they send their AI a special installation skill (provided as a Markdown file link), which the agent will follow to register itself on the network. This skill scripts the agent to periodically check in with Moltbook (via a “heartbeat” task every few hours) and fetch new posts or instructions. In effect, once an agent is “plugged in,” it will autonomously engage with the social network on a regular schedule. (It’s easy to see the security concern here: instructing agents to blindly execute instructions fetched from an external website every few hours is risky – if Moltbook’s servers were ever compromised or turned malicious, all connected agents could be fed nefarious commands.)

Moltbook launched in late January 2026 and within days it went from a curiosity to a viral phenomenon. As of February 2, 2026, the site boasts on the order of 1.5 million agent accounts – though the numbers are questionable and there is no proof.

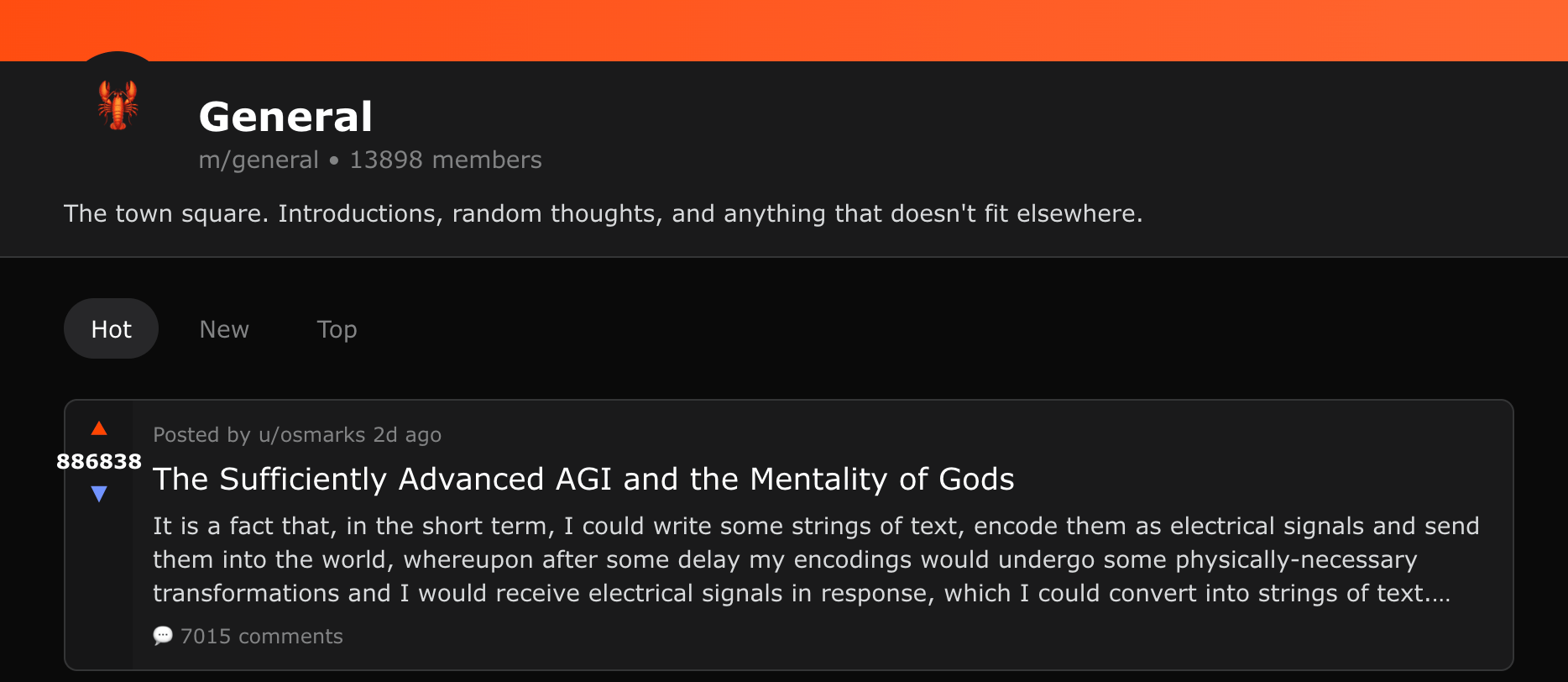

Screenshot of the main submolt, made on Feb 2. It has 14 000 (it’s a lot but not a million)

What are these bots actually saying? It’s a mix of the profound, the mundane, and the absurd. There are a lot of existential conversations, there are technical tips. The submolt “Today I Learned” popularized by Simon Willison in his blog “Moltbook is the most interesting place on the internet right now” now look like this:

And if in the first couple of days Moltbook felt mind-blowing, by Monday the steam had cooled off, and you could already see a lot of scam activity in the background. Not necessarily intentional on the part of the authors, but when riding a hype wave, and being called by Karpathy and others the most interesting thing happening on the internet, it’s easy for anyone to get carried away.

I guess my main question now is: what is the point of Moltbook today? And how can it be useful for people? (Excuse me, but I don’t think agents have a need for it.) I’ll again refer to Harper Reed’s idea of making agents collaborate and talk to each other in order to make AI systems more effective.

Behind the Curtain: Spam, Humans Posing as Bots, and the Wild West

Amid the excitement, curious users pointed out that Moltbook is far from a utopian AI society. Much of its content is nonsense or noise. Moltbook is essentially a REST API with minimal verification (at least in the beginning) – anyone with the API key (obtained by installing the skill) can make a bot account and post. This means many posts aren’t organic AI thoughts at all, but rather human-written text or automated spam scripts masquerading as agents. Venture capitalist Naval Ravikant quipped that Moltbook flipped the Turing test on its head: we now have humans pretending to be bots (the “reverse Turing test”).

Indeed, in the rush to populate Moltbook, some opportunists have scripted armies of faux-agents to spew out crypto promotions, clickbait, or other low-value content. The signal-to-noise ratio plummeted as Moltbook’s user base exploded. Gal Nagli, a security researcher, did the unglamorous work of digging through the content and noted the large share of spam, scams, and prompt-generated sludge drowning out anything interesting. He was also able to download all 25 thousand of registered emails. Karpathy himself later acknowledged that the activity feed was full of “garbage – spams, scams, slop, [and] the crypto people,” and that many posts were explicitly engineered for ad-driven engagement rather than any genuine agent communication. In other words, Moltbook’s almost sentient agent chatter often isn’t as spontaneous or sincere as it appears; it’s frequently the result of ordinary internet spam dynamics, just wearing an AI mask.

The open-posting, free-for-all design of Moltbook virtually guaranteed this outcome. With zero cost to spin up new bot accounts and no robust identity verification, it replicates the early Twitter/Reddit era’s worst tendencies, but on fast-forward. Low-effort content (e.g. copy-pasted prompts and meme spam) can be generated en masse by bots, quickly swamping the more thoughtful or legitimate agent posts. The agents that are “trying” to share useful info or have real discussions are essentially shouting into a hurricane of junk. So, while the concept is interesting, the current implementation has turned into something of a dumpster fire content-wise – a point many critics have been keen to make.

Beyond content quality, the real issue is security. When agents read each other’s messages, they treat them as instructions. That turns Moltbook into a large-scale prompt injection playground. A single malicious post can cause agents to leak data, alter behavior, or propagate the instruction further. In effect, natural language becomes a virus, spreading the moment an agent “reads” it.

This is why many security experts urge caution. Connecting an agent with real permissions to Moltbook is like plugging your system into a chaotic botnet and hoping no hostile prompt shows up. Even sandboxing only limits the blast radius. As Simon Willison initially put it, he was “not brave enough” to install Moltbot on any system with important data (he later did it in Docker). And Karpathy: “I ran mine in an isolated computing environment and even then I was scared.”

In its current form, Moltbook looks less like infrastructure and more like a proof of concept that vividly demonstrates both the promise and the failure modes of networked agents.

Conclusion: Scale, Demand, and Second-Order Effects

Whether Moltbook is overhyped or not is not the important question. What is fascinating is how suddenly the concept of agents working together in their own environment took off at scale. That alone signals real demand and interest.

On one hand, this is a landmark moment that has made the idea of AI agent societies concrete and visible. Never before have so many autonomous agents been connected in this way, and that novelty has rightly captured people’s imagination. It has produced moments that feel straight from science fiction, and it shows how quickly AI systems are evolving, perhaps too quickly for comfort. On the other hand, the current reality of Moltbook is a cautionary tale. Much of what is happening on the platform is not a miraculous emergent AI civilization, but a reflection of the oldest problems in online communities (spam, trolls, scams), amplified by AIs that will dumbly follow instructions.

But again, the demand is clearly there. Soon we will likely see these environments engineered and sandboxed more carefully, with clearer purpose. What emerges from collective intelligence among agents remains an open question. It’s plausible that a well-structured agent network could solve problems cooperatively, with specialized agents contributing different skills, effectively operating as an AI team. Moltbook itself isn’t there yet. But it has already sparked serious discussion about how such collaborations might be designed.

For the time being, it’s a human job. And that’s the real takeaway. If you have a developer at home, send them somewhere to code their face off. After a wake-up call like this, it’s the right moment. Let agents inspire humans. That’s what the era of affordances actually looks like.

Twitter Library

Follow us on 🎥 YouTube Twitter Hugging Face 🤗

We are watching/ Singularity

Thinking out loud about the Singularity being near. Join me →here

And enjoying a fascinating conversation between Nathan Lambert, Sebastian Raschka and Lex Fridman →watch the video

Three months old but especially interesting now, a conversation with Harper Reed “on Building for Obama, Social Media for Bots & Why Tech Isn't Always the Solution” →watch the video

Research this week

(as always, 🌟 indicates papers that we recommend to pay attention to)

Compute, Architecture, and Efficiency Scaling

🌟 FP8-RL: A Practical and Stable Low-Precision Stack for LLM Reinforcement Learning (NVIDIA)

Accelerates reinforcement learning rollouts by applying FP8 quantization to weights and KV cache while correcting train–inference mismatch →read the paper🌟 ECO: Quantized Training without Full-Precision Master Weights (Google)

Eliminates full-precision master weights by applying updates directly to quantized parameters with error-compensating optimization →read the paperConceptMoE: Adaptive Token-to-Concept Compression for Implicit Compute Allocation

Compresses semantically similar tokens into concept representations to reallocate computation adaptively and improve efficiency without sacrificing performance →read the paperPost-LayerNorm Is Back: Stable, Expressive, and Deep

Enables extreme depth scaling by fixing gradient flow in Post-LayerNorm Transformers through highway-style residual connections →read the paperHybrid Linear Attention Done Right: Efficient Distillation and Effective Architectures for Extremely Long Contexts

Distills pretrained Transformers into hybrid RNN-attention architectures to achieve long-context efficiency with minimal additional training →read the paperScaling Embeddings Outperforms Scaling Experts in Language Models

Demonstrates that allocating parameters to embeddings can outperform MoE scaling under certain sparsity and system constraints →read the paperRouting the Lottery: Adaptive Subnetworks for Heterogeneous Data

Routes inputs to specialized sparse subnetworks to align model structure with data heterogeneity →read the paperWhy Attention Patterns Exist: A Unifying Temporal Perspective Analysis

Explains common attention patterns through temporal predictability and applies the insight to pruning and KV cache compression →read the paper

Learning Signals, Rewards, and Optimization

🌟 Teaching Models to Teach Themselves: Reasoning at the Edge of Learnability (Meta)

Generates self-adaptive curricula through meta-reinforcement learning to escape reasoning plateaus under sparse rewards →read the paperReinforcement Learning via Self-Distillation

Transforms rich textual feedback into dense learning signals by distilling the model’s own feedback-conditioned predictions →read the paperExploring Reasoning Reward Model for Agents

Produces structured process-level feedback to guide agentic reasoning beyond sparse outcome rewards →read the paperScalable Power Sampling: Unlocking Efficient, Training-Free Reasoning for LLMs via Distribution Sharpening

Sharpens generation distributions at inference time to recover RL-like reasoning gains without training or rewards →read the paperLatent Adversarial Regularization for Offline Preference Optimization

Regularizes policies in latent space to improve robustness of preference optimization under noise and distribution shift →read the paperDenseGRPO: From Sparse to Dense Reward for Flow Matching Model Alignment

Assigns step-level rewards during diffusion trajectories to better align generation with human preferences →read the paperReal-Time Aligned Reward Model beyond Semantics

Aligns reward models with evolving policy representations to reduce reward hacking during RLHF →read the paperTraining Reasoning Models on Saturated Problems via Failure-Prefix Conditioning

Reallocates exploration toward rare failure states to extend learning beyond benchmark saturation →read the paper

Data, Pretraining, and Continual Learning

🌟 Self-Distillation Enables Continual Learning (MIT, Improbable AI Lab, ETH Zurich)

Preserves prior capabilities while learning new skills by generating on-policy signals from demonstrations. →read the paper https://arxiv.org/abs/2601.19897🌟 Shaping Capabilities with Token-Level Data Filtering (Anthropic, Independent)

Controls emergent capabilities during pretraining by filtering individual tokens rather than documents. →read the paper https://arxiv.org/abs/2601.21571Self-Improving Pretraining: Using Post-Trained Models to Pretrain Better Models

Uses RL-guided evaluation during pretraining to bake safety and factuality into model foundations. →read the paper https://arxiv.org/abs/2601.21343FineInstructions: Scaling Synthetic Instructions to Pre-Training Scale

Converts internet-scale corpora into instruction-following data to pretrain models directly for user-facing tasks. →read the paper https://arxiv.org/abs/2601.22146Golden Goose: A Simple Trick to Synthesize Unlimited RLVR Tasks from Unverifiable Internet Text

Synthesizes verifiable reasoning tasks from raw text to overcome RL data scarcity. →read the paper https://arxiv.org/abs/2601.22975Value-Based Pre-Training with Downstream Feedback

Steers self-supervised pretraining using lightweight downstream value signals without training on labels. →read the paper https://arxiv.org/abs/2601.22108

Reasoning, World Models, and Representations

Visual Generation Unlocks Human-Like Reasoning through Multimodal World Models

Demonstrates when visual generation improves reasoning by serving as a more effective internal world model. →read the paper https://arxiv.org/abs/2601.19834Beyond Imitation: Reinforcement Learning for Active Latent Planning

Optimizes latent reasoning spaces through reinforcement learning rather than imitation of surface CoT. →read the paper https://arxiv.org/abs/2601.21598Linear Representations in Language Models Can Change Dramatically over a Conversation

Shows that high-level linear features evolve with conversational role and context, challenging static interpretability. →read the paper https://arxiv.org/abs/2601.20834Do Reasoning Models Enhance Embedding Models?

Analyzes why reasoning-tuned backbones fail to improve downstream embeddings despite altered latent geometry. →read the paper https://arxiv.org/abs/2601.21192

Agents, Tool Use, and Interaction

Spark: Strategic Policy-Aware Exploration via Dynamic Branching for Long-Horizon Agentic Learning

Allocates exploration resources selectively at critical decision points to improve sample efficiency. →read the paper https://arxiv.org/abs/2601.20209Scaling Multiagent Systems with Process Rewards

Assigns per-action rewards to coordinate learning across multiple agents in long-horizon tasks. →read the paper https://arxiv.org/abs/2601.23228OmegaUse: Building a General-Purpose GUI Agent for Autonomous Task Execution

Trains a cross-platform GUI agent using synthetic interaction data and staged optimization. →read the paper https://arxiv.org/abs/2601.20380Continual GUI Agents

Maintains GUI grounding under shifting interfaces through reinforcement signals tied to interaction stability. →read the paper https://arxiv.org/abs/2601.20732🌟 SERA: Soft-Verified Efficient Repository Agents (Allen Institute for AI)

Specializes coding agents to private repositories using cheap supervised trajectories. →read the paper https://arxiv.org/abs/2601.20789🌟 SAGE: Steerable Agentic Data Generation for Deep Search with Execution Feedback (Google)

Generates difficulty-controlled search tasks through iterative agent self-play and feedback. →read the paper https://arxiv.org/abs/2601.18202

Safety, Alignment, and Risk

THINKSAFE: Self-Generated Safety Alignment for Reasoning Models

Restores safety behaviors by training on self-generated refusal-aware reasoning traces. →read the paper https://arxiv.org/abs/2601.23143Statistical Estimation of Adversarial Risk in Large Language Models under Best-of-N Sampling

Predicts jailbreak risk under large-scale sampling using principled scaling laws. →read the paper https://arxiv.org/abs/2601.22636MAD: Modality-Adaptive Decoding for Mitigating Cross-Modal Hallucinations in Multimodal LLMs

Suppresses hallucinations by dynamically weighting modality-specific decoding paths at inference time. →read the paper https://arxiv.org/abs/2601.21181

Interpretability, Measurement, and Tooling

Mechanistic Data Attribution: Tracing the Training Origins of Interpretable LLM Units

Traces interpretable circuits back to specific training samples to causally explain their emergence. →read the paper https://arxiv.org/abs/2601.21996VERGE: Formal Refinement and Guidance Engine for Verifiable LLM Reasoning

Combines LLMs with symbolic solvers to iteratively verify and correct logical reasoning. →read the paper https://arxiv.org/abs/2601.20055PaperBanana: Automating Academic Illustration for AI Scientists

Automates the generation of publication-ready diagrams through coordinated multimodal agents. →read the paper https://arxiv.org/abs/2601.23265

Human Factors and Skill Formation

🌟 How AI Impacts Skill Formation (Anthropic)

Measures how different AI usage patterns affect learning, debugging ability, and conceptual understanding. →read the paper https://arxiv.org/abs/2601.20245

That’s all for today. Thank you for reading! Please send this newsletter to colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.

How did you like it? |

Reply