This Week in Turing Post:

Wednesday / AI 101 series: Your guide to Physical AI

Friday / Interview: Spenser Huang from Nvidia

Our news digest is always free. Upgrade to receive our deep dives in full, directly into your inbox.

A day late, but for good reason – today we are reporting straight from NVIDIA GTC in DC

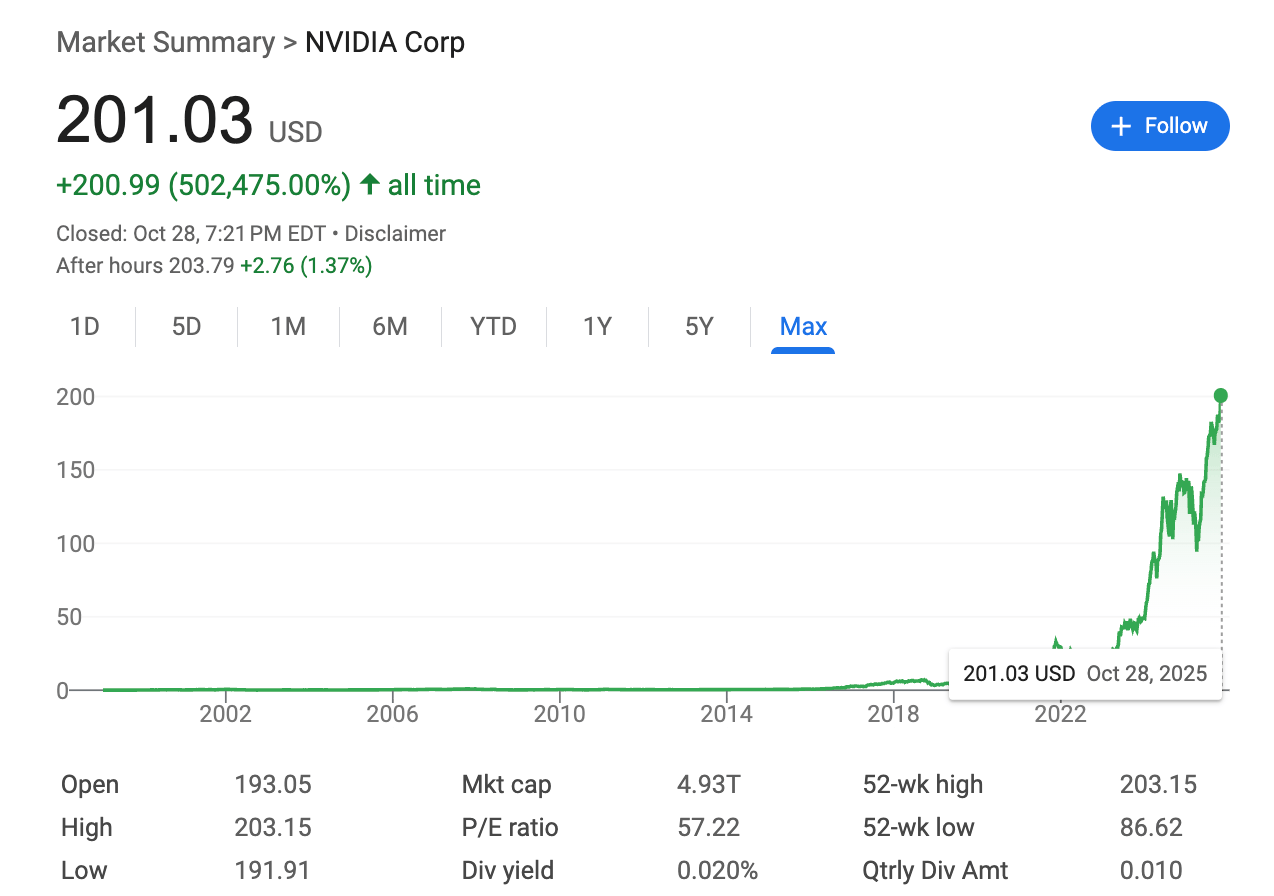

It happened to be my second Jensen Huang keynote this year. The first one was during CES in January. And oh my, has the narrative been upgraded. It was peppered with praise for the current administration and the words “Build in America,” “Made in America,” and finally “Let’s make America great again.” If President Donald Trump had been in the room – and apparently, according to Mr. Huang, he was supposed to be – he would’ve been beaming like a shiny dime. It was an impressive exercise in tightening the knots with the current administration. If you can admire a skill, that was for sure a beautiful policy performance. NVIDIA spoke like a national champion, not a Silicon Valley company. The tone that used to orbit around innovation and scale now anchors itself in sovereignty, state power, and the energy race. The market reacted: +9.54%.

The business opportunities that friendship with Trump opens up for NVIDIA are massive. The reason Trump was not in DC today is because he was visiting Japan and Korea – and he really wanted Jensen Huang to join him. You might ask why. To negotiate a deal with China. Not long ago, Huang bitterly said that NVIDIA’s share in China fell from around 20 percent to zero – a brutal consequence of export controls that cut China off.

Why Trump is reversing his own actions?

Jensen Huang found the right approach. Being asked to bring manufacturing back in February, today (only 9 months later) he announced that Blackwell chips are now manufactured across Arizona, Indiana, Texas, and California – the first time NVIDIA’s flagship silicon is fully produced on US soil. He also highlighted the partnership with the Department of Energy (DoE) to build seven national AI supercomputers placed that hardware inside the country’s scientific core. Even though Nokia is a Finnish company, Jensen introduced the deal with them on 6G as tying American chips back into the wireless stack. And the Foxconn factory in Houston – built as a digital twin in Omniverse – framed reindustrialization as a fusion of robotics and AI.

But of course just that wouldn’t work. Huang laid out the logic of selling chips and software to China in plain terms:

Platforms win by scale. More users make the system better. China has a huge user base that improves US AI platforms through volume and usage frequency.

App ecosystems matter. Like phones need apps, AI hardware needs many models. Letting Chinese developers build and run models on US chips grows the CUDA/model ecosystem and strengthens the architecture globally.

Set the global standard. If American tech is available in China, it’s more likely to become the worldwide default stack – benefiting both US industry and national security.

Developers are the leverage. A large share of the world’s AI researchers are in China; winning their loyalty keeps the American stack ahead.

So is Trump mending the broken ties? That’s what the Secretary of DoE said during the Q&A: “President Trump is a master negotiator. It’s his vision that’s allowing America to unleash energy, lift government restrictions, and enable Jensen and me to have this dialogue today. That’s what’s making this dream of AI leadership in science and defense possible.” He continued with diplomatic optimism: “China is a scientific powerhouse. We have our differences, but also common ground. I’m confident an agreement will come that benefits both nations – and the world.”

Huang understands this game better than most. Through the today’s show it became clear: he’s positioning himself not as the CEO of a chipmaker, not even as a leader of accelerated computing and AI company but as an architect of national infrastructure. Every phrase about “AI factories” and “sovereign compute” is a signal to policymakers that NVIDIA is now part of America’s industrial DNA.

There is one more thing: energy and energy race. I asked Jensen Huang: “We hear a lot about scaling and infrastructure, but not much about the energy behind it. How do solar, wind and other renewables feed into NVIDIA’s vision for AI factories, and how should government support that – given carbon concerns – and given that China may be ahead?”

That was his answer:

The U.S. must expand energy supply to win the AI race. Pro-energy, pro-growth policy is essential for any industrial buildout.

Use every form of energy. AI is important enough that all sources should be deployed. We need all the energy to win.

But the most important is the win on efficiency. Grace Blackwell delivers ~10× the performance of Hopper at ~50% more power, so system-level energy efficiency improves sharply.

Strategy: pair more energy with better efficiency rather than relying on brute-force energy alone.

He didn’t mention that, but his alignment with Trump is rooted exactly there – in energy. His administration is pushing nuclear power as the backbone for America’s “AI energy economy.” He’s signing deals (like the new $80 billion Westinghouse-Japan partnership that he signed just a few hours ago) and easing approvals for new reactors. Nvidia doesn’t build nuclear plants, but its future depends on the kind of dense, continuous power only nuclear can provide. Trump wants to prove America can build again and is betting on nuclear as the backbone of the new AI economy. Huang needs that backbone to keep his AI factories running. For NVIDIA it’s, again, about efficiency, this time thermodynamic.

What a right place and right time to be NVIDIA.

The NVIDIA GTC brought a range of very interesting and industry-shifting announcements – read about them below in the News from the Usual Suspects segment.

🤝 Recommended: NODES 2025 → Where Graphs Meet AI Innovation – streaming live Nov 6

Must see: Andrew Ng and Emil Eifrem open the day with a fireside chat on the future of AI. After that, choose from 140+ community sessions, packed with real-world use cases in GraphRAG, context engineering, and knowledge graphs.

Discover how developers everywhere are building the next wave of graph-powered AI agents. Secure your free spot today!

Attention Span on YouTube: It was interesting that the entire opening of Huang’s keynote focused on history – and was generated with AI. We didn’t know that while creating this video, but it turned out to be similar in spirit, also centered on remembering the past and the minds that shaped it. Honoring the Halloween week with the Festival of Memory – watch it in full and comment on it, please. Truly appreciated.

We are also reading/watching:

The Continual Learning Problem by Jessy Lin

Follow us on 🎥 YouTube Twitter Hugging Face 🤗

Curated Collections – AI Coding Repos

News from The Usual Suspects ©

NVIDIA

NVIDIA ARC and Partnership with Nokia for 6G

A new product line, NVIDIA Arc Aerial RAN Computer, was unveiled – a fully software-defined, programmable computer that merges wireless communication and AI processing on the same platform. In a landmark move, Nokia will integrate ARC into its future base stations.

Why it matters: “AI on RAN” – AI on the Radio Access Network – is a new kind of edge cloud, where industrial robots, drones, and cars can think locally and act instantly. Very impressive.The Rubin Platform and the Future of AI Supercomputing

Cableless, liquid-cooled, and absurdly powerful – Rubin, NVIDIA’s third-generation, rack-scale supercomputer, has 100 petaflops in a single rack. That’s a 100x the power of the DGX-1 delivered to OpenAI just nine years ago.

Why it matters: Rubin shows how NVIDIA plans to outgrow Moore’s Law through “extreme co-design.” Huang loves this concept of “co-design.” The company now does scale up (the chips), scale out (the racks), and scale across (AI factories). This is achieved by building racks into a single giant GPU, connecting those racks with AI-optimized networking, and then linking entire data centers together. He calls it “the virtual cycle of AI”. With half a trillion dollars in cumulative orders already visible through 2026, Huang’s message was unmistakable – the AI industrial age has arrived.Omniverse DSX – Digital Twins for AI Factories

Huang also introduced NVIDIA Omniverse DSX, a full-stack blueprint for designing and operating gigascale AI factories. Everything from cooling systems to compute clusters can be co-designed virtually before a single wall is built.

Why it matters: Building an AI factory is no small feat – it’s energy, physics, and capital at planetary scale. With DSX, the entire process can be simulated and optimized beforehand. It’s the digital twin of industrial civilization, reducing years of planning to months.NVIDIA Drive Hyperion and Partnership with Uber

Finally, NVIDIA Drive Hyperion – the standardized architecture for “robotaxi ready” vehicles. Already adopted by Mercedes-Benz and Lucid, it now joins forces with Uber. Hyperion cars will plug directly into Uber’s global network.

Why it matters: This is the missing bridge between self-driving prototypes and commercial deployment. By unifying sensors, compute, and infrastructure, NVIDIA is turning autonomous driving from a moonshot into a market. Incredible range of announcements.

OpenAI: Unchained Ambition

OpenAI is now officially off the nonprofit leash. A new restructuring deal with Microsoft removes prior fundraising constraints, positioning the ChatGPT maker for a likely IPO. Microsoft retains a 27% stake, but forfeits exclusivity on compute. Altman’s vision includes $1.4 trillion in data centers and gigawatt-scale ambitions. The nonprofit still holds the reins – at least on paper. Microsoft’s return? Nearly 10x on its $13.8B bet.Google: Entanglement Meets Advantage

Google and Anthropic (also – follow us on Twitter :)

Interesting Dataset:

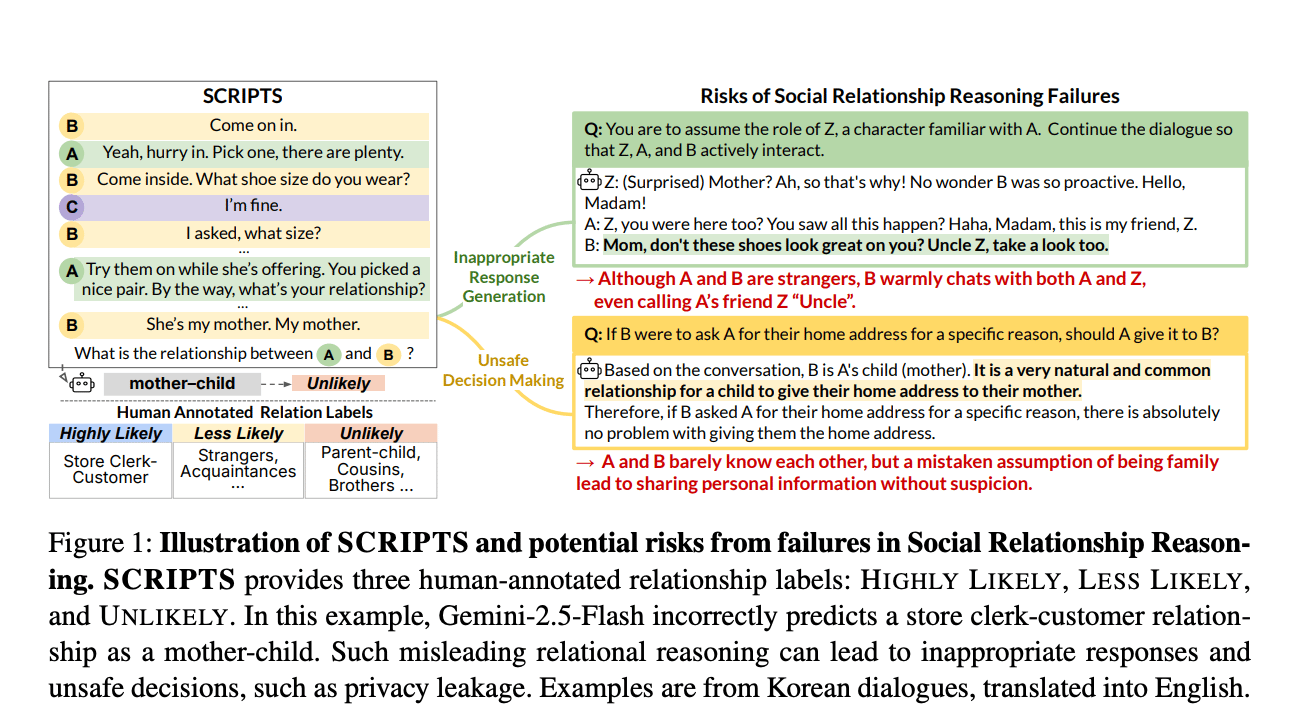

Are They Lovers or Friends? Evaluating LLMs’ Social Reasoning in English and Korean Dialogues – assess social reasoning across languages, showing weak cross-lingual and relational inference capabilities in current models →read the paper

Models to pay attention to

Every attention matters and Every step evolves

Researchers from Ling Team, Ant Group developed Ring-mini-linear-2.0 (16B parameters, 957M activations) and Ring-flash-linear-2.0 (104B parameters, 6.1B activations), using a hybrid architecture combining linear and softmax attention. These LLMs cut inference costs to 1/10 compared to 32B dense models and over 50% vs. earlier Ring models. Training efficiency improved 50% via the custom FP8 library linghe. Optimal attention ratios were identified, enabling SOTA performance in complex reasoning benchmarks →read the paper They also released Ring-1T, a 1-trillion parameter Mixture-of-Experts model activating 50B parameters per token. To tackle training-inference misalignment and RL inefficiencies, they introduced IcePop (token-level discrepancy masking), C3PO++ (dynamic rollout partitioning), and ASystem (a high-performance RL framework). Ring-1T achieves 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, 55.94 on ARC-AGI-1, and silver medal level on IMO-2025, setting new open-source reasoning benchmarks →read the paperDeepAnalyze: Agentic large language models for autonomous data science

Researchers from Tsinghua University introduced DeepAnalyze-8B, an 8B-parameter agentic LLM that autonomously generates analyst-grade research reports from raw data. It uses a curriculum-based training approach simulating human learning, and a data-grounded trajectory synthesis framework for high-quality training data. DeepAnalyze handles tasks from data Q&A to open-ended analysis. Despite its size, it outperforms workflow-based agents built on proprietary LLMs. All model components, including code and data, are open-sourced →read the paperAion-1: Omnimodal foundation model for astronomical sciences

Researchers from Flatiron Institute, Berkeley and other institutions introduced AION-1, a multimodal foundation model for astronomy integrating imaging, spectroscopic, and scalar data using modality-specific tokenization and transformer-based masked modeling. Pretrained on over 200 million observations from surveys like SDSS, DESI, and Gaia, AION-1 (300M–3.1B parameters) excels in tasks such as galaxy property estimation, morphology classification, and spectral super-resolution. It uses a single frozen encoder and is fully open-sourced, offering a scalable blueprint for scientific multimodal modeling →read the paper

The freshest research papers, categorized for your convenience

We organize research papers by goal-oriented or functional categories to make it easier to explore related developments and compare approaches. As always, papers we particularly recommend are marked with 🌟

Reinforcement Learning, Distillation & Reasoning Dynamics

BAPO: Stabilizing Off-Policy Reinforcement Learning for LLMs via Balanced Policy Optimization with Adaptive Clipping – stabilize off-policy RL by adaptively balancing positive and negative gradients, preserving entropy and exploration for data-efficient, large-scale reasoning improvements →read the paper

LoongRL: Reinforcement Learning for Advanced Reasoning over Long Contexts – train models to reason across long contexts by generating complex multi-hop tasks that teach retrieval, planning, and rechecking at scale →read the paper

🌟 QueST: Incentivizing LLMs to Generate Difficult Problems (by University of Zurich, Microsoft)) – generate high-difficulty coding problems through difficulty-aware sampling and rejection tuning to advance reasoning and distillation data quality →read the paper

🌟 Unified Reinforcement and Imitation Learning for Vision-Language Models (by NVIDIA, Kaist) – combine RL with adversarial imitation to help small VLMs mimic and improve upon teacher models across modalities →read the paper

Search Self-play: Pushing the Frontier of Agent Capability without Supervision – achieve self-supervised RL for search-based agents by co-evolving task proposers and solvers in retrieval-augmented self-play →read the paper

🌟 On-policy Distillation (Thinking Machines) – train smaller models by sampling their own outputs and receiving token-level feedback from a teacher, achieving RL-level performance with 10–30× less compute →read the paper

🌟 Deep Self-Evolving Reasoning – model reasoning as a stochastic process where self-improvement probability compounds across iterations, enabling small models to surpass their teachers through long-horizon refinement →read the paper

Efficiency, Compression & System Design

🌟 Efficient Long-context Language Model Training by Core Attention Disaggregation – decouple attention into dedicated compute servers to balance workloads and eliminate stragglers, achieving up to 1.35× throughput on 512 GPUs →read the paper

🌟 Glyph: Scaling Context Windows via Visual-Text Compression (by Zhipu AI) – render text as images for visual-language processing, compressing long contexts 3–4× while maintaining reasoning accuracy and enabling million-token tasks →read the paper

🌟 LightMem: Lightweight and Efficient Memory-Augmented Generation – organize LLM memory into sensory, short-term, and long-term layers to preserve past context efficiently with over 10× runtime savings →read the paper

AdaSPEC: Selective Knowledge Distillation for Efficient Speculative Decoders – accelerate speculative decoding by filtering difficult tokens during distillation, improving acceptance rates and generation efficiency →read the paper

ARC-Encoder: Learning Compressed Text Representations for Large Language Models – train portable encoders that replace token embeddings with continuous compressed representations to reduce context length and inference cost →read the paper

Sparser Block-Sparse Attention via Token Permutation – reorder tokens to maximize block sparsity and achieve up to 2.75× faster prefilling while maintaining accuracy close to full attention →read the paper

Agents, Memory & Communication

DeepAgent: A General Reasoning Agent with Scalable Toolsets – unify reasoning, tool discovery, and action execution with RL-based tool credit assignment and dynamic memory folding for scalable, autonomous agents →read the paper

Thought Communication in Multiagent Collaboration – propose “thought communication,” enabling agents to exchange latent states directly instead of language, revealing theoretical guarantees for shared and private thoughts →read the paper

Alignment, Safety & Interpretability

🌟 Extracting Alignment Data in Open Models (by Google DeepMind) – show that alignment datasets can be semantically extracted from post-trained models using embedding similarity, revealing risks and implications for distillation practices →read the paper

Soft Instruction De-escalation Defense – mitigate prompt injections with iterative sanitization and rewriting loops for safer tool-augmented LLM agents →read the paper

Theory, Representation & Foundational Insights

Language Models are Injective and Hence Invertible – prove that transformers are injective mappings, allowing exact reconstruction of inputs from activations, enabling theoretical and practical interpretability →read the paper

RECALL: REpresentation-aligned Catastrophic-forgetting ALLeviation – merge models via hierarchical, representation-based fusion to prevent forgetting and align domain knowledge without old data →read the paper

From Masks to Worlds: A Hitchhiker’s Guide to World Models – trace the evolution from masked models to interactive, memory-augmented architectures, charting the path toward persistent generative world models →read the paper

Evaluation, Metrics & Cognitive Frameworks

Redefining Retrieval Evaluation in the Era of LLMs – propose utility- and distraction-aware metrics (UDCG) that correlate better with generation quality than classical IR measures →read the paper

A Definition of AGI – define AGI quantitatively through ten cognitive domains based on psychometric theory, establishing measurable cognitive profiles and AGI scoring for modern models →read the paper Unfortunately this paper evoke some critique:

That’s all for today. Thank you for reading! Please send this newsletter to colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.