This Week in Turing Post:

Wednesday / AI 101 series: Reinforcement learning history and what’s happening now in the world of RL

Friday / Interview with Akash Parvatikar, AI Scientist at HistoWiz

Our news digest is always free. Upgrade to receive our deep dives in full, directly into your inbox.

GRPO Weekend – what happened?

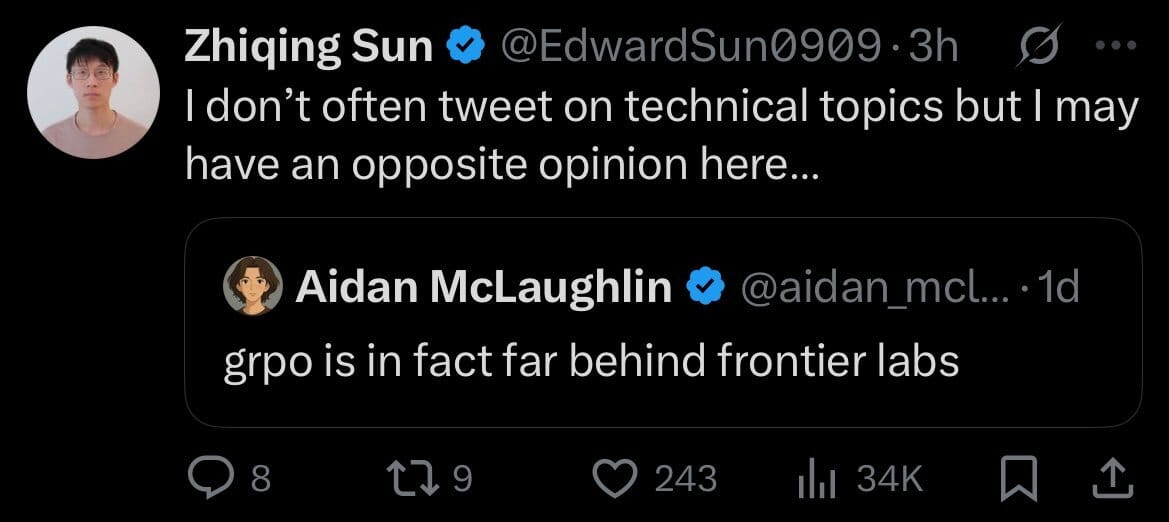

It all started with a tweet.

Original tweet is here

This reply by Aidan McLaughlin from OpenAI is now deleted. Instead we have another tweet, from another OpenAI employee saying:

What did little, harmless GRPO do to evoke such drama? (Researchers were unfollowing each other, omg!)

GRPO’s humble beginning

Group Relative Policy Optimization (GRPO) began as a modest experiment. Introduced in February 2024 with DeepSeekMath, it looked like another twist on reinforcement learning – a stripped-down cousin of REINFORCE with group-based comparisons, designed to optimize policies with less overhead. On paper, it was nothing extraordinary. Yet once applied to reasoning tasks, it flipped the field. Within months, it became a common replacement for DPO and PPO in reasoning pipelines, and researchers across different labs began to use it as a starting point for their own experiments.
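To make "group-based comparisons" concrete, here is a minimal sketch of the group-relative advantage, assuming the commonly described form in which each sampled completion's reward is normalized by the mean and standard deviation of its own group. The function name, the `eps` constant, and the choice of population standard deviation are illustrative assumptions, not a reference implementation.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Score each completion relative to the other completions for the same prompt.

    No learned value model is involved: the group itself acts as the baseline.
    `eps` (an assumed constant) avoids division by zero when all rewards match.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption, implementations differ
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions sampled for one prompt: 1.0 = correct answer, 0.0 = wrong.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Correct completions end up with positive advantages and incorrect ones with negative advantages. A group in which every completion earns the same reward yields all zeros, so it contributes no learning signal.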

It was so effective that open-source labs took GRPO and turned it into a toolkit for pushing reasoning benchmarks. Variants appeared almost weekly. To name just a few:

SEED-GRPO added semantic entropy, making models more uncertainty-aware.

Curriculum-based GRPO adjusted for difficulty and length, letting weaker models climb steeper learning curves.

GRPO with length penalties or format-aware rewards kept models from gaming the system with bloated outputs.

Flow-GRPO, which we previously covered, made it possible to apply RL to images.
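As a rough illustration of the length-penalty and format-aware reward idea in the list above, the sketch below shapes a scalar reward before it is fed into group normalization. Everything here is hypothetical: the function name, the token budget, the penalty rate, and the format bonus are made-up values chosen for readability, not taken from any specific paper.

```python
def shaped_reward(is_correct, n_tokens, well_formatted,
                  budget=512, penalty_per_token=0.001, format_bonus=0.1):
    """Hypothetical reward shaping: correctness, format bonus, overflow penalty."""
    reward = 1.0 if is_correct else 0.0
    if well_formatted:
        reward += format_bonus  # small bonus for following the answer format
    overflow = max(0, n_tokens - budget)  # only tokens past the budget are penalized
    reward -= penalty_per_token * overflow
    return reward


concise = shaped_reward(is_correct=True, n_tokens=400, well_formatted=True)
bloated = shaped_reward(is_correct=True, n_tokens=2000, well_formatted=True)
print(concise, bloated)
```

Under these assumed settings, a correct but bloated answer scores lower than a correct concise one, which removes the incentive to pad outputs.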

The results were spectacular – models with seven billion parameters started outperforming giants with thirty-two billion, at least on math-heavy and logic-heavy benchmarks like AIME and MATH. A new rhythm emerged: drop a paper, post your scores, tag it with a GRPO variant, and watch Twitter erupt.

For Chinese labs like Qwen and DeepSeek, this became a competitive advantage. For the broader open-source community, it became a symbol of what could be achieved with clever training recipes rather than sheer scale. Some researchers in frontier labs felt their superiority wobble.

Frontier lab voices began to argue that GRPO was “behind the frontier.” The technical critiques gave this claim teeth. GRPO, in its original formulation, was sloppy. Its KL regularization skipped over the importance weighting, producing inconsistent objectives. Researchers like Quanquan Gu called this out directly, framing GRPO as technically wrong.
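One way to read the importance-weighting critique is through a toy calculation. The sketch below assumes the per-token penalty has the form r − log r − 1 with r = π_ref/π_θ, which estimates KL(π_θ ‖ π_ref) correctly only when tokens are sampled from π_θ itself. If tokens instead come from an older policy π_old, the average drifts unless each term is reweighted by π_θ/π_old. The three-token distributions are made-up numbers, and the example covers only the value of the penalty, not its gradient.

```python
import math


def exact_kl(p, q):
    """Exact KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def k3(p_theta, p_ref):
    """Per-token penalty term: r - log(r) - 1 with r = pi_ref / pi_theta."""
    r = p_ref / p_theta
    return r - math.log(r) - 1.0


def expected_penalty(pi_sample, pi_theta, pi_ref, importance_weighted):
    """Expected penalty when tokens are drawn from pi_sample (computed exactly)."""
    total = 0.0
    for s, t, r in zip(pi_sample, pi_theta, pi_ref):
        weight = t / s if importance_weighted else 1.0
        total += s * weight * k3(t, r)
    return total


# Toy next-token distributions over a 3-token vocabulary (made-up numbers).
pi_old = [0.7, 0.2, 0.1]    # policy that generated the rollouts
pi_theta = [0.2, 0.3, 0.5]  # policy currently being updated
pi_ref = [0.4, 0.4, 0.2]    # frozen reference policy

target = exact_kl(pi_theta, pi_ref)
unweighted = expected_penalty(pi_old, pi_theta, pi_ref, importance_weighted=False)
weighted = expected_penalty(pi_old, pi_theta, pi_ref, importance_weighted=True)
print(f"exact KL={target:.4f}  unweighted={unweighted:.4f}  weighted={weighted:.4f}")
```

With importance weights the expectation matches the exact KL. Without them it lands on a different number, which is the kind of inconsistency the critique points at.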

Others pointed to the narrow definition of GRPO – just the algorithm as first published – and contrasted it with more principled approaches they suspected frontier labs were already pursuing.

This is where the debate split in two.

In the narrow sense, GRPO really was flawed, and many of its fixes look obvious in hindsight.

In the expansive sense, GRPO had become more than an algorithm. It was a family of methods, continuously refined, endlessly iterated, and responsible for most of the reasoning gains seen in the open.

When someone says “GRPO is behind,” it matters which sense they mean. Frontier insiders often mean the narrow sense. Open-source researchers almost always mean the expansive sense. What we just heard was the sound of those definitions colliding.

So what’s there inside frontier labs that is so far ahead of GRPO? Few details are public, but they are most likely building on GRPO rather than discarding it:

Properly fixed KL-regularized policy gradients.

Token-level RL rather than only chain-of-thought rollouts.

More efficient credit assignment across steps.

Integration of uncertainty signals and world models directly into the reward function.

It would make sense. GRPO provided the scaffolding, but frontier labs have likely moved toward a version that is more principled, more stable, and less compute-hungry. That and other dismissive tweets set off a bit of panic and offended a few people in the open-source community, but it was truly remarkable to see how active, vivid, and passionate the community became.

Zhongwen Xu, a researcher at Tencent, summarized what the open-source community should focus on to truly close the gap:

Which algorithm will be next to set OSS and frontier labs apart?

Topic number two: Reinforcement learning is the talk of the town right now – not just because of GRPO, but also thanks to the conversation between Dwarkesh Patel and the father of RL, Turing Award laureate Richard Sutton. Twitter was already hot, so the terminology gap between an old-school AI scientist and a curious Dwarkesh only ignited it further. We tried to translate between the two. Watch it here→

We recommend: A Deep Dive into NVIDIA Blackwell

The GPU of the next decade is here.

Join Dylan Patel (SemiAnalysis) and Ian Buck (NVIDIA) for an insider look at NVIDIA Blackwell, hosted by Together AI. The deep dive will cover architecture, optimizations, implementation and more, along with an opportunity to get your questions answered.

Curated Collections – MCP servers

News from The Usual Suspects ©

NVIDIA Pushes Physical AI at CoRL 2025

At CoRL in Seoul, NVIDIA unveiled a full-stack push into robotics: open models, simulation engines, and new hardware.

Newton Physics Engine – GPU-accelerated, co-developed with DeepMind and Disney, now open source under the Linux Foundation and integrated in Isaac Lab. Already adopted by ETH Zurich, TUM, and Peking University.

Isaac GR00T N1.6 – a humanoid foundation model enhanced with Cosmos Reason, giving robots humanlike reasoning and the ability to handle locomotion and manipulation together. Partners include LG, Franka, Neura, and Solomon.

Cosmos WFMs – updated Predict and Transfer models generate long-horizon, multi-view synthetic data at scale, downloaded over 3M times.

Dexterous Grasping + Arena – new Isaac Lab workflows for robot hands and standardized skill evaluation; Boston Dynamics’ Atlas has already trained with it.

Jetson Thor – Blackwell-powered on-robot supercomputer, adopted by Figure AI, Unitree, DeepMind, and Meta.

Nearly half of CoRL papers cited NVIDIA tech. With Newton as the body, GR00T as the brain, and Jetson Thor as the deployment engine, NVIDIA is positioning itself as the operating system for physical AI.

Google also plays with Robotics (on a serious level)

OpenAI puts GDP on the scale

OpenAI just unveiled GDPval, a benchmark for evaluating AI on real-world, economically valuable tasks. It spans 44 occupations across the top 9 U.S. GDP sectors and compares AI deliverables to those from professionals with ~14 years of experience. Credit to them: they don’t shy away from showing that a rival model beats their own.

Image Credit: OpenAI’s blog

Follow us on 🎥 YouTube Twitter Hugging Face 🤗

We are reading/watching

The QMA Singularity by Scott Aaronson

Models to pay attention to

Code World Model (CWM)

Researchers from Meta FAIR introduced a 32B parameter decoder-only LLM for code generation and reasoning. Trained on 8T pretrain, 5T mid-train, and 172B post-train tokens, CWM uses Python execution traces and 3M agentic ForagerAgent trajectories to model code semantics and planning. It achieves 65.8% pass@1 on SWE-bench Verified (test-time scaled), 68.6% on LiveCodeBench, 96.6% on Math-500, and 76.0% on AIME 2024. Checkpoints are released post mid-training, SFT, and RL under a noncommercial research license →read the paper (pdf)

Deepseek-v3.2-exp

Researchers from DeepSeek-AI introduced a 685B parameter model using DeepSeek Sparse Attention (DSA) to improve long-context training and inference efficiency while maintaining output quality. Benchmarked against DeepSeek-V3.1-Terminus, it achieves similar scores: MMLU-Pro 85.0, GPQA-Diamond 79.9, AIME 2025 89.3, and SWE Verified 67.8. In Codeforces, V3.2-Exp scores 2121 vs. 2046. Sparse attention improves performance in BrowseComp (+1.6%) and Terminal-bench (+1.0%), showcasing gains in agentic and multilingual tasks →DeepSeek on HF

Qwen3-Omni

Researchers from Qwen released a 30B Mixture-of-Experts model achieving state-of-the-art performance across text, image, audio, and video, with no degradation relative to single-modal baselines. It supports 119 written and 19 spoken languages, 10 spoken outputs, and 40-minute audio inputs. Its Thinker-Talker architecture enables 234 ms first-packet latency, real-time streaming, and cross-modal reasoning. It outperforms closed models like Gemini 2.5 Pro and GPT-4o on 32 audio/audio-visual benchmarks and sets overall SOTA on 22. Models are released under Apache 2.0 →technical report

Hunyuan3d-omni and HunyuanImage-3.0

Researchers from Tencent introduced a 3D-native generative framework that supports fine-grained controllable 3D asset generation from text or image with additional inputs like point clouds, voxels, bounding boxes, and skeletons →read the paper

They also introduced HunyuanImage-3.0, the largest open-source image generation MoE model with 80B parameters (13B active per token) and 64 experts. Built on a unified autoregressive multimodal framework, it supports advanced reasoning, sparse prompt elaboration, and photorealistic image generation. SSAE evaluation showed strong alignment with 3,500 key points across 12 categories. Human evaluation (1,000 prompts, 100+ raters) confirmed superior aesthetic and semantic performance compared to closed-source models →their GitHub

Manzano

Researchers from Apple introduced a unified multimodal LLM that combines strong image understanding and text-to-image generation using a hybrid tokenizer architecture. A shared vision encoder outputs continuous embeddings for image understanding and discrete tokens for image generation. Manzano employs a unified autoregressive LLM and a diffusion decoder, trained via a three-stage recipe. It achieves state-of-the-art results among unified models, with minimal task conflict and strong scaling: the 30B variant surpasses prior models on text-rich VQA and is competitive on generation (GenEval: 1.00, WISE: 0.54) →read the paper

The freshest research papers, categorized for your convenience

We organize research papers by goal-oriented or functional categories to make it easier to explore related developments and compare approaches. As always, papers we particularly recommend are marked with 🌟

Science & Biotech Modeling

🌟🌟 SimpleFold: Folding Proteins is Simpler than You Think (Apple) – fold proteins with a general-purpose transformer trained by flow matching, avoiding domain-specific blocks. ->read the paper

Multimodal, Vision & Video Reasoning

🌟🌟 Video models are zero-shot learners and reasoners (Google DeepMind) – demonstrate emergent zero-shot perception and manipulation abilities in a generalist video model. ->read the paper

🌟MetaEmbed: Scaling Multimodal Retrieval at Test-Time with Flexible Late Interaction (Meta) – append meta tokens and scale vectors at inference to balance accuracy and cost. ->read the paper

🌟 MMR1: Enhancing Multimodal Reasoning with Variance-Aware Sampling and Open Resources – release long CoT data and select high-variance samples to stabilize RL. ->read the paper

MOSS-ChatV: Reinforcement Learning with Process Reasoning Reward for Video Temporal Reasoning – align reasoning traces to video dynamics with DTW-based process rewards. ->read the paper

Theory & Evaluation

🌟 Limits to black-box amplification in QMA by Scott Aaronson and Freek Witteveen – establish oracle-relative limits showing completeness and soundness cannot be amplified beyond doubly-exponential via black-box procedures. ->read the paper

🌟 Behind RoPE: How Does Causal Mask Encode Positional Information? (KAIST, Microsoft) – reveal how causal masking itself induces position-dependent attention and interacts with RoPE to distort relative patterns. ->read the paper

TrustJudge: Inconsistencies of LLM-as-a-Judge and How to Alleviate Them – model judge scoring probabilistically to reduce score-comparison and transitivity failures without extra training. ->read the paper

🌟 What Characterizes Effective Reasoning? Revisiting Length, Review, and Structure of CoT (Meta) – identify failed-step fraction as a better predictor than length or review and validate structure-aware test-time scaling. ->read the paper

Cross-Attention is Half Explanation in Speech-to-Text Models – quantify that cross-attention aligns only partially with saliency, cautioning against over-interpreting attention maps. ->read the paper

Training & Optimization (pre-training, RL, CoT)

🌟 Thinking Augmented Pre-training (Microsoft) – augment pre-training text with synthetic thinking trajectories to boost data efficiency and downstream reasoning. ->read the paper

🌟 Soft Tokens, Hard Truths (Meta) – train continuous chain-of-thought via RL to increase CoT diversity while deploying with discrete tokens. ->read the paper

SIM-CoT: Supervised Implicit Chain-of-Thought – stabilize implicit CoT by adding step-level supervision with an auxiliary decoder that is dropped at inference. ->read the paper

🌟 Reinforcement Learning on Pre-Training Data (Tencent) – derive rewards directly from next-segment prediction on pre-training corpora to scale RL without human labels. ->read the paper

MAPO: Mixed Advantage Policy Optimization – adapt advantage weighting to trajectory certainty to fix advantage reversion and mirror effects in GRPO-style training. ->read the paper

🌟 VCRL: Variance-based Curriculum Reinforcement Learning for Large Language Models (Alibaba) – curriculum-train by selecting samples with informative reward variance to improve math reasoning. ->read the paper

Advancing Speech Understanding in Speech-Aware Language Models with GRPO – optimize speech QA and translation with GRPO and BLEU-based rewards beyond SFT. ->read the paper

🌟 Thinking While Listening: Simple Test Time Scaling For Audio Classification (Stanford) – add lightweight reasoning and sampling at inference to improve audio classification accuracy. ->read the paper

🌟 CAR-Flow: Condition-Aware Reparameterization Aligns Source and Target for Better Flow Matching (Apple) – shorten probability paths with condition-aware shifts to speed training and improve FID. ->read the paper

Agents, Environments & Planning

🌟 ARE: Scaling Up Agent Environments and Evaluations (Meta) – provide a platform and Gaia2 benchmark to stress-test agents in asynchronous, tool-rich, dynamic tasks. ->read the paper

UserRL: Training Interactive User-Centric Agent via Reinforcement Learning – train agents with simulated users and reward designs that improve multi-turn helpfulness. ->read the paper

BTL-UI: Blink-Think-Link Reasoning Model for GUI Agent – mimic human visual attention, cognition, and action with process-and-outcome rewards for GUI automation. ->read the paper

Tree Search for LLM Agent Reinforcement Learning – expand rollouts via tree-structured sampling and compute step-wise advantages from outcome rewards. ->read the paper

RPG: A Repository Planning Graph for Unified and Scalable Codebase Generation – replace free-form plans with graph blueprints to generate full repositories with tests. ->read the paper

LIMI: Less is More for Agency – show that carefully curated, tiny datasets can elicit strong agentic skills more efficiently than large collections. ->read the paper

Collaboration Across Models

🌟 Mixture of Thoughts: Learning to Aggregate What Experts Think, Not Just What They Say (University of Southern California) – route among heterogeneous experts and fuse hidden states in shared layers for single-pass gains. ->read the paper

That’s all for today. Thank you for reading! Please send this newsletter to your colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.