A lot happened last week, but for many people, it's vacation time. So today, let's focus on a lighter version of the news and concentrate on two main headlines, with the rest available in the link section.

Some of the articles might be behind a paywall. If you are a paid subscriber, let us know and we will send you a PDF.

Worldcoin and Sam Altman's Global Identity Plans

Amidst the tranquil setting of Sardinia, Italy, where I found myself writing today’s newsletter, with doves cooing outside, the significance of AI might seem elusive. However, Monday's announcement by Sam Altman and Alex Blania about Worldcoin proves that the quest for AI dominance persists.

The Worldcoin token, a project merging the realms of crypto and AI, aims to establish a global identity and financial network accessible to all. Using a device called 'an orb,' the initiative plans to verify people's uniqueness and humanity by scanning their irises, rewarding them with Worldcoin tokens wherever legally permissible.

According to Reuters, the project has already garnered 2 million users during its beta phase. With Monday's launch, Worldcoin is expanding its "orbing" operations to 35 cities across 20 countries, enticing new sign-ups in specific regions with Worldcoin's cryptocurrency token, WLD. The project enjoys robust support from investors: in March 2022, Tools for Humanity, the team building Worldcoin, raised $100 million at a $3 billion valuation. In May 2023, it raised an additional $115 million in a Series C round.

Sam Altman, while not holding shares in OpenAI, stands to gain significantly from Worldcoin's development due to his strong ties with OpenAI. It might be the doves outside the window, or the reality of rural Italy, where men and women still chat on the streets, but the idea of a private company scanning irises to solve the problem of global inequality seems creepy. A couple of months ago, The Block reported that “there are signs of a new kind of black market springing up around Worldcoin.”

As Worldcoin's founders themselves acknowledge, "Worldcoin is an attempt at global scale alignment, and the journey will be challenging, with an uncertain outcome. But finding new ways to equitably share the forthcoming technological prosperity is a critical challenge of our time." So, following this statement, why should anyone believe them?

To better understand the state of the field and the competition, take a look at a highly detailed and thought-through post by Vitalik Buterin. He has been deeply interested in proof-of-identity for a long time and possesses expertise in that topic. In his post, he discusses the concept of proof-of-personhood, which he believes holds significant value in principle. However, he also acknowledges that solving this problem is a daunting challenge.

In any case, ethical considerations and the potential implications of centralizing such ventures in one company, even with a promise to decentralize it later, call for critical scrutiny.

Now to a really exciting announcement from last week, with a lot of helpful links to play with:

Llama 2 by Meta in partnership with Microsoft

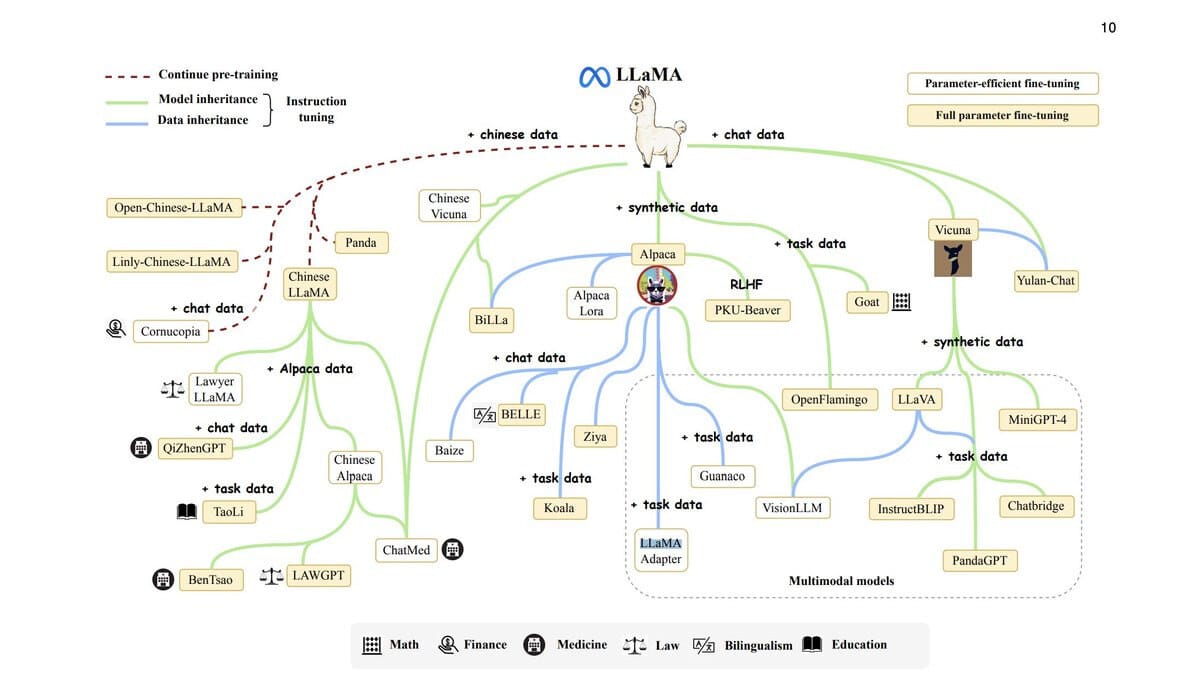

Llama 1 was great and sparked a whole new movement of impressive open-source alternatives to ChatGPT.

It had one limitation: there was no license for commercial use.

Today, the landscape is rapidly evolving with Meta's latest release, Llama 2, in partnership with Microsoft. It is not only an improved version of Llama 1, specifically designed for use cases similar to ChatGPT, but is also available for commercial use. This development signals an exciting shift, as it allows startups and other commercial entities to harness advanced AI for innovative product development.

But there is a small catch. As Nathan Lambert explains in his Interconnects newsletter, Llama 2 is not open-source, meaning its development and usage are not fully accessible to the general public. Despite this limitation, it still provides value to the open-source community as an open release or open innovation. Lambert also observes that Llama 2 doesn't feel like a finished project, suggesting that its development is ongoing and likely involved months of training. One can anticipate that the next iteration of the model is already in progress.

I encourage you to delve into Nathan Lambert's insightful deep dive on Llama 2, titled "Llama 2: an incredible open LLM."

Tech Spec

Llama 2 is trained using a three-step process. First, it is pre-trained on publicly available online data. It is then refined through supervised fine-tuning, much like a child learning from observation and instruction. Finally, the chat model reaches maturity through Reinforcement Learning from Human Feedback (RLHF), which iteratively refines it using rejection sampling and proximal policy optimization (PPO) to improve safety and helpfulness. According to Meta, this process has led Llama-2-chat to outperform other open-source models on many benchmarks, including reasoning, coding, and knowledge tests.
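To make the rejection-sampling step a little more concrete, here is a minimal Python sketch of its selection part only: draw several candidate responses for a prompt, score each with a reward function, and keep the highest-scoring one. It is an illustration under stated assumptions, not Meta's implementation. The names `best_of_k`, `make_toy_generator`, and `toy_reward` are hypothetical, the canned generator stands in for a language model, and the word-overlap score stands in for a learned reward model. The later steps of fine-tuning on the selected responses and running PPO are not shown.

```python
from typing import Callable, List, Tuple


def best_of_k(
    prompt: str,
    generate: Callable[[str], str],
    reward: Callable[[str, str], float],
    k: int = 4,
) -> Tuple[str, float]:
    """Sample k candidate responses and return the best one with its score."""
    if k < 1:
        raise ValueError("k must be at least 1")
    candidates = [generate(prompt) for _ in range(k)]
    scores = [reward(prompt, candidate) for candidate in candidates]
    best_index = max(range(k), key=scores.__getitem__)
    return candidates[best_index], scores[best_index]


def make_toy_generator(responses: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a model: returns canned responses in order."""
    state = {"next": 0}

    def generate(prompt: str) -> str:
        response = responses[state["next"] % len(responses)]
        state["next"] += 1
        return response

    return generate


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical stand-in for a reward model: counts words shared with the prompt."""
    prompt_words = set(prompt.lower().split())
    response_words = set(response.lower().split())
    return float(len(prompt_words & response_words))


if __name__ == "__main__":
    generator = make_toy_generator([
        "I cannot help.",
        "Boil the egg for eight minutes.",
        "Eggs are food.",
        "Ask someone else.",
    ])
    best, score = best_of_k("how do I boil an egg", generator, toy_reward, k=4)
    print(best, score)
```

In a real pipeline the selected responses would become training targets for the next round of fine-tuning, which is why the quality of the reward model matters so much.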

To further the AI research community's understanding, Meta has published a very detailed paper outlining this intricate training process, including model details, training stages, hardware, data pipeline, and annotation process. Worth checking out.

Benefits to Meta

Meta's decision to make Llama 2 weights available is a multifaceted strategy that goes beyond just sharing technology with the wider community. Here are a few potential benefits:

Goodwill. By releasing Llama 2 openly, Meta is contributing to the public good and the overall advancement of technology. This could improve Meta's image in the eyes of the public and the technology industry.

Standards Lobbying. By releasing Llama 2 openly, Meta can set the standard for large language models and guide the direction of the industry. Other organizations may choose to use Llama 2 as a base, thereby aligning their technologies with Meta's.

Recruit and Retain Top Researchers. Open-source projects can serve as a demonstration of the cutting-edge work a company is doing, and may attract top talent in the field who want to work on similar projects.

Sell Hardware or Cloud Resources. Llama 2's processing needs could potentially be a way to promote Meta's own hardware or cloud resources.

In addition, this move reflects the complex dynamics and competitive landscape of the tech industry.

Who’s the absolute winner?

The absolute winner in this situation is Microsoft; now it can reap the benefits from the best of both worlds: OpenAI and Meta.

Microsoft's Azure platform will support Llama 2 models, enabling Azure users to fine-tune and deploy these models. Additionally, Llama 2 will be optimized for local running on Windows using DirectML.

Microsoft's release of Orca, a smaller open-source alternative to GPT-4, further strengthens its position.

Is this partnership a blow to OpenAI?

While Business Insider believes it marks the end of OpenAI's moat, Analytics India Magazine argues that OpenAI remains vital for the success of numerous teams working on generative AI applications. As per the magazine, developers and teams can now train their models using ChatGPT "output data," providing considerable benefits to various open-source models.

Who misses the fun?

According to the Llama 2 Community License Agreement, the model is available for commercial use unless your product has more than 700 million monthly active users (think: Apple, Google and Amazon).

By the way, to catch up with everything, Google co-founder Sergey Brin is “back in the development trenches,” working alongside AI researchers at Google headquarters and assisting their efforts to build Google’s powerful Gemini system.

What’s exciting?

Open-source developers have already started experimenting with Llama 2, which should lead to fascinating new use cases and applications in the future.

Play with it too

Examples and recipes for Llama 2 on GitHub.

To chat with the 7B and 13B Llama 2 models, go to Perplexity Labs; to use the 70B model, go to Hugging Face.

For better results, Scale AI explains how its new open-source LLM Engine helps fine-tune the base Llama 2 model.

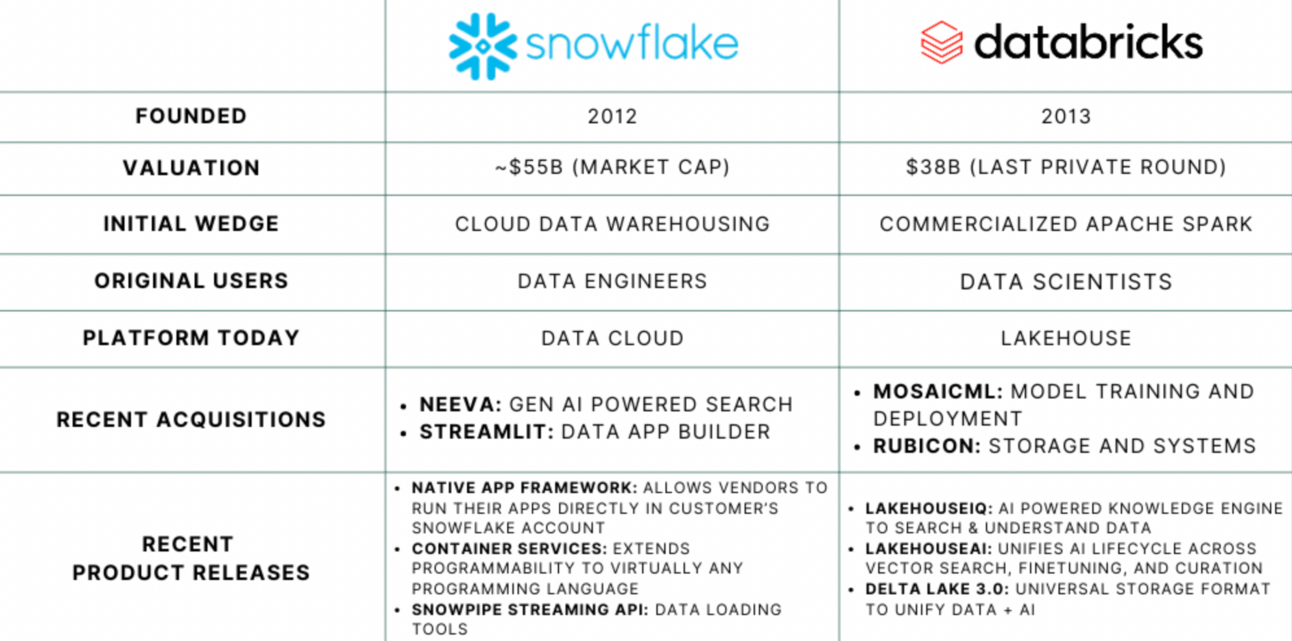

Databricks immediately offered Llama 2 batch inference, Llama 2 model logging and inference with MLflow, and Llama 2 fine-tuning.

What else is happening in the AI world?

To catch up with Llama 2's buzzing popularity, OpenAI is introducing custom instructions for ChatGPT to tailor the AI's responses to users' needs. The feature is initially in beta for the Plus plan and will soon expand to all users. It addresses feedback about the inconvenience of starting each conversation from scratch. ChatGPT takes the custom instructions into account in every conversation, making its responses more efficient and personalized: a teacher can specify the grade they teach, a developer their preferred coding language, or a shopper the quantities needed for a grocery list.

OpenAI also announced a new partnership with the American Journalism Project, a venture philanthropy working to rebuild local news. The plan is to explore ways in which the development of AI can support a thriving, innovative local news field.

Amazon shared the paper "SCOTT: Self-Consistent Chain-of-Thought Distillation" and explained how they use knowledge distillation to improve the consistency of chain-of-thought reasoning, that is, to teach a language model to reason consistently.

MosaicML has introduced MPT-7B-8K, an open-source large language model with 7 billion parameters and an 8k context length, trained on their platform. Its training started from the MPT-7B checkpoint, using 256 NVIDIA H100s over three additional days with an extra 500B tokens of data. The model excels in document summarization and question-answering and is optimized for rapid training and inference.
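For a sense of scale, the figures quoted above can be turned into a rough throughput estimate. The Python sketch below is back-of-the-envelope arithmetic only. It assumes exactly three days of wall-clock time with all 256 GPUs busy throughout, which the announcement does not state, and the function name `training_throughput` is hypothetical.

```python
SECONDS_PER_DAY = 86_400


def training_throughput(tokens: float, gpus: int, days: float) -> dict:
    """Rough throughput figures, assuming every GPU is busy for the full duration."""
    wall_seconds = days * SECONDS_PER_DAY
    cluster_tokens_per_second = tokens / wall_seconds
    return {
        "gpu_hours": gpus * days * 24,
        "cluster_tokens_per_second": cluster_tokens_per_second,
        "tokens_per_gpu_per_second": cluster_tokens_per_second / gpus,
    }


if __name__ == "__main__":
    # Figures quoted for MPT-7B-8K: 500B extra tokens, 256 H100s, three days.
    stats = training_throughput(tokens=500e9, gpus=256, days=3)
    for name, value in stats.items():
        print(f"{name}: {value:,.0f}")
```

Under those assumptions the run works out to roughly 18,400 GPU-hours and about 7,500 tokens per GPU per second. Real utilization is never perfect, so treat these as order-of-magnitude numbers.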

AI21 Labs has unveiled Contextual Answers, a plug-and-play engine designed to boost the effectiveness of information queries using generative AI. This solution, provided as an embeddable API, allows organizations to easily integrate large language model technology into their digital assets for enhanced data handling.

Stability AI released two models competitive with ChatGPT on Hugging Face. FreeWilly1 and FreeWilly2 were developed by Stability AI’s CarperAI lab. FreeWilly1 is based on the LLaMA 65B model and uses a new synthetically generated dataset for fine-tuning, while FreeWilly2, built on the Llama 2 70B model, performs comparably to GPT-3.5 in certain tasks. These models, intended for research purposes, are available under a non-commercial license.

Cerebras Systems has inked a deal worth around $100 million to provide the first of up to nine AI supercomputers to UAE-based tech group G42. This agreement comes amid global cloud computing providers' search for alternatives to Nvidia's AI computing chips, which are in high demand and short supply due to the rising popularity of services like ChatGPT. Cerebras is among the startups aiming to rival Nvidia.

More Papers

Useful insight into Claude 2’s capabilities and limitations read more →

Prompt Engineering guide by GitHub, a must read →

A fun fact: according to this Semafor interview with Riley Goodside, the lead prompt engineer for Scale AI, OpenAI did not anticipate GPT-3's ability to effectively follow longer, more complex instructions beyond the typical short prompts. So they started the whole new prompt engineering profession basically by chance!

Google Research announced SimPer, which harnesses recurring patterns in data using self-contrastive learning and diverse augmentations, providing robust representations of periodic signals applicable in fields like remote sensing and healthcare. read more →

To watch

Why Geoffrey Hinton is worried about the future of AI - YouTube - in which he describes the precise moment when he realized that digital systems might be superior to biological ones.

VC analysis

An interesting comparison by Madrona VC: Snowflake vs. Databricks: Two Cloud Giants Battling in the AI Domain

Governance

The Biden administration has garnered "voluntary commitments" from seven top AI companies (OpenAI, Anthropic, Google, Inflection, Microsoft, Meta, and Amazon) to achieve shared safety and transparency goals in anticipation of a planned executive order. The companies have agreed to conduct security tests of AI systems before release, share AI risk and mitigation techniques across academia, government, and civil society, invest in cybersecurity, facilitate third-party vulnerability discovery, watermark AI-generated content, report AI system limitations, research societal risks, and use AI to address major societal challenges. The commitments, though non-binding, could help shape potential AI legislation and are being tracked publicly. If companies fall short, the looming threat of an executive order could prompt the Federal Trade Commission to scrutinize AI products claiming robust security.

Thank you for reading! Please feel free to share with your friends and colleagues 🤍

Another week with fascinating innovations! We call this overview “Froth on the Daydream" - or simply, FOD. It’s a reference to the surrealistic and experimental novel by Boris Vian – after all, AI is experimental and feels quite surrealistic, and a lot of writing on this topic is just a froth on the daydream.