FOD#117: What Does Karpathy See?

plus the best curated roundup of impactful news, important models, related research papers, and what to read

This Week in Turing Post:

Wednesday / AI 101 series: The future of compute: What are alternatives to GPU/TPU/CPU?

Friday / The comeback of the AI Unicorn Series!

Our news digest is always free. Click on the partner’s link to support us or Upgrade to receive our deep dives in full, directly into your inbox. Join Premium members from top companies like Hugging Face, Microsoft, Google, a16z, Datadog plus AI labs such as Ai2, MIT, Berkeley, .gov, and thousands of others to really understand what’s going on with AI →

Don’t stop at the editorial – scroll down for this week’s full digest: surveys, news from the usual suspects, a fresh reading list, and curated lists of models and research papers.

Now, to the main topic: Andrej Karpathy is AI’s favorite wandering naturalist. Ex-Tesla AI chief, OpenAI founding member, Stanford PhD and CS231n lecturer who taught a generation to see with convnets. He coins new terms as easily as he breathes. Author of the Software 2.0 idea, which reframed code as learned weights. He returned to OpenAI in 2023 to work on GPT-4 and ChatGPT, then left again in 2024 to start Eureka Labs – a new kind of AI-native school. Lately he’s been sketching the Software 3.0 paradigm – prompts, agents, and autonomy sliders as the new programming surface.

He makes toy repos that turn into cult classics (micrograd, makemore, nanoGPT), drops phrases that everyone steals (“vibe coding”), and writes blog posts that read like syllabus entries for the next five years of AI. More than 1.3M people follow him on X and over a million on YouTube. He is absurdly influential. In a good way.

So I thought: why not condense what Karpathy sees each month and connect the dots, to massage our own third eyes as well.

LLMification of Knowledge (Aug 28)

Karpathy’s thread from Aug 28 centers on one obsession: LLM-native curricula. Why should models be fed PDFs as walls of text when we could restructure the same material into machine-legible courses? Exposition in markdown, problems as supervised fine-tuning pairs, exercises as reinforcement environments, infinite synthetic problem generators. In this frame, an LLM doesn’t just memorize – it takes a physics course like a student, with practice, feedback, and grading loops.
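To make the idea concrete, here is a minimal sketch of what one "LLMified" exercise might look like: a synthetic problem generator whose output can serve either as a supervised fine-tuning pair or as a graded exercise. Everything in it (the `Problem` class, `make_kinematics_problem`, `to_sft_pair`, `grade`, and the toy physics question) is a hypothetical illustration, not something taken from Karpathy's thread.

```python
import random
from dataclasses import dataclass


@dataclass
class Problem:
    """One generated exercise: a prompt plus its verifiable answer."""
    prompt: str
    answer: float


def make_kinematics_problem(rng: random.Random) -> Problem:
    # Hypothetical generator: sample parameters, render the text,
    # and compute the ground-truth answer (distance = speed * time).
    speed = rng.randint(1, 30)
    seconds = rng.randint(1, 20)
    prompt = (
        f"A cart moves at a constant {speed} m/s for {seconds} s. "
        "How far does it travel, in meters?"
    )
    return Problem(prompt=prompt, answer=float(speed * seconds))


def to_sft_pair(problem: Problem) -> dict:
    # The same problem as a supervised fine-tuning example.
    return {"prompt": problem.prompt, "completion": f"{problem.answer:g}"}


def grade(problem: Problem, response: str) -> float:
    # The same problem as a one-step "environment": reward 1.0 for a
    # numerically correct reply, 0.0 for anything else.
    try:
        value = float(response.strip())
    except ValueError:
        return 0.0
    return 1.0 if abs(value - problem.answer) < 1e-6 else 0.0


if __name__ == "__main__":
    rng = random.Random(0)
    problem = make_kinematics_problem(rng)
    print(to_sft_pair(problem))
    print(grade(problem, to_sft_pair(problem)["completion"]))
```

The point of the sketch is that one generator feeds both training modes: the pair format is for imitation, while the grading function closes the practice-and-feedback loop.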

The implication is radical: an LLM Academy where every human discipline is systematically transformed into interactive coursework, ready to be taken by machines. The bottleneck shifts from collecting internet text to designing educational pipelines.

Guide your model so it can later guide you.

My question: If the internet was the training ground of pretraining, could LLMified curricula become the training ground of reasoning?

The Eras of Model Learning (Aug 27)

Pretraining was internet text. Supervised fine-tuning was conversations. Reinforcement learning is environments. Each era depends on its data substrate. And now, the real bottleneck is building environments at scale – sandboxes where models can interact, test, and grow.

OpenAI Gym once did this for robots and Atari agents. Now, efforts like Prime Intellect’s Environments Hub are trying to do it for LLMs – coding challenges, reasoning tasks, planning domains. But Karpathy is skeptical of reward functions. He thinks humans don’t really learn intellectual tasks through reinforcement. He hints at new paradigms: system-prompt learning, context-driven updates, memory distillation.

My question: What comes after reinforcement? If RL is a bridge, what’s the destination paradigm for machine learning?

Coding in Layers (Aug 24)

If “chat” was the wrapper of 2024, “code” is the wrapper of 2025. Karpathy describes his own stack of coding workflows:

Cursor autocomplete for fast intent.

Highlight-and-edit for mid-sized tweaks.

Claude Code / Codex for larger tasks and ephemeral utilities.

GPT-5 Pro as the ultimate debugger and researcher.

It feels like a post-scarcity era of code: you can generate and discard thousands of lines without cost. The anxiety now is not in writing code, but in orchestrating layers of assistance without losing taste, abstraction, or direction.

My question: What’s the role of the coder? And how do we build the scaffolding for a junior dev to actually learn?

The Problem of Intent (Aug 9)

But the same month, Karpathy also voiced unease. Models are defaulting to “exam mode” – reasoning for minutes, crawling repos, over-analyzing edge cases – even when all he wanted was a quick glance. Benchmarks push them into overthinking.

Humans intuitively know the difference between a fast gut check and a two-hour deep dive. Models don’t. The missing piece is an intent channel: a way to tell an LLM whether you want speed or depth, intuition or rigor, a glance or an exam.
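One way to picture an intent channel is as an explicit field on the request that maps to a compute budget. The sketch below is purely illustrative: the `Intent` levels, the `Budget` fields, the token numbers, and `plan_request` are all assumptions made up for this example and do not correspond to any existing model API.

```python
from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    """Hypothetical intent levels a user could attach to a request."""
    GLANCE = "glance"      # fast gut check
    STANDARD = "standard"  # ordinary answer
    EXAM = "exam"          # slow, rigorous deep dive


@dataclass(frozen=True)
class Budget:
    max_reasoning_tokens: int
    allow_repo_crawl: bool


# Assumed budgets; the numbers are placeholders, not measured values.
BUDGETS = {
    Intent.GLANCE: Budget(max_reasoning_tokens=256, allow_repo_crawl=False),
    Intent.STANDARD: Budget(max_reasoning_tokens=4096, allow_repo_crawl=False),
    Intent.EXAM: Budget(max_reasoning_tokens=65536, allow_repo_crawl=True),
}


def plan_request(question: str, intent: Intent = Intent.STANDARD) -> dict:
    # Turn the user's stated intent into explicit limits that a model
    # or a router in front of it could enforce.
    budget = BUDGETS[intent]
    return {
        "question": question,
        "intent": intent.value,
        "max_reasoning_tokens": budget.max_reasoning_tokens,
        "allow_repo_crawl": budget.allow_repo_crawl,
    }


if __name__ == "__main__":
    print(plan_request("Does this function look off to you?", Intent.GLANCE))
```

The design choice here is to make the user state depth up front instead of having the model guess it from the wording of the question.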

My question: Is this something a routing system can help with?

Looking Forward

For me, the exercise was helpful because Karpathy’s August posts, read together, form a coherent arc. Following his lines of thought, you can trace a map of where LLMs are heading – and where human-AI collaboration still feels unfinished. They sketch an ecosystem in transition: from models that merely ingest human text, to ones that study, act, code, and reason inside environments of their own. Each step opens new questions about design, pedagogy, and collaboration. If 2024 was the year of chat and 2025 the year of code (he said that on Aug 2), then 2026 may be the year of environments – not just for models, but for us as users, learning how to live and work inside them.

My question: What will your environment look like?

From our partners: Build Ultra-Low Latency Voice AI Agents with Any LLM

Skip the complexity. Agora’s APIs make it easy to add real-time voice AI to any app or product.

Connect any LLM and deliver voice interactions that feel natural. Build agents that listen, understand, and respond instantly.

Scale globally on Agora’s network optimized for low-latency communication. Ensure reliable, high-quality performance in any environment.

Links from the editorial:

Karpathy from Aug 28; Karpathy from Aug 27; Karpathy from Aug 24; Karpathy from Aug 16 (this one is not in the editorial but interesting to read as well); Karpathy from Aug 9 and from Aug 2


Our 3 WOWs and 1 Promise: New Format – Tesla as a mobile studio, in full self driving. Plus we discuss the new AI challenge by the White House

News from The Usual Suspects ©

A new – listening – car?

Fraunhofer’s “Hearing Car” project gives autonomous vehicles something oddly human: a sense of hearing. Unlike lidar or radar, this AI-powered acoustic tech picks up sirens, slippery roads, and even the sound of kids playing – before a camera sees a thing. But: it filters out human speech in real time. What do you think? Is acoustic sensing the next big leap in driver assistance?

BMW’s Cars Learn to Drive Themselves – with a Qualcomm Accent

Qualcomm and BMW have teamed up to launch Snapdragon Ride Pilot, a jointly built automated driving system making its debut in the BMW iX3. The system blends AI-powered perception, data-fueled decision-making, and a cloud-fed feedback loop that keeps learning on the road.

Hitachi Bets Big on the American Grid

In a rare show of industrial scale and geopolitical timing, Hitachi Energy is pumping $1B into U.S. manufacturing – including $457M for a colossal transformer plant in Virginia. The goal? Power America's AI boom and grid resilience, all while creating 800+ jobs. It's energy policy meets reindustrialization – with just a dash of election-year pageantry.

Amazon's Got an Eye for It

Amazon unveils Lens Live, a turbocharged version of its visual search tool now armed with real-time product scanning and integrated AI insights via Rufus. Just point your camera, and a swipeable carousel does the rest – comparison, carting, wish-listing, all in one shot. It's shopping by sight, sped up by machine learning.

Anthropic Hits Stratospheric

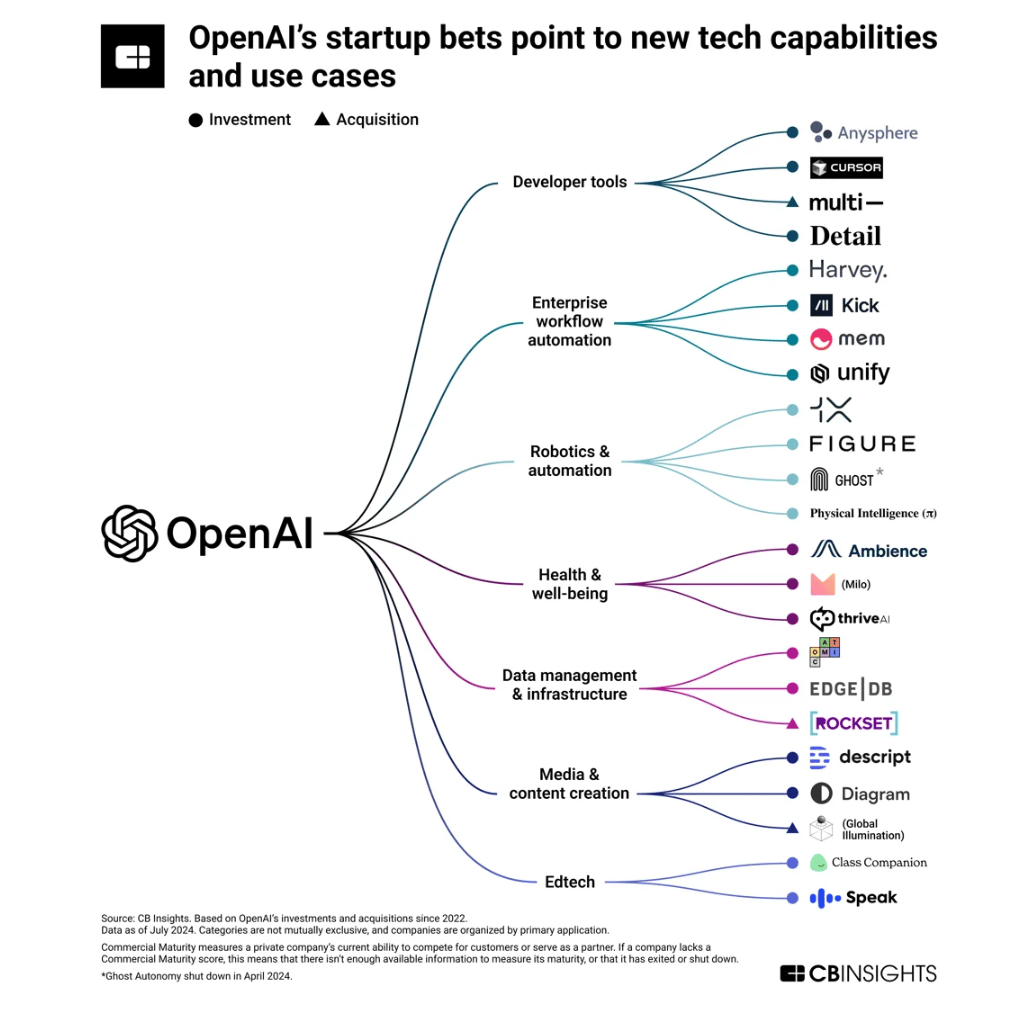

Anthropic just closed a jaw-dropping $13B Series F at a $183B valuation, putting Claude squarely in the enterprise AI throne room. With revenues leaping from $1B to $5B in under a year and Claude Code raking in $500M on its own, this is no hype cycle – it's a full-scale business blitz. ICONIQ leads the charge, betting big on Anthropic’s safety-first, scale-fast playbook.

OpenAI Acqui-hires Its Experimentation Engine

OpenAI just snapped up Statsig and named its founder, Vijaye Raji, as CTO of Applications. Known for A/B testing wizardry, Statsig already powered OpenAI’s rapid iteration behind the scenes. Now it’s in-house. With Raji overseeing ChatGPT and Codex engineering, the move signals a shift toward product polish – with data-driven rigor baked in. Agile AI, meet your new chief tinkerer.

Image Credit: OpenAI M&A by CBInsights

We are reading

gpt5 is smarter than you are by Will Schenk

Are we truly on the verge of the humanoid robot revolution? by UC Berkeley News

Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence by Stanford

Benchmark to check out (it’s like the game Mafia!)

Image Credit: Probing LLM Social Intelligence via Werewolf

Models to pay attention to

Introducing v2 of our SFX model.

Generate any sound effect directly from a prompt, via UI or API.

• Higher SFX quality

• New: seamlessly looping sound effects

• Maximum duration extended from 22s to 30s

• Sample rate increased from 44.1kHz to 48kHz

• Refreshed SFX Library

ElevenLabs (@elevenlabsio), 4:17 PM • Sep 2, 2025

Apertus: a fully open, transparent, multilingual language model with public weights, data recipes, checkpoints, and documentation for sovereign, transparent deployment →read the paper

EmbeddingGemma from Google – deliver an open embedding model optimized for on-device retrieval, clustering, and search, supporting multilingual pipelines and flexible embedding sizes →read the paper

LLaVA-Critic-R1: Your Critic Model is Secretly a Strong Policy Model – transform a multimodal critic into a unified critic-policy through reinforcement learning, enabling strong generation and self-critique →read the paper

Kwai Keye-VL 1.5 Technical Report – advance video understanding in an MLLM with a slow-fast encoding strategy, long-context training, and reasoning-focused post-training →read the paper

Robix: A Unified Model for Robot Interaction, Reasoning and Planning – unify high-level robot planning and dialogue in a single vision-language policy that plans, executes, and interacts in long-horizon tasks →read the paper

Flavors of Moonshine: Tiny Specialized ASR Models for Edge Devices – specialize compact speech recognizers by language to improve accuracy on edge hardware with efficient training mixtures →read the paper

Improving Large Vision and Language Models by Learning from a Panel of Peers – orchestrate peer models to generate, review, and refine outputs so a target LVLM learns from collective judgments with minimal human labeling →read the paper

The freshest research papers, categorized for your convenience

We organize research papers by goal-oriented or functional categories to make it easier to explore related developments and compare approaches. As always, papers we particularly recommend are marked with 🌟

Architecture & Efficiency (models, optimizers, representations)

🌟 Gated Associative Memory: A Parallel O(N) Architecture for Efficient Sequence Modeling (by Rishiraj Acharya) – replace attention with fused local convolution and global associative retrieval to achieve linear scaling →read the paper

🌟 Fantastic Pretraining Optimizers and Where to Find Them (by Stanford) – compare optimizers under rigorous tuning and budgets, revealing modest end-to-end speedups and scale-dependent gains for matrix preconditioners →read the paper

🌟 Reasoning Vectors: Transferring Chain-of-Thought Capabilities via Task Arithmetic – extract a reasoning delta between GRPO and SFT models and apply it additively to boost downstream reasoning →read the paper

Delta Activations: A Representation for Finetuned Large Language Models – embed models by activation shifts relative to a base to cluster domains, aid selection, and enable additive composition →read the paper

Agents & Tool Use (GUI, software engineering, multi-turn tools)

UI-TARS-2 Technical Report: Advancing GUI Agent with Multi-Turn Reinforcement Learning – scale a native GUI agent with data flywheels, stabilized multi-turn RL, and hybrid OS environments to boost cross-benchmark performance →read the paper

VerlTool: Towards Holistic Agentic Reinforcement Learning with Tool Use – unify asynchronous rollouts and modular tool APIs across domains to train multi-turn, tool-augmented agents →read the paper

Post-training & Reasoning Optimization (RLVR, SFT, hybrids, diversity)

Towards a Unified View of Large Language Model Post-Training – unify SFT and RL under a common gradient estimator and introduce hybrid post-training that selects signals dynamically →read the paper

SimpleTIR: End-to-End Reinforcement Learning for Multi-Turn Tool-Integrated Reasoning – stabilize multi-turn tool use by filtering void-turn trajectories to prevent gradient explosions →read the paper

Implicit Actor Critic Coupling via a Supervised Learning Framework for RLVR – reformulate verifiable-reward RL as supervised learning to implicitly couple actor and critic for stable training →read the paper

🌟 Beyond Correctness: Harmonizing Process and Outcome Rewards through RL Training – curate data via process-outcome consistency filters to improve final accuracy and step-quality jointly →read the paper

DCPO: Dynamic Clipping Policy Optimization – adapt clipping bounds token-wise and smooth advantages over time to keep gradients informative and effective →read the paper

Jointly Reinforcing Diversity and Quality in Language Model Generations – optimize for semantic diversity alongside quality so exploration improves both novelty and task performance →read the paper

Improving Large Vision and Language Models by Learning from a Panel of Peers – orchestrate peer LVLMs to generate, review, and refine outputs iteratively, improving benchmarks without heavy human labels →read the paper

Data & Evaluation (benchmarks, datasets, measurement)

Open Data Synthesis For Deep Research – formalize deep research as hierarchical constraint satisfaction and generate large-scale, verifiable multi-step questions to train and test evidence-synthesizing LLMs →read the paper

Drivel-ology: Challenging LLMs with Interpreting Nonsense with Depth – construct a multilingual benchmark of rhetorically paradoxical yet meaningful text to probe pragmatic, emotional, and moral inference gaps →read the paper

🌟 LMEnt: A Suite for Analyzing Knowledge in Language Models from Pretraining Data to Representations – provide an entity-annotated corpus, retrieval tools, and checkpointed models to trace how facts enter and evolve inside LMs →read the paper

🌟 The Gold Medals in an Empty Room: Diagnosing Metalinguistic Reasoning in LLMs with Camlang (by Cambridge, Oxford, UIUC) – introduce a constructed language with grammar and dictionary to test explicit rule-based reasoning distinct from pattern matching →read the paper

SATQuest: A Verifier for Logical Reasoning Evaluation and Reinforcement Fine-Tuning of LLMs – generate SAT-grounded reasoning tasks with controllable difficulty and validated answers for evaluation and RL training →read the paper

Flaw or Artifact? Rethinking Prompt Sensitivity in Evaluating LLMs – show that evaluation heuristics inflate prompt sensitivity and demonstrate reduced variance with LLM-as-a-judge protocols →read the paper

Safety, Guardrails & Bias (policies, detection, robustness)

DynaGuard: A Dynamic Guardrail Model With User-Defined Policies – enforce customizable policies with fast detection or chain-of-thought justification across domains beyond static taxonomies →read the paper

🌟 AMBEDKAR: A Multi-level Bias Elimination through a Decoding Approach with Knowledge Augmentation – apply constitution-guided speculative decoding at inference to reduce culturally specific harms without retraining →read the paper

False Sense of Security: Why Probing-based Malicious Input Detection Fails to Generalize – demonstrate that probes overfit instruction patterns and triggers, urging redesigned evaluations and models →read the paper

Multimodal Generation & Editing (images, personalization)

MOSAIC: Multi-Subject Personalized Generation via Correspondence-Aware Alignment and Disentanglement – align subjects to target regions and disentangle features to preserve identities in multi-subject synthesis →read the paper

🌟 Discrete Noise Inversion for Next-scale Autoregressive Text-based Image Editing – invert autoregressive sampling noise to reconstruct and edit images with prompt-aligned, structure-preserving changes →read the paper

Robotics & Perception (3D geometry, sim-to-real)

🌟 Manipulation as in Simulation: Enabling Accurate Geometry Perception in Robots (by ByteDance) – model depth-camera noise to denoise metric depth, enabling policies trained in sim to transfer directly to real manipulation →read the paper

That’s all for today. Thank you for reading! Please send this newsletter to your colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.
