This Week in Turing Post:

Wednesday / AI 101 series: Rethinking causal attention

Friday / AI Literary Series: Episode 3 – How to Learn with AI

Our news digest is always free. Click on the partner’s link to support us or Upgrade to receive our deep dives in full, directly into your inbox. Join Premium members from top companies like Hugging Face, Microsoft, Google, a16z, Datadog plus AI labs such as Ai2, MIT, Berkeley, .gov, and thousands of others to really understand what’s going on with AI →

Don’t stop at the editorial – the models are worth checking out, as well as the articles in the reading list.

Quantum Whispers in the GPU Roar

“The promotion of the two executives underscores the tech industry and Wall Street’s focus on cloud computing strategy, as companies pour billions of dollars into expanding the infrastructure that powers AI.”

This line from Reuters about Oracle and its two new co-CEOs made me think about how we frame AI’s future. For Wall Street, the story is simple: more AI demand means more GPUs, more datacenters, more cloud contracts. That framing only got more solid today, with OpenAI and NVIDIA announcing a partnership to deploy 10 gigawatts of GPU infrastructure, backed by a potential $100 billion investment. Wall Street hears “AI” and immediately translates it into cloud capacity and silicon supply, which makes it all the more important to pay attention to the quieter signals from research, where scaling is starting to look less like more metal and more like smarter math. And those signals are about quantum. (This editorial is also a nod to “How to reduce the cost of running LLMs by 10-15x” by Devansh.)

For a long time, even quantum researchers didn’t fully know what was possible. But AI has given this area a new push, and now we’re seeing research that shows inference scaling doesn’t have to be purely brute force. Three papers from just the past week suggest that AI might scale along a second axis: efficiency.

Compression: QKANs and quantum activation functions

The first paper is Quantum Variational Activation Functions Empower Kolmogorov-Arnold Networks. A mouthful, but the idea is sharp: instead of using fixed or spline-based nonlinearities in neural nets, you replace them with single-qubit variational circuits that re-upload the input data several times, dubbed DARUANs. Because the input enters these tiny quantum-inspired activations repeatedly through trainable weights, their frequency spectrum grows exponentially with the number of repetitions, which, according to the authors, gives the same expressive power as Fourier-based activations with exponentially fewer parameters. The authors build Quantum Kolmogorov-Arnold Networks (QKANs) on top of this idea and show that they can outperform both MLPs and classical KANs with 30% fewer parameters on regression, classification, and even GPT-style generative tasks.
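To make the “richer frequency spectrum” point concrete, here is a minimal pure-Python sketch of a single-qubit data re-uploading activation. The gate layout (a trainable RZ then RY rotation, followed by an RX(w·x) encoding, per layer, read out as the expectation of Z) and the names `reuploading_activation` and `max_frequency` are our assumptions for illustration, not the authors’ exact DARUAN ansatz. The point it shows: with integer encoding weights, the output contains no frequency above the sum of the weights, so three plain repetitions (1, 1, 1) top out at frequency 3, while trainable weights such as (1, 2, 4) reach 7 at the same depth.

```python
import cmath
import math

# 2x2 complex matrices as nested lists; a qubit state is [amp0, amp1].
def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def rx(a):
    c, s = math.cos(a / 2), math.sin(a / 2)
    return [[c, -1j * s], [-1j * s, c]]

def ry(a):
    c, s = math.cos(a / 2), math.sin(a / 2)
    return [[c, -s], [s, c]]

def rz(a):
    return [[cmath.exp(-0.5j * a), 0], [0, cmath.exp(0.5j * a)]]

def reuploading_activation(x, enc_w, theta, phi):
    """Hypothetical DARUAN-style activation: <Z> after len(enc_w) re-uploading layers."""
    state = [1 + 0j, 0j]                  # start in |0>
    for w, t, p in zip(enc_w, theta, phi):
        state = matvec(rz(t), state)      # trainable rotations
        state = matvec(ry(p), state)
        state = matvec(rx(w * x), state)  # re-upload the input, scaled by weight w
    return abs(state[0]) ** 2 - abs(state[1]) ** 2  # <Z>, always in [-1, 1]

def max_frequency(f, n=64, tol=1e-9):
    """Largest integer frequency with non-negligible DFT weight over [0, 2*pi)."""
    ys = [f(2 * math.pi * k / n) for k in range(n)]
    top = 0
    for freq in range(n // 2):
        coef = sum(y * cmath.exp(-2j * math.pi * freq * k / n) for k, y in enumerate(ys)) / n
        if abs(coef) > tol:
            top = freq
    return top

theta, phi = [0.3, 1.1, 2.0], [0.5, 0.9, 1.7]  # arbitrary "trained" angles
print(max_frequency(lambda x: reuploading_activation(x, [1, 1, 1], theta, phi)))  # 3
print(max_frequency(lambda x: reuploading_activation(x, [1, 2, 4], theta, phi)))  # 7
```

With weights 1, 2, 4, ..., 2^(L-1), the reachable frequencies grow as 2^L - 1 for L layers, which is the kind of exponential growth the paper builds on.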

For inference scaling, it means: if you can get the same accuracy with exponentially fewer knobs to turn, you bend the cost curve. That is as important to a hyperscaler as another substation feeding power to a data hall.

Exactness: Coset sampling for lattice algorithms

The second is Exact Coset Sampling for Quantum Lattice Algorithms. At first glance, it looks like pure math, far from AI. But the key contribution is a subroutine that cancels out unknown offsets and produces exact, uniform cosets – making the subsequent Fourier sampling step provably correct.
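Why do unknown offsets and uniformity matter? A toy classical simulation over the cyclic group Z_n (a stand-in for the lattice setting, and not the paper’s subroutine) shows the textbook fact behind Fourier sampling: for a uniform superposition over a coset x0 + H, the measurement distribution after a quantum Fourier transform does not depend on the offset x0 and is exactly uniform over the multiples of n/d, the annihilator of H. If the superposition is not uniform, probability leaks onto outcomes that say nothing about H. The function names are illustrative.

```python
import cmath
import math

def coset_state(n, d, offset, weights=None):
    """Amplitudes over Z_n supported on the coset offset + <d> (d must divide n)."""
    assert n % d == 0
    size = n // d
    weights = [1.0] * size if weights is None else list(weights)
    norm = math.sqrt(sum(w * w for w in weights))
    psi = [0j] * n
    for m, w in enumerate(weights):
        psi[(offset + m * d) % n] = w / norm
    return psi

def fourier_sampling_probs(psi):
    """Outcome distribution after a unitary QFT over Z_n, then measurement."""
    n = len(psi)
    probs = []
    for k in range(n):
        amp = sum(a * cmath.exp(-2j * math.pi * j * k / n) for j, a in enumerate(psi)) / math.sqrt(n)
        probs.append(abs(amp) ** 2)
    return probs

n, d = 12, 3  # H = {0, 3, 6, 9}; its annihilator is {0, 4, 8}
for offset in range(3):
    p = fourier_sampling_probs(coset_state(n, d, offset))
    print(offset, [round(x, 3) for x in p])  # 1/3 on k = 0, 4, 8 for every offset

# A non-uniform coset state leaks probability outside {0, 4, 8}.
skewed = fourier_sampling_probs(coset_state(n, d, 1, weights=[0.7, 0.5, 0.4, 0.3]))
print(round(sum(skewed[k] for k in range(n) if k % 4), 3))  # 0.088
```

The offset only contributes a global phase per outcome, which is why an exact, uniform coset makes the sampled distribution provably correct regardless of the unknown shift.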

Why does this matter for inference? Because reasoning models today rely on stochastic inference paths – meandering chains of thought, approximate searches, heuristic tool calls. Exactness is rare. Injecting mathematically guaranteed steps into probabilistic workflows could be one of the future directions for reliable AI inference. If QKANs are about compression, this paper is about precision: fewer wasted tokens, fewer dead-end paths, less variance in cost per query.

Hybridization: quantum-classical models in practice

The third paper is more pragmatic: Hybrid Quantum-Classical Model for Image Classification. The authors drop small quantum layers into classical CNNs. On CIFAR-100 and STL-10, these hybrids outperformed purely classical versions while training faster and using fewer parameters. This is not speculative theory; it is evidence that quantum components can already improve efficiency in standard AI tasks.
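For a sense of what “a small quantum layer inside a CNN” means computationally, here is a minimal pure-Python statevector sketch. The circuit layout (RY angle encoding, a chain of CNOTs, one trainable RY per qubit, expectation of Z as output) and the names `quantum_layer` and `hybrid_head` are assumptions for illustration, not the architecture from the paper. In practice such a layer would live inside an autodiff framework so its rotation angles train alongside the CNN weights.

```python
import math

def apply_1q(state, gate, q):
    """Apply a real 2x2 gate to qubit q (qubit q = bit q of the basis index)."""
    mask = 1 << q
    out = state[:]
    for i in range(len(state)):
        if not i & mask:
            j = i | mask
            a, b = state[i], state[j]
            out[i] = gate[0][0] * a + gate[0][1] * b
            out[j] = gate[1][0] * a + gate[1][1] * b
    return out

def apply_cnot(state, control, target):
    """Swap amplitudes of |..1..0..> and |..1..1..> wherever the control bit is 1."""
    out = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            out[i ^ (1 << target)] = state[i]
    return out

def ry(a):
    c, s = math.cos(a / 2), math.sin(a / 2)
    return [[c, -s], [s, c]]

def z_expectation(state, q):
    # Amplitudes stay real here because RY and CNOT are real matrices.
    return sum((-1 if (i >> q) & 1 else 1) * amp * amp for i, amp in enumerate(state))

def quantum_layer(angles, weights):
    """Hypothetical variational layer: encode, entangle, rotate, read out <Z> per qubit."""
    n = len(angles)
    state = [0.0] * (2 ** n)
    state[0] = 1.0                                  # |00...0>
    for q, a in enumerate(angles):
        state = apply_1q(state, ry(a), q)           # angle-encode classical features
    for q in range(n - 1):
        state = apply_cnot(state, q, q + 1)         # entangle neighbouring qubits
    for q, w in enumerate(weights):
        state = apply_1q(state, ry(w), q)           # trainable rotations
    return [z_expectation(state, q) for q in range(n)]

def hybrid_head(cnn_features, w_in, q_weights, w_out):
    """Classical features -> a few qubit angles -> quantum layer -> class logits."""
    angles = [math.pi * math.tanh(sum(w * f for w, f in zip(row, cnn_features))) for row in w_in]
    q_out = quantum_layer(angles, q_weights)
    return [sum(w * z for w, z in zip(row, q_out)) for row in w_out]

logits = hybrid_head(
    cnn_features=[0.2, -1.3, 0.7, 0.05],                  # e.g. pooled CNN activations
    w_in=[[0.5, 0.1, -0.3, 0.2], [-0.4, 0.2, 0.6, 0.1]],  # 4 features -> 2 qubit angles
    q_weights=[0.3, -0.8],                                # trainable circuit angles
    w_out=[[1.0, -0.5], [0.2, 0.9], [-0.7, 0.4]],         # 2 readouts -> 3 class logits
)
print(len(logits))  # 3
```

Simulating n qubits costs memory proportional to 2^n, which is why “a handful of qubits” is the practical regime when the quantum layer runs on classical hardware.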

It means that you don’t need a fault-tolerant quantum computer to start seeing efficiency gains. A handful of qubits, simulated or physical, stitched into classical pipelines, can tilt the inference economics. The models trained faster and consumed less memory, the two metrics every hyperscaler is desperate to optimize.

What it means for inference scaling

Taken together, these papers suggest that scaling will not be only about throwing larger clusters at larger models. It might also be about extracting more from each parameter, cutting errors at the source, and blending quantum and classical strengths. Wall Street may see cloud capex as the only metric that matters, but research is pointing toward new efficiency primitives that could one day reshape that calculus.

And this is not lost on companies like NVIDIA, even as they announce their intention to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed. Their quantum efforts are also growing. NVIDIA has built CUDA-Q, an open software platform for hybrid quantum-classical programming. It launched DGX Quantum, a reference architecture linking quantum control systems directly into AI supercomputers. It is opening a dedicated quantum research center with hardware partners, and Jensen Huang is aggressively investing in quantum startups like PsiQuantum (which just raised $1B and says its computer will be ready in two years), Quantinuum, and QuEra through NVentures, marking a major strategy shift in 2025 and legitimizing quantum’s timeline for commercial viability.

What we will see: The cloud build-out will continue. GPUs will remain central. But if you pay attention to the research pipeline, you see quantum ideas slipping into the story of inference scaling. They are still early, but they suggest that efficiency and structure may soon weigh as much as raw scale. It’s worth paying attention to. Soon it will all work.

Topic number two: With so much out there, attention gets stretched too thin. What matters is holding focus on the things that shape the long-term horizon. In our Attention Span #2, we discuss Melanie Mitchell’s “Magical Thinking on AI”, debunking some real examples of when AI “felt” sentient. You should send this video to those who think it is. Watch it here →

Curated Collections – LoRA is rolling

News from The Usual Suspects ©

Alibaba’s Agents Are Getting Uncomfortably Smart

While most labs are still trying to get their chatbots to use tools without melting down, Alibaba’s Tongyi Lab is building full-blown thinking systems – agents that plan, adapt, reason, and even know when to stop talking. Over six standout papers, they’re quietly redefining what agentic AI can be. And yes, all six papers from Tongyi Lab are accompanied by open-source code and datasets.

WebWeaver kicks it off with a human-style research agent: a planner that updates its outline as it finds new evidence, and a writer that retrieves only what it needs, section by section. It tops the deep research benchmarks it was evaluated on – not by stuffing more context in, but by working smarter.
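The planner/writer split can be sketched roughly as follows, assuming a hypothetical `search` function that returns (section topic, snippet) pairs. The planner files each snippet in an evidence bank under a citation id and lets new evidence open new outline sections; the writer then loads only the evidence a section cites. This is our simplification for illustration, not WebWeaver’s code.

```python
from dataclasses import dataclass, field

@dataclass
class EvidenceBank:
    """Hypothetical memory bank: evidence snippets keyed by citation id."""
    items: dict = field(default_factory=dict)

    def add(self, text):
        cid = f"E{len(self.items) + 1}"
        self.items[cid] = text
        return cid

def plan(question, search, rounds=2):
    """Planner: interleave searching with outline revision."""
    bank, outline = EvidenceBank(), {}
    queries = [question]
    for _ in range(rounds):
        follow_ups = []
        for q in queries:
            for topic, snippet in search(q):
                if topic not in outline:                  # new evidence can open a new section
                    outline[topic] = []
                    follow_ups.append(f"{question} / {topic}")
                outline[topic].append(bank.add(snippet))  # cite it under that section
        queries = follow_ups
    return outline, bank

def write(outline, bank, write_section):
    """Writer: each section sees only the evidence it cites, not the whole bank."""
    return [write_section(topic, [bank.items[c] for c in cids])
            for topic, cids in outline.items()]

# Toy usage with a stubbed search engine (hypothetical data).
def toy_search(query):
    hits = {
        "q": [("background", "snippet A"), ("findings", "snippet B")],
        "q / findings": [("findings", "snippet C"), ("limitations", "snippet D")],
    }
    return hits.get(query, [])

outline, bank = plan("q", toy_search)
report = write(outline, bank, lambda t, ev: f"{t}: {' '.join(ev)}")
print(report)  # ['background: snippet A', 'findings: snippet B snippet C', 'limitations: snippet D']
```

Keeping citations as ids in the outline is what lets the writer stay within a small context per section instead of carrying every retrieved page.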

AgentFounder goes deeper, showing that if you start training models to behave like agents (instead of gluing on tool use later), you get far better results. The authors propose Agentic Continual Pre-training (Agentic CPT), and the resulting model is, they report, the first open 30B model to beat much larger closed-source systems on tool-heavy tasks.

WebSailor-V2 makes open-source agents competitive with the big boys. With a clever mix of simulation, real-world training, and a dataset designed to confuse them on purpose, it outperforms models more than 20x its size.

AgentScaler takes a different angle: instead of just training smarter models, it builds more diverse environments for them to learn agentic behavior – like calling APIs across weird and varied scenarios. Less fine-tuning, more bootstrapping real intelligence.

WebResearcher treats research as an ongoing process, not a one-shot prompt. Agents keep track of what they’ve learned, refine their reports over time, and synthesize insights from messy web data. Bonus: it can even generate its own training data.

ReSum tackles the long-context ceiling by teaching agents to summarize themselves as they go. This lets them keep reasoning over hundreds of interactions without forgetting what just happened. It’s memory meets metacognition.
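The summarize-as-you-go idea can be sketched as a simple agent loop: when the running history exceeds a budget, it is replaced by the task plus a summary, and reasoning continues from there. Here `step` and `summarize` are hypothetical stand-ins for model calls, and the word count is a crude proxy for tokens; ReSum’s actual trigger and summarization prompt may differ.

```python
def run_agent(task, step, summarize, budget=100, max_turns=200):
    """Hypothetical ReSum-style loop: when the history exceeds a budget,
    replace it with the task plus a model-written summary and keep going."""
    history = [f"TASK: {task}"]
    for _ in range(max_turns):
        if sum(len(h.split()) for h in history) > budget:  # word count as a token proxy
            history = [history[0], f"SUMMARY: {summarize(history)}"]
        action, observation, done = step(history)
        history.append(f"{action} -> {observation}")
        if done:
            return observation, history
    return None, history

# Toy usage: stubbed step/summarize functions (hypothetical).
turns = {"n": 0}

def toy_step(history):
    turns["n"] += 1
    return f"search#{turns['n']}", "result " * 20, turns["n"] == 50

def toy_summarize(history):
    return f"{len(history) - 1} earlier entries condensed"

answer, history = run_agent("find X", toy_step, toy_summarize)
print(turns["n"], len(history))  # 50 turns ran, but only a few entries are kept
```

The design choice worth noting: the context stays bounded no matter how many turns the agent takes, at the cost of trusting the summary to keep what matters.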

Tongyi doesn’t just want its systems to think; it wants its agents to behave with structure, memory, and intent. And again – open-source!

Intel + Nvidia

Cats and dogs are dining together: Nvidia and Intel are co-developing x86 chips that combine Intel CPUs with Nvidia RTX GPU chiplets for gaming PCs – think AMD’s APUs, but with extra sauce. Nvidia is also commissioning custom x86 CPUs from Intel for its data centers. Oh, and Nvidia is sweetening the deal with a cool $5B investment in Intel. Silicon geopolitics just got very interesting.

Crazy Rounds This Week

Groq raises $750M at a $6.9B valuation to chip away at Nvidia’s AI dominance with its LPU-powered inference machines.

Replit lands $250M at a $3B valuation, launches Agent 3 to turn AI into your next full-stack teammate.

Figure AI tops $1B in funding, hits $39B valuation, and promises 100,000 humanoids in 4 years.

Follow us on 🎥 YouTube Twitter Hugging Face 🤗

We are reading/watching

Thinking, Searching, and Acting – A reflection on reasoning models by Nathan Lambert

A postmortem of three recent issues by Anthropic

Models to pay attention to

World Labs Marble – generate persistent, navigable 3D worlds from text or images with export to Gaussian splats and web-ready pipelines →see their tweet

DeepSeek-V3.1-Terminus – refine a frontier LLM with better language consistency, improved code/search agents, and updated inference tooling while keeping original capabilities →read the paper

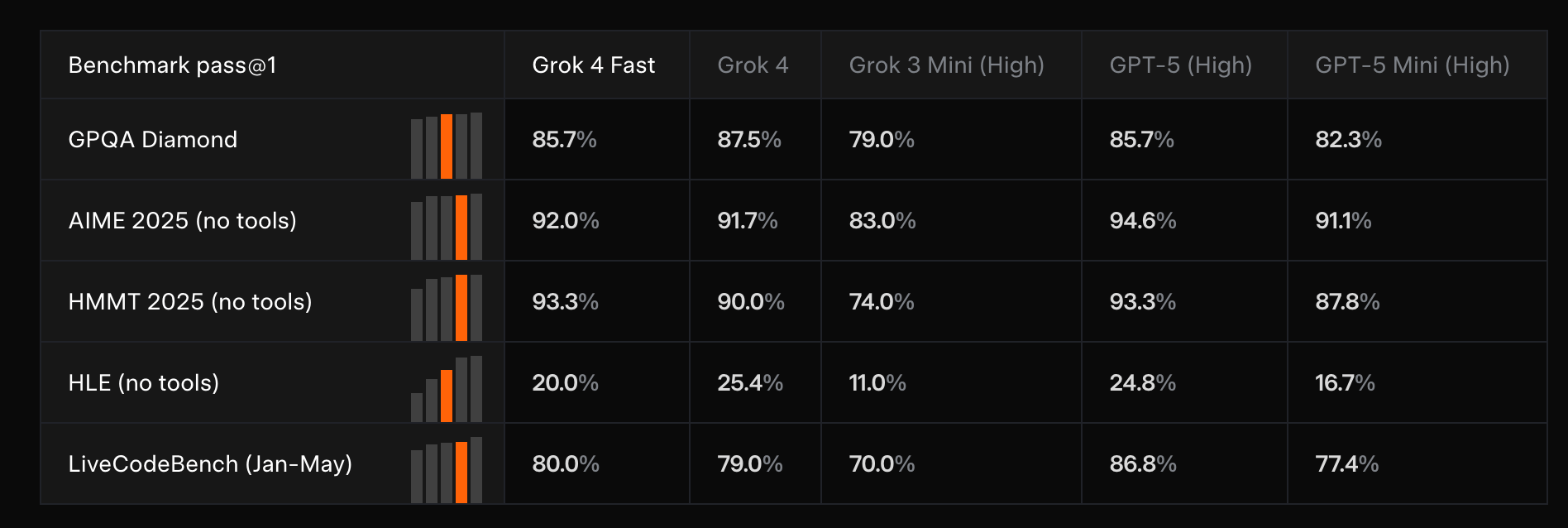

Grok 4 Fast – deliver high-accuracy, cost-efficient reasoning with native tool use, 2M-token context, and unified reasoning/non-reasoning modes →read the paper

Image Credit: Grok’s website

Magistral-Small-2509 – offer a 24B reasoning-centric, multimodal model with [THINK] trace tokens, 128k context, and improved formatting/persona for practical deployment →read the paper

Apertus – address data compliance and multilingual coverage by pretraining only on permissive sources, suppressing memorization with a Goldfish objective, and releasing full reproducible artifacts at 8B/70B scales →read the paper

SAIL-VL2 Technical Report – scale an open vision-language suite via curated multimodal data, progressive pretraining, and MoE/thinking-fusion post-training to reach SOTA across image/video reasoning →read the paper

Towards a Physics Foundation Model (GPhyT) – learn general physical dynamics from diverse simulations and generalize zero-shot across domains with stable long-horizon rollouts →read the paper

The freshest research papers, categorized for your convenience

We organize research papers by goal-oriented or functional categories to make it easier to explore related developments and compare approaches. As always, papers we particularly recommend are marked with 🌟

Multimodal Foundations & Representation

Lost in Embeddings: Information Loss in Vision-Language Models – analyze how projection layers distort visual representations, quantifying patch-level loss and linking it to degraded VQA performance →read the paper

AToken: A Unified Tokenizer for Vision – design a shared 4D latent space for images, videos, and 3D, unifying reconstruction and semantic understanding →read the paper

🌟 MARS2 2025 Challenge on Multimodal Reasoning – benchmark multimodal reasoning across real-world and specialized tasks, releasing datasets and evaluating dozens of models →read the paper

LLM-I: LLMs are Naturally Interleaved Multimodal Creators – orchestrate diverse visual tools (search, diffusion, editing) under an RL-trained agent to surpass single-model multimodal systems →read the paper

Unleashing the Potential of Multimodal LLMs for Zero-Shot Spatio-Temporal Video Grounding – decouple queries into attribute and action cues for more precise video grounding →read the paper

Robotics, Action & World Models

RynnVLA-001: Using Human Demonstrations to Improve Robot Manipulation – pretrain on ego-centric manipulation videos and trajectories, bridging frame prediction with action representation →read the paper

🌟 World Modeling with Probabilistic Structure Integration (by Stanford Neurolab) – integrate causal structure extraction and probabilistic modeling into world models to yield controllable prediction handles →read the paper

🌟 ScaleCUA: Scaling Open-Source Computer Use Agents with Cross-Platform Data (by Shanghai AI Lab) – build large datasets across OSs to improve GUI automation and computer-use agents →read the paper

🌟 UI-S1: Advancing GUI Automation via Semi-online Reinforcement Learning (by Zhejiang, Tongyi, Alibaba) – bridge offline and online RL for GUI agents with semi-online rollouts and adaptive reward design →read the paper

Reasoning, Math & Tool Integration

THOR: Tool-Integrated Hierarchical Optimization via RL for Mathematical Reasoning – align step-level tool feedback with trajectory-level reasoning for math and code tasks →read the paper

EconProver: Towards More Economical Test-Time Scaling for Automated Theorem Proving – reduce token and sampling costs in theorem proving by dynamically switching chain-of-thought and diversifying RL passes →read the paper

🌟 Improving Context Fidelity via Native Retrieval-Augmented Reasoning (by DIRO, MetaGPT, Mila, McGill, Yale, CIFAR) – train models to integrate retrieved evidence directly in the reasoning process, raising answer reliability →read the paper

🌟 ToolRM: Outcome Reward Models for Tool-Calling Large Language Models (by IBM Research) – introduce benchmarks and train outcome-focused reward models specialized for tool-based reasoning →read the paper

Reinforcement Learning & Alignment Advances

🌟 Learning to Optimize Multi-Objective Alignment Through Dynamic Reward Weighting (by University of Notre Dame, Amazon) – adapt reward weights online to explore Pareto fronts in reasoning and alignment →read the paper

Single-stream Policy Optimization – replace group-based baselines with persistent global tracking for smoother, scalable RL optimization →read the paper
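To see the difference between a group-based baseline and persistent tracking, here is a hedged sketch. The first function computes GRPO-style advantages (reward minus the mean reward of several samples for the same prompt). The second keeps a hypothetical per-prompt value estimate that persists across training steps, so one sample per prompt is enough, and then normalizes advantages across the whole batch. The exponential moving average and `alpha` are our simplification; the paper’s tracker may differ.

```python
from statistics import mean, pstdev

def group_advantages(rewards):
    """GRPO-style: baseline is the mean reward of G samples for the same prompt."""
    b = mean(rewards)
    s = pstdev(rewards) or 1.0
    return [(r - b) / s for r in rewards]

class ValueTracker:
    """Hypothetical persistent per-prompt baseline (exponential moving average)."""

    def __init__(self, alpha=0.1, init=0.5):
        self.alpha, self.init, self.values = alpha, init, {}

    def advantages(self, batch):
        """batch: list of (prompt_id, reward), one sample per prompt."""
        raw = []
        for pid, r in batch:
            v = self.values.get(pid, self.init)
            raw.append(r - v)                             # advantage vs. the tracked value
            self.values[pid] = v + self.alpha * (r - v)   # update the tracker after use
        m, s = mean(raw), pstdev(raw) or 1.0
        return [(a - m) / s for a in raw]                 # normalize across the whole batch

print(group_advantages([1.0, 0.0, 0.0, 1.0]))  # [1.0, -1.0, -1.0, 1.0]
print(group_advantages([1.0]))                 # [0.0]: a group of one gives no signal
tracker = ValueTracker(alpha=0.5)
print(tracker.advantages([("p1", 1.0), ("p2", 0.0)]))  # [1.0, -1.0]
```

The single-sample case shows why a persistent baseline helps: a group of one has no spread to learn from, while the tracked value still provides a reference point.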

Reasoning over Boundaries: Enhancing Specification Alignment via Test-time Deliberation – use reflection and revision at test time to adaptively align with dynamic safety or behavioral specifications →read the paper

FlowRL: Matching Reward Distributions for LLM Reasoning – match full reward distributions instead of maximizing scalar signals, encouraging diverse valid reasoning paths →read the paper

Evolving Language Models without Labels – couple majority-vote stability with novelty-seeking variation to enable label-free self-improvement →read the paper
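A hedged sketch of the two signals the one-liner describes, under our own assumptions: each sampled answer earns a stability reward for agreeing with the majority vote across samples, plus a novelty bonus computed here as one minus the average word-overlap (Jaccard) similarity of its reasoning to the other samples. The `beta` weighting and the Jaccard measure are illustrative stand-ins, not the paper’s formulation.

```python
from collections import Counter

def jaccard(a, b):
    """Word-set overlap between two texts, in [0, 1]."""
    sa, sb = set(a.split()), set(b.split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0

def label_free_rewards(samples, beta=0.5):
    """samples: list of (reasoning_text, final_answer) for one prompt."""
    votes = Counter(ans for _, ans in samples)
    majority, _ = votes.most_common(1)[0]
    rewards = []
    for i, (text, ans) in enumerate(samples):
        others = [t for j, (t, _) in enumerate(samples) if j != i]
        sim = sum(jaccard(text, t) for t in others) / len(others) if others else 0.0
        stability = 1.0 if ans == majority else -1.0   # agree with the majority vote
        rewards.append(stability + beta * (1.0 - sim))  # bonus for differing reasoning
    return rewards

samples = [("a b c", "4"), ("a b d", "4"), ("x y z", "5")]
print(label_free_rewards(samples))  # [1.375, 1.375, -0.5]
```

The majority term keeps training anchored without labels, while the novelty term discourages all samples from collapsing onto one reasoning path.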

Test-Time Learning & In-Context Generalization

🌟 Is In-Context Learning Learning? (by Microsoft) – argue that ICL constitutes learning in a mathematical sense and empirically characterize its limits by ablating memorization, pretraining shifts, and prompt styles, showing sensitivity to exemplar distributions and chain-of-thought prompting at scale →read the paper

Privacy, Security & Unlearning

Scrub It Out! Erasing Sensitive Memorization in Code Language Models via Machine Unlearning – selectively remove memorized sensitive code segments without retraining full models →read the paper

The Sum Leaks More Than Its Parts: Compositional Privacy Risks and Mitigations in Multi-Agent Collaboration – study privacy risks emerging from multi-agent compositions and propose defenses →read the paper

Phi: Preference Hijacking in Multi-modal Large Language Models at Inference Time – show how optimized images can bias outputs toward attacker preferences without overt harm →read the paper

Compression, Efficiency & Specialized Training

🌟 Optimal Brain Restoration for Joint Quantization and Sparsification of LLMs (by ETH Zurich) – align pruning and quantization through error compensation to maximize efficiency without heavy retraining →read the paper
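For context, here is the naive two-step pipeline that joint methods like OBR aim to improve on: prune the smallest-magnitude weights, then round the survivors to a uniform grid. Each step adds its own error, and nothing compensates for it; OBR’s contribution, per the one-liner above, is exactly that compensation, which this sketch deliberately leaves out. Function names and defaults are hypothetical.

```python
def prune_then_quantize(weights, sparsity=0.5, bits=4):
    """Naive baseline: magnitude pruning, then symmetric uniform quantization."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0                                  # step 1: drop the k smallest weights
    qmax = 2 ** (bits - 1) - 1                           # e.g. 7 for signed 4-bit
    scale = max(abs(w) for w in pruned) / qmax or 1.0    # per-tensor scale (avoid zero)
    return [round(w / scale) * scale for w in pruned]    # step 2: snap to the grid

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

w = [0.9, -0.05, 0.4, -0.7, 0.02, 0.3]
q = prune_then_quantize(w)
print([round(x, 3) for x in q])  # [0.9, 0.0, 0.386, -0.643, 0.0, 0.0]
print(round(mse(w, q), 5))       # combined pruning + rounding error
```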

GAPrune: Gradient-Alignment Pruning for Domain-Aware Embeddings – prune with domain-aware importance scoring to preserve specialized capabilities while reducing size →read the paper

zELO: ELO-inspired Training Method for Rerankers and Embedding Models – reformulate ranking as an Elo-like optimization problem, training strong rerankers without supervision →read the paper
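zELO’s name points to the Elo rating system. As background only, and not the paper’s training method, here is the classic Elo update applied to pairwise document comparisons for a single query: each “match” is a judgment that document A is more relevant than B, and the resulting ratings give a relevance ordering a reranker could be trained to reproduce. The K-factor of 32 and the 400-point scale are the usual chess conventions; the document names are placeholders.

```python
def expected_score(r_a, r_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

def elo_ratings(docs, judgments, k=32.0, init=1000.0, epochs=20):
    """judgments: list of (winner, loser) document pairs for one query."""
    ratings = {d: init for d in docs}
    for _ in range(epochs):
        for winner, loser in judgments:
            e_w = expected_score(ratings[winner], ratings[loser])
            ratings[winner] += k * (1.0 - e_w)  # winner gains what it did not expect
            ratings[loser] -= k * (1.0 - e_w)   # zero-sum: loser gives up the same amount
    return ratings

docs = ["doc_a", "doc_b", "doc_c"]
judgments = [("doc_a", "doc_b"), ("doc_b", "doc_c"), ("doc_a", "doc_c")]
ratings = elo_ratings(docs, judgments)
print(sorted(ratings, key=ratings.get, reverse=True))  # ['doc_a', 'doc_b', 'doc_c']
```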

That’s all for today. Thank you for reading! Please send this newsletter to your colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.