Computer Vision (CV) is a fascinating field within artificial intelligence that focuses on giving machines the ability to "see" and understand the world through images and videos. Its roots go back decades, as we’ve explored in The Dawn of Computer Vision: From Concept to Early Models (1950-70s) and Computer Vision in the 1980s: The Quiet Decade of Key Advancements. The years from the 1990s to the early 2010s were a particularly exciting time, when foundational models and concepts paved the way for today's deep learning revolution in computer vision. Let's take a walk down memory lane and revisit some of the key breakthroughs!

In the report prepared for the panel discussion "Machine Vision in the 90s: Applications and How to Get There" in 1990, the authors highlighted several concerns regarding the field of machine vision:

Fundamental Understanding: There is a significant gap in our understanding of how visual information is processed, whether by humans or machines. This gap makes it challenging to build effective machine vision systems, often requiring starting from scratch for each new application.

Modeling and Prediction Difficulties: It is hard to predict the performance of machine vision systems, which limits the potential of purely theoretical research and necessitates costly experiments.

Technological vs. Algorithmic Advancements: While there have been substantial advancements in the technology related to sensors and processing power, there has not been a corresponding advancement in the algorithms used in machine vision.

Slow Transfer to Practical Applications: The transition from research to practical, commercial applications has been sluggish, impacting the commercial viability and job prospects within the field.

Market and Economic Challenges: Many companies in the machine vision sector are struggling, and there is a general scaling back on automation by the industry.

Though all of the above was true, one of the most tangible obstacles to algorithmic progress was the lack of standardized datasets.

The Dataset that Revolutionized Image Recognition: Yann LeCun et al. and MNIST

In the early days of machine learning, researchers relied on small, custom collections of data, which hindered fair comparisons between algorithms. At the time, Yann LeCun was deeply involved in developing convolutional neural networks (CNNs), specialized neural networks designed to excel at image recognition tasks. As we mentioned in the previous episode, the 80s closed with LeCun demonstrating the practical use of backpropagation in CNNs at Bell Labs in 1989, pioneering the recognition of handwritten digits and proving the effectiveness of neural networks in real-world applications. Yet LeCun needed a large, clean dataset to train and fully demonstrate the power of his CNNs. The existing datasets from the National Institute of Standards and Technology (NIST), while extensive, were filled with messy handwriting variations and imaging imperfections.

This is where LeCun's ingenuity came into play. He and his team took the raw NIST datasets and embarked on a meticulous transformation. They size-normalized and centered the handwritten digits and remixed NIST's source collections into new training and test sets. The result was MNIST: a database of 60,000 training images and 10,000 test images that became the gold standard of its time.
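The centering step can be illustrated with a small sketch. It assumes centering means shifting a digit so that its intensity center of mass lands in the middle of a fixed-size canvas, which is how the MNIST documentation describes it. The function name `center_by_mass` and the nested-list image format are hypothetical choices for illustration, not the original preprocessing code.

```python
def center_by_mass(glyph, size):
    """Paste a 2D glyph onto a size x size canvas, centered on its intensity centroid.

    `glyph` is a list of rows of non-negative pixel values (an assumed format).
    """
    total = sum(sum(row) for row in glyph)
    if total == 0:
        raise ValueError("glyph has no ink to center")

    # Intensity-weighted mean row and column of the glyph.
    cy = sum(y * v for y, row in enumerate(glyph) for v in row) / total
    cx = sum(x * v for row in glyph for x, v in enumerate(row)) / total

    # Whole-pixel shift that moves the centroid to the canvas center.
    dy = round((size - 1) / 2 - cy)
    dx = round((size - 1) / 2 - cx)

    canvas = [[0] * size for _ in range(size)]
    for y, row in enumerate(glyph):
        for x, v in enumerate(row):
            ty, tx = y + dy, x + dx
            if 0 <= ty < size and 0 <= tx < size:  # drop pixels shifted off-canvas
                canvas[ty][tx] = v
    return canvas
```

For MNIST-sized images the canvas would be 28 x 28; the sketch works for any canvas size at least as large as the glyph.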

The impact of MNIST was profound. Its standardized format and relative simplicity allowed researchers worldwide to easily compare their algorithms, focusing on the models themselves rather than time-consuming data cleaning. MNIST is still in demand today! Its manageable size and relative ease of use make it a go-to tool for students and researchers just getting started in machine learning and early deep learning experimentation. The dataset is downloaded and studied regularly on websites such as Kaggle.

From MNIST to LeNet-5

The MNIST database of handwritten digits seems simple in retrospect, but its creation was a turning point for deep learning. This carefully curated dataset allowed Yann LeCun to train and evaluate his CNN models, specifically LeNet-5, which reached impressive accuracy and showcased the architecture's remarkable potential for image recognition. LeNet-5 was introduced in the paper “Gradient-Based Learning Applied To Document Recognition” in 1998 by Yann LeCun, Leon Bottou, Yoshua Bengio, and Patrick Haffner. It is one of the earliest CNN models and is still in use.

LeNet-5 at a glance

The separate test set in MNIST helped LeCun combat overfitting, guiding architectural choices towards a model that could generalize to new examples. Moreover, MNIST's focus on a challenging, real-world problem – accurately reading handwritten digits – channeled research into practical directions.

LeNet-5, born from the work on MNIST, holds a special place in the history of deep learning:

Proof of Concept: LeNet-5's demonstrated success on MNIST became a strong proof of the power of CNNs for image recognition tasks. This prompted further research and investment in the field.

Foundation for Further Work: LeNet-5's architecture laid the groundwork for modern CNNs. Many of its core principles, although refined over time, persist to this day.

Real-World Impact: LeNet-5 was deployed in commercial systems used to read checks and zip codes, demonstrating the ability of deep learning to move beyond theory and into practical use.

Beyond the Limits of Perception: David Lowe's Development of SIFT

While LeNet-5 excelled at recognizing individual digits, the world around us is filled with more complex objects in varied scenes. At the time, machines struggled to reliably recognize objects across varying scales, perspectives, and lighting conditions, and traditional feature detection methods stumbled in these realistic scenarios. David Lowe, a Canadian computer scientist, was highly dissatisfied with that and decided to try a new approach. The result was the Scale Invariant Feature Transform (SIFT). SIFT locates distinctive keypoints within an image, such as corners and blob-like structures that stand out from their surroundings. Around each keypoint, a descriptor is calculated that captures local image patterns while remaining remarkably resilient to changes in scale, rotation, or illumination.

Despite an initial wave of skepticism and rejections:

I did submit papers on earlier versions of SIFT to both ICCV and CVPR (around 1997/98) and both were rejected. I then added more of a systems flavor and the paper was published at ICCV 1999, but just as a poster. By then I had decided the computer vision community was not interested, so I applied for a patent and intended to promote it just for industrial applications.

David Lowe (from Yann LeCun's website)

Lowe persisted in refining and demonstrating SIFT's capabilities. His pivotal publications in 1999 (Object Recognition from Local Scale-Invariant Features) and 2004 (Distinctive Image Features from Scale-Invariant Keypoints) catalyzed a shift in the CV community, establishing SIFT as a landmark algorithm.

Image Credit: The original paper

The implications of SIFT were profound. Its ability to match features across drastically different images empowered advances in image stitching, 3D reconstruction, and object recognition. Computer vision could finally venture outside controlled laboratory settings and into the complexity of the real world.

The Birth of Real-Time Face Detection: The Viola-Jones Algorithm

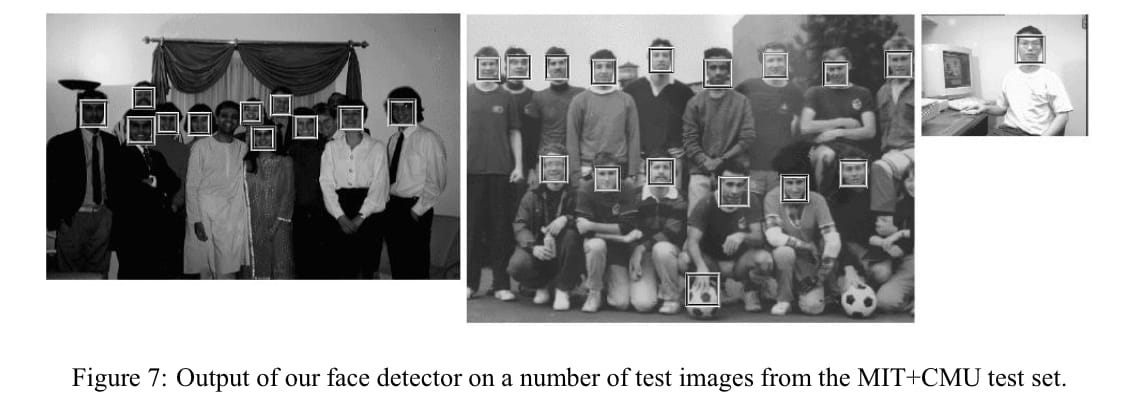

As we explore the evolution of computer vision, we've seen how LeNet-5 showcased the capabilities of CNNs in image recognition, while SIFT excelled in robust object recognition across varying conditions. Building on these advancements, the Viola-Jones algorithm introduced a targeted approach to real-time face detection. Unlike the computationally intensive LeNet-5 and the versatile SIFT, Viola-Jones emphasized speed and accuracy, transforming face detection technology with its innovative use of integral images for fast feature evaluation.

Paul Viola and Michael Jones presented the algorithm in their 2001 paper "Rapid Object Detection using a Boosted Cascade of Simple Features".

At the heart of this algorithm lies a series of ingenious ideas.

Firstly, instead of analyzing raw pixels, the algorithm employs 'Haar-like features'. These features act as tiny pattern detectors, resembling rectangles placed at different positions on an image. By calculating the differences in brightness between these rectangles, they can swiftly identify basic facial structures (eyes being darker than cheeks, for example).

Another key innovation is the 'integral image', a computational trick that allows near-instant calculation of Haar-like features regardless of their size. This was a performance game-changer.
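To make the integral-image trick concrete, here is a minimal pure-Python sketch. It builds a summed-area table once and then evaluates a two-rectangle Haar-like feature with a fixed number of lookups, whatever the rectangle size. The function names, the nested-list image format, and the sign convention (lower half minus upper half) are assumptions made for illustration, not the paper's code.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Everything above (previous table row) plus the current row so far.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Two-rectangle feature: sum of the lower half minus sum of the upper half.

    A large positive value means the upper band is darker than the lower one,
    as with an eye region sitting above a brighter cheek region.
    """
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper


# A toy 4x4 "image": a dark band on top of a bright band.
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [9, 9, 9, 9],
    [9, 9, 9, 9],
]
table = integral_image(toy)
feature_value = two_rect_feature(table, 0, 0, 4, 4)
```

Because `rect_sum` never loops over pixels, the cost of a feature does not grow with its area, which is the property the paragraph above describes.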

Viola and Jones then used AdaBoost, a machine learning technique, to carefully select the most important Haar-like features out of a massive pool, building a powerful classifier.
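The feature-selection idea can be sketched with a generic AdaBoost loop over threshold "stumps", where each boosting round picks the single feature that best separates the reweighted examples. This is a simplified illustration under assumed conventions (labels are +1 or -1, each row holds precomputed feature values, all function names are hypothetical). It is not the exact training procedure from the paper.

```python
import math


def stump_predict(row, feature, threshold, polarity):
    """Predict +1 or -1 from a single feature compared against a threshold."""
    return 1 if polarity * row[feature] >= polarity * threshold else -1


def best_stump(features, labels, weights):
    """Exhaustively find the stump with the lowest weighted error."""
    best = None
    for j in range(len(features[0])):
        for threshold in sorted({row[j] for row in features}):
            for polarity in (1, -1):
                error = sum(
                    w
                    for row, label, w in zip(features, labels, weights)
                    if stump_predict(row, j, threshold, polarity) != label
                )
                if best is None or error < best[0]:
                    best = (error, j, threshold, polarity)
    return best


def adaboost(features, labels, rounds):
    """Return a list of (alpha, feature, threshold, polarity) weak classifiers."""
    n = len(labels)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        error, j, threshold, polarity = best_stump(features, labels, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - error) / error)
        ensemble.append((alpha, j, threshold, polarity))
        # Increase the weight of misclassified examples, decrease the rest.
        updated = [
            w * math.exp(-alpha * label * stump_predict(row, j, threshold, polarity))
            for row, label, w in zip(features, labels, weights)
        ]
        total = sum(updated)
        weights = [w / total for w in updated]
    return ensemble


def predict(ensemble, row):
    """Weighted vote of all selected stumps."""
    score = sum(a * stump_predict(row, j, t, p) for a, j, t, p in ensemble)
    return 1 if score >= 0 else -1
```

In this sketch the "massive pool" is simply every column of `features`; the feature indices that end up in `ensemble` are the ones boosting selected.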

Their final stroke of brilliance was the 'cascade of classifiers'. Instead of applying a complex classifier to every patch of an image, a series of increasingly complex classifiers is used. Simple classifiers quickly eliminate most non-face regions, leaving only the most promising areas to be scrutinized by more computationally demanding classifiers further along the cascade. This is what allowed real-time performance.
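The early-exit behavior of a cascade can be shown in a few lines. In the sketch below, each stage is a pair of a scoring function and a threshold, and a window is rejected by the first stage it fails. The two toy stages are stand-ins invented for illustration; in a real detector each stage would be a boosted classifier over Haar-like features.

```python
def evaluate_cascade(stages, window):
    """Run stages in order; return (accepted, number_of_stages_evaluated)."""
    for index, (score, threshold) in enumerate(stages, start=1):
        if score(window) < threshold:
            return False, index  # rejected early: later stages never run
    return True, len(stages)


# Hypothetical stages operating on a flat list of pixel values.
def contrast(window):
    """Cheap first check: is there any contrast at all?"""
    return max(window) - min(window)


def lower_minus_upper(window):
    """Costlier check: is the second half brighter than the first half?"""
    half = len(window) // 2
    return sum(window[half:]) - sum(window[:half])


toy_stages = [(contrast, 50), (lower_minus_upper, 100)]

flat_patch = [10] * 8                             # no structure, fails stage 1
face_like = [10, 10, 10, 10, 90, 90, 90, 90]      # dark above bright, passes both
inverted = [90, 90, 90, 90, 10, 10, 10, 10]       # bright above dark, fails stage 2

results = [evaluate_cascade(toy_stages, w) for w in (flat_patch, face_like, inverted)]
```

Since most windows in a typical image look like `flat_patch`, the bulk of the work is done by the cheapest stage, which is where the speedup comes from.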

Image Credit: The Original Paper

Jitendra Malik and the interpretation of visual information

One of the most prolific figures, both in terms of research and educational efforts starting in the 1990s, is Jitendra Malik.

His main focus from the very beginning was how computers could interpret visual information. The fundamental building blocks of images – edges, textures, and contours – became his area of research. Several landmark techniques and algorithms emerged from that and remain widely used today:

Anisotropic Diffusion (the paper): A method for smoothing images while preserving important details and edges. Vital in image processing and noise reduction.

Normalized Cuts (the paper): A powerful approach to image segmentation, grouping pixels into coherent regions. Now a staple in many computer vision applications.

Shape Context (the paper): A way to describe shapes that makes them easier for computers to compare and match, useful for object recognition.

High Dynamic Range Imaging (HDR) (the paper): Computational techniques to capture a greater range of light intensities, producing images closer to what the human eye sees.

His methods and approaches paved the way for object recognition, scene understanding, and a plethora of applications that rely on computers understanding what they're looking at. This fueled advances in image search, 3D modeling, and robotics, where the ability to recognize and manipulate objects is key.

The 2000s: Feature Engineering & Models

The first decade of the 2000s saw continued progress in feature-based techniques and object detection:

Pedro Felzenszwalb's Deformable Part Model (DPM) (the paper): This model treated objects as collections of parts, modeling their geometric relationships and excelling in tasks where objects needed to be pinpointed within images.

This period also saw the creation of crucial datasets that would fuel later progress. The PASCAL VOC project provided standardized datasets and evaluation metrics for object recognition, and from 2005 to 2012 it ran challenges that evaluated performance on object class recognition.

But it was the ImageNet dataset, established by Fei-Fei Li, that became a true catalyst for many deep learning and AI achievements. Its massive size, diverse object categories, and role in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) provided the essential data and competitive framework to push the boundaries of machine perception.

In 2012, AlexNet – a CNN architecture introduced by Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton – obliterated the competition at the 2012 ILSVRC. AlexNet showed definitively that bigger neural networks, trained on huge datasets, yielded better results. The revolution in deep learning had begun, but we will dive into ImageNet, AlexNet, and the incredible advancements that followed in the next episode. Stay tuned and share our historical series with your peers.
