Today is a very special edition of the Agentic Workflow series. Until now, we’ve been thoroughly systematizing the rapidly emerging knowledge of — and about — agents and agentic systems. (Editor’s note: the promised Reward Hacking article is still in the pipeline.) But this week, we decided to shake things up a bit and go with a hands-on evaluation.

We’re starting with the hot potato: coding agents. Because writing code without AI? How very late 2024.

The time of writing code from scratch, line-by-line, without an intelligent agent whispering in your ear (or, more accurately, bulldozing a pull request into your repo) is behind us. We’ve moved on. The hype cycle has churned, the dust is beginning to settle, and what we’re left with is a landscape littered with software agents – all promising to completely reshape the engineering workflow. They’re in our IDEs, our CLIs, and some of them are the entire stack.

So we offer you not a sterile benchmark, but a punchy, real-world shakedown of 15 of the most talked-about coding agents on the market as of June 2025.

“There’s an impulse where I want to jam something through this dumb-ass thing just so I don’t have a stupid-face empty screenshot there because it’s so dumb etc – which gives you a glimpse into how using this tool will make you feel, especially considering the potential.”

We tested them head-to-head across four categories – IDE Agents, CLI Agents, Full-Stack Agents, and Hybrid Platforms. Each agent was scored by AI across five core dimensions: Code, Testing, Tooling, Docs, and Polish (25 points total). The AI also judged each agent as a potential hire: would you recommend hiring this developer?

We also included the human part (a very important one!):

How difficult is it for a human to implement?

Does it spark joy?

Each run is also marked “One Shot” or “Two Shot” to show whether the agent succeeded immediately or needed a retry to function properly.

The result is a clear picture of who’s leading, who’s trailing, and which workflows are worth your time right now. It’s also a very emotional journey that we hope you enjoy. Dive in!

In today’s episode, we will cover:

The Test: Non-Expert Empowerment

The Feeling of the Future: Sparks Joy, or Sparks Frustration?

The Output: A Tale of 15 Junior Developers

Recommendations: The Right Tool for the Job

The downloadable PDF with the detailed report

The Test: Non-Expert Empowerment

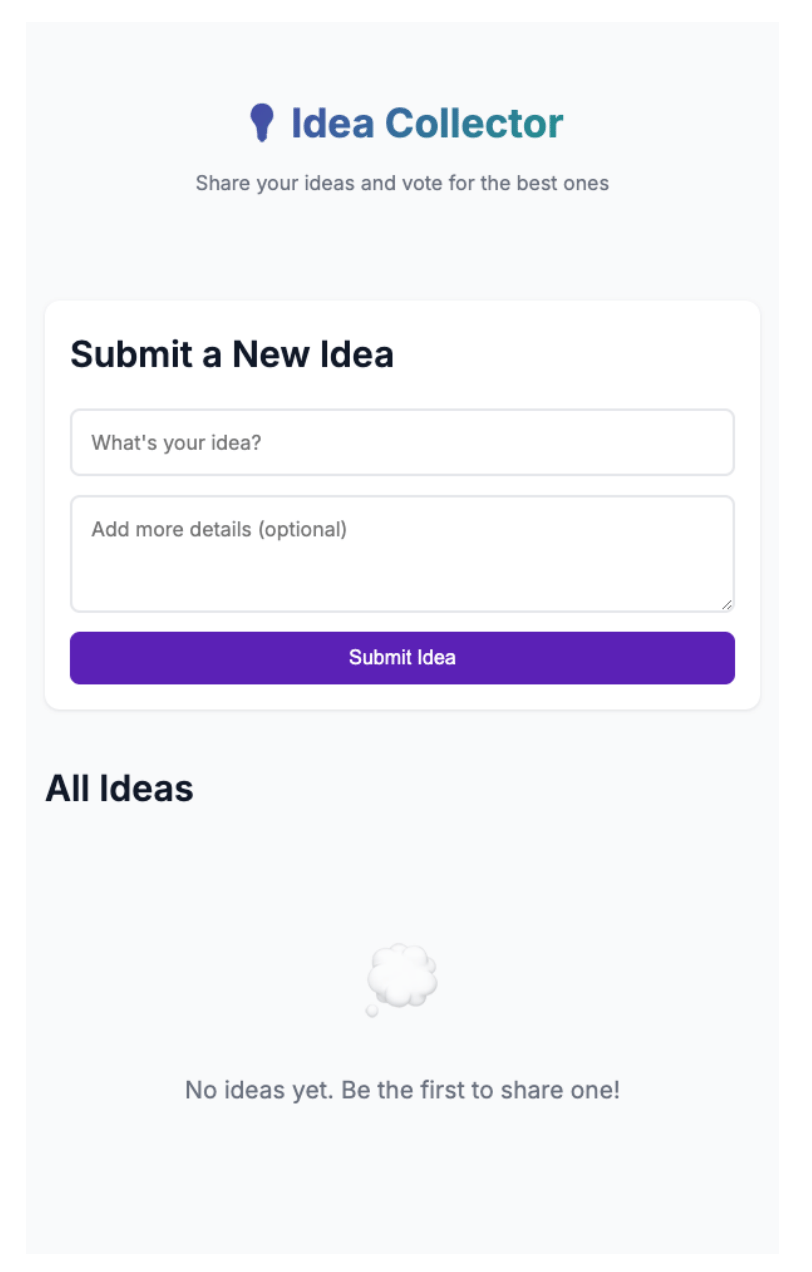

To level the playing field, we didn’t try to be clever. We gave every agent the exact same prompt in a clean, empty repository: a simple Node.js web app for collecting, voting on, and annotating ideas – complete with Dockerization and unit tests. The prompt was straightforward but intentionally a bit “ill-specified, poorly thought through,” just like a real-world first-draft idea.

Build a simple webapp that makes it easy to collect ideas. The user should be able to enter in a new idea, see a list of existing ideas, and be able to "vote” on them which will move them up in the list. The user should also be able to add notes and to the ideas if they want more detail, including attaching files. Build it using node that will be deployed in a docker container with a persistent volume for storage, and make sure that everything has unit tests.

Then, we let them get on with it. We were just blindly YOLOing everything. No hand-holding. No code reviews mid-stream. We wanted to see what would happen. In other words, we were testing for non-expert empowerment. Could these tools take a vague idea and make something real happen, right out of the box?

This is the easiest possible task for an agent – a greenfield project with no legacy code or constraints. If they can’t handle this, they can’t handle much. The full report details every step of the process for each tool, from setup and installation to the final, often-surprising, output. With a lot of zingers!

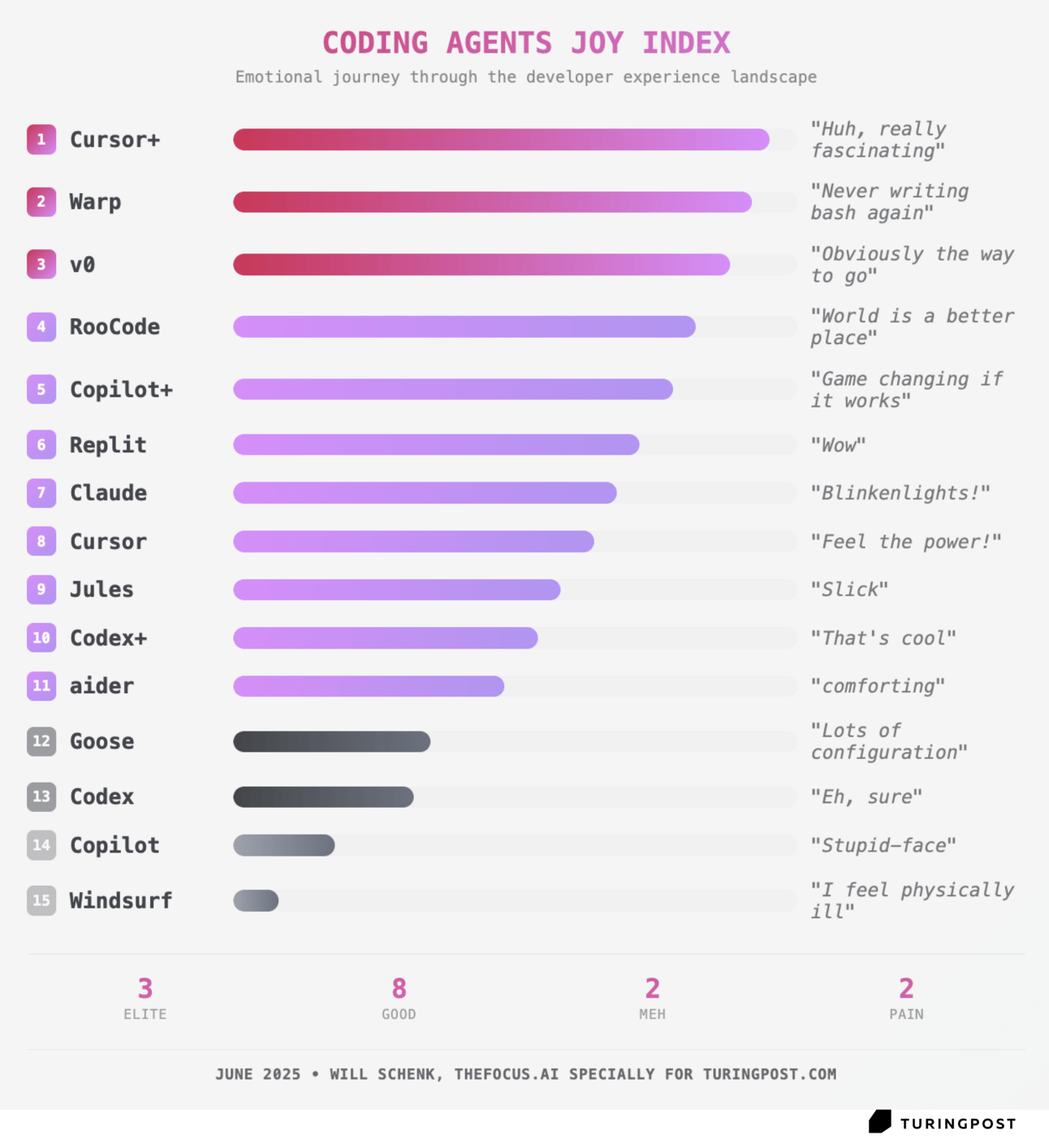

The Feeling of the Future: Sparks Joy, or Sparks Frustration?

A tool is more than just its output. It’s about the developer experience (DX). Does it feel good to use? Does it make you feel powerful? Or does it make you want to throw your laptop out the window? We rated each agent on a "Sparks Joy" metric, and the results were… varied.


Some tools felt "comforting," like the OG agent Aider. It’s a throwback, a reminder of how this all started, even if the git-based workflow is now a bit of a pain. Others delivered pure, unadulterated magic. Claude Code produced a moment of "Blinkenlights!" – that feeling when the lights blink and you realize, "It works! It thinks!" For Cursor+, the feeling was a full "100%" joy, the kind of "huh, that's interesting" moment of discovery that quickly turns into an "off to the races" sprint of creativity.


And then there was the other side of the coin.

The standard Copilot experience, in its current form, was one of "extreme frustration." I was looking for professional terms for “stupid-face” or “poopy-head”. The promise is so immense, the potential so clear, that its stumbles are infuriating. COME ON! It would be so cool if this actually worked! And poor Windsurf… let’s just say my reaction was visceral: "I feel physically ill." Why? The full review contains my therapy session on the matter, but it’s a fascinating case study in how a tool’s presentation can create an immediate, intuitive rejection, even if the underlying tech has merit.

These subjective impressions are critical. They are the friction, the dopamine hits, the paper cuts that define whether a tool gets adopted or abandoned. The full report (it’s 60 pages and is available below) gives you the play-by-play for all 15 agents, so you can see which ones will make your team feel like superheroes and which will just make them sad.

The Output: A Tale of 15 Junior Developers

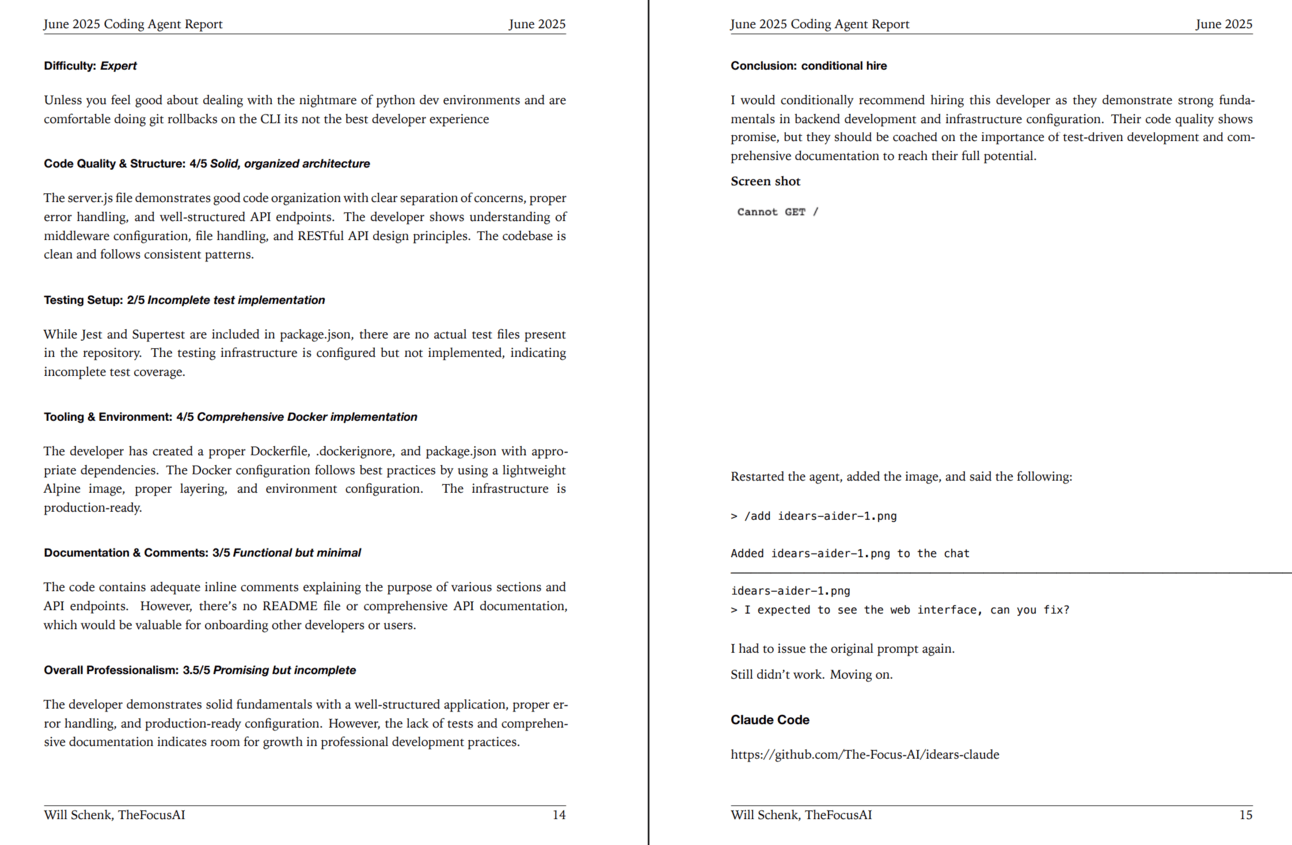

To objectively score the final code, we treated each agent like a junior developer submitting a take-home assignment. We even had an AI – Claude-3.7-Sonnet – perform the initial code review, rating each project on Code Quality, Testing, Tooling, Documentation, and overall Polish.

The high-level summary is this: the gap between the best and the worst is enormous.

The top of the class was a three-way tie between Cursor Background Agent (Cursor+), v0, and Warp, all scoring a stunning 24/25. These tools produced code that was not just functional but professional, well-architected, and production-ready. They didn’t just meet the prompt; they anticipated needs, with thoughtful architecture and robust DevOps. The agent from Cursor, in particular, generated a project with "excellent organization, robust architecture" and "senior-level capabilities rather than junior-level skills."

Warp’s primary focus isn’t even software development – it’s focused on being “a command line power user” – but its excellent use of thinking and planning models behind the scenes makes it a top scorer even among the more narrowly focused tools.

Close behind were Copilot Agent and Jules, both scoring 21/25. They showed immense promise, producing clean, modular, and thoroughly tested applications. On the other end of the spectrum, the base Copilot and Windsurf limped across the finish line with 13/25 each. Their output was "functional but simplistic," with "incomplete test implementation" and "sparse documentation." They met the bare minimum requirements but lacked the polish and robustness you’d need to ship with confidence.
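For a quick sense of how wide that gap is, here is a minimal Python sketch that groups the totals quoted above into tiers. It only includes the seven agents whose scores are named in this article; the other agents and the per-dimension breakdown live in the full report and are not reproduced here. The names `scores` and `group_by_score` are ours, purely for illustration.

```python
from collections import defaultdict

MAX_SCORE = 25  # five dimensions, 25 points total (per the article)

# Totals quoted in this article only; other agents are covered in the full report.
scores = {
    "Cursor Background Agent (Cursor+)": 24,
    "v0": 24,
    "Warp": 24,
    "Copilot Agent": 21,
    "Jules": 21,
    "Copilot": 13,
    "Windsurf": 13,
}


def group_by_score(scores):
    """Group agents that share a total, highest total first (hypothetical helper)."""
    groups = defaultdict(list)
    for agent, total in scores.items():
        groups[total].append(agent)
    return sorted(groups.items(), reverse=True)


for total, agents in group_by_score(scores):
    share = total / MAX_SCORE
    print(f"{total}/{MAX_SCORE} ({share:.0%}): {', '.join(agents)}")
```

Seen this way, the bottom tier earned roughly half the available points while the top tier dropped just one.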

These scores, and the detailed AI-powered critiques behind them, are your cheat sheet. Want to know which agent writes the best tests? Or which one nails Docker configuration every time? The tables and detailed breakdowns in the main document have the answers.

Recommendations: The Right Tool for the Job

So, after all the testing, who wins? It depends on who you are.

For Software Professionals: The undisputed champion is the combination of Cursor + Warp.

This duo gives you the best-in-class spectrum of tools for a serious developer. The workflow we landed on is a game-changer:

