The complexity of human reasoning remains a challenging frontier for large language models (LLMs) to master. LLMs often struggle with tasks that require multiple, sequential reasoning steps, such as arithmetic, commonsense, and symbolic reasoning.

One partial solution to this challenge is chain-of-thought (CoT) prompting, a method proposed by researchers at Google Brain and presented at NeurIPS 2022. It prompts the LLM to generate a series of intermediate reasoning steps before giving its final answer.
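To make the idea concrete, here is a minimal sketch of how a few-shot CoT prompt can be assembled: each exemplar pairs a question with worked reasoning that precedes the final answer, so the model imitates that format. The exemplar is the well-known tennis-ball problem from the original CoT paper; the helper name `build_cot_prompt` and the `Q:`/`A:` layout are illustrative assumptions, not a required API.

```python
# Worked exemplars: (question, intermediate reasoning, final answer).
EXEMPLARS = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11.",
        "11",
    ),
]


def build_cot_prompt(question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt (hypothetical helper)."""
    parts = []
    for q, reasoning, answer in EXEMPLARS:
        # The reasoning comes before the answer, so the model learns to
        # write out intermediate steps before committing to a result.
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # The new question is left open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)
```

Sending `build_cot_prompt("A baker has 3 trays of 12 rolls and sells 20. How many are left?")` to a model should elicit step-by-step arithmetic ending in "The answer is ...".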

CoT prompting improved LLMs' performance on complex reasoning tasks. It also sparked researchers' interest, leading to more sophisticated methods built on the core idea of CoT, which we discuss extensively in our article.

In this short article, we compile useful resources to help you apply CoT methods in your projects:

Methods that require you to write your prompt in a specific way:

Basic: zero-shot prompting, few-shot prompting

Chain-of-thought: original method, self-consistency, zero-shot chain-of-thought → Read our article and use these 7 resources to master prompt engineering
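Of these, self-consistency is the easiest to show in code: instead of trusting a single greedy reasoning chain, you sample several chains at a non-zero temperature and take a majority vote over their final answers. A minimal sketch, assuming the completions have already been sampled and that each ends with "The answer is N." as in the standard CoT exemplar format; `extract_answer` and `self_consistency` are hypothetical helpers.

```python
import re
from collections import Counter
from typing import Optional

# Assumes each sampled chain ends with "The answer is <number>."
ANSWER_RE = re.compile(r"The answer is\s*(-?[\d.,]+)")


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final numeric answer out of one sampled reasoning chain."""
    match = ANSWER_RE.search(completion)
    if match is None:
        return None
    # Drop the sentence-final period and thousands separators.
    return match.group(1).rstrip(".").replace(",", "")


def self_consistency(completions: list[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled chains."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]
```

With three sampled chains that end in 11, 7, and 11, `self_consistency` returns `"11"`: the occasional faulty chain is outvoted by the paths that agree.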

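Automatic Chain-of-Thought (Auto-CoT) automates the construction of CoT demonstrations: it clusters the questions in a dataset, picks a representative question from each cluster, and generates its reasoning chain with the zero-shot CoT prompt "Let's think step by step." → Original code from AWS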
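Auto-CoT builds its demonstrations in two stages: cluster the questions, then take a representative question from each cluster and let the model write its reasoning chain with "Let's think step by step." The sketch below assumes question embeddings are already computed (the toy 2-D vectors stand in for sentence embeddings), uses a plain k-means, and replaces the model with a hypothetical `zero_shot_cot_stub`. The released implementation also filters candidates with simple heuristics, which this sketch omits.

```python
import math


def kmeans(points, k, iters=10):
    """Minimal k-means; the first k points serve as initial centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign every point to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


def zero_shot_cot_stub(question):
    """Hypothetical stand-in for an LLM queried with zero-shot CoT."""
    prompt = f"Q: {question}\nA: Let's think step by step."
    return prompt + " <model-generated reasoning and answer>"


def build_auto_cot_demos(questions, embeddings, k, llm=zero_shot_cot_stub):
    """One demonstration per cluster, written by the model itself."""
    labels, centroids = kmeans(embeddings, k)
    demos = []
    for c in range(k):
        members = [i for i, lab in enumerate(labels) if lab == c]
        if not members:
            continue
        # The representative is the member closest to its cluster centroid.
        rep = min(members, key=lambda i: math.dist(embeddings[i], centroids[c]))
        demos.append(llm(questions[rep]))
    return demos


# Toy data: two arithmetic questions and two commonsense questions.
questions = ["What is 2 + 3?", "What is 4 + 5?", "Is a whale a fish?", "Is a bat a bird?"]
embeddings = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
demos = build_auto_cot_demos(questions, embeddings, k=2)
```

Picking one question per cluster is the point of the design: the demonstrations cover different kinds of questions, so a single wrong auto-generated chain is less likely to mislead the model on every example.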

Program-of-Thoughts Prompting (PoT) has the LLM express its reasoning steps as Python programs and delegates the computation to a Python interpreter, instead of having the LLM compute the result itself → Original code
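The division of labor in PoT can be sketched as follows: the model writes the program, and the interpreter does the arithmetic. Here `GENERATED_PROGRAM` is a hypothetical model output for the tennis-ball question, and storing the result in a variable named `ans` is a convention assumed for this sketch.

```python
# Hypothetical program an LLM might return for: "Roger has 5 tennis balls.
# He buys 2 more cans with 3 balls each. How many balls does he have now?"
GENERATED_PROGRAM = """
balls_initial = 5
cans = 2
balls_per_can = 3
ans = balls_initial + cans * balls_per_can
"""


def run_program_of_thought(program: str, result_var: str = "ans"):
    """Execute model-written code and read back the result variable.

    Emptying __builtins__ limits what the code can call, but exec() is still
    NOT a sandbox; a real system should run generated code in isolation.
    """
    namespace: dict = {}
    exec(program, {"__builtins__": {}}, namespace)
    return namespace[result_var]
```

Because the interpreter, not the model, evaluates `5 + 2 * 3`, arithmetic slips in the model's own text generation cannot corrupt the final number; only the program's logic has to be right.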

Multimodal Chain-of-Thought Reasoning (Multimodal-CoT) incorporates both language (text) and vision (image) modalities instead of working with text alone → Original code from AWS

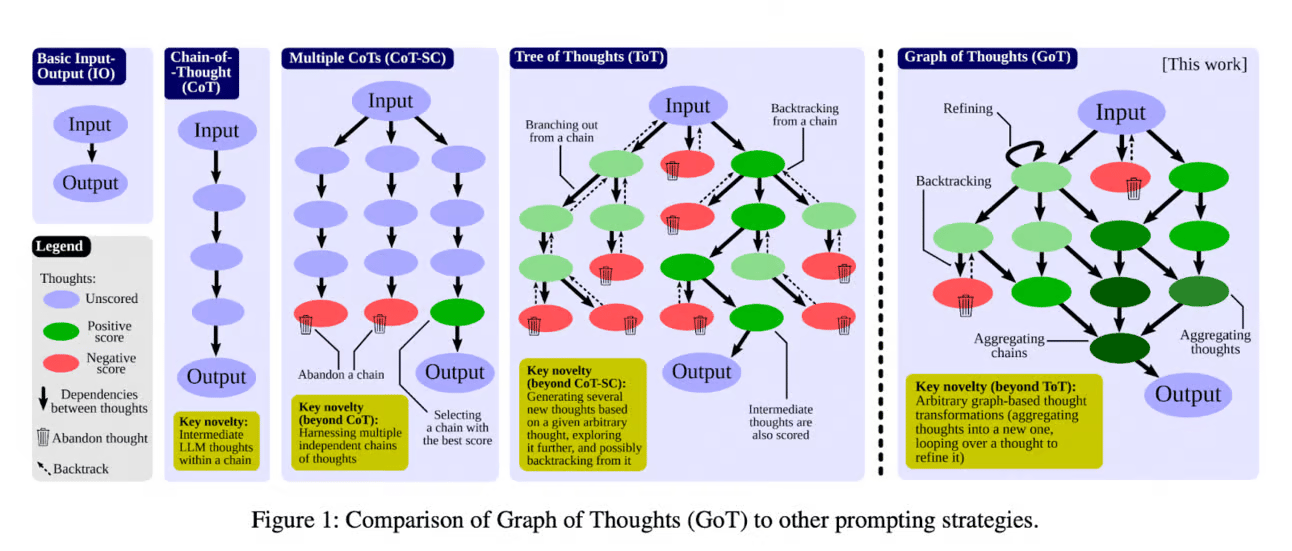

Tree-of-Thoughts (ToT) adopts a more human-like approach to problem-solving by framing each task as a search over a tree of possibilities, where each node represents a partial solution → Original code from the Princeton NLP team
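The tree search can be sketched as a breadth-first search with a beam: at each level, expand every kept partial solution, score the children, and keep only the most promising ones. In ToT both the thought generator and the state evaluator are LLM calls; in this sketch they are deterministic stand-ins (`propose` and `evaluate` are hypothetical) on a toy task: turn a start number into a target using "+3" and "*2".

```python
# Toy task: reach a target number from a start number with these operations.
OPS = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}


def propose(state):
    """Thought generator: extend a partial solution by one step.
    In ToT this is an LLM call; here it is a deterministic stand-in."""
    value, path = state
    return [(op(value), path + [name]) for name, op in OPS.items()]


def evaluate(state, target):
    """State evaluator: higher means more promising.
    ToT asks an LLM to rate states; distance to the target stands in here."""
    return -abs(target - state[0])


def tot_bfs(start, target, beam_width=2, max_depth=4):
    """Breadth-first search over the tree, keeping the best states per level."""
    frontier = [(start, [])]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        for value, path in candidates:
            if value == target:
                return path
        # Prune: keep only the beam_width most promising partial solutions.
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None
```

For example, `tot_bfs(1, 10)` returns `["+3", "+3", "+3"]`. The evaluator is what separates this from blind enumeration: weak branches are pruned early instead of being expanded.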

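Graph-of-Thoughts (GoT) models the reasoning process as a graph: each LLM-generated thought is a vertex, and edges capture dependencies between thoughts, so several thoughts can be combined into one → Original code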
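What a graph adds over a tree is aggregation: a thought can have several parents. The sketch below, similar in spirit to the sorting use case discussed in the GoT paper, splits a list into chunks, "improves" each chunk, and merges the results into one thought with two incoming edges. The `Thought` class and the `split`/`improve`/`aggregate` operations are hypothetical names, and `improve` uses `sorted` as a stand-in for an LLM call.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Thought:
    """A vertex: some content plus edges back to the thoughts it came from."""
    content: list[int]
    parents: list["Thought"] = field(default_factory=list)


def split(thought: Thought, parts: int) -> list[Thought]:
    """One thought -> several children, each holding a chunk of the input."""
    size = max(1, -(-len(thought.content) // parts))  # ceiling division
    return [Thought(thought.content[i:i + size], [thought])
            for i in range(0, len(thought.content), size)]


def improve(thought: Thought) -> Thought:
    """Stand-in for an LLM call that sorts a small sublist."""
    return Thought(sorted(thought.content), [thought])


def aggregate(thoughts: list[Thought]) -> Thought:
    """Several thoughts -> one: the merge step a tree cannot express."""
    merged = list(heapq.merge(*(t.content for t in thoughts)))
    return Thought(merged, list(thoughts))


root = Thought([5, 3, 8, 1, 9, 2])
result = aggregate([improve(t) for t in split(root, 2)])
```

Here `result.content` is `[1, 2, 3, 5, 8, 9]`, and `result.parents` holds both sorted chunks, which is exactly the many-to-one edge structure that distinguishes a graph of thoughts from a tree.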

Algorithm-of-Thoughts (AoT) embeds algorithmic processes within prompts, enabling efficient problem-solving with fewer queries → Code for implementing AoT from Agora AI lab
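The core move in AoT is to put an algorithm's own exploration, including dead ends and backtracking, into the in-context example, so the model can search within a single generation rather than through many separate queries. In this sketch the example trace is produced by running a real depth-first search on a toy task (reach a target using "+3" and "*2"); the task, the trace wording, and the helpers `dfs_trace` and `build_aot_prompt` are all hypothetical.

```python
# Toy task: reach a target number from a start number with these operations.
OPS = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}


def dfs_trace(value, target, depth, path, trace):
    """Depth-first search that records every step, including backtracking."""
    if value == target:
        trace.append(f"{value} equals the target {target}. Done.")
        return path
    if depth == 0:
        trace.append(f"{value} is not {target} and no steps remain; backtrack.")
        return None
    for name, op in OPS.items():
        nxt = op(value)
        trace.append(f"From {value}, try {name} -> {nxt}.")
        found = dfs_trace(nxt, target, depth - 1, path + [name], trace)
        if found is not None:
            return found
    return None


def build_aot_prompt(new_problem: str) -> str:
    """Embed one full algorithmic search trace as the in-context example."""
    trace: list[str] = []
    solution = dfs_trace(1, 16, 3, [], trace)
    example = (
        "Problem: reach 16 from 1 in at most 3 steps using +3 or *2.\n"
        "Search:\n" + "\n".join(trace) + f"\nAnswer: {' '.join(solution)}"
    )
    # The model is expected to continue with its own search trace.
    return f"{example}\n\nProblem: {new_problem}\nSearch:"
```

Calling `build_aot_prompt("reach 14 from 1 in at most 3 steps using +3 or *2.")` yields a prompt whose example walks through three dead ends before finding `+3 *2 *2`, showing the model what a search with backtracking looks like.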

Skeleton-of-Thought (SoT) guides the LLM to first produce a skeleton of the answer and then expand the skeleton's points in parallel rather than sequentially, which reduces generation latency → Original code
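The two stages of SoT can be sketched with a thread pool: one call produces the outline, then every point is expanded concurrently and the expansions are stitched back together in outline order. `fake_llm` is a hypothetical stand-in for a model client, and the prompt wording is an assumption; with a real API, the parallel calls are where the latency savings come from.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; swap in a real client."""
    if prompt.startswith("Outline"):
        return "1. Define CoT\n2. Show an example\n3. Note limitations"
    point = prompt.split(":", 1)[1].strip()
    return f"[2-3 sentences expanding '{point}']"


def skeleton_of_thought(question: str, llm=fake_llm, max_workers: int = 4) -> str:
    # Stage 1: ask for a short numbered skeleton of the answer.
    skeleton = llm(f"Outline a short answer to: {question}")
    points = [line.split(".", 1)[1].strip()
              for line in skeleton.splitlines() if "." in line]
    # Stage 2: expand every point independently and concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        expansions = list(pool.map(lambda p: llm(f"Expand this point: {p}"), points))
    # pool.map preserves input order, so the answer follows the skeleton.
    return "\n".join(f"{p}: {e}" for p, e in zip(points, expansions))
```

The trade-off is that each point is expanded without seeing the others, so SoT suits answers whose parts are fairly independent, such as lists of tips or aspects, better than step-by-step derivations.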