This post is part of the Premium offering: our Premium subscribers can submit their posts to be published on Turing Post. Please upgrade (if you haven’t yet) and send us your stories. Everything from the modern tech world will be considered.

This article is a modified extract of the first chapter of the Center for Curriculum Redesign’s (CCR) book Education for the Age of AI. It was submitted by the book’s author – Charles Fadel, Founder of CCR, Member of AI Experts Group at the OECD.

“The future is already here; it is just not evenly distributed” – William Gibson

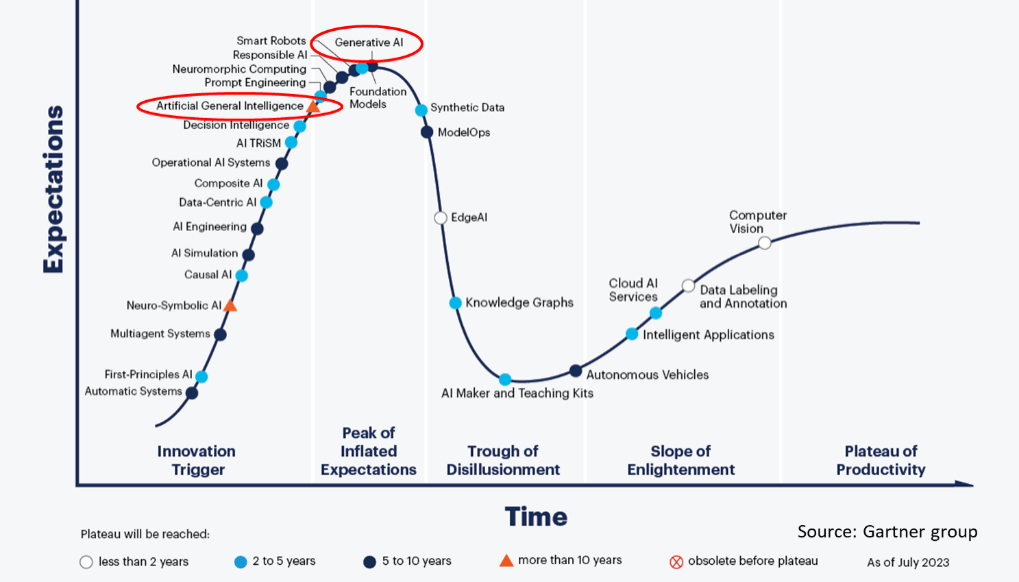

Since the introduction of ChatGPT just over 14 months ago, the world has been abuzz with the capabilities of so-called Generative AI. For long-time participants such as myself, this is indeed a significant step forward. But as usual, and for perhaps the fourth time since the 1960s (!), the hype about “AI supplanting humans” is deafening: “all jobs will disappear”, “chaos will reign”, etc. The scarier and louder voices get the attention, while the sober and realistic view gets ignored. Yet the Gartner Group agrees with our view that AGI and GenAI are at or approaching “peak hype”.

Image Credit: Gartner

This article sheds light on the reality and potency of the present “Capable” phase, and explains why AGI and SuperIntelligence remain a distant threat or opportunity, even in exponential times. Attention should be focused on the present, highly Capable phase, which is full of promise and abilities.

Four levels of AI

Let’s start with some basics: AI can be classified into four distinct levels, each representing a significant step in functionality:

Artificial Narrow Intelligence (ANI), aka Machine Learning/Deep Learning: These systems are designed to excel in performing a specific task or function. Some of today's familiar AI applications are games (Go, Stratego, etc.), to which one can add, for instance, artistic style transfer, protein folding resolution, and drug formulation design.

Artificial Capable Intelligence (ACI) possesses a broader and highly potent range of impactful capabilities:

Global debates often jump straight from ANI to AGI without emphasizing the critical ACI stage in between. The leap between these two categories is enormous, and it is not fully appreciated by education researchers. Several respected figures in the AI world have come out in support of this focus on ACI:

Mustafa Suleyman, co-founder of DeepMind (Google) and of Inflection AI, coined the term ACI for this present phase.

Yann LeCun, Chief AI Scientist at Meta, has argued that humans overestimate the maturity of current AI systems: “Society is more likely to get ‘cat-level’ or ‘dog-level’ AI years before human-level AI”.

Gary Marcus, leading AGI critic and highly respected “voice of reason,” has quipped: “No fantasy about AGI survives contact with the real world.”

Stanford’s Fei-Fei Li, in an interview: “I respect the existential concern. I’m not saying it is silly and we should never worry about it. But, in terms of urgency, I’m more concerned about ameliorating the risks that are here and now.”

Bill Gates has recently admitted that “GPT-5 would not be much better than GPT-4” (and made no mention of AGI).

Even Sam Altman has finally admitted that “There are more breakthroughs required in order to get to AGI.”

The overhype can be explained by three possibilities:

LLM experts are fervently hoping to see their creation do it all, after decades of toiling against the odds. This “proud Dad syndrome” clouds their judgment, and sometimes makes them clutch at straws with unsubstantiated views.

The intense human tendency to anthropomorphize kicks in and makes even experts “over-patternize” (ironically!).

The need to raise enormous amounts of capital biases thinking.

Artificial General Intelligence (AGI) aims to mimic full human cognitive abilities. These systems would be able to understand, learn, and perform any intellectual task that a human being can. As Gary Marcus states, AGI is “a few paradigm shifts away.”

Artificial SuperIntelligence (ASI) imagines an AI that can compose masterful symphonies, devise groundbreaking scientific theories, and exhibit empathy and understanding in ways that surpass all human capabilities.

What is the potency of the Capable phase?

Technology evolves in a succession of “punctuated equilibria”:

Image Credit: CCR

This ACI phase we are in will benefit from a wide array of rapid and impactful improvements, driven by the competitive pressure among Large Language Models (LLMs). The main protagonists at this time are, on the proprietary side, OpenAI/GPT, Anthropic/Claude, and Google/Gemini, and on the open-source side, LLaMA, Falcon, and Mistral.

Let’s discuss these improvements:

Software improvements

Continued growth in LLM tokens (both the number of tokens used for training and the context window size, driven by improved processors and lower memory costs)

Continued algorithmic refinements of the LLMs; of particular note are Genetic Algorithms and work on reducing hallucinations

Ji, Z. et al. (2022). Survey of hallucination in natural language generation.

LLMs with memory, allowing them to remember past interactions, for more relevant and personalized responses

Faster algorithms allowing for longer context windows

LangChain-style frameworks and APIs (with higher rate limits) opening the door for specialized corpora to be linked together

Multimodal models

Multi-agent models

Du, Y., Li, S., et al. (2023). Improving factuality and reasoning in language models through multiagent debate.

Consumer agents

Autonomous agents

Access to massive amounts of sensory data of far greater types, frequencies, and dynamic ranges than humans

Efforts towards smaller/cheaper models

All-in-one frameworks for easier implementation by non-experts

Plethora of increasingly robust developer tools.

Legions of developers (“He with the most developers wins”)

Blue OS Museum. (2023). Steve Ballmer at .NET presentation: Developers.

Verticalization to improve results and lower hallucinations: health care, finance, etc.

Bolton, E. et al. (2022). Stanford CRFM introduces PubMedGPT 2.7B; also Google/PaLM: Matias, Y. & Corrado, G. (2023). Our latest health AI research updates.

Legions of prompt designers and experimenters, in every industry

Prompt optimizing

Yang, C., Wang, X. et al. (2023). Large Language Models as Optimizers;

Zhou, Y., Muresanu, A. I. et al. (2022). Large Language Models Are Human-Level Prompt Engineers.

Latent, yet-to-be-discovered capabilities that can probably be unlocked by fine-tuning and clever Reinforcement Learning reward policies

For example, Clevecode. (2023). “Can you beat a stochastic parrot?”

“More is Different”: emergence, where unsuspected capabilities appear with scale. This view is tempered, however, by the semantic overreach of the word “emergence” where “thresholding” would be more accurate; “More is Different” could simply be “more is (just) more”

Bounded Regret. (2022). More is different for AI;

Ganguli, D., Hernandez, D., et al (2022). Predictability and Surprise in Large Generative Models.

Miller, K. (2023). AI’s ostensible emergent abilities are a mirage. Stanford HAI; Schaeffer, R., Miranda, B., & Koyejo, S. (2023). Are Emergent Abilities of Large Language Models a Mirage? NeurIPS 2023.

Beyond LLM-based systems, Reasoning Systems using Neuro-Symbolic AI

“Hyper-exponential”: AI accelerating AI (“Creating the AI researcher”)

Davidson, T. (2023). What a compute-centric framework says about take-off speeds. Open Philanthropy; Cotra, A. (2023). Language models surprised us. Planned Obsolescence.
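The “emergence” versus “thresholding” point in the list above can be made concrete with a small sketch. It is illustrative only: the function name `exact_match_rate`, the per-token accuracy values, the answer length of 20 tokens, and the assumption that token errors are independent are all hypothetical choices, not figures from the cited papers. The idea, in the spirit of the “mirage” argument, is that a capability improving smoothly per token can look like a sudden jump when scored with an all-or-nothing metric.

```python
# Illustrative sketch: smooth per-token gains vs. an all-or-nothing metric.
# All numbers below are made up for illustration, not measured results.

def exact_match_rate(per_token_accuracy: float, answer_length: int) -> float:
    """Chance that every token of an answer is correct.

    Assumes (hypothetically) that token errors are independent, so the
    probability of a fully correct answer is p ** answer_length.
    """
    return per_token_accuracy ** answer_length


# Hypothetical per-token accuracies for successively larger models.
per_token_accuracies = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]
ANSWER_LENGTH = 20  # assumed number of tokens in a full answer

for p in per_token_accuracies:
    score = exact_match_rate(p, ANSWER_LENGTH)
    print(f"per-token accuracy {p:.2f} -> exact-match rate {score:.4f}")
```

Under these assumptions the per-token accuracy rises steadily, while the exact-match rate stays near zero for most of the range and then climbs steeply. That is the sense in which “more is different” may sometimes be “more is (just) more”, seen through a thresholded metric.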

Hardware improvements

Co-processors at the edge;

Smith, M. (2023). When AI unplugs, all bets are off. IEEE Spectrum.

AI-specific consumer devices;

Moore’s-Law-enabled faster GPUs;

Different processor architectures; etc.

Given all the accelerants highlighted above, one can easily conclude that an LLM in 2030 will be enormously better than GPT-4, even if still incapable of AGI. To some extent, this might be a clash between the scientific and engineering mindsets: engineers can see how LLMs combined with traditional algorithms will be able to outperform humans in significantly more cognitive tasks than any product allows now, just by having better structures and “plumbing” between them.

But there is another topic that is important to discuss:

LLM Limitations

There are profound technical limitations to LLMs:

Severe anterograde amnesia: an LLM is unable to form new relationships or memories beyond its intensive education.

“Hallucinations” (confabulations), which are endemic to LLMs: instances where the model generates incorrect, nonsensical, or unrelated output, despite being given coherent and relevant input.

Lack of a world model, meaning no real understanding of the content it serves, and an inability to update knowledge on its own.

Recency: the freshness of its data depends on when the model was last trained; this is becoming less of an issue with recent models that are staying up-to-date.

According to Meta’s Yann LeCun: “One thing we know is that if future AI systems are built on the same blueprint as current LLMs, they may become highly knowledgeable but they will still be dumb. They will still hallucinate, they will still be difficult to control, and they will still merely regurgitate stuff they've been trained on. More importantly, they will still be unable to reason, unable to invent new things, or plan actions to fulfill objectives. And unless they can be trained from video, they still won't understand the physical world. Future systems will have to use a different architecture capable of understanding the world, capable of reasoning, and capable of planning to satisfy a set of objectives and guardrails.”

Potential saturation affecting the progress of LLMs

Technical Limitations:

Diminishing Returns: LLM developers are already conceding that simply making models bigger is no longer the pathway, compared with multimodality and better datasets.

Running out of useful Datasets (forecast for 2026): The scarcity of high-quality and diverse datasets is a notable limitation for LLMs.

Moore’s Law plateauing: The progression of LLMs is closely tied to advancements in computing power, and those advancements become harder to sustain as transistors reach 1 nm.

The need for subsequent breakthroughs like Reasoning Systems (aka Neuro-Symbolic AI), pairing with Knowledge Graphs, etc.: But the recent $100B meltdown in the autonomous vehicle industry should remind everyone that breakthroughs are not available on demand…

Other Limitations: Management/ideological; Legal; Cost; Policy decisions; Geopolitical events; Environmental impact.

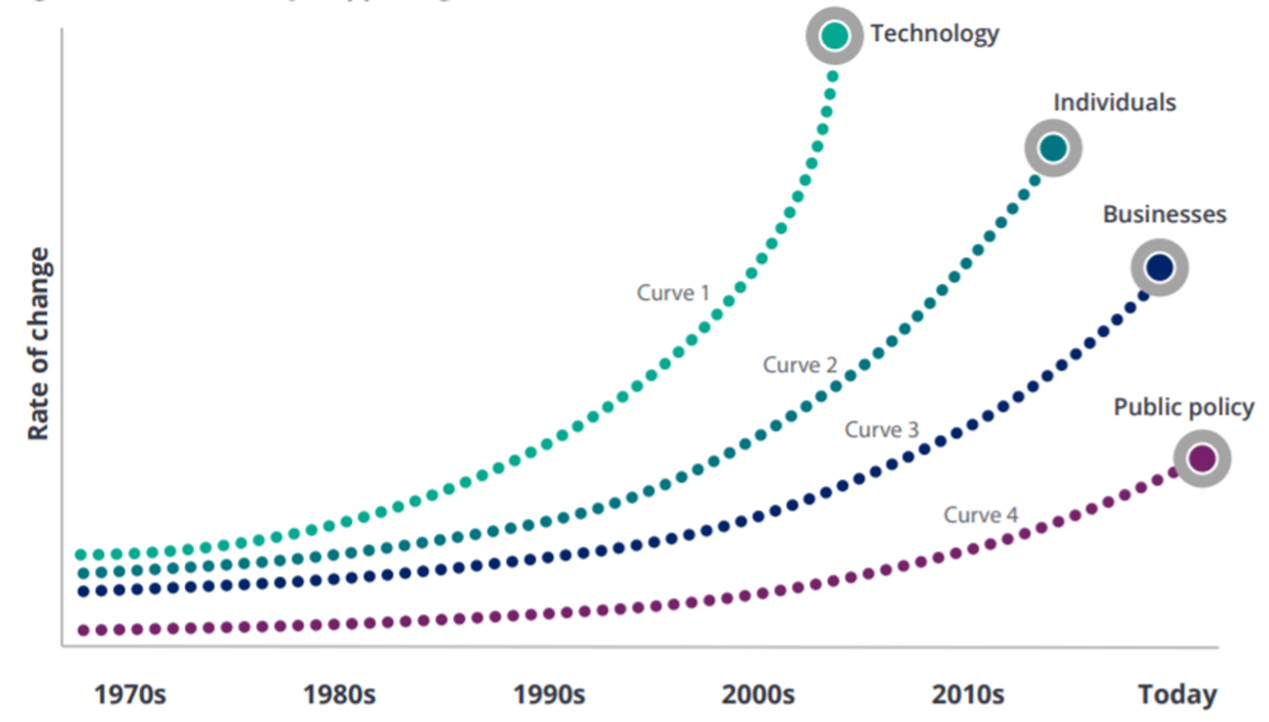

CCR remains concerned that, despite all these limitations, AI’s progress will stay significantly faster than humans’ ability to absorb it, per this diagram:

Image Credit: Rewriting the rules for the digital age 2017 Deloitte Global Human Capital Trends

Conclusion

There is also good reason to be optimistic about AI and other technological advancements. Technology has the potential to improve human work and life and to augment our capacity to address global challenges. Vigilance is necessary to manage and reduce the risks associated with technology like AI; nonetheless, perceptions fueled by fear hinder progress and innovation. Embracing technology with a balance of caution and openness will allow society to leverage its benefits while preparing for its challenges.