Diving into the foundational models that once reshaped machine learning is extremely exciting: we can trace what was invented and how it influenced today’s AI. One of these approaches is BERT (Bidirectional Encoder Representations from Transformers), developed by researchers at Google AI in 2018 and baked into Google Search in 2019.

This neural network architecture introduced a groundbreaking shift in natural language processing (NLP). BERT was the first to pre-train a deep Transformer in a truly bidirectional way. At its core, BERT is a pre-trained language model (LM) that learns deep representations of text by considering both left and right context simultaneously in all its layers (in other words, you guessed it, bidirectionally). This lets the model develop a richer understanding of word meaning and context than was possible with earlier unidirectional LMs. BERT also did much to popularize the “pre-train, then fine-tune” paradigm for Transformers at scale. And it’s not just about the concept: BERT’s benchmark results firmly established it as a new standard in NLP.

Around 2020 the spotlight swung to giant autoregressive models (GPT‑3, PaLM, Llama, Gemini). They could generate text, not just label it, and the hype machine followed. In headlines and conference buzz, BERT looked passé – even though its dozens of spin‑offs (RoBERTa, DeBERTa, etc.) kept doing the heavy lifting for classification, ranking, and retrieval.

Lately, BERT has been enjoying quite a revival driven by pragmatism. Research from 2024‑25 (you might have seen ModernBERT and NeoBERT) is rediscovering its sweet spot: cheap, fast, and good enough when you don’t need a 70‑billion‑parameter chatbot. BERT’s specific capabilities have also sparked a wave of novel models that understand context more deeply. So if you’ve been hearing a lot about BERT recently, it’s time to refresh your knowledge and discuss what is so special about BERT and what it is really capable of. We’ll also look at different models built on the BERT concept and explore how BERT is applied to retrieval, using the freshest (today’s!) open-source release, ConstBERT from Pinecone, as an example. We also asked one of the researchers behind it a couple of questions! It’s a fascinating journey through the foundations and current innovations, so let’s go.

In today’s episode, we will cover:

The main idea behind BERT: Overcoming the limitations of GPT

How does BERT work?

How effective is BERT, actually?

Cool family of BERT-based models

BERT and Retrieval – ConstBERT

General limitations

Conclusion

Sources and further reading

The main idea behind BERT: Overcoming the limitations of GPT

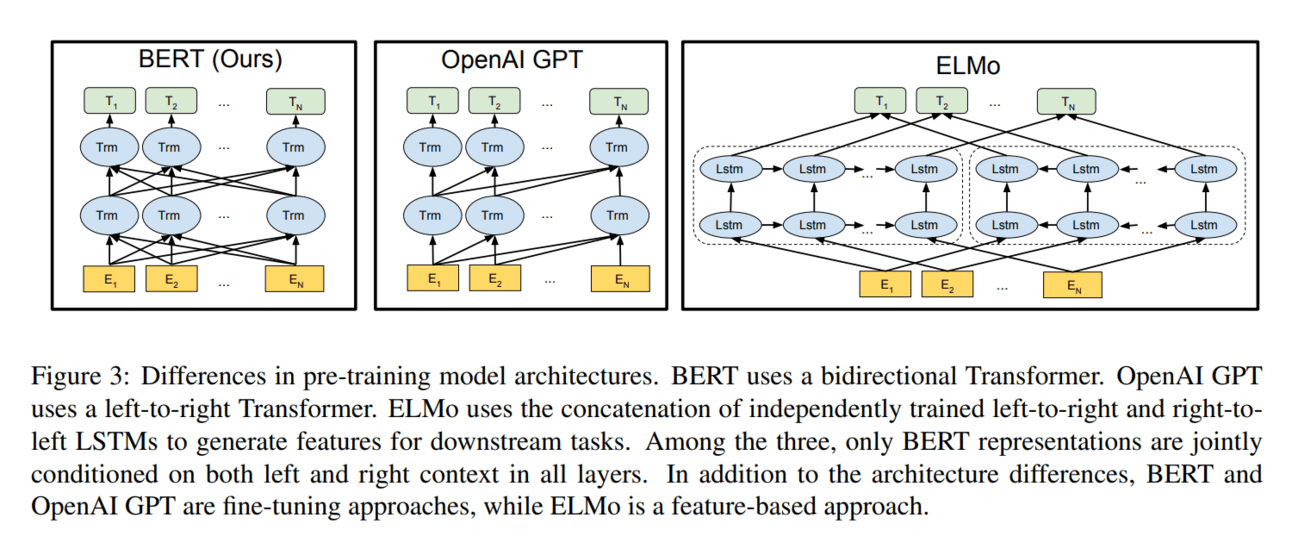

Some basics: Generative Pre-trained Transformer (GPT) is a language model based on the Transformer architecture, which excels at handling sequences of data. Its main focus is generating text: writing essays, making summaries and classifications, generating code, or continuing a conversation. It predicts the next word in a sentence given the previous words. This means that GPT models process text in only one direction, from left to right (other unidirectional LMs may instead run right to left). While this works well for text generation, this unidirectional nature limits the model’s ability to understand the full context of a word, because it only considers the words that come before it and ignores the ones that come after. But what if we need to look a little into the future?
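To make the difference concrete, here is a toy sketch in plain Python (our own illustration, not code from any GPT or BERT implementation). It builds the 0/1 attention mask that decides which positions a token may look at: a causal, GPT-style mask lets token i see only positions up to i, while a BERT-style bidirectional mask lets every token see the whole sentence. The function names and the example sentence are hypothetical; real models apply such a mask inside every self-attention layer, typically by setting the disallowed attention scores to −∞ before the softmax.

```python
# Toy illustration: which positions may each token attend to?
# mask[i][j] == 1 means the token at position i can "see" the token at position j.

def attention_mask(n: int, causal: bool) -> list[list[int]]:
    """Return an n x n 0/1 attention mask.

    causal=True  -> GPT-style, left-to-right: token i sees positions 0..i only.
    causal=False -> BERT-style, bidirectional: token i sees every position.
    """
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]


def visible_context(tokens: list[str], i: int, causal: bool) -> list[str]:
    """Return the tokens that position i is allowed to attend to."""
    row = attention_mask(len(tokens), causal)[i]
    return [tok for tok, allowed in zip(tokens, row) if allowed]


if __name__ == "__main__":
    tokens = ["the", "bank", "of", "the", "river", "flooded"]
    i = tokens.index("bank")  # position 1
    print(visible_context(tokens, i, causal=True))   # ['the', 'bank']
    print(visible_context(tokens, i, causal=False))  # ['the', 'bank', 'of', 'the', 'river', 'flooded']
```

With the causal mask, the representation of “bank” is built without ever seeing “river”, which is exactly the word needed to tell a riverbank from a financial institution.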

Some tasks, like question answering, indeed require a deeper understanding of the relationships between words or sentences. That’s why back in 2018 (these 7 years feel like a century!), researchers from Google AI Language developed a bidirectional model called Bidirectional Encoder Representations from Transformers, or simply BERT. This model looks at both the left and right context of a word at the same time, which helps it better understand the meaning of sentences. It is pre-trained on large amounts of unlabeled text, which makes it very flexible. Another notable thing is that BERT’s pre-trained language representations can be easily adapted to various NLP tasks: you add just one task-specific output layer (a “head”) and fine-tune the whole model.

Image Credit: BERT original paper

Each task, such as question answering or sentence classification, has its own fine-tuned version of the model, but all versions are initialized from the same pre-trained model.
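The “one pre-trained model, many fine-tuned copies” idea can be sketched in a few lines of plain Python. This is a hypothetical toy, not BERT’s actual code: the “checkpoint” is a tiny dict of made-up encoder weights, the encoder itself is never run, and `cls_vector` simply stands in for the final hidden state of the special [CLS] token, which the BERT paper feeds into a single linear layer plus softmax for classification tasks. Each task gets a deep copy of the shared encoder weights and its own freshly initialized head, so fine-tuning one task never changes the others.

```python
import copy
import math
import random

# Hypothetical stand-in for a pre-trained checkpoint. A real BERT-Base checkpoint
# holds ~110M parameters; here it is a tiny dict of made-up encoder weights.
PRETRAINED_ENCODER = {"layer_0": [0.1, -0.2, 0.3], "layer_1": [0.05, 0.4, -0.1]}
HIDDEN_SIZE = 3


def make_task_model(pretrained: dict, num_labels: int, seed: int = 0) -> dict:
    """Initialize a task-specific model from the shared pre-trained weights.

    The encoder is deep-copied (so fine-tuning one task never touches another),
    and a new, randomly initialized output layer ("head") is added on top.
    """
    rng = random.Random(seed)
    head = [[rng.uniform(-0.1, 0.1) for _ in range(HIDDEN_SIZE)] for _ in range(num_labels)]
    return {"encoder": copy.deepcopy(pretrained), "head": head, "bias": [0.0] * num_labels}


def classify(model: dict, cls_vector: list[float]) -> list[float]:
    """Apply the head: one linear layer over the [CLS] vector, then softmax."""
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(model["head"], model["bias"])]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    sentiment = make_task_model(PRETRAINED_ENCODER, num_labels=2, seed=1)  # e.g. positive / negative
    nli = make_task_model(PRETRAINED_ENCODER, num_labels=3, seed=2)  # e.g. entailment / neutral / contradiction

    # Pretend one fine-tuning step nudged the sentiment model's encoder weights...
    sentiment["encoder"]["layer_0"][0] -= 0.01
    # ...the shared checkpoint and the NLI copy are unaffected.
    print(PRETRAINED_ENCODER["layer_0"][0], nli["encoder"]["layer_0"][0])  # 0.1 0.1
    print(classify(nli, [0.2, -0.5, 0.7]))  # three class probabilities that sum to 1
```

In practice, libraries handle this bookkeeping for you; for example, Hugging Face Transformers’ `AutoModelForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)` loads the pre-trained encoder and attaches a new, randomly initialized classification head.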

Here is how this idea works on the technical side, and what allows BERT to achieve state-of-the-art (SOTA) performance.

How does BERT work?
