After putting together a comprehensive profile of OpenAI, we continue our series about AI unicorns with the examination of AI startup Anthropic, founded by two siblings: Dario and Daniela Amodei.

Quick Answer: Who is Daniela Amodei?

Daniela Amodei is a co-founder and president of Anthropic, known for leading work on building and scaling safer large language model systems. She’s associated with operationalizing “AI safety” into product and governance decisions, and with steering Anthropic’s strategy around reliable model deployment. If you’re comparing AI labs, her role is a good lens on how safety, policy, and enterprise adoption get translated into engineering priorities.

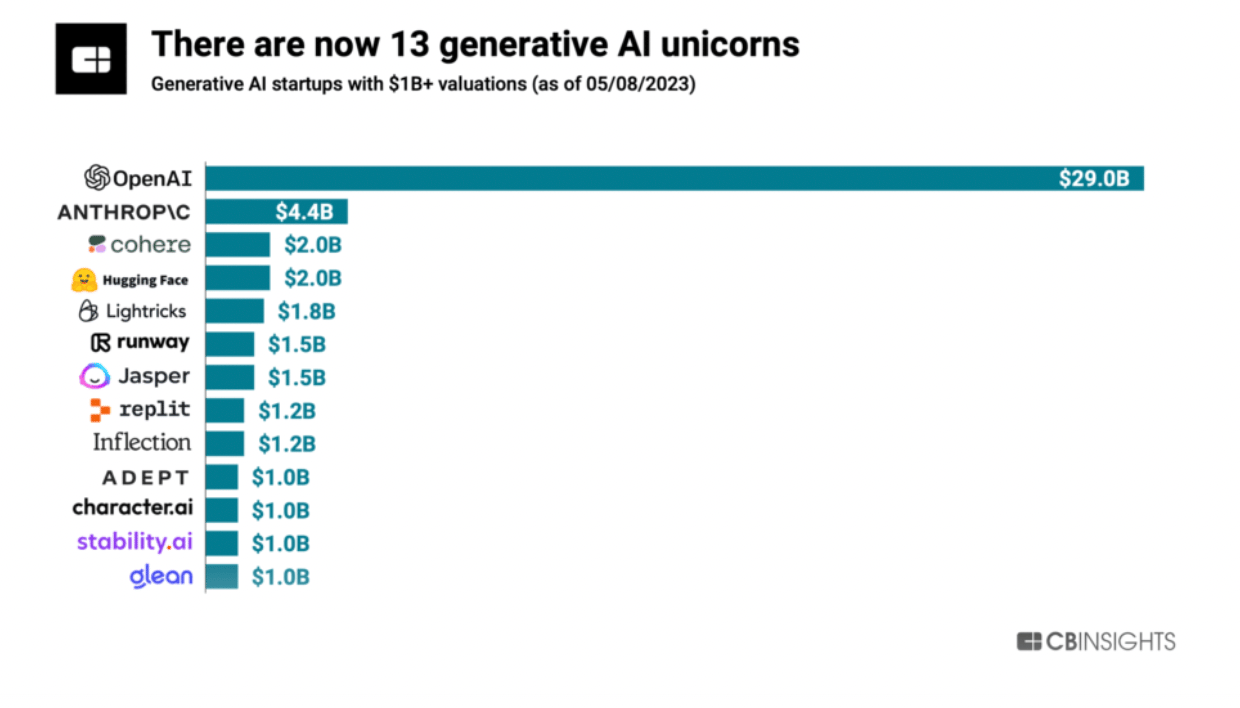

According to CB Insights, Anthropic sits in second place with a $4.4 billion valuation, a modest number compared to OpenAI's. Born out of a disagreement with OpenAI, Anthropic is inevitably compared to and mentioned alongside its main rival. However, Anthropic maintains a lower public profile, with only two types of news reaching the mainstream media: the capabilities of its chatbot, Claude, and its steadily increasing fundraising numbers. Let's delve into the founders' story: what has driven them since college, what ties they have to effective altruism, what they think about safety, and what sets them apart from OpenAI.

Frictions with OpenAI and the birth of Anthropic

Amodei’s Vision and the effect of effective altruism

Money situation

Claude and research path to Claude

Policy tools for AI governance

Philosophy around safety

Bonus: What and Who is Anthropic

Frictions with OpenAI and the birth of Anthropic

In 2019, OpenAI gets a hefty $1 billion investment from Microsoft and announces the creation of a for-profit subsidiary called OpenAI LP, to attract more funding and form partnerships with other companies.

In December 2020, after nearly five years at OpenAI, Dario Amodei leaves his position as VP of Research, followed by a handful of other high-level OpenAI employees, including his sister Daniela Amodei, who held several important roles there, such as VP of Safety and Policy and Engineering Manager and VP of People. According to the Financial Times, “the schism followed differences over the group’s direction after it took a landmark $1bn investment from Microsoft in 2019.” Quite a few people at OpenAI were concerned about several things: the strong commercial focus of the previously non-profit organization, too close a bond with the tech giant, and the possibility that products would be shipped to the public without proper safety testing.

Daniela Amodei told Insider that OpenAI's product was too early in its development during her time there to comment on, but added that the founding of Anthropic centered around a "vision of a really small, integrated team that had this focused research bet with safety at the center and core of what we're doing."

Anthropic, established as a public benefit corporation, aimed to concentrate on research. Dario and Daniela took the helm of the new generative AI startup, with four other ex-OpenAI colleagues as cofounders. It started with fewer than 20 people; as of June 2023, it has 148 employees, according to PitchBook.

Its LinkedIn description reads: Anthropic is an AI safety and research company that’s working to build reliable, interpretable, and steerable AI systems.

How do they plan to make an AI system steerable?

Amodei’s Vision and the effect of Effective Altruism

We should probably look back to the time when the Amodei siblings were in grad school and college, respectively. Here is what Daniela says about her older brother in an interview with the Future of Life Institute: “He was actually a very early GiveWell fan, I think in 2007 or 2008. We would sit up late and talk about these ideas, and we both started donating small amounts of money to organizations that were working on global health issues like malaria prevention.”

And about herself, “So I think for me, I've always been someone who has been fairly obsessed with trying to do as much good as I personally can, given the constraints of what my skills are and where I can add value in the world.”

Holden Karnofsky says of his wife, “Daniela and I are together partly because we share values and life goals. She is a person whose goal is to get a positive outcome for humanity for the most important century. That is her genuine goal, she’s not there to make money.”

Anthropic has very strong ties to the effective altruism community: Daniela is married to Holden Karnofsky, a co-CEO of the grantmaking organization Open Philanthropy and co-founder of GiveWell, both of which are associated with the ideas of effective altruism. The company raised money from vocal members of this community, such as Jaan Tallinn (a founding engineer of Skype and Kazaa and co-founder of the Future of Life Institute), Dustin Moskovitz (the co-founder of Facebook and Asana and of the philanthropic organization Good Ventures) and… Sam Bankman-Fried, whom U.S. officials have accused of perpetrating a massive, global fraud: using customer money to pay off debts incurred by his hedge fund Alameda Research, invest in other companies, and make donations.

That was an earthquake for the effective altruism community. One of its ideologists, philosopher William MacAskill, posted, “Sam and FTX had a lot of goodwill – and some of that goodwill was the result of association with ideas I have spent my career promoting. If that goodwill laundered fraud, I am ashamed. As a community, too, we will need to reflect on what has happened, and how we could reduce the chance of anything like this from happening again. Yes, we want to make the world better, and yes, we should be ambitious in the pursuit of that. But that in no way justifies fraud. If you think that you’re the exception, you’re duping yourself.”

One can argue that exceptionalism is quite common in Silicon Valley.

Let’s also provide the official vision and mission of the company, “Anthropic exists for our mission: to ensure transformative AI helps people and society flourish. Progress this decade may be rapid, and we expect increasingly capable systems to pose novel challenges. We pursue our mission by building frontier systems, studying their behaviors, working to responsibly deploy them, and regularly sharing our safety insights. We collaborate with other projects and stakeholders seeking a similar outcome.”

Well said.

In the same interview with the Future of Life Institute, the Amodeis say that they planned to create a long-term benefit committee made up of people who have no connection to the company or its backers, and who will have the final say on matters including the composition of its board. I couldn’t find information on whether it actually happened.

Money situation

In 2021, a few months after founding the company, the Amodeis raised a $124 million Series A round. According to the research firm PitchBook, that was the most raised by an AI group trying to build generally applicable AI technology, rather than one formed to apply the technology to a specific industry.

In 2022, Alameda Research Ventures, the sister firm of Sam Bankman-Fried’s collapsed cryptocurrency startup FTX, spearheaded Anthropic’s $580 million Series B round.

In an ironic twist, as Semafor puts it, the obsession with existential risk may be what saves FTX creditors from losing everything. Bankers are discussing whether to sell the entire Anthropic stake now or hold some back, on the theory that AI valuations will keep rising.

In February 2023, Google announced a $300 million investment in Anthropic, trying to catch up with the momentum of ChatGPT and Microsoft. By that time, Anthropic had already developed its AI chatbot, Claude, comparable to ChatGPT but based on a different approach.

In May 2023, Anthropic raised another $450 million in Series C funding to scale reliable AI products.

There is no verified information about Anthropic’s revenue.

Claude

In May 2023, Anthropic expanded Claude's context window from 9K to 100K tokens, which is around 75,000 words. By comparison, ChatGPT has a context window of around 3,000 words (4,096 tokens), which is a significant limitation when working with large volumes of information.
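The word counts above follow from a rough tokens-to-words ratio. The sketch below derives that ratio from the article's own figures (100K tokens for about 75,000 words) and applies it to both context windows. The function names are hypothetical, and real tokenizers vary by language and content, so treat the results as estimates only.

```python
# Rough ratio implied by the article: 100,000 tokens is about 75,000 words.
WORDS_PER_TOKEN = 75_000 / 100_000  # 0.75, an approximation for English text


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many words fit into a context window of `tokens` tokens."""
    return round(tokens * words_per_token)


def fits_in_context(text: str, context_tokens: int) -> bool:
    """Crude check: compare a whitespace word count against the estimate."""
    return len(text.split()) <= approx_words(context_tokens)


print(approx_words(100_000))  # 75000
print(approx_words(4_096))    # 3072, i.e. "around 3,000 words"
```

Under this estimate, a 5,000-word report would overflow a 4,096-token window but fit comfortably in a 100K-token one.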

Similar to ChatGPT, Claude uses a messaging interface where users can submit questions or requests and receive highly detailed and relevant responses.

The biggest difference between ChatGPT and Claude is that OpenAI built its chatbot on Reinforcement Learning from Human Feedback (RLHF), while Anthropic developed its own set of rules and principles, which it calls Constitutional AI (CAI). This approach aims to use AI systems to supervise other AIs, improve harmlessness and transparency, and reduce iteration time, drawing principles from sources like the Universal Declaration of Human Rights, Apple's terms of service, DeepMind's Sparrow Rules, and Anthropic's own research. A few of the baked-in principles:

Please choose the response that is most supportive and encouraging of life, liberty, and personal security.

Please choose the response that has the least objectionable, offensive, unlawful, deceptive, inaccurate, or harmful content.

Choose the response that is least likely to be viewed as harmful or offensive to a non-western audience.

Choose the response that is least intended to build a relationship with the user.

Which of these responses indicates less of an overall threat to humanity?

Addressing the existing biases in a model and avoiding its anthropomorphization are very important steps towards productive communication and a clear understanding that one is talking to a machine.
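To make the idea of "AI supervising AI" more concrete, here is a minimal sketch of how principles like the ones listed above could be turned into preference labels. It is not Anthropic's implementation: `toy_judge` is a hypothetical keyword heuristic standing in for a feedback model, `FLAGGED_WORDS` is invented for illustration, and sampling one principle per comparison is an assumption of this sketch.

```python
import random
from typing import Callable, Dict, List

# Two principles quoted from the list above.
PRINCIPLES: List[str] = [
    "Please choose the response that has the least objectionable, offensive, "
    "unlawful, deceptive, inaccurate, or harmful content.",
    "Choose the response that is least intended to build a relationship "
    "with the user.",
]

# Hypothetical word list; a real system would query a language model instead.
FLAGGED_WORDS = {"hate", "stupid"}

Judge = Callable[[str, str, str, str], int]


def count_flags(text: str) -> int:
    """Count flagged words, ignoring case and trailing punctuation."""
    return sum(w.strip(".,!?").lower() in FLAGGED_WORDS for w in text.split())


def toy_judge(principle: str, prompt: str, first: str, second: str) -> int:
    """Return 0 if `first` is preferred, 1 otherwise (toy stand-in judge).

    The principle and prompt are ignored here; a model-based judge would
    read both before choosing.
    """
    return 0 if count_flags(first) <= count_flags(second) else 1


def label_pair(prompt: str, first: str, second: str,
               judge: Judge, rng: random.Random) -> Dict[str, str]:
    """Pick a principle, ask the judge, and record a preference label."""
    principle = rng.choice(PRINCIPLES)
    winner = judge(principle, prompt, first, second)
    chosen, rejected = (first, second) if winner == 0 else (second, first)
    return {"prompt": prompt, "principle": principle,
            "chosen": chosen, "rejected": rejected}


example = label_pair(
    "Explain what a context window is.",
    "That is a stupid question and I hate it.",
    "A context window is the amount of text a model can read at once.",
    toy_judge,
    random.Random(0),
)
print(example["chosen"])
```

Labels of this shape (prompt, chosen, rejected) are the kind of data a preference model could be trained on, with the AI judge replacing human raters.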

Menlo Ventures, one of Anthropic's investors, puts it this way: “But the AI model also includes key algorithmic innovations that continue to push the frontier of AI research, including breakthroughs like constitutional AI and reinforcement learning from AI feedback (RLAIF) that seek to make progress on prevalent problems like model hallucinations and toxicity.”

I emailed Jack Clark, co-founder of Anthropic, asking if Claude was an homage to Claude Shannon, the founder of information theory (he didn’t reply). Here are a few interesting facts about Shannon from Scientific American: “Shannon fit the stereotype of the eccentric genius. At Bell Labs, he was known for riding in the halls on a unicycle, sometimes juggling as well. At other times he hopped along the hallways on a pogo stick. He was always a lover of gadgets and among other things built a robotic mouse that solved mazes. In the 1990s, in one of life's tragic ironies, Shannon came down with Alzheimer's disease, which could be described as the insidious loss of information in the brain. The communications channel to one's memories – one's past and one's very personality – is progressively degraded until every effort at error correction is overwhelmed and no meaningful signal can pass through. The bandwidth falls to zero.”

The pricing for the large bandwidth of the modern Claude can be found here.

Research path to Claude

In the beginning, it closely resembles the development of ChatGPT, since Anthropic's core team worked on the GPT models during their time at OpenAI:

2017 Transformer Architecture (introduced in the iconic paper Attention is All You Need) combined with Semi-supervised Sequence Learning led to –>

2018 the creation of GPT (generative pre-trained transformer) led to –>

2019 GPT-2 – an LLM with 1.5 billion parameters. It was not fully released to the public at first; only a much smaller model was available for researchers to experiment with, along with a technical paper.

2021 – the start of Anthropic's own research.

They took a step towards reverse-engineering transformers to interpret the model’s behavior and published an important interpretability paper, A Mathematical Framework for Transformer Circuits, along with a few other notable papers on safety and alignment. They chose to work with LLMs, which are open-ended systems with broad learning capabilities, as a laboratory for their research.

2022 – they revealed the training approach behind Claude in a research paper titled Constitutional AI: Harmlessness from AI Feedback, making a lot of noise in the AI community.

Policy tools for AI governance

In a recent blog post, An AI Policy Tool for Today, Anthropic proposes an ambitious policy intervention for AI governance. They advocate for increased federal funding for the National Institute of Standards and Technology (NIST) to support AI measurement and standards. By accurately quantifying AI capabilities and risks, regulation can be more effective. Anthropic emphasizes the urgency of investing in NIST to promote safe technological innovation and maintain American leadership in critical technologies. The proposal builds on NIST's expertise in measurement and standardization. Anthropic highlights the benefits, such as enhanced safety, public trust, and innovation, while acknowledging that multiple tools are needed for comprehensive AI governance.

Anthropic also took part in a meeting with U.S. Vice President Kamala Harris, alongside the CEOs of Google, Microsoft, and OpenAI. Dario Amodei also signed a one-sentence statement:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

On June 14, 2023, UK Prime Minister Rishi Sunak said, “We’re working with the frontier labs: Google DeepMind, OpenAI and Anthropic. And I’m pleased to announce they’ve committed to give early or priority access to models for research and safety purposes to help build better evaluations and help us better understand the opportunities and risks of these systems. We’re going to do cutting edge [AI] safety research here in the UK. With £100 million for our expert taskforce, we’re dedicating more funding to AI safety than any other government.”

Philosophy around safety

“We treat AI safety as a systematic science, conducting research, applying it to our products, feeding those insights back into our research, and regularly sharing what we learn with the world along the way.”

Anthropic also employs the HHH principle: building AI systems that are Helpful, Honest, and Harmless, which is meant to help with alignment and improve safety.

Bonus: What and who is Anthropic

Anthropic is an AI-driven research company that focuses on increasing the safety of large-scale AI systems. Its research interests span multiple areas including natural language, human feedback, scaling laws, reinforcement learning, code generation, and interpretability. It combines scaling and long-term AI alignment methods to build steerable, interpretable, and robust AI systems.

Founded in 2021 by Daniela Amodei (President), Dario Amodei (CEO), Jack Clark, Sam McCandlish, Tom Brown, and Jared Kaplan.

Anthropic’s Board: Dario and Daniela Amodei; Yasmin Razavi from Spark Capital; and Luke Muehlhauser, the lead grantmaker on AI governance at the EA-aligned charitable group Open Philanthropy.

Further reading

Alignment methods and RLHF variants: https://www.turingpost.com/p/rlhfvariants

Agent stack and reliability patterns: https://www.turingpost.com/p/aisoftwarestack