Images, text, audio, video, motor signals – AI models now work with everything: generating, analyzing, and editing complex content like videos and simulations. Yet even mixing just two types of data remains a technical challenge for both models and developers.

Our reality is inherently multimodal, so our models must be as well. The race among top AI systems like Gemini, ChatGPT, Claude, and DeepSeek to become all-rounders – coupled with surging global interest in embodied AI – only confirms this trajectory.

Multimodal fusion is the key to building AI that actually understands our world – not just text or images in isolation, but how they work together, the way humans naturally perceive reality.

So today we'll explore how data mixing actually happens, what challenges emerge along the way, and what strategies developers typically employ to merge modalities. We'll also dive into a more detailed data fusion workflow through the lens of a fascinating new approach from Meta AI and KAUST called Mixture of States (MoS). This method mixes data at the state vector level within each layer using a learnable router.
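To make "mixing at the state vector level with a learnable router" concrete before the basics, here is a minimal, generic sketch of router-weighted state mixing. It is not the Meta/KAUST implementation. Several details are our own assumptions: a dot-product router, top-k sparsity, softmax gates, and candidate states taken from the layers of another modality's network. The names `route_and_mix` and `router_weights` are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_and_mix(token_state, layer_states, router_weights, k=2):
    """Mix one token's state with candidate states from several source layers.

    token_state:    the receiving token's current state (length d)
    layer_states:   one candidate state vector per source layer (each length d),
                    assumed here to come from another modality's network
    router_weights: hypothetical learnable router parameters, one row per
                    source layer; the score for layer l is
                    dot(router_weights[l], token_state)
    k:              keep only the k highest-scoring layers (sparse routing)
    """
    # Token-dependent score for every candidate layer
    scores = [sum(w * s for w, s in zip(row, token_state)) for row in router_weights]
    # Sparse selection: indices of the top-k layers
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Normalize the selected scores into mixing weights
    gates = softmax([scores[i] for i in top])
    # Weighted sum of the selected layers' states
    d = len(token_state)
    mixed = [0.0] * d
    for g, i in zip(gates, top):
        for j in range(d):
            mixed[j] += g * layer_states[i][j]
    return mixed, top, gates


# Toy example: three source layers, 2-dimensional states
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
router = [[0.1, 0.0], [0.0, 2.0], [0.5, 1.0]]
mixed, chosen, gates = route_and_mix([1.0, 1.0], states, router, k=2)
```

Because the scores depend on the token's own state, different tokens can pull from different layers. That per-token routing is the property the toy router is meant to illustrate.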

Now, let's start from the basics!

In today’s episode, we will cover:

Multimodal fusion and alignment

Main types of multimodal fusion

When does the data combination happen?

How does the multimodal fusion happen?

How to build a multimodal model? The key architectures

What’s new in multimodal fusion? Mixture of States (MoS)

How does MoS work?

Implementation and performance boost

Not without limitations

Conclusion / Why is multimodal fusion important?

Sources and further reading

Multimodal fusion and alignment

Multimodal data fusion typically serves two main goals:

Modalities complement each other – for example, an image can add visual context to a text description, enabling more accurate predictions.

Some modalities lack data – when one type of data is scarce, information from another modality can help fill the gap through knowledge transfer.

There are two fundamental processes critical to multimodal data quality that remain challenging in multimodal learning:

Alignment ensures that data from different sources are synchronized and correctly matched – for example, a caption describes the right image, or a video frame corresponds to the correct text description. Alignment helps maximize the utility of whatever data is available. Once achieved, the next step is →

Fusion combines the aligned pieces of information into a unified representation that captures more detail than any single modality alone and supports a single, complete prediction. The fusion design determines when and how the data is mixed.
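The two steps can be illustrated with a toy sketch. It assumes the caption and image embeddings already live in a shared space, for example from a CLIP-like pair of encoders. The vectors below are made up. Alignment is done here by cosine-similarity matching, and fusion by plain concatenation, which is the simplest possible choice rather than what production models use.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def align(caption_embs, image_embs):
    """Alignment: match each caption to the image it is most similar to."""
    return [
        max(range(len(image_embs)), key=lambda j: cosine(c, image_embs[j]))
        for c in caption_embs
    ]


def fuse(caption_emb, image_emb):
    """Fusion (simplest form): concatenate a matched pair into one joint vector."""
    return caption_emb + image_emb


# Hypothetical toy embeddings, assumed to be in a shared space already
captions = [[1.0, 0.0], [0.0, 1.0]]
images = [[0.1, 0.9], [0.9, 0.1]]
matches = align(captions, images)             # caption i -> best image index
joint = fuse(captions[0], images[matches[0]])
```

Fusing before aligning would concatenate a caption with the wrong image. This is why alignment comes first.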

When alignment and fusion work together, they unlock powerful multimodal applications: image-text matching, video understanding, multimodal QA, cross-modal retrieval (like using text to find an image), combining facial expressions with voice tone for emotion recognition, and more.

Today we'll focus on the more complex part – fusion – which enables richer interaction between modalities. So let's explore when and how it occurs.

Main types of multimodal fusion

When does the data combination happen?

This classification pinpoints the timing:

Early fusion merges modalities at the feature extraction stage, right at the start. It excels at capturing interactions early but can be sensitive to noise.

Late fusion combines predictions from separate models at the end and proves especially useful when some modalities may be missing.

Hybrid fusion blends early and late fusion approaches.
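Here is a minimal sketch of the difference between early and late fusion, using hypothetical text and audio feature vectors and hand-set linear weights in place of trained models. Early fusion concatenates features and runs one joint model, so it needs every modality present. Late fusion averages per-modality predictions and can simply skip a missing one.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def early_fusion(text_feat, audio_feat, weights, bias):
    # Merge features first, then run a single joint model on the combined vector
    joint = text_feat + audio_feat
    return sigmoid(linear(weights, bias, joint))


def late_fusion(predictions):
    # Each modality was scored by its own model; average whatever is available
    available = [p for p in predictions if p is not None]
    if not available:
        raise ValueError("no modality available")
    return sum(available) / len(available)


text_feat = [1.0, 0.0]
audio_feat = [0.0, 1.0]
p_early = early_fusion(text_feat, audio_feat, weights=[0.5, 0.5, 0.5, 0.5], bias=-0.5)
p_late_full = late_fusion([0.8, 0.4])      # both modalities present
p_late_missing = late_fusion([0.8, None])  # audio missing: still works
```

A hybrid scheme would combine both ideas, for example by feeding an early-fused score into the late average as one more vote.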

In reality, these categories blur together: fusion often happens at several levels at once, which is why the question of how fusion occurs matters more than when.

How does the multimodal fusion happen?

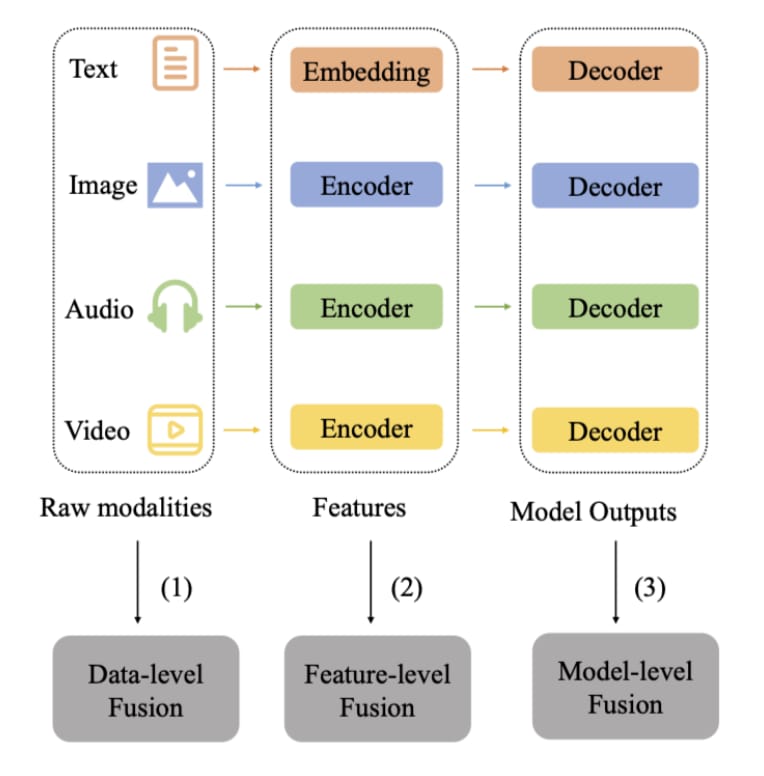

A common architecture for AI models is the encoder-decoder, in which an encoder extracts meaningful features from the input and a decoder transforms them into the desired output. Multimodal fusion can happen at different stages of this pipeline:

Data-level fusion: Raw data from all modalities is combined before being fed into a shared encoder. For example, an autonomous driving system can combine raw camera images with LiDAR point clouds before encoding them to improve accuracy.

Feature-level fusion: First, each modality is encoded separately by its own specialized encoder. Then features from different layers (early, middle, late) are fused together, producing a mix of fine-grained and abstract information.

Model-level fusion: Each modality has its own complete model, and fusion happens only at the output level, after decoding.

Image Credit: Multimodal Alignment and Fusion: A Survey
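Feature-level fusion is the least obvious of the three, so here is a toy sketch of it. Each modality gets its own encoder, modeled as a stack of layer functions, and every intermediate output is kept. Outputs from chosen layers, such as the earliest and the last, are then concatenated. The encoders, layer functions, and the `picks` parameter are hypothetical stand-ins for real specialized encoders.

```python
def encode(x, layers):
    """Run x through a stack of layers and keep every intermediate output."""
    outputs = []
    h = x
    for layer in layers:
        h = layer(h)
        outputs.append(h)
    return outputs


def feature_level_fusion(img_outputs, txt_outputs, picks=(0, -1)):
    """Concatenate features taken from the chosen layers of both encoders.

    picks selects which layers to fuse, e.g. 0 = earliest, -1 = last.
    """
    fused = []
    for i in picks:
        fused += img_outputs[i] + txt_outputs[i]
    return fused


# Hypothetical toy encoders: the first layer keeps per-element detail,
# the last layer compresses the input into one more abstract number.
image_layers = [lambda v: [2.0 * a for a in v], lambda v: [sum(v)]]
text_layers = [lambda v: [a + 1.0 for a in v], lambda v: [max(v)]]

img_out = encode([1.0, 2.0], image_layers)  # [[2.0, 4.0], [6.0]]
txt_out = encode([0.0, 3.0], text_layers)   # [[1.0, 4.0], [4.0]]
fused = feature_level_fusion(img_out, txt_out)
```

The fused vector holds both the fine-grained first-layer features and the compressed last-layer ones. Data-level fusion would instead concatenate the raw inputs before a single shared encoder. Model-level fusion would combine only the final outputs of two complete models.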

In practice, models need a few extra tricks to mix modalities properly, so there are broader, more advanced fusion methods. These include:

Attention-based fusion: Uses attention mechanisms (queries, keys, and values) to control which parts of each modality the model should focus on. The model dynamically weighs and selects the most relevant cross-modal features.

Here, each modality is encoded separately, and a connector or adapter (an MLP, an attention module, or a Q-Former) maps the encodings into a shared space. A Transformer or LLM then fuses them, typically with multi-head attention.

Some of the models that use this type of fusion: CLIP, ViLT, Qwen-VL, VILA, BLIP.

Image Credit: Multimodal Alignment and Fusion: A Survey
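The connector-plus-attention pattern can be sketched minimally as follows. It makes several simplifying assumptions. The connector is a single hypothetical linear projection (`W_proj`), whereas real models may use an MLP or a Q-Former. Attention is single-head, text tokens act as queries, and the projected image tokens act as both keys and values, with no learned Q/K/V matrices. All vectors are toy values.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [x / s for x in e]


def connector(image_feats, W_proj):
    # Hypothetical linear adapter: maps each image feature into the text embedding space
    return [matvec(W_proj, f) for f in image_feats]


def cross_attention(text_tokens, image_tokens):
    """Single-head scaled dot-product attention: text queries, image keys/values."""
    d = len(text_tokens[0])
    out = []
    for q in text_tokens:
        # How relevant each image token is to this text token
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in image_tokens]
        w = softmax(scores)
        # Weighted sum of image tokens = visual context for this text token
        attended = [sum(wi * v[j] for wi, v in zip(w, image_tokens)) for j in range(d)]
        # Residual connection: keep the token's own content plus the visual context
        out.append([t + a for t, a in zip(q, attended)])
    return out


image_feats = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # 3-dim image features
W_proj = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]       # projects 3 -> 2 dims
image_tokens = connector(image_feats, W_proj)     # [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[10.0, 0.0]]
fused_tokens = cross_attention(text_tokens, image_tokens)
```

The text token attends almost entirely to the first image token, which is the one it resembles. This is the "dynamically weighs and selects the most relevant cross-modal features" behavior in its smallest form. Multi-head attention runs several such heads in parallel, each with its own learned projections.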

Graphical fusion: Represents multimodal data as graphs.

The main reason many choose graphs is that they capture structured relationships between modalities:
