⚡️ From our partners: Lightning-fast cloud storage that won't break the bank

Fast doesn’t have to be expensive. Cloud Native Qumulo on AWS proves it. Cut costs by up to 80% with all the benefits of on-prem storage, optimized for the Cloud. Whether migrating existing workloads to AWS or building new data and AI-driven services, Qumulo delivers:

More than 1.6TB/s of sustained throughput to keep your databases humming.

Virtually unlimited performance and scalability.

Production-ready deployment in as little as 6 minutes.

This Week in Turing Post:

Wednesday / AI 101 series: Models you HAVE to know about

Friday / Special: Best books of 2025 – your reading list for the holidays

Our news digest is always free. Click on the partner’s link to support us or Upgrade to receive our deep dives in full, directly into your inbox.

From Getting Used to AI to Making AI Useful

Back in June, I wrote something that felt true then – and feels even truer now: We thought 2025 would be the year of agents, but it turned out to be the year we got used to AI.

“It's the year the magic fades into the background, becoming so essential that we forget it was ever magic at all. It's the difference between remembering the screeching song of your dial-up modem and just checking your Wi-Fi speed.”

And if 2025 was the year we got used to AI, then 2026 is the year we demand that it actually work – and learn how to verify that it does.

The Infrastructure Pivot: From More to Better

Mark Russinovich, CTO at Microsoft Azure, sees this shift playing out at the most fundamental level – infrastructure. "The industry shifts from chasing raw GPU totals to maximizing effective throughput and the quality of intelligence produced per watt and per dollar," he explains. “AI growth isn’t about just building more datacenters. It’s about making computing power count. The next wave of AI infrastructure will pack computing power densely across distributed, regionally linked supercomputers. These globally flexible systems act like air traffic control for AI workloads, dynamically routing jobs so nothing sits idle. If one slows, another moves instantly. This federated, policy-aware, cost-per-token-optimized architecture becomes the sustainable, adaptable blueprint that will power AI innovation at global scale.”

It's an elegant vision. And one that computer scientist Denis Giffeler views with characteristic European pragmatism: "2026 is shaping up to be a fine year for artificial intelligence – and a strenuous one for the electricity meters." While major players will keep unveiling models that "fundamentally redefine everything – at least until next Tuesday," governments (led by the European Union) will ensure that progress never escapes unchecked. Meanwhile, China will demonstrate "what happens when you spend less time braking and more time building."

The Reliability Reckoning

But beneath the infrastructure debates and regulatory theater, something more fundamental is shifting. We've gotten used to AI. Now we need to be able to verify it, and to trust it.

Shanea Leven, CEO of Empromptu, puts it bluntly: "2023-2025 were about demos. 2026 will be about production accuracy. Enterprises will stop paying for 'magic moments' and start demanding systems that operate with 95%+ reliability."

Ollie Carmichael, VP of Product at Adarga, sees this playing out specifically in high-stakes sectors: "The honeymoon phase for 'black box' AI in defence, finance, healthcare will end. These industries will realize that while neural networks offer generalized power, they cannot mathematically guarantee the precision required for audit-ready decisions." He predicts a pivot toward pragmatic neuro-symbolic AI – not necessarily at the algorithmic level, but as an architectural shift. The gap between what Model-as-a-Service vendors promise and what enterprise production requires will fuel the rise of the Forward Deployed Engineer, the "critical human element needed to engineer reliability into probabilistic systems."

Christopher Wendling, co-founder of IntelliTrade and creator of the L7A forecasting system, goes deeper still, predicting that 2026 will be the year we finally confront the limits of backprop – and discover the power of Utilitarian Intelligence: “backpropagation is structurally incapable of delivering reliability in the domains that need it most. We will see a shift away from the belief that scaling neural networks will solve everything, and toward a deeper understanding of why these models hallucinate, drift, and fail out-of-distribution.

The watershed moment will come from something as old and simple as the XOR example: a problem that is trivial for humans but exposes the brittle, geometry-blind nature of backprop. As people revisit this forgotten lesson, they will recognize the broader pattern — that gradient-fitted models do not discover structure. They approximate surfaces. And approximation breaks the moment the data distribution shifts even slightly.” In high-stakes applications, he argues, "hallucination is not a curiosity; it is disqualifying." The future isn't bigger models. "It's truer models – models that preserve structure, respect uncertainty, and survive the noise of the real world."
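The XOR lesson Wendling mentions is classically told with a single-layer perceptron. No straight line separates XOR's positive cases (0,1) and (1,0) from its negative cases (0,0) and (1,1), so a linear threshold unit can never classify all four correctly, even though it learns AND without trouble. The Python sketch below illustrates only that linear-separability point, under assumed settings: zero initialization, a learning rate of 1, and 100 epochs. It is not Wendling's L7A system, and it does not by itself demonstrate his broader claims about backprop.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[int, int], int]

AND_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(w: List[int], b: int, x: Tuple[int, int]) -> int:
    """Linear threshold unit: output 1 if w.x + b > 0, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data: List[Sample], epochs: int = 100) -> Tuple[List[int], int, float]:
    """Perceptron learning rule (learning rate 1, integer weights).

    Returns the final weights, the bias, and the best training accuracy seen after any epoch.
    """
    w, b, best = [0, 0], 0, 0.0
    for _ in range(epochs):
        for x, y in data:
            err = y - predict(w, b, x)  # -1, 0 or +1
            w[0] += err * x[0]
            w[1] += err * x[1]
            b += err
        acc = sum(predict(w, b, x) == y for x, y in data) / len(data)
        best = max(best, acc)
    return w, b, best


if __name__ == "__main__":
    print("AND best accuracy:", train_perceptron(AND_DATA)[2])  # reaches 1.0
    print("XOR best accuracy:", train_perceptron(XOR_DATA)[2])  # always below 1.0
```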

The Experience Transformation: Beyond the Chat Box

Remember those hours we spent arguing about prompt engineering versus context engineering? About whether we were vibe-coding or co-coding? In 2026, those debates become obsolete.

Leven also predicts a fundamental UX shift: "AI stops asking you to repeat yourself and starts anticipating what you need. Persistent memory, anticipatory UI, individualized workflows – AI becomes the OS layer of all software. The chat box dies in 2026."

We asked in June whether we were collaborators or callable tools for our AI – whether we needed a term like "bot-y call" for when AI summons us. The answer emerging in 2026: neither. We become what Leven calls "AI Operators." In her view, the most valuable role won't be prompt engineering or ML engineering but "orchestrating AI-native systems toward business outcomes. This becomes the new product manager."

And behind the scenes, Tyler Suard, author of "Enterprise RAG: Scaling Retrieval Augmented Generation", sees infrastructure evolving to match: an "Internet of Agents" or "Agentic Internet" emerging alongside the first AI-designed drug reaching market – twin symbols of AI moving from demonstration to production deployment.

The Platform Wars Begin

Lee Caswell from Nutanix frames what comes next as a decade-long battle: "We're entering a decade of platform wars, where success won't hinge on individual features but on the strength and flexibility of the entire platform." He predicts businesses will move "from AI-first to AI-smart," re-evaluating where AI actually makes sense – just as they did with cloud adoption.

The winners, he argues, will be those embracing openness: "choice of containers, choice of LLMs, and choice of GPUs." And perhaps most tellingly, Shanea Leven predicts "on-prem AI makes a comeback – not because of security theater, but because companies are done with unpredictable behavior and want actual control."

Tolga Evren, AI technologist from KoçDigital, offers the most radical prediction: "In 2026, 'exe' will die in the software world. Instead, 'language' will be the new exe."

Big Changes in Coding

My two favorites come from Steve Yegge, ex-Sourcegraph.

#1: “If you’re still using an IDE by next summer, you’re not a good engineer anymore.” Oof.

#2: “Next year will be the year of technical people coding.” I totally agree!

If you want to know what he and other experts recommend to stay relevant, you need to → watch this

What It All Means

The Adoption Gap Becomes Real

In the world of people reading this newsletter, AI is already here, and there is no way back. Still, many people have not tried it, and some openly promise they never will, like people holding on to their horses when machines arrived. History keeps repeating this pattern: people refused telephones, computers, the internet. Not because they were wrong in principle, but because they underestimated the compounding advantage.

Often they are people whose deeper fear is that their work is meaningless and easily replaceable.

In 2026, that gap becomes structural, and the divide will be more visible than ever before.

Verification Over Belief

But the winning mindset is not blind adoption; disciplined use wins. That means building verification into how AI is used. AI stops being magical (still very impressive, though!) and starts being consequential. Knowing how to check outputs, constrain systems, and detect failure becomes a core skill, not a niche concern. AI literacy becomes a baseline skill (though who will teach it, and where, is a separate question).

From Tool Users to System Owners

Human roles shift accordingly. The advantage goes to people who treat AI as a system to be shaped, supervised, and corrected — not a shortcut to answers. The future belongs to Operators: people who decide where AI belongs, where it does not, and how it is allowed to act.

What we will be following most closely for breakthroughs:

AI science, mundane tasks in robotics, new architectures, new types of education.

Whatever 2026 brings, there will always be room for uncertainty and surprise. I want to end today’s editorial with Denis Giffeler’s words: “2026 will be magnificent. Especially for those with enough humor to endure it.”

Curated – Context Engineering


We are reading

How to Teach LLMs to Reason for 50 Cents by Devansh (AI Made Simple)

John Schulman on dead ends, scaling RL, and building research institutions – an interview by Cursor’s CEO

News from the usual suspects

AI2 lays it bare with Olmo 3

AI2 unveils Olmo 3, a fully open suite of language models at 7B and 32B scale, designed for long-context reasoning, coding, function calling, and more. The crown jewel, Olmo 3.1 Think 32B, claims the title of the strongest openly available thinking model. Every checkpoint, dataset, and dependency is included – true open source, no half-measures.

Anthropic blooms in alignment

Anthropic releases Bloom, an open-source tool that auto-generates behavioral evaluations for AI models – turning what was once painstaking alignment work into a matter of configuration. Bloom crafts and judges hundreds of scenarios targeting specific traits like sycophancy or sabotage. With strong correlation to human judgment and model comparison benchmarks, Bloom positions itself as alignment’s newest workhorse – scalable, precise, and refreshingly automated.

Plus → Anthropic ships updates to Claude Code:

A lot of news from OpenAI

OpenAI opens the app floodgates

ChatGPT is now officially an app platform. Developers can submit apps for review and distribution inside ChatGPT, with a built-in directory to showcase them. Think mini tools that turn chats into actions – ordering groceries, editing slides, or house hunting – all inside the interface. App discovery, monetization, and surfacing via @ mentions or context cues hint at OpenAI’s bigger play: turning ChatGPT into an operating system for everything.

Codex 5.2 gets serious

OpenAI’s GPT‑5.2-Codex lands with agentic finesse, handling large-scale software engineering and cybersecurity tasks like a pro. With long-context memory, tighter tool use, and native Windows support, it can now refactor, migrate, and defend codebases with precision. It even helped uncover new React vulnerabilities.

OpenAI monitors the minds of machines

OpenAI introduces a rigorous framework for evaluating “chain-of-thought monitorability” – a fancy way of asking: Can we understand what our AIs are thinking before they act? The answer: yes, but not without nuance. Longer reasoning helps, bigger models muddle things, and “thinking out loud” may become a critical safety layer as AI scales.

OpenAI Image is finally faster

OpenAI just rolled out GPT Image 1.5, an upgraded image model now embedded in ChatGPT. Expect faster generation (up to 4x), smarter edits that preserve likeness and lighting, and a dedicated image creation hub for those struck by visual inspiration. API users get it too, now at 20% less per image.

Qwen paints in layers

Another image model, this time from Qwen: Qwen-Image-Layered drops the flat-canvas act – literally. Available via ModelScope and Hugging Face, it dissects images into editable RGBA layers, enabling object-level edits like repositioning, recoloring, or deleting without mangling the rest. It’s Photoshop’s spirit, deep-learned and recursive.

Google

FunctionGemma calls the shots – locally

Google’s FunctionGemma brings structured function calling to the edge: it is a 270M-parameter Gemma model tuned for reliable, on-device task execution. Whether parsing calendar requests or powering games offline, it bridges chat and code with surgical precision. Fine-tuning boosts accuracy by 27 points, and yes – it runs on a Jetson Nano (that is, it’s lightweight).

Gemini 3 Flash: frontier intelligence built for speed

Google released Gemini 3 Flash, a fast, cost-efficient LLM with frontier-level reasoning. It scored 90.4% on GPQA Diamond and 81.2% on MMMU Pro, rivaling larger models. Flash is 3× faster than Gemini 2.5 Pro and uses 30% fewer tokens on average. Priced at $0.50 per 1M input tokens and $3 per 1M output tokens, it also scores 78% on SWE-bench Verified and excels at multimodal tasks, powering real-time app prototyping, video analysis, and intelligent in-game assistants.
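At the quoted list prices, per-request cost is simple arithmetic. This Python sketch assumes flat per-token pricing with no caching, batch, or tiered rates, any of which real billing may apply. The token counts in the example are hypothetical.

```python
# Quoted Gemini 3 Flash list prices, in USD per 1M tokens (assumed flat).
INPUT_USD_PER_M = 0.50
OUTPUT_USD_PER_M = 3.00


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of one request under flat per-million-token pricing."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000


# Hypothetical request: a 200k-token prompt that produces a 10k-token answer.
print(f"${request_cost_usd(200_000, 10_000):.4f}")  # $0.1300
```

In this example, the prompt accounts for $0.10 and the answer for $0.03. Each output token costs six times as much as an input token, though, so the balance shifts quickly for generation-heavy workloads.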

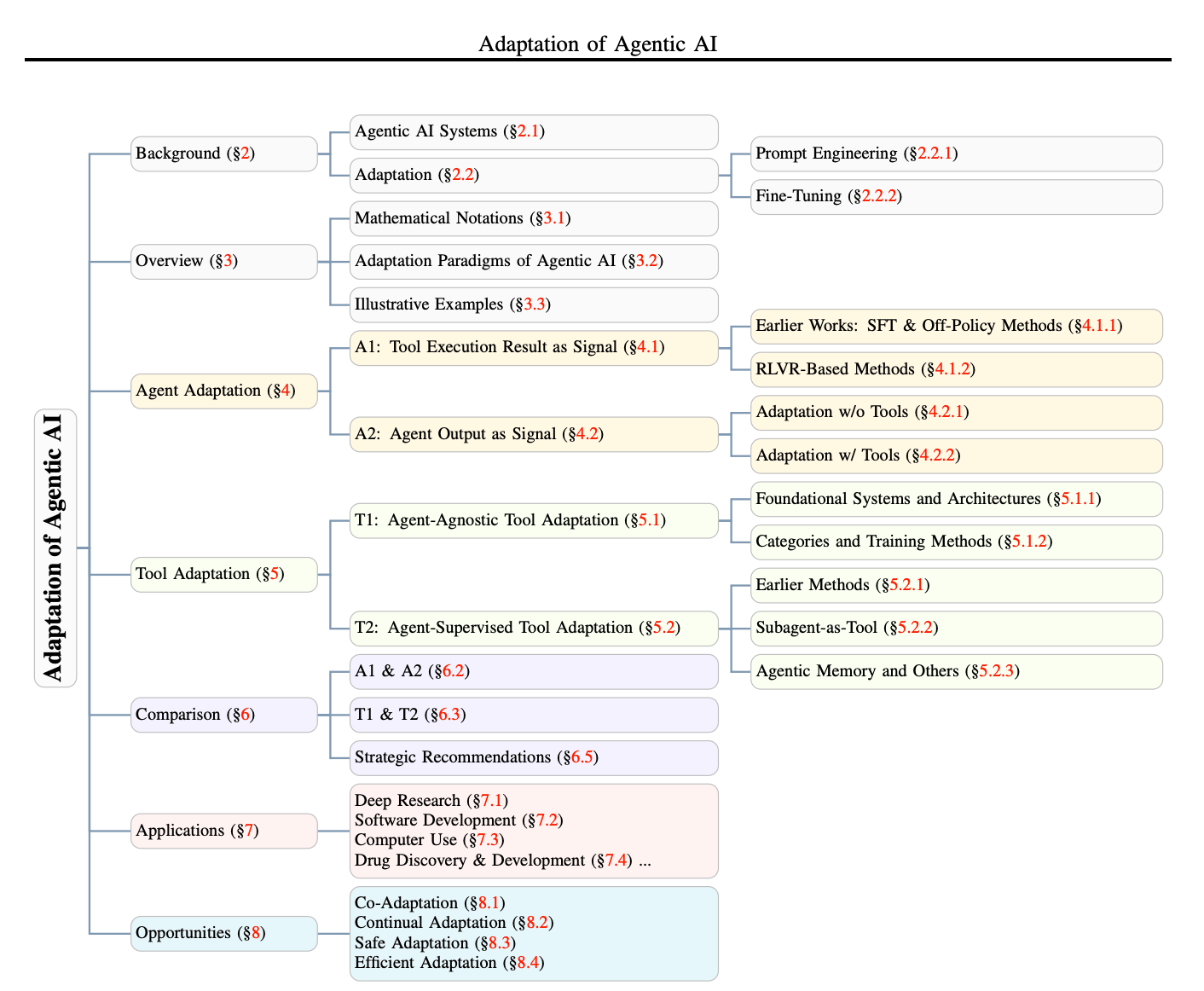

Conceptual foundation to highlight – Adaptation of Agentic AI

Research this week

(as always, 🌟 indicates papers we recommend paying attention to)

Reliability, evaluation, and verification

🌟 MMGR: Multi-Modal Generative Reasoning – Introduces a reasoning-aware benchmark that tests whether image and video generators obey physical, logical, spatial, and temporal constraints rather than merely looking good →read the paper

Robust and Calibrated Detection of Authentic Multimedia Content – Proposes a calibrated resynthesis-based detector that targets high-precision authenticity verification and improves robustness against compute-limited adaptive attacks →read the paper

🌟 Are We on the Right Way to Assessing LLM-as-a-Judge? (Huazhong, Maryland) – Introduces an annotation-free evaluation suite for LLM judges that measures preference stability and transitivity, surfacing reliability failure modes in judging →read the paper
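Transitivity, one of the properties this suite measures, is easy to illustrate. If a judge prefers A over B and B over C, it should not also prefer C over A. The Python sketch below counts such cyclic triples in a set of pairwise verdicts. The dictionary format and function names are hypothetical and do not come from the paper, whose actual metrics may be defined differently.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical format: prefs[(a, b)] is True if the judge preferred a over b.
# Exactly one orientation of every pair is assumed to be present.
Prefs = Dict[Tuple[str, str], bool]


def prefers(prefs: Prefs, a: str, b: str) -> bool:
    """Look up the verdict for (a, b), flipping the stored (b, a) verdict if needed."""
    if (a, b) in prefs:
        return prefs[(a, b)]
    return not prefs[(b, a)]


def intransitive_triples(items: List[str], prefs: Prefs) -> List[Tuple[str, str, str]]:
    """Return triples whose verdicts form a cycle (a > b > c > a, or the reverse)."""
    cycles = []
    for a, b, c in combinations(items, 3):
        ab, bc, ca = prefers(prefs, a, b), prefers(prefs, b, c), prefers(prefs, c, a)
        # Three pairwise verdicts are cyclic iff they all point the same way around.
        if ab == bc == ca:
            cycles.append((a, b, c))
    return cycles


verdicts = {("A", "B"): True, ("B", "C"): True, ("A", "C"): False}  # A > B > C > A
print(intransitive_triples(["A", "B", "C"], verdicts))
```

The fraction of cyclic triples among all triples is one simple way to turn this check into a score.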

When Reasoning Meets Its Laws – Formalizes “laws of reasoning” and benchmarks monotonicity and compositionality of reasoning behavior, then finetunes models to better obey these properties →read the paper

Probing Scientific General Intelligence of LLMs with Scientist-Aligned Workflows – Defines and operationalizes scientific general intelligence through workflow-centric tasks and a large benchmark, then applies test-time RL to boost novelty under retrieval →read the paper

Reinforcement learning recipes for reasoning and exploration

🌟 Nemotron-Cascade: Scaling Cascaded Reinforcement Learning for General-Purpose Reasoning Models (NVIDIA) – Proposes domain-wise cascaded RL stages that reduce cross-domain training friction while improving broad reasoning performance and mode switching →read the paper

JustRL: Scaling a 1.5B LLM with a Simple RL Recipe – Demonstrates that a single-stage, fixed-hyperparameter RL setup can scale cleanly and compete with more complex pipelines, with ablations showing some “standard tricks” can backfire →read the paper

Differentiable Evolutionary Reinforcement Learning – Introduces a bilevel framework that learns reward functions via differentiable meta-optimization over structured reward primitives, aiming to automate reward design →read the paper

Can LLMs Guide Their Own Exploration? Gradient-Guided Reinforcement Learning for LLM Reasoning – Proposes exploration rewards based on novelty in the model’s own gradient update directions, steering sampling toward trajectories that meaningfully diversify learning signals →read the paper

Exploration v.s. Exploitation: Rethinking RLVR through Clipping, Entropy, and Spurious Reward – Analyzes why spurious rewards and entropy reduction can improve RLVR training, attributing gains to mechanisms like clipping bias and reward misalignment dynamics →read the paper

Zoom-Zero: Reinforced Coarse-to-Fine Video Understanding via Temporal Zoom-in – Introduces a coarse-to-fine RL framework that rewards accurate temporal localization and assigns credit to the tokens responsible for grounding versus answering →read the paper

Efficient inference and context mechanics

🌟 Fast and Accurate Causal Parallel Decoding using Jacobi Forcing – Introduces a progressive distillation approach that converts autoregressive models into efficient parallel decoders while preserving causal priors and enabling strong KV-cache compatibility →read the paper
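Jacobi Forcing builds on Jacobi decoding, which treats greedy generation of an n-token block as a fixed-point problem. You guess all n tokens, then update every position in parallel from the current guesses until nothing changes. The Python sketch below shows plain Jacobi decoding only, not the paper's distillation procedure. A hypothetical deterministic `next_token` function stands in for a model's greedy argmax. The loop converges to exactly the greedy autoregressive output in at most n iterations.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for a model's greedy next-token choice given a context.
NextToken = Callable[[Sequence[int]], int]


def greedy_decode(next_token: NextToken, prefix: List[int], n: int) -> List[int]:
    """Baseline autoregressive decoding: one token per sequential step."""
    out = list(prefix)
    for _ in range(n):
        out.append(next_token(out))
    return out[len(prefix):]


def jacobi_decode(next_token: NextToken, prefix: List[int], n: int,
                  pad: int = 0) -> Tuple[List[int], int]:
    """Jacobi iteration over an n-token block; returns (tokens, iterations used)."""
    guess = [pad] * n
    for it in range(1, n + 1):
        # Every position is recomputed from the prefix plus the previous guesses
        # for earlier positions; in a real model this is one batched forward pass.
        new = [next_token(prefix + guess[:i]) for i in range(n)]
        if new == guess:  # fixed point reached, which equals the greedy output
            return guess, it
        guess = new
    # After n updates, position i has been correct since iteration i + 1.
    return guess, n


toy_model: NextToken = lambda ctx: (sum(ctx) * 31 + len(ctx)) % 50
print(jacobi_decode(toy_model, [1, 2, 3], 6), greedy_decode(toy_model, [1, 2, 3], 6))
```

Each iteration corresponds to one parallel forward pass. Any speedup therefore comes from reaching the fixed point in fewer than n iterations, and making that happen more often is what training approaches like the one summarized above aim for.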

Sliding Window Attention Adaptation – Proposes practical adaptation recipes that recover long-context quality when switching full-attention pretrained models to sliding-window attention for large inference speedups →read the paper
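In sliding-window attention, each query position attends only to the most recent W keys (itself included) rather than the whole prefix. This is what bounds per-token attention cost and the size of the KV cache. The Python sketch below builds the boolean causal mask for a window size W. It illustrates the attention pattern only and is not the adaptation recipe proposed in the paper.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[q][k] is True if query q may attend to key k.

    Causal (k <= q) and limited to the last `window` positions (q - k < window).
    """
    return [[0 <= q - k < window for k in range(seq_len)] for q in range(seq_len)]


def full_causal_mask(seq_len: int) -> List[List[bool]]:
    """Ordinary causal attention is the special case window == seq_len."""
    return sliding_window_mask(seq_len, seq_len)


# Visualize a 6-token sequence with a window of 3 ("1" = attend, "." = masked).
for row in sliding_window_mask(6, 3):
    print("".join("1" if allowed else "." for allowed in row))
```

Switching a model pretrained with the full causal mask to the banded pattern changes what every layer can see. That mismatch is the gap adaptation recipes try to close.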

🌟 RePo: Language Models with Context Re-Positioning (Sakana AI) – Proposes learned, differentiable token positioning that reflects contextual dependencies, improving robustness to noisy and structured contexts and supporting longer inputs →read the paper

HyperVL: An Efficient and Dynamic Multimodal Large Language Model for Edge Devices – Introduces an on-device multimodal design that reduces visual encoding cost via adaptive resolution prediction and multi-scale consistency, improving latency and power on mobile hardware →read the paper

Memory and agent foundations

🌟 Memory in the Age of AI Agents – Maps the agent-memory landscape with a clearer taxonomy of forms, functions, and dynamics, and consolidates benchmarks and frameworks to reduce terminological chaos →read the paper

Embodied and egocentric physical intelligence

🌟 PhysBrain: Human Egocentric Data as a Bridge from Vision Language Models to Physical Intelligence – Proposes a pipeline that converts human first-person video into schema-driven, grounded supervision for embodiment and transfers it to robot planning and control →read the paper

Architecture experiments and theory-driven design

🌟 Physics of Language Models: Part 4.1, Architecture Design and the Magic of Canon Layers (FAIR) – Introduces canon layers and a controlled synthetic pretraining methodology to isolate architectural effects and improve information flow and reasoning-related capabilities →read the paper

That’s all for today. Thank you for reading! Please send this newsletter to colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.